DATA ANALYSIS GUIDE: SPSS

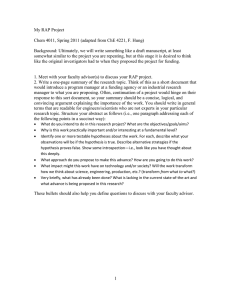

advertisement