A MULTI-AGENT BASED MEDICAL IMAGE MULTI-DISPLAY VISUALIZATION SYSTEM

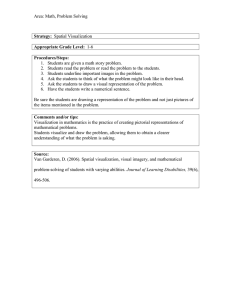

Filipe Marreiros, Victor Alves and Luís Nelas*
Informatics Department, School of Engineering – University of Minho, Braga, Portugal
f_marreiros@yahoo.com.br, valves@di.uminho.pt
*Radiconsult, Braga, Portugal
luis.nelas@radiconsult.com

Abstract – The evolution of the equipment used in medical imaging practice, from 3-tesla Magnetic Resonance (MR) units and 64-slice Computed Tomography (CT) systems to the latest generation of hybrid Positron Emission Tomography (PET)/CT technologies, is fast producing a volume of images that threatens to overload the capacity of the interpreting radiologists. At the same time, multi-agent systems are being used in a wide variety of research and application fields. Our work concerns the development of a multi-agent system that supports a multi-display medical image diagnostic system. The multi-agent architecture makes the system scalable, i.e. the number of displays can grow according to the user’s available resources. This scalability has two immediate benefits: inexpensive hardware can be used to build a cluster system and, more importantly for physicians, the visualization area increases, allowing easier and faster navigation. In this way, a larger display area can help a physician analyse and interpret more information in less time.

Keywords: multi-agent systems, multi-display systems, medical image viewers, computer aided diagnostics.

I. INTRODUCTION

There is some innovation in setting the fundamentals under which multi-agent problem solving can benefit from combining agents and humans.
Indeed, the specification, development and analysis of constructive multi-agent systems received a push from the human-in-the-loop approach, a consequence of a more appropriate agent-oriented approach to problem solving in areas such as medicine, where more healthcare providers invest in computerized medical records, more clinical data is made accessible, and more clinical insights become available.

A. Motivation and objectives

The evolution of the equipment used in medical imaging practice, from 3-tesla Magnetic Resonance (MR) units and 64-slice Computed Tomography (CT) systems to the latest generation of hybrid Positron Emission Tomography (PET)/CT technologies, is fast producing a volume of images that threatens to overload the capacity of the interpreting radiologists. The current workflow reading approaches are becoming inadequate for reviewing the 300 to 500 images of a routine CT of the chest, abdomen, or pelvis, and even less so for the 1500 to 2000 images of a CT angiography or functional MR study [1]. However, image visualization programs continue to present the same scheme for analysing the images, basically imitating the manual process of film viewing, as can be found in medical viewers like eFilm Workstation, DicomWorks, Rubo Medical and ImageJ, to name just a few [2,3,4,5].

These observations motivated us to overcome these limitations by increasing the display area, allowing faster navigation and analysis of the medical images by the user (e.g. radiologist, referring physician). To achieve this we created a multi-display system that allows the visualization of selected medical images across the displays in use. To support the overall multi-display system, a multi-agent system was developed that allows scalability and gives us control and interoperability of the system components. Another goal, which was also achieved, was to build an inexpensive system.

B. Background

The focus of the work carried out in the imagiology field has been on image processing rather than image presentation, a fact easily noticed by analysing some of the available medical image viewers. Among the few published works on medical image presentation, we point out the work developed at Simon Fraser University [6,7,8,9]. The main goal of that work is to study the best ways to present MRI images on a simple computer screen, emulating the traditional viewboxes and using several techniques to overcome the screen real estate problem [9]. This can be described as the problem of presenting information within the space available on a computer screen. Our approach was to overcome this limitation through a scalable multi-display system that can grow according to the needs and resources available, while still maintaining the possibility to control the hanging protocol (i.e. the way images are arranged in the viewing area).

1) Multi-agent Systems

Multi-agent Systems (MAS) may be seen as a new methodology in distributed problem solving, i.e. agent-based computing has been hailed as a significant breakthrough in distributed problem solving and/or a new revolution in software development and analysis [10,11,12]. Indeed, agents are the focus of intense interest in many sub-fields of Computer Science, being used in a wide variety of applications, ranging from small systems to large, open, complex and critical ones [13,14,15,16]. Agents are not only a very promising technology, but are emerging as a new way of thinking, a conceptual paradigm for analysing problems and for designing systems, for dealing with complexity, distribution and interactivity. Agent-based computing may even be seen as a new form of computing and intelligence. There are many definitions and classifications of agents [17]. Although there is no universally accepted definition of agent, in this work such an entity is to be understood as a computing artefact, be it in hardware or software.
In terms of agent interaction we use a shared memory (blackboard) for message passing.

The majority of the multi-agent systems found in this field are normally used for computer-aided diagnostics, data search filters and knowledge management. Neural network based agents have been applied with success to computer-aided diagnosis [18]. Systems with these features can greatly help physicians, aiding them in making decisions. Agents are also used as data search filters. Together they can search several databases, using fixed or flexible search criteria based on the knowledge they possess of the user, the user’s preferences and goals. Knowledge agents can predict the user’s intentions or preferences. They can be used to configure an interface according to the user’s profile, which is based on the user’s preferences and on continuous observation of the user’s interaction with the interface. The agents learn the user’s work pattern and automatically set up the interface.

2) Multi-display Systems

Multi-display systems have been used in a wide variety of applications, being mainly found within the Virtual Reality (VR) field. They can be used to create large display systems, for example Walls or Caves. Basically there are two ways to build a multi-display system. The first (and simplest) makes use of the operating system and the graphics hardware. Current operating systems support multiple displays; to use this feature we can either use a graphics card with multiple outputs or use several graphics cards. This allows the work area (desktop) to grow according to the number of monitors used. The second approach, most common in the VR field, is to use PC clusters where each PC is connected to a monitor. For the system to work there has to be a communication medium that allows the flow of data through the entire system. At a low level, in the majority of cases, sockets and the TCP/IP or UDP/IP protocols are used.
In the VR field, software frameworks handle these communications at a higher level of abstraction. An example is VR Juggler [19] which, besides handling the communication, can be connected to scene graphs and tracking systems, making it easier to create a complex VR system.

C. Structure

The remainder of this paper is organised as follows. In the next section we introduce the multi-agent system architecture, presenting the system’s agents and how they cooperate. The factors and choices that had to be considered during the implementation of the multi-agent system are to be found in Section III. In Section IV we present an overview of the multi-display system, its basic components and structure. Conclusions can be found in Section V.

II. MULTI-AGENT SYSTEM ARCHITECTURE

The system architecture is depicted in figure 1, where the interactions between the agents and the environment can be visualised. These agents, their interactions, goals and tasks are addressed below.

Figure 1 - Multi-agent system architecture

A. The system agents

We use three distinct agents: the Data Prepare Agent (dpa), the Control Station Agent (csa) and the Visualization Terminal Agent (vta).

1) Environment

The environment is formed by the new studies in the Radiology Information System (RIS) work list and a list of parsed studies. A file that contains the location of all these studies is maintained on the local hard drive. The environment also manages the Internet Protocol (IP) location of the agents and the blackboard. On the terminal machines, a configuration file indicating the positioning (column-row) is used. The blackboard is implemented by a process that runs in main memory and is responsible for maintaining a list of properties of the Active Visualization Terminals (i.e. it maintains and updates their IPs, the terminal position and the screen resolution).
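As an illustration of the blackboard just described, the following minimal Python sketch keeps a list of properties for the active visualization terminals. The class and field names (`Blackboard`, `TerminalStatus`, `timeout_s`) and the idea of dropping terminals that stop reporting are assumptions made for this example; the paper does not say how the original process decides that a terminal is active.

```python
from dataclasses import dataclass
import time


@dataclass(frozen=True)
class TerminalStatus:
    """Properties a terminal reports (hypothetical field names)."""
    ip: str
    column: int
    row: int
    width: int   # screen resolution in pixels
    height: int


class Blackboard:
    """In-memory list of active terminals, keyed by IP address."""

    def __init__(self, timeout_s=5.0, clock=time.monotonic):
        # timeout_s is an assumed policy: a terminal that has not
        # reported within this many seconds is treated as inactive.
        self._timeout_s = timeout_s
        self._clock = clock
        self._entries = {}  # ip -> (status, time of last report)

    def post(self, status):
        """Called whenever a terminal agent reports its status."""
        self._entries[status.ip] = (status, self._clock())

    def active_terminals(self):
        """Return the statuses of terminals that reported recently."""
        now = self._clock()
        return [status for status, seen in self._entries.values()
                if now - seen <= self._timeout_s]
```

A control station would call `active_terminals()` to learn where each terminal is and how to reach it; injecting the clock keeps the sketch easy to test.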
This way the Control Station Agent can access this information and communicate directly with the Visualization Terminal Agents.

2) Data Prepare Agent

When a new study is available for analysis, we use this agent to parse the corresponding DICOM (Digital Imaging and Communications in Medicine) file [20], dividing each DICOM file into three files. The first contains the DICOM tags, the second the raw image data values and the third a set of attributes of the image series (i.e. number of slices, image dimensions, etc.). When this process is finished, the agent edits a file containing the references to all parsed studies, i.e. the system’s work list.

3) Control Station Agent

The DICOM standard defines a hierarchical tree structure with several levels (figure 2). As one can observe, the lowest level is the Image level, where we find the images obtained from the medical equipment (i.e. modalities). The next level is the Series level, where the images are grouped. In most modalities the images in a series are related; in CT, for example, they normally correspond to an acquisition along the patient’s body. The Study level groups these series. Finally, the Patient level contains all the studies of a particular patient.

Users can interact with the interface to load a maximum of two series from the studies in the work list. These series can contain a number of images or slices that are displayed in the Control Station Interface and in the Visualization Terminals Interface. Navigation through the series is done with sliders, and the layout can be set by the user. All these changes are reflected in the Visualization Terminals Interface. To accomplish this, messages are sent to the environment with the image data, the image attributes (e.g. window level and window width), the layout and the navigation information.
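The window level and window width attributes mentioned above control how stored pixel values are mapped to displayed grey levels. A minimal Python sketch of the common simplified linear mapping follows. The function name `apply_window` and the 8-bit output range are assumptions for illustration, and the DICOM standard defines a slightly different exact formula, so this should not be read as the system's actual pixel processing.

```python
def apply_window(value, level, width, out_max=255):
    """Map a stored pixel value to a display grey level.

    Simplified linear windowing: values at or below level - width/2
    become black, values at or above level + width/2 become white,
    and values in between are scaled linearly.
    """
    if width <= 0:
        raise ValueError("window width must be positive")
    low = level - width / 2.0
    high = level + width / 2.0
    if value <= low:
        return 0
    if value >= high:
        return out_max
    return round((value - low) / width * out_max)
```

For example, with a window level of 40 and a window width of 400, a pixel value of 40 lands in the middle of the grey scale, while values of -160 or less are shown as black.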
The Control Station Agent continuously collects messages sent by the Visualization Terminal Agents with status information (e.g. IP, monitor position, and screen resolution of the visualization terminal). With this information the control station can send the right messages to each visualization terminal, but it still has to continually track the position index of the monitors. Position indexes are assigned from left to right, top to bottom. Consider the configuration presented in figure 3, where we have three monitors and their corresponding positions and position indexes. If new monitors are detected, the position indexes have to be recomputed to correct the system status.

Figure 2 - DICOM Hierarchical Structure

Figure 3 - Possible monitor configuration and corresponding position indexes

The Control Station Agent is our most complex agent. It is responsible for finding the studies in the work list, sending information to the Visualization Terminal Agents and providing an interface with the user through its Control Station Interface. To know which studies are present in the work list, this agent has to continually sense the environment, looking for work list study references. This agent is also responsible for garbage collection, in which studies are removed when the radiologist finishes the reading.

4) Visualization Terminal Agent

This is the agent that actually displays the images on the monitors. First, however, it has to continually communicate its status to the blackboard (e.g. IP address, monitor position and screen dimensions), so that the Control Station Agent can read these messages, know the overall system status and send information to all the Visualization Terminal Agents. As already mentioned, the Control Station Agent sends information about the study (e.g. the series layout, the image data of the entire series, the data properties used to compute the image pixel colours, and the navigation position of the two series that the system can handle). The most important piece of information, however, is the monitor position index. With this variable the Visualization Terminal Agents can compute the correct placement of the images, according to the Control Station Agent.

III. IMPLEMENTATION

Special care was taken with the technological choices to assure the successful implementation of the system’s functionalities.

A. Programming Languages

The programming languages of our choice were Java and ANSI C/C++. The main reason for this choice is that the selected Application Program Interfaces (APIs) we are using were also developed in these languages.

B. DICOM Parsing

The ability to interpret the meta-data of medical images is an essential feature of any medical image viewer. Available APIs that permit DICOM parsing were tested. The two most outstanding products were DCMTK – DICOM Toolkit [21] and the PixelMed Java DICOM Toolkit [22]. Although both APIs were freeware and presented similar functionalities, our choice was PixelMed due to its better supporting documentation.

C. Graphic APIs

The low-level graphics API OpenGL [23] was mandatory, since it is considered the standard for graphic visualization. At a higher level we find the Graphic User Interface (GUI) toolkits, which consist of a predefined set of components also known as Widgets. Some of these Widgets have objects/components where OpenGL contexts can be integrated. Our search for toolkits with this feature gave the following selection:

- FLTK (Fast Light Toolkit) [24];
- FOX [25];
- Trolltech – Qt [26];
- wxWidgets [27];
- GTK+ [28].

From this selection, FLTK was the first to be excluded, since its full screen mode did not hide the window’s command bar. The next to be excluded was GTK+, as we found some implementation problems when trying to use it under Windows.
FOX was next, due to its poor documentation and inferior functionality. Although wxWidgets and Trolltech Qt have similar functionality and documentation, we preferred wxWidgets since it is freeware under Windows.

D. Communication APIs

Communications play a fundamental role in our system. Agents communicate with the environment (e.g. medical equipment, Radiological Information System, Hospital Information System) and with each other through the Blackboard. Fortunately, wxWidgets has an integrated socket communication API. This was a major benefit, since we did not have to deal directly with the low-level communication API of each operating system; wxWidgets did the job.

IV. MULTI-DISPLAY SYSTEM OVERVIEW

An overview of our multi-display system and its basic components is presented in figure 4. The following section contains a summary of the features of the Control Station and the Visualization Terminals.

Figure 4 - Multi-display system overview

A. Control Station Interface

The Control Station Interface allows the user to interact with the system. It is divided into two distinct frames. The left frame holds the work list tree with the studies, where the user can select two distinct series to be loaded; the images are then displayed in the right frame and in the corresponding visualization terminals (figure 5).

Figure 5 - Control Station Interface

The right frame is used to process and analyse selected images and to track the virtual viewing workspace. The information presented in it as thumbnails also appears in the Visualization Terminals as full images. Sliders are available for easy navigation through the images in the series. The layout of the virtual viewing workspace can be configured by the radiologist using the distribution tab. In figure 5 we have a configuration of four visualization terminals, three in a top row and one at the bottom. Two series are being displayed horizontally and each series has two images being displayed vertically.
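The four-terminal configuration just described can be used to illustrate how position indexes, assigned left to right and top to bottom as explained in Section II, might be computed. The Python sketch below sorts terminals by their (row, column) position. The function name `assign_position_indexes`, the zero-based numbering and the example IP addresses are assumptions, not details taken from the implementation.

```python
def assign_position_indexes(terminals):
    """Assign indexes left to right, top to bottom.

    terminals maps a terminal IP to its (column, row) position, as it
    might be read from each terminal's configuration file.
    Returns a dict mapping each IP to its position index.
    """
    ordered = sorted(
        terminals,
        key=lambda ip: (terminals[ip][1], terminals[ip][0]),  # row first, then column
    )
    return {ip: index for index, ip in enumerate(ordered)}


# Three terminals in the top row and one at the bottom (hypothetical IPs).
layout = {
    "192.168.0.13": (2, 0),
    "192.168.0.11": (0, 0),
    "192.168.0.14": (0, 1),
    "192.168.0.12": (1, 0),
}
indexes = assign_position_indexes(layout)
```

When a new terminal is detected, calling the function again on the updated mapping recomputes every index, which matches the recomputation step the paper mentions.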
A navigation paradigm was introduced to access the data in this virtual viewing workspace. By double clicking a virtual terminal, only the corresponding selection will be displayed. Going one level above, by double clicking an image, only the selected image will be displayed and the toolbar tools can be used for image manipulation. Currently all basic tools are under assessment (i.e. image rotation, horizontal and vertical flipping, window width/level, zoom, pan) and some extra tools are still under development. To return to the lower level, the radiologist just has to press control and click.

In the same figure, one of the Visualization Terminals is bigger than the others. This is due to its higher screen resolution. The proportions and positioning of the Visualization Terminals are maintained, helping users to identify the real monitors more clearly. Ideally, the real monitors are placed according to the virtual ones present in the Control Station Interface.

B. Visualization Terminals Interface

As already stated, the information contained in the virtual viewing workspace is the same as in the real visualization terminal. In figure 6 we present a screenshot of the first Visualization Terminal Interface when its status was the one presented in figure 5.

Figure 6 - Visualization Terminal Interface

The increase in image area was one of our main goals, allowing a faster and easier analysis of images by the radiologists. In figure 7 we present a picture of the system with four identical monitors and a notebook running the Data Prepare Agent and the Control Station Agent.

Figure 7 - Multi-display system

V. CONCLUSION

We have presented a multi-agent based multi-display visualization system and shown how we were able to use it to support the medical image visualization process. Due to its scalability, we can easily assemble a system that grows according to the user’s needs. It can be built with conventional hardware (i.e. no special graphics cards or other specific hardware is needed).

The visualization system (i.e. the viewer) is of the greatest importance for medical imaging experts. The navigation facilities and wider work area of this system aid them in the image viewing process, thus improving the diagnostic process. Currently the whole system is under assessment, and we hope in the near future to have trial versions running in imaging facilities, enabling valuable feedback from the radiologists.

Image processing tools are continually being developed to aid physicians in analysing regions of interest. Image navigation and enhancements will continue to be improved, including better support for multimodality fusion such as PET/CT and functional MR. The next major phases of our development will focus on agents that implement decision support tools, such as computer-assisted diagnosis and intelligent hanging protocols, all of which can be easily integrated in our system due to its multi-agent based development.

Although our work was aimed at the medical field, it can easily be reformulated for other areas. In an advertising context, for example, we can imagine a store with a network of computers where we want to set the advertising images on the monitors dynamically using a control station. With a system similar to the one presented here, this could easily be accomplished.

ACKNOWLEDGEMENTS

We are indebted to CIT – Centro de Imagiologia da Trindade, and to HGSA – Hospital Geral de Santo António, for their help in terms of experts, technicians and machine time.

REFERENCES

[1] Radiological Society of North America http://www.rsna.com
[2] MERGE eMed http://www.merge.com
[3] DicomWorks http://dicom.online.fr
[4] Rubo Medical Imaging http://www.rubomedical.com
[5] ImageJ - Image Processing and Analysis in Java http://rsb.info.nih.gov/ij
[6] van der Heyden, J. E., “Magnetic resonance image viewing and the Screen real estate problem”, Msc. Thesis, Simon Fraser University, 1998.
[7] van der Heyden, J. E., Carpendale, M.S.T., Inkpen, K., Atkins, M.S., “Visual Representation of Magnetic Resonance Images”, Proceedings Vis98, 1998, pp 423-426.
[8] van der Heyden, J. E., Atkins, M.S., Inkpen K., Carpendale, M.S.T., “MR image viewing and the screen real estate problem”, Proceedings of the SPIE-Medical Imaging 1999, 3658: 370-381, Feb. 1999.
[9] van der Heyden, J. E., Atkins, M.S., Inkpen K., Carpendale, M.S.T., “A User Centered Task Analysis of Interface Requirements for MRI Viewing”, Graphics Interface 1999, pp 18-26.
[10] Neves, J., Machado, J., Analide, C., Novais, P., and Abelha, A. “Extended Logic Programming applied to the specification of Multi-agent Systems and their Computing Environment”, in Proceedings of the ICIPS’97 (Special Session on Intelligent Network Agent Systems), Beijing, China, 1997.
[11] Faratin, P., Sierra C. and N. Jennings “Negotiation Decision Functions for Autonomous Agents” in Int. Journal of Robotics and Autonomous Systems, 24(34):159-182, 1997.
[12] Wooldridge, M., “Introduction to MultiAgent Systems”, 1st edition, John Wiley & Sons, Chichester,
[13] Gruber, T.R. “The role of common ontology in achieving sharable, reusable knowledge bases”, in Proceedings of the Second International Conference (KR’91), J. Allen, R. Filkes, and E. Sandewall (eds.), pages 601-602 Cambridge, Massachusetts, USA, 1991
[14] Abelha, A., PhD Thesis, “Multi-agent systems as Working Cooperative Entities in Health Care Units” (In Portuguese), Departamento de Informática, Universidade do Minho, Braga, Portugal, 2004.
[15] Alves V., Neves J., Maia M., Nelas L., “A Computational Environment for Medical Diagnosis Support Systems”. ISMDA2001, Madrid, Spain, 2001.
[16] Alves V., Neves J., Maia M., Nelas L., Marreiros F., “Web-based Medical Teaching using a Multi-Agent System” in the twenty-fifth SGAI International Conference on Innovative Techniques and Applications of Artificial Intelligence – AI2005, Cambridge, UK, December, 2005.
[17] Franklin, Stan, Graesser, Art, “Is it an Agent, or just a Program?: A Taxonomy for Autonomous Agents”, Proceedings of the Third International Workshop on Agent Theories, Architectures, and Languages, Springer-Verlag, 1996
[18] Alves V., “Resolução de Problemas em Ambientes Distribuídos: Uma Contribuição nas Áreas da Inteligência Artificial e da Saúde” PhD Thesis, Braga, Portugal, 2002.
[19] VR Juggler – Open Source Virtual Reality Tools http://www.vrjuggler.org
[20] DICOM Standard http://medical.nema.org/dicom/2004.html
[21] DCMTK - DICOM Toolkit http://dicom.offis.de/dcmtk.php.en
[22] PixelMed Java DICOM Toolkit http://www.pixelmed.com
[23] OpenGL http://www.opengl.org
[24] FLTK - Fast Light Toolkit http://www.fltk.org
[25] FOX Toolkit http://www.fox-toolkit.org
[26] TROLLTECH - Qt http://www.trolltech.com/products/qt/index.html
[27] wxWidgets http://www.wxwidgets.org
[28] GTK+ http://www.gtk.org