06/05/2008 Jae Hyun Kim Chapter 1 Probability Theory (i) : One Random Variable

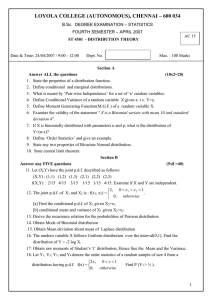

Bioinformatics Tea Seminar: Statistical Methods in Bioinformatics

Content

Discrete Random Variable

Discrete Probability Distributions

Probability Generating Functions

Continuous Random Variable

Probability Density Functions

Moment Generating Functions

jaekim@ku.edu

Discrete Random Variable

A numerical quantity that, in an experiment involving some degree of randomness, takes one value from a discrete set of possible values. The experiment's possible outcomes form the sample space, and each value of the variable corresponds to an event.

Sample Space

Set of all outcomes of an experiment (or observation)

For Example,

Flip a coin: {H, T}

Toss a die: {1, 2, 3, 4, 5, 6}

Sum of two dice: {2, 3, …, 12}

Event

Any subset of the outcomes in the sample space

Discrete Probability Distributions

The probability distribution

The set of values that a random variable can take, together with their associated probabilities

Example,

Y = total number of heads when a coin is flipped twice

Probability distribution function: $P_Y(y) = \mathrm{Prob}(Y = y)$

Cumulative distribution function: $F_Y(y) = \mathrm{Prob}(Y \le y)$
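
As a small illustration of the coin example, the sketch below enumerates the four outcomes of two flips and tabulates the probability distribution and the cumulative distribution of Y. It assumes a fair coin, which the slide does not state; the names `pmf` and `cdf` are illustrative.

```python
from fractions import Fraction
from itertools import product

# Assumption: a fair coin, so each of the four outcomes has probability 1/4.
outcomes = list(product("HT", repeat=2))

# Probability distribution: Prob(Y = y), where Y = total number of heads.
pmf = {}
for outcome in outcomes:
    y = outcome.count("H")
    pmf[y] = pmf.get(y, Fraction(0)) + Fraction(1, len(outcomes))

# Cumulative distribution: Prob(Y <= y), a running sum of the probabilities.
cdf = {}
running = Fraction(0)
for y in sorted(pmf):
    running += pmf[y]
    cdf[y] = running

for y in sorted(pmf):
    print(y, pmf[y], cdf[y])
```

Exact fractions are used so that the probabilities sum to exactly one.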

One Bernoulli Trial

A Bernoulli Trial

Single trial with two possible outcomes

“success” or “failure”

Probability of success = p

The Binomial Distribution

The Binomial Random Variable

The number of successes in a fixed number n of independent Bernoulli trials, each with the same probability of success

Requirements

Each trial must result in one of two possible outcomes

The various trials must be independent

The probability of success must be the same on all trials

The number n of trials must be fixed in advance
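
When the four requirements hold, the binomial probabilities follow the standard formula Prob(Y = y) = C(n, y) p^y (1 − p)^(n − y). A minimal sketch, with illustrative values of n and p and a hypothetical helper name `binomial_pmf`:

```python
from math import comb

def binomial_pmf(y: int, n: int, p: float) -> float:
    """Prob(Y = y) for the number of successes Y in n independent trials."""
    return comb(n, y) * p**y * (1 - p) ** (n - y)

n, p = 10, 0.3  # illustrative values, not taken from the slides
probs = [binomial_pmf(y, n, p) for y in range(n + 1)]

print(sum(probs))        # the probabilities sum to 1 up to rounding
cdf_at_3 = sum(probs[:4])  # Prob(Y <= 3), obtained by direct summation
print(cdf_at_3)
```

Setting n = 1 reduces this to a single Bernoulli trial, where `binomial_pmf(1, 1, p)` is simply p.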

Bernoulli Trial and Binomial Distribution

Comments

A single Bernoulli trial is the special case (n = 1) of the binomial distribution

Probability p is often an unknown parameter

There is no simple formula for the cumulative distribution function of the binomial distribution

There is no unique “binomial distribution,” but rather a family of distributions indexed by n and p

The Hypergeometric Distribution

Hypergeometric Distribution

N objects ( n red, N-n white )

m objects are taken at random, without replacement

Y = number of red objects taken

Biological example

N lab mice ( n male, N-n female )

m Mutations

The number Y of mutant males has a hypergeometric distribution
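
The sampling scheme above leads to the standard hypergeometric formula Prob(Y = y) = C(n, y) C(N − n, m − y) / C(N, m). The sketch below evaluates it for illustrative numbers (20 mice, 8 male, 5 drawn); the function name and the numbers are assumptions made for the example.

```python
from math import comb

def hypergeom_pmf(y: int, N: int, n: int, m: int) -> float:
    """Prob(Y = y): y red objects among m drawn without replacement
    from N objects, of which n are red."""
    if y < 0 or y > n or m - y < 0 or m - y > N - n:
        return 0.0  # impossible counts have probability zero
    return comb(n, y) * comb(N - n, m - y) / comb(N, m)

N, n, m = 20, 8, 5  # illustrative values
probs = [hypergeom_pmf(y, N, n, m) for y in range(m + 1)]
mean = sum(y * q for y, q in enumerate(probs))

print(probs)
print(mean)  # agrees with the known mean m * n / N up to rounding
```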

The Uniform/Geometric Distribution

The Uniform Distribution

Every value in the range has the same probability

The Geometric Distribution

Y = number of Bernoulli trials before, but not including, the first failure

Cumulative distribution function
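
Under the definition above, every trial counted by Y is a success, so Prob(Y = y) = p^y (1 − p) for y = 0, 1, 2, …, and summing the series gives the cumulative distribution Prob(Y ≤ y) = 1 − p^(y+1). The sketch below checks the closed form against the partial sums; the value of p is illustrative.

```python
def geometric_pmf(y: int, p: float) -> float:
    """Prob(Y = y): y successes followed by the first failure."""
    return p**y * (1 - p)

def geometric_cdf(y: int, p: float) -> float:
    """Prob(Y <= y) in closed form."""
    return 1 - p ** (y + 1)

p = 0.6  # illustrative probability of success
for y in range(5):
    partial_sum = sum(geometric_pmf(k, p) for k in range(y + 1))
    print(y, partial_sum, geometric_cdf(y, p))
```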

The Poisson Distribution

Counts events that occur randomly in time or space

For example,

The number of phone calls arriving in a fixed time interval

Approximation of Binomial Distribution

When

n is large

p is small

np is moderate

$\text{Binomial}(n, p, x) \approx \text{Poisson}(\lambda, x)$, where $\lambda = np$
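
A quick numerical comparison illustrates the approximation. The sketch below uses the standard Poisson formula Prob(X = x) = e^(−λ) λ^x / x! and illustrative values n = 1000, p = 0.003 (so λ = 3); the helper names are hypothetical.

```python
from math import comb, exp, factorial

def binomial_pmf(x: int, n: int, p: float) -> float:
    return comb(n, x) * p**x * (1 - p) ** (n - x)

def poisson_pmf(x: int, lam: float) -> float:
    return exp(-lam) * lam**x / factorial(x)

n, p = 1000, 0.003  # large n, small p, moderate np (illustrative values)
lam = n * p

# Largest pointwise gap between the two distributions over x = 0..30.
max_diff = max(abs(binomial_pmf(x, n, p) - poisson_pmf(x, lam)) for x in range(31))

for x in range(7):
    print(x, binomial_pmf(x, n, p), poisson_pmf(x, lam))
print(max_diff)
```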

Mean

Mean / Expected Value

Expected Value of g(y)

Example

Linearity Property

In general,

Variance

Definition
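
For a discrete random variable the standard definitions are E[Y] = Σ y P(y), E[g(Y)] = Σ g(y) P(y), and Var(Y) = E[(Y − μ)²] = E[Y²] − μ². The sketch below applies them to a fair six-sided die (an illustrative choice) and checks the linearity property E[a + bY] = a + b E[Y]; the helper `expect` is hypothetical.

```python
from fractions import Fraction

# Illustrative distribution: a fair six-sided die.
pmf = {y: Fraction(1, 6) for y in range(1, 7)}

def expect(g, pmf):
    """E[g(Y)] = sum over y of g(y) * Prob(Y = y)."""
    return sum(g(y) * prob for y, prob in pmf.items())

mean = expect(lambda y: y, pmf)
variance = expect(lambda y: (y - mean) ** 2, pmf)
second_moment = expect(lambda y: y * y, pmf)

# Linearity: E[a + bY] = a + b * E[Y], here with a = 2 and b = 3.
a, b = 2, 3
linear = expect(lambda y: a + b * y, pmf)

print(mean, variance, second_moment - mean**2, linear)
```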

Summary

General Moments

Moment

r-th moment of the probability distribution about zero: $E[Y^r]$

Mean : First moment (r = 1)

r-th moment about the mean: $E[(Y - \mu)^r]$

Variance : r = 2

Probability-Generating Function

PGF

Used to derive moments

Mean

Variance

If two random variables X and Y have identical probability-generating functions, they are identically distributed
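
With the standard definition P(t) = E[t^Y] = Σ Prob(Y = y) t^y, the moments follow from derivatives at t = 1: the mean is P′(1) and the variance is P″(1) + P′(1) − P′(1)². The sketch below treats the pgf as a polynomial whose coefficients are the probabilities; the example distribution (number of heads in two fair flips) and the function name are assumptions.

```python
from fractions import Fraction

def pgf_derivative_at_one(probs, order):
    """order-th derivative of P(t) = sum_y probs[y] * t**y, evaluated at t = 1.

    Differentiating t**y `order` times and setting t = 1 leaves the
    falling factorial y * (y - 1) * ... * (y - order + 1).
    """
    total = Fraction(0)
    for y, prob in enumerate(probs):
        falling = 1
        for k in range(order):
            falling *= y - k
        total += falling * prob
    return total

# Illustrative distribution: heads in two flips of a fair coin.
probs = [Fraction(1, 4), Fraction(1, 2), Fraction(1, 4)]

p1 = pgf_derivative_at_one(probs, 1)
p2 = pgf_derivative_at_one(probs, 2)
mean = p1
variance = p2 + p1 - p1**2

print(mean, variance)
```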

Continuous Random Variable

Probability density function f(x)

Probability

Cumulative Distribution Function

Mean and Variance

Mean

Variance

Mean value of the function g(X)

Chebyshev’s Inequality

Proof
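
In its usual form the inequality states that Prob(|X − μ| ≥ dσ) ≤ 1/d² for any d > 0. As a numerical sanity check, not a substitute for the proof, the sketch below compares exact tail probabilities of a Binomial(20, 0.5) distribution with the bound; the distribution and the values of d are illustrative.

```python
from math import comb, sqrt

n, p = 20, 0.5  # illustrative distribution
pmf = [comb(n, y) * p**y * (1 - p) ** (n - y) for y in range(n + 1)]
mu = n * p
sigma = sqrt(n * p * (1 - p))

results = []
for d in (1.5, 2.0, 3.0):
    # Exact probability of landing at least d standard deviations from the mean.
    tail = sum(q for y, q in enumerate(pmf) if abs(y - mu) >= d * sigma)
    bound = 1 / d**2
    results.append((d, tail, bound))
    print(d, tail, bound)
```

The bound holds for any distribution with finite variance, which is why it is typically far from tight for a specific one.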

The Uniform Distribution

Pdf

Mean & Variance

The Normal Distribution

Pdf

Mean $\mu$, variance $\sigma^2$

Approximation

Normal Approximation to Binomial

Condition

n is large

$\text{Binomial}(n, p, x) \approx \text{Normal}(\mu = np,\ \sigma^2 = np(1-p),\ x)$

Continuity Correction

Normal Approximation to Poisson

Condition

$\lambda$ is large

$\text{Poisson}(\lambda, x) \approx \text{Normal}(\mu = \lambda,\ \sigma^2 = \lambda,\ x)$
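
The effect of the continuity correction can be seen numerically. The sketch below approximates Prob(Y ≤ y) for a binomial Y by the normal cumulative distribution evaluated at y (uncorrected) and at y + 0.5 (corrected), and compares both with the exact sum. The values n = 100, p = 0.5, y = 55 and the helper names are assumptions for illustration.

```python
from math import comb, erf, sqrt

def normal_cdf(x: float, mu: float, sigma: float) -> float:
    """Cumulative distribution function of Normal(mu, sigma^2)."""
    return 0.5 * (1.0 + erf((x - mu) / (sigma * sqrt(2.0))))

def binomial_cdf(y: int, n: int, p: float) -> float:
    """Exact Prob(Y <= y) by summing the binomial probabilities."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(y + 1))

n, p, y = 100, 0.5, 55  # illustrative values
mu = n * p
sigma = sqrt(n * p * (1 - p))

exact = binomial_cdf(y, n, p)
uncorrected = normal_cdf(y, mu, sigma)
corrected = normal_cdf(y + 0.5, mu, sigma)  # continuity correction

print(exact, uncorrected, corrected)
```

The same correction applies to the Poisson case, with μ = λ and σ² = λ.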

The Exponential Distribution

Pdf

Cdf

Mean $1/\lambda$, variance $1/\lambda^2$

The Gamma Distribution

Pdf

Mean and Variance

The Moment-Generating Function

Definition

Useful to derive

$m'(0) = E[X]$, $m''(0) = E[X^2]$, $m^{(n)}(0) = E[X^n]$

mgf $m(t)$ = pgf $P(e^t)$
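
Both relations can be checked numerically. The sketch below takes the binomial pgf P(t) = (1 − p + pt)^n, forms the mgf as m(t) = P(e^t), and estimates m′(0) and m″(0) by central finite differences. The choice of distribution, the step size `h`, and the function names are assumptions for illustration.

```python
from math import exp

n, p = 10, 0.3  # illustrative binomial parameters

def pgf(t: float) -> float:
    """Probability-generating function of Binomial(n, p)."""
    return (1 - p + p * t) ** n

def mgf(t: float) -> float:
    """Moment-generating function obtained as m(t) = P(e^t)."""
    return pgf(exp(t))

h = 1e-3  # finite-difference step
first = (mgf(h) - mgf(-h)) / (2 * h)               # approximates m'(0) = E[X]
second = (mgf(h) - 2 * mgf(0.0) + mgf(-h)) / h**2  # approximates m''(0) = E[X^2]

print(first, n * p)
print(second, n * p * (1 - p) + (n * p) ** 2)
```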

Conditional Probability

Conditional Probability

Bayes’ Formula

Independence

Memoryless Property
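
The memoryless property can be illustrated with the exponential distribution, for which Prob(X > x) = e^(−λx). Using the definition Prob(A | B) = Prob(A and B) / Prob(B), the conditional probability Prob(X > s + t | X > s) equals Prob(X > t). The values of λ, s and t below are illustrative.

```python
from math import exp

lam = 0.5  # illustrative rate parameter

def survival(x: float) -> float:
    """Prob(X > x) for an exponential random variable with rate lam."""
    return exp(-lam * x)

s, t = 2.0, 3.0  # illustrative waiting times

# The event {X > s + t} is contained in {X > s}, so the joint
# probability is just Prob(X > s + t).
conditional = survival(s + t) / survival(s)
unconditional = survival(t)

print(conditional, unconditional)
```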

Entropy

Definition

Can be considered a function of $P_Y(y)$: a measure of how close to uniform that distribution is, and thus, in a sense, of the unpredictability of any observed value of a random variable having that distribution.

Entropy vs Variance

Both measure, in some sense, the uncertainty of the value of a random variable having that distribution

Entropy : a function of the probabilities only

Variance : depends also on the values the random variable takes
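
The contrast can be made concrete with the usual definition H = −Σ P(y) log P(y). The sketch below uses base-2 logarithms, which is an assumption since the slide's choice of base is not preserved. It shows that the uniform distribution has the largest entropy, and that rescaling the values changes the variance but not the entropy.

```python
from math import log2

def entropy(probs):
    """H = -sum p * log2(p), skipping zero-probability values."""
    return -sum(q * log2(q) for q in probs if q > 0)

def variance(values, probs):
    mean = sum(v * q for v, q in zip(values, probs))
    return sum((v - mean) ** 2 * q for v, q in zip(values, probs))

uniform = [0.25, 0.25, 0.25, 0.25]
skewed = [0.7, 0.1, 0.1, 0.1]

print(entropy(uniform), entropy(skewed))  # uniform has the larger entropy

# Same probabilities, different values: entropy is unchanged, variance is not.
values_a = [1, 2, 3, 4]
values_b = [10, 20, 30, 40]
var_a = variance(values_a, uniform)
var_b = variance(values_b, uniform)
print(var_a, var_b)
```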
