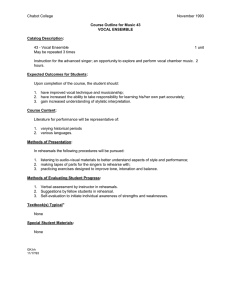

Medical Diagnosis
COMS 6998, Fall 2009
Promiti Dutta

• Vocal Emotion Recognition with Cochlear Implants. S. Luo, Q. J. Fu, J. J. Galvin (2006)
• Use of prosodic speech characteristics for automated detection of alcohol intoxication. M. Levit, R. Huber, A. Batliner, E. Noeth (2001)
• An Exploratory Social-Emotional Prosthetic for Autism Spectrum Disorders. R. Kaliouby, A. Teeters, R. W. Picard (2006)
• Measurement of emotional involvement in spontaneous speaking behavior. B. Z. Pollermann (2000)

Vocal Emotion Recognition with Cochlear Implants
S. Luo, Q. J. Fu, J. J. Galvin (2006)

Cochlear Implants (CI)
• Can restore hearing sensation to deaf individuals
• How do they work?
  – Use spectrally-based speech-processing strategies
  – Temporal envelope is extracted from a number of frequency analysis bands and used to modulate pulse trains of current delivered to the appropriate electrodes
• CI performance is poor for challenging listening tasks
  – Speech in noise
  – Music perception
  – Voice gender
  – Speaker recognition
• Current research goal
  – Improve performance in difficult listening conditions
  – How?
    • Improve transmission of spectro-temporal fine structure cues
  – Methods
    • Increase spectral resolution for apical electrodes to better code pitch information (Geurts and Wouters)
    • Sharpen the temporal envelope to enhance periodicity cues transmitted by the speech processor (Green et al.)

Prosodic Information in Spoken Language
• Prosodic features = variations in speech rhythm, intonation, etc.
• Prosodic cues = emotion of speaker
• Acoustic features associated with vocal emotion
  – Pitch (mean value and variability)
  – Intensity
  – Speech rate
  – Voice quality
  – Articulation
• Normal-hearing listeners: 70–80% recognition accuracy
• AI, NN, and statistical classifiers perform about equally well

This Study
• CI users: investigate ability to recognize vocal emotions in acted emotional speech
  – Limited access to pitch information and spectro-temporal fine structure cues
• Normal hearers: vocal emotion recognition using unprocessed speech and speech processed by acoustic CI simulations
  – Simulations: different amounts of spectral resolution and temporal information, to examine the relative contributions of spectral and temporal cues

Subjects
• 6 NH (3 males and 3 females)
  – Pure-tone threshold better than 20 dB HL at octave frequencies from 125 to 8000 Hz in both ears
• 6 CI (3 males and 3 females)
  – Post-lingually deafened
  – 5 of 6 subjects: at least one year's experience with device
  – 1 subject: 4 months' experience with device
  – 3 Nucleus-22 users, 2 Nucleus-24 users, 1 Freedom user
  – Tested using clinically assigned speech processors
• Native English speakers
• Participants paid for participation

Stimuli and Speech Processing
• HEI-ESD – emotional speech database
  – 1 male and 1 female speaker
  – 50 simple English sentences
  – 5 target emotions (neutral, anxious, happy, sad, and angry)
  – Same sentences used to convey different target emotions in order to minimize contextual and discourse cues
• Speech processing
  – Digitized using 16-bit A/D converter
  – 22,050 Hz sampling rate, without high-frequency pre-emphasis
  – Relative intensity cues preserved for each emotional quality
  – Samples NOT normalized
• Database evaluated
  – 3 NH English-speaking listeners
  – 10 sentences that produced the highest vocal emotion recognition scores selected for experimental testing
  – Total = 100 tokens (2 speakers * 5 emotions * 10 sentences)

Emotion Recognition Tests
• CI subjects: unprocessed speech
• NH subjects: unprocessed speech + speech processed by acoustic, sine-wave vocoder CI simulations
• Continuous Interleaved Sampling (CIS) strategy

Experimental Set-Up
• Subjects seated in double-walled sound-treated booth
• Listen to stimuli in free field over loudspeaker
• Presentation level = 65 dBA
• Calibrated by average power of "angry" emotion sentences produced by male talker
• Closed-set, 5-alternative identification task used to measure vocal emotion recognition
• Each trial: sentence randomly selected (without replacement) from stimulus set and presented to subject

Experimental Set-Up: Response
• Subject responds by clicking on 1 of 5 choices on screen (neutral, anxious, happy, sad, angry)
• No feedback or training
• Responses collected and scored in terms of percent correct
• At least 2 runs for each experimental condition
• CI simulations
  – Test order of speech processing conditions randomized across subjects
  – Different between two runs

Results
[Results figure]

Discussion
• Results show both spectral and temporal cues significantly contribute to performance
• Spectral cues may contribute more strongly to recognition of linguistic information
• Temporal cues may contribute more strongly to recognition of emotional content coded in spoken language
• Results show a potential trade-off between spectral resolution and periodicity cues when performing vocal emotion recognition task
• Future: improve access to spectral and temporal fine structure cues to enhance recognition
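
The closed-set scoring described under Experimental Set-Up can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names and the `respond` callback (a stand-in for a listener's click) are hypothetical and do not come from the study's software. It shows the 100-token stimulus set, presentation in random order without replacement, percent-correct scoring, and the chance level of a 5-alternative task.

```python
import random

EMOTIONS = ["neutral", "anxious", "happy", "sad", "angry"]


def build_stimulus_set(n_speakers=2, n_sentences=10):
    """One token per (speaker, emotion, sentence) combination."""
    return [
        (speaker, emotion, sentence)
        for speaker in range(n_speakers)
        for emotion in EMOTIONS
        for sentence in range(n_sentences)
    ]


def run_block(tokens, respond, seed=None):
    """Present every token once in random order; return percent correct.

    `respond` is a hypothetical callback that takes a token and returns
    one of the five emotion labels.
    """
    rng = random.Random(seed)
    order = rng.sample(tokens, len(tokens))  # random order, no replacement
    n_correct = sum(1 for token in order if respond(token) == token[1])
    return 100.0 * n_correct / len(order)


tokens = build_stimulus_set()
chance_level = 100.0 / len(EMOTIONS)  # expected score for random guessing

# A listener who always answers "neutral" scores exactly at chance here,
# because each emotion accounts for one fifth of the tokens.
always_neutral = run_block(tokens, lambda token: "neutral", seed=1)
```

Comparing a subject's score with `chance_level` gives a quick sanity check on whether any emotion information is being used at all.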

Use of prosodic speech characteristics for automated detection of alcohol intoxication
M. Levit, R. Huber, A. Batliner, E. Noeth (2001)

Introduction
• Spoken language influenced by
  – Speaker
  – Emotions
  – Physiological impairment caused by drugs or alcohol
• Goal – to identify and classify stress and emotions in spoken language
  – Acoustic features (cepstral coefficients)
  – Prosodic features (fundamental frequency)
• No experiments on automated detection of alcohol intoxication by spoken language
  – Structural prosodic features – one vector of prosodic features for each signal interval of a lexical unit of speech
• ASR problems?

Contribution
• New approach to determine signal intervals which underlie extraction of prosodic features
• Avoid use of ASR
• Use of phrasal units
  – Relates prosodic structural features to signal intervals localized through basic prosodic features
    • i.e. zero-crossing, energy, fundamental frequency

Phrasal Units
• Prosodic units
  – Micro-intervals, entire signal as one interval, macro-intervals
• Phrasal unit – speech intervals calculated frame-wise
  – Fundamental frequency, zero-crossing, energy

Features
• Prosodic units – one vector
  – PM21 – prosodic features describing macro-tendencies in fundamental frequency and energy (21)
  – VUI11 – duration characteristics of voiced and unvoiced intervals (11)
  – LTM24 – long-term cepstral coefficients (non-prosodic features)
  – Jitter, shimmer, short-term fluctuations in energy, fundamental frequency

Database
• Alcoholized speech samples from Germany
• 120 readings of a German fable
• 33 male speakers in different alcoholization conditions
  – Blood alcohol level – 0 to 2.4 per mille
  – Phrasal units
    • Average duration – 2.3 seconds
    • Average speech tempo – 20.8 PhU/min
• Alcoholized = 0.8 per mille and higher

Conclusions
• Prosodic speech characteristics can be used to determine intoxication
• Shown how to extract prosodic features with classification abilities from speech signal without lexical segmentation
• Shown phrasal units correspond to syntactic structures of language
• Determined set of structural prosodic features capable of best classification for automatic detection of intoxication
  – 69% accuracy on unseen data
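
Two of the basic frame-wise features named above, zero-crossing and energy, can be illustrated with a short Python sketch. This is not the authors' phrasal-unit algorithm, which the slides do not spell out, and fundamental-frequency estimation is left out. The frame length, hop size, toy signal, and all function names are assumptions chosen for illustration; only the 0.8 per mille labeling threshold is taken from the Database slide.

```python
def frames(signal, frame_len, hop):
    """Split a list of samples into overlapping fixed-length frames."""
    return [
        signal[start:start + frame_len]
        for start in range(0, len(signal) - frame_len + 1, hop)
    ]


def zero_crossings(frame):
    """Count sign changes between consecutive samples in a frame."""
    return sum(
        1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0)
    )


def energy(frame):
    """Mean squared amplitude of a frame."""
    return sum(x * x for x in frame) / len(frame)


def is_alcoholized(per_mille, threshold=0.8):
    """Label a recording using the 0.8 per mille cut-off from the slides."""
    return per_mille >= threshold


# Toy signal (hypothetical): alternating samples, 16 values long.
signal = [1.0, -1.0] * 8
features = [(zero_crossings(f), energy(f)) for f in frames(signal, 4, 2)]
```

In a real pipeline these per-frame values would feed the interval detection step; here they only show what "calculated frame-wise" means in practice.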

An Exploratory Social-Emotional Prosthetic for Autism Spectrum Disorders
R. Kaliouby, A. Teeters, R. W. Picard (2006)

Autism Spectrum Disorders: Interesting Facts
• Affects 1 in 91 children and 1 in 58 boys
• Autism prevalence figures are growing
• More children will be diagnosed with autism this year than with AIDS, diabetes & cancer combined
• Fastest-growing serious developmental disability in the U.S.

Autism Spectrum Disorders: Overview
• Neuro-developmental disorder
  – Mainly characterized by difficulties in communication and social interaction
• May exhibit atypical autonomic nervous system patterns
  – May be monitored by electrodes (measure skin conductance)

Goal and Proposed Solution
• Goal:
  – Find an objective set of features to describe social interactions
• Proposed solution
  – Use a wearable device as exploratory and monitoring tool for people with ASD

Wearable Device
affective computing + wearable computing + real-time machine perception = novel wearable device that analyzes social-emotional information in human-human interaction

A Novel Device?
• Small wearable camera
• Sensors combined with machine vision and perception algorithms
• System analyzes facial expression and head movements of the person with whom user is interacting
• Possible integration of skin conductance sensors
  – Match video with co-occurring measurable physiological changes

Wearable Device Benefit
Record a corpus of natural face-to-face interactions + machine perception algorithms = help identify the spatio-temporal features of a social interaction that predict how the interaction is perceived

Overall Benefit
• Monitor progress of people with ASD in terms of social-emotional interactions
• Determine effectiveness of social skill and behavioral therapies

Measurement of emotional involvement in spontaneous speaking behavior
B. Z. Pollermann (2000)

Introduction
• Measurement of vocal indicators of emotions in laboratory settings: typically done by computing deviation of emotionally charged speech patterns from neutral pattern
• Measurement of genuine emotional reactions occurring spontaneously poses the problem of comparison with baseline level

Similarities
• Spontaneous speaking behavior
• Intra-subject comparisons
• Do not require a "neutral" condition

Method 1
• Subjects
  – 39 diabetic patients with different levels of ANS impairment
• Data
  – Emotion induced through subjects' verbal recall of their emotional experiences of joy, sadness and anger
  – At end of each episode: standard sentence in emotion-congruent tone
• Method
  1) Standard sentence acoustically analyzed
  2) Extract basic vocal parameters (Zei & Archinard, 1998)
  3) Compute Vocal Differential Index (emotional involvement)
    • Ratio between value obtained in high arousal conditions (anger and joy) and that in low arousal condition (sadness)
  4) Additional variables per vocal parameter: Anger/Sadness Differential and Joy/Sadness Differential
• Results
  1) Vocal Differential Index is positively correlated with functioning of the autonomic nervous system
  2) Vocal Arousal Index
    a) Computed from cumulative score consisting of acoustic parameters significantly related to differentiation of 3 emotions
    b) Score was composed of Z values
    c) Reflects degree of emotional involvement for each emotion

Method 2
• Subjects
  – 10 breast cancer patients
• Data Collection
  – Interview to determine coping style (well-adaptive vs. ill-adaptive)
• Hypothesis
  – Confrontation with emotional contents during interview would cause subjects to encode emotional reactions into voices
• Method
  1) Interview screened for passages of high and low vocal arousal
  2) Vocal Differential Index calculated for each arousal
  3) Vocal Arousal measured for passage where subject talks about coping with illness
• Results
  1) Vocal Arousal Index inside the baseline range indicative of coping style
  2) Relatively narrow Vocal Differential Index related to coping difficulties
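
The ratio described under Method 1 (high-arousal value divided by low-arousal value, plus the separate Anger/Sadness and Joy/Sadness differentials) can be sketched as below. This is a hedged illustration, not the author's procedure: the slides do not say how anger and joy are combined into one high-arousal value, so averaging them is an assumption, and the function name and the example F0 values are hypothetical rather than data from the study.

```python
from statistics import mean


def vocal_differentials(param_by_emotion):
    """Intra-subject ratios for one vocal parameter (e.g. mean F0).

    `param_by_emotion` maps "anger", "joy" and "sadness" to the value of
    the same parameter measured on the standard sentence in each emotion.
    """
    anger = param_by_emotion["anger"]
    joy = param_by_emotion["joy"]
    sadness = param_by_emotion["sadness"]
    if sadness == 0:
        raise ValueError("low-arousal (sadness) value must be non-zero")
    return {
        "anger_sadness": anger / sadness,
        "joy_sadness": joy / sadness,
        # Assumption: high arousal is the average of anger and joy.
        "high_low": mean([anger, joy]) / sadness,
    }


# Hypothetical mean F0 values in Hz for one speaker.
example = vocal_differentials(
    {"anger": 220.0, "joy": 200.0, "sadness": 160.0}
)
```

Because every ratio uses the speaker's own sadness value as the reference, no separate "neutral" recording is needed, which matches the intra-subject comparison emphasized in the slides.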