5/12/99 252y9943 ECO252 QBA2 Name

advertisement

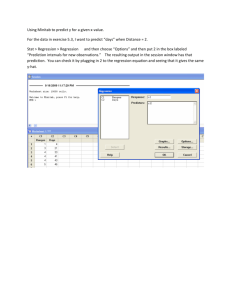

FINAL EXAM
May 5, 1999
Hour of Class Registered (Circle): MWF 10 11 TR 12:30 2:00

Note: If this is the only thing you look at before taking the final, you are badly cheating yourself. Problems like 5e, 5f and 6 appeared on the 1998 final. People who used this final and did not read the problems carefully got very wrong answers to them.

Note: If you still think that a large p-value means that a coefficient is significant, you need a conference with an audiologist. Further note that a p-value is a probability and can only be compared with another probability (like the significance level).

Note: Have you reread “Things that You Should Never Do On a Statistics Exam …?” I think I could have graded this exam by just looking for violations of these rules.

I. (16 points) Do all the following.

1. Hand in your fourth regression problem (2 points) and answer the following questions.
a. For the regression of the number of hours of work against the number of machines, what coefficients are significant at the 1% level? Why? What about the 5% level? (2)
b. Would you say that the regression of number of hours of work against the number of machines and months of experience is more successful than the regression against machines alone? Why? (3)
c. What was the surprise that occurred when you did the stepwise regression? (2)

Solution: The rule on p-value: If the p-value is less than the significance level, reject the null hypothesis; if the p-value is greater than or equal to the significance level, do not reject the null hypothesis.

a) Part of the printout follows. (For the entire printout see 252x9943.)
MTB > print c1-c4

Data Display

Row   Hours  Number  Exper  Inter
  1     1.0       1     12     12
  2     3.1       3      8     24
  3    17.0      10      5     50
  4    14.0       8      2     16
  5     6.0       5     10     50
  6     1.8       1      1      1
  7    11.5      10     10    100
  8     9.3       5      2     10
  9     6.0       4      6     24
 10    12.2      10     18    180

MTB > brief 3
MTB > regress c1 on 1 c2 'resid''pred'

Regression Analysis

The regression equation is
Hours = 0.10 + 1.42 Number

Predictor     Coef   Stdev  t-ratio      p
Constant     0.101   1.267     0.08  0.939
Number      1.4192  0.1908     7.44  0.000

s = 2.056   R-sq = 87.4%   R-sq(adj) = 85.8%

Analysis of Variance

SOURCE      DF      SS      MS      F      p
Regression   1  233.84  233.84  55.30  0.000
Error        8   33.83    4.23
Total        9  267.67

Obs.  Number   Hours     Fit  Stdev.Fit  Residual  St.Resid
  1      1.0   1.000   1.520      1.108    -0.520     -0.30
  2      3.0   3.100   4.358      0.830    -1.258     -0.67
  3     10.0  17.000  14.293      1.047     2.707      1.53
  4      8.0  14.000  11.454      0.785     2.546      1.34
  5      5.0   6.000   7.197      0.664    -1.197     -0.61
  6      1.0   1.800   1.520      1.108     0.280      0.16
  7     10.0  11.500  14.293      1.047    -2.793     -1.58
  8      5.0   9.300   7.197      0.664     2.103      1.08
  9      4.0   6.000   5.777      0.727     0.223      0.12
 10     10.0  12.200  14.293      1.047    -2.093     -1.18

Since the p-value column shows .939 as the p-value for 0.101, the coefficient ‘Constant,’ and .939 is above the significance levels of .01 and .05, we can say that the constant is not significant at these levels. If you look up the values of t for 8 degrees of freedom and tail areas of .005 and .025 (3.355 and 2.306), you will find that the t-ratio of 0.08 is less than the table values.

Likewise, since the p-value column shows zero as the p-value for 1.4192, the coefficient of ‘Number,’ and zero is below the significance levels of .01 and .05, we can say that the coefficient of ‘Number’ is significant at these levels. If you look up the values of t for 8 degrees of freedom and tail areas of .005 and .025, you will find that the t-ratio of 7.44 is more than the table values.
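The coefficient tests in part a) can be checked by hand. The sketch below is not part of the exam printout: it refits Hours on Number with the usual least-squares formulas in plain Python and compares each t-ratio with the two-sided table values for 8 degrees of freedom (2.306 at the 5% level and 3.355 at the 1% level, hard-coded here as an assumption taken from a standard t table). The function and variable names are illustrative only.

```python
import math

# Data from the Minitab display above (columns Hours and Number).
hours = [1.0, 3.1, 17.0, 14.0, 6.0, 1.8, 11.5, 9.3, 6.0, 12.2]
number = [1, 3, 10, 8, 5, 1, 10, 5, 4, 10]


def simple_regression(x, y):
    """Least-squares fit of y = b0 + b1*x, with t-ratios for both coefficients."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    sse = sum((yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y))
    s = math.sqrt(sse / (n - 2))                     # standard error of estimate
    se_b1 = s / math.sqrt(sxx)                       # Stdev of the slope
    se_b0 = s * math.sqrt(1 / n + x_bar ** 2 / sxx)  # Stdev of the constant
    return {"b0": b0, "b1": b1, "s": s, "t_b0": b0 / se_b0, "t_b1": b1 / se_b1}


# Two-sided critical values of t for 8 degrees of freedom (assumed, from a t table).
T_CRIT = {0.05: 2.306, 0.01: 3.355}

fit = simple_regression(number, hours)
for name, t in (("Constant", fit["t_b0"]), ("Number", fit["t_b1"])):
    for alpha, crit in T_CRIT.items():
        verdict = "significant" if abs(t) > crit else "not significant"
        print(f"{name}: t = {t:.2f}, alpha = {alpha}: {verdict}")
```

Comparing |t| with the table value and comparing the p-value with the significance level are two routes to the same decision, which is why the printout's p column is enough to answer part a).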
b) Part of the printout follows:

MTB > regress c1 on 2 c2 c3 'resid''pred'

Regression Analysis

The regression equation is
Hours = 1.62 + 1.53 Number - 0.293 Exper

Predictor      Coef    Stdev  t-ratio      p
Constant     1.6191   0.9746     1.66  0.141
Number       1.5333   0.1335    11.49  0.000
Exper      -0.29311  0.09019    -3.25  0.014

s = 1.388   R-sq = 95.0%   R-sq(adj) = 93.5%

Analysis of Variance

SOURCE      DF      SS      MS      F      p
Regression   2  254.19  127.09  65.99  0.000
Error        7   13.48    1.93
Total        9  267.67

SOURCE  DF  SEQ SS
Number   1  233.84
Exper    1   20.34

Obs.  Number   Hours     Fit  Stdev.Fit  Residual  St.Resid
  1      1.0   1.000  -0.365      0.946     1.365      1.34
  2      3.0   3.100   3.874      0.579    -0.774     -0.61
  3     10.0  17.000  15.487      0.796     1.513      1.33
  4      8.0  14.000  13.299      0.776     0.701      0.61
  5      5.0   6.000   6.355      0.518    -0.355     -0.28
  6      1.0   1.800   2.859      0.854    -1.059     -0.97
  7     10.0  11.500  14.021      0.712    -2.521     -2.12R
  8      5.0   9.300   8.699      0.644     0.601      0.49
  9      4.0   6.000   5.994      0.495     0.006      0.00
 10     10.0  12.200  11.676      1.071     0.524      0.59

R denotes an obs. with a large st. resid.

This is one of the few really good ones. R-squared and R-squared adjusted went up, and, equally important, the coefficient of ‘Number’ remained significant while the coefficient of ‘Exper’ was significant at the 5% level.

c) Part of the printout follows:

Stepwise Regression

F-to-Enter: 4.00   F-to-Remove: 4.00

Response is Hours on 3 predictors, with N = 10

Step            1        2
Constant   0.1005  -0.4758

Number       1.42     1.85
T-Ratio      7.44    10.81

Inter               -0.040
T-Ratio              -3.56

S            2.06     1.31
R-Sq        87.36    95.51

More? (Yes, No, Subcommand, or Help)
SUBC> y
No variables entered or removed
More? (Yes, No, Subcommand, or Help)
SUBC> n
MTB > print c1-c6

This was a surprise! Though the coefficients in column 1 are the same as those in the regression of ‘Hours’ against ‘Number’ above, the variable it brought in in column 2 is the interaction variable. I had assumed that it would only help the other two variables to explain ‘Hours,’ but the computer refused to bring in ‘Exper.’

2.
The following pages show the regression of the variable 'mins', the winning time in minutes in a triathlon, against some of the following independent variables:

'female'  A dummy variable that is 1 if the contestant is female.
'swim'    Number of miles of swimming
'bike'    Number of miles of biking
'run'     Number of miles of running
c6        ‘swim’ multiplied by ‘female’
c7        ‘bike’ multiplied by ‘female’
c8        ‘run’ multiplied by ‘female’
c9        ‘swim’ squared
c10       ‘bike’ squared
c11       ‘run’ squared

a. In the regression of ‘mins’ against ‘female’, ‘swim’, ‘bike’ and ‘run’, which coefficients have signs that look wrong? Why? Which coefficients are not significant at the 99% confidence level? (3)
b. Look at the regression of ‘mins’ against ‘run’, c8 and c11 and the regression of ‘mins’ against ‘run’ and c8. Use $\alpha = .10$. Does either seem to be an improvement over the regression of ‘mins’ against ‘run’ alone? Why? (2)
c. Explain the meaning of the F test in the regression of ‘mins’ against ‘female’, ‘swim’, ‘bike’ and ‘run’. What is being tested and what are the conclusions? (2)
d. The printout concludes with a printout of the data and of a correlation matrix. What does this suggest about the problems that are occurring with these regressions? (2)

Solution: The printout enclosed with the exam follows:

Worksheet size: 100000 cells
MTB > RETR 'C:\MINITAB\LR13-49.MTW'.
Retrieving worksheet from file: C:\MINITAB\LR13-49.MTW
Worksheet was saved on 5/ 3/1999
MTB > regress c1 on 4 c2 c3 c4 c5

Regression Analysis

The regression equation is
mins = - 24.6 + 35.5 female - 25.0 swim + 7.13 bike - 6.37 run

Predictor    Coef  Stdev  t-ratio      p
Constant   -24.57  20.13    -1.22  0.241
female      35.47  14.77     2.40  0.030
swim       -25.01  45.75    -0.55  0.593
bike        7.130  1.331     5.36  0.000
run        -6.372  5.384    -1.18  0.255

s = 33.02   R-sq = 98.0%   R-sq(adj) = 97.4%

a) There is no reason to expect the constant to be positive or negative.
However, in this equation a negative coefficient for ‘swim’, ‘bike’ or ‘run’ would lead us to believe that an extra mile of swimming, biking or running would lead to a faster time, so the negative signs on ‘swim’ and ‘run’ look wrong. The positive coefficient for ‘female’ is expected, because, at least at present, women’s times in speed events have been longer than men’s. (However, at the rate that women’s times in athletic events are falling this may not always be true!) The constant and the coefficients of ‘female’, ‘swim’ and ‘run’ have p-values above .01. If the confidence level is 99%, the significance level is .01, so these coefficients are not significant; only the coefficient of ‘bike’ is.

Analysis of Variance

SOURCE      DF      SS      MS       F      p
Regression   4  786104  196526  180.29  0.000
Error       15   16351    1090
Total       19  802455

c) The F test here is a test of the null hypothesis that the independent variables do not explain the dependent variable. The low p-value means that we reject the null hypothesis.

SOURCE  DF  SEQ SS
female   1    6291
swim     1  726098
bike     1   52189
run      1    1526

Unusual Observations
Obs.  female    mins     Fit  Stdev.Fit  Residual  St.Resid
  1     0.00  489.25  547.00      17.48    -57.75    -2.06R
 18     1.00  660.48  582.47      17.48     78.01     2.79R

R denotes an obs. with a large st. resid.

MTB > regress c1 on 1 c5

Regression Analysis

The regression equation is
mins = - 19.2 + 23.6 run

Predictor    Coef  Stdev  t-ratio      p
Constant   -19.25  23.19    -0.83  0.417
run        23.615  1.582    14.92  0.000

s = 57.74   R-sq = 92.5%   R-sq(adj) = 92.1%

Analysis of Variance

SOURCE      DF      SS      MS       F      p
Regression   1  742445  742445  222.69  0.000
Error       18   60011    3334
Total       19  802455

Unusual Observations
Obs.   run   mins    Fit  Stdev.Fit  Residual  St.Resid
  1   26.2  489.2  599.5       25.7    -110.2    -2.13R
 12   18.6  589.1  420.0       16.4     169.1     3.05R

R denotes an obs. with a large st. resid.
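The F test discussed in part c) can be recomputed from the sums of squares in the ANOVA tables above. The following is a minimal sketch, not part of the original printout; `anova_summary` and its argument names are hypothetical helpers chosen for illustration.

```python
def anova_summary(ss_regression, ss_error, k, n):
    """Mean squares, F ratio and R-squared for a regression with k predictors."""
    df_error = n - k - 1
    msr = ss_regression / k          # mean square for regression
    mse = ss_error / df_error        # mean square for error
    return {
        "df": (k, df_error),
        "msr": msr,
        "mse": mse,
        "f": msr / mse,
        "r_sq": ss_regression / (ss_regression + ss_error),
    }


# Sums of squares copied from the two ANOVA tables above (n = 20 observations).
full = anova_summary(ss_regression=786104, ss_error=16351, k=4, n=20)
run_only = anova_summary(ss_regression=742445, ss_error=60011, k=1, n=20)

for label, a in (("female, swim, bike, run", full), ("run", run_only)):
    print(f"mins on {label}: F{a['df']} = {a['f']:.2f}, R-sq = {a['r_sq']:.1%}")
```

A large F ratio means the variation explained per predictor is large relative to the unexplained variation per error degree of freedom, which is what the printout's p-value of 0.000 reports.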
MTB > regress c1 on 2 c5 c8

Regression Analysis

The regression equation is
mins = - 19.2 + 22.1 run + 3.02 C8

Predictor    Coef  Stdev  t-ratio      p
Constant   -19.25  21.83    -0.88  0.390
run        22.106  1.705    12.96  0.000
C8          3.017  1.659     1.82  0.087

s = 54.36   R-sq = 93.7%   R-sq(adj) = 93.0%

Analysis of Variance

SOURCE      DF      SS      MS       F      p
Regression   2  752216  376108  127.27  0.000
Error       17   50240    2955
Total       19  802455

SOURCE  DF  SEQ SS
run      1  742445
C8       1    9771

Unusual Observations
Obs.   run   mins    Fit  Stdev.Fit  Residual  St.Resid
  2   18.6  505.1  391.9       21.9     113.2     2.27R
 11   26.2  540.9  639.0       32.5     -98.1    -2.25R
 12   18.6  589.1  448.0       21.9     141.0     2.83R

R denotes an obs. with a large st. resid.

MTB > regress c1 on 2 c5 c11

Regression Analysis

The regression equation is
mins = - 102 + 39.6 run - 0.519 C11

Predictor     Coef   Stdev  t-ratio      p
Constant   -101.71   49.18    -2.07  0.054
run         39.550   8.654     4.57  0.000
C11        -0.5192  0.2778    -1.87  0.079

s = 54.11   R-sq = 93.8%   R-sq(adj) = 93.1%

Analysis of Variance

SOURCE      DF      SS      MS       F      p
Regression   2  752675  376337  128.52  0.000
Error       17   49780    2928
Total       19  802455

SOURCE  DF  SEQ SS
run      1  742445
C11      1   10230

Unusual Observations
Obs.   run   mins    Fit  Stdev.Fit  Residual  St.Resid
 12   18.6  589.1  454.3       24.0     134.8     2.78R

R denotes an obs. with a large st. resid.

MTB > regress c1 on 3 c5 c8 c11

Regression Analysis

The regression equation is
mins = - 102 + 38.0 run + 3.02 C8 - 0.519 C11

Predictor     Coef   Stdev  t-ratio      p
Constant   -101.71   45.45    -2.24  0.040
run         38.042   8.033     4.74  0.000
C8           3.017   1.526     1.98  0.066
C11        -0.5192  0.2567    -2.02  0.060

s = 50.01   R-sq = 95.0%   R-sq(adj) = 94.1%

b) Some people have all the luck! Unlike most choices I gave, these are really good. Not only do R-squared and R-squared adjusted go up, but the p-values are all below 10% and the signs of the coefficients are reasonable. So yes, these are probably improvements.
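The claim in part b) that R-squared and R-squared adjusted both go up can be verified from the error and total sums of squares in the ANOVA tables of this printout. The sketch below is illustrative and not part of the original solution; the model labels and function name are hypothetical.

```python
SS_TOTAL = 802455   # total sum of squares, identical in every ANOVA table
N = 20              # number of observations

# (label, number of predictors k, error sum of squares) read off the printout.
models = [
    ("run", 1, 60011),
    ("run, C8", 2, 50240),
    ("run, C11", 2, 49780),
    ("run, C8, C11", 3, 40009),
]


def fit_measures(ss_error, k, ss_total=SS_TOTAL, n=N):
    """Return (R-squared, adjusted R-squared) for a model with k predictors."""
    r_sq = 1 - ss_error / ss_total
    adj = 1 - (ss_error / (n - k - 1)) / (ss_total / (n - 1))
    return r_sq, adj


results = {label: fit_measures(sse, k) for label, k, sse in models}
for label, (r_sq, adj) in results.items():
    print(f"mins on {label:13s} R-sq = {r_sq:.1%}  R-sq(adj) = {adj:.1%}")
```

Adjusted R-squared penalizes each added predictor through the error degrees of freedom, so a rise in it is stronger evidence of improvement than a rise in R-squared alone, which can never fall when a variable is added.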
Analysis of Variance

SOURCE      DF      SS      MS       F      p
Regression   3  762446  254149  101.64  0.000
Error       16   40009    2501
Total       19  802455

SOURCE  DF  SEQ SS
run      1  742445
C8       1    9771
C11      1   10230

Unusual Observations
Obs.   run   mins    Fit  Stdev.Fit  Residual  St.Resid
 12   18.6  589.1  482.3       26.3     106.7     2.51R

R denotes an obs. with a large st. resid.

MTB > print c1-c11

Data Display

Row     mins  female  swim   bike   run    C6     C7    C8      C9
  1  489.250       0  2.40  112.0  26.2  0.00    0.0   0.0  5.7600
  2  505.150       0  2.00  100.0  18.6  0.00    0.0   0.0  4.0000
  3  245.500       0  1.20   55.3  13.1  0.00    0.0   0.0  1.4400
  4  204.400       0  1.50   48.0  10.0  0.00    0.0   0.0  2.2500
  5  114.533       0  0.93   24.8   6.2  0.00    0.0   0.0  0.8649
  6  108.267       0  0.93   24.8   6.2  0.00    0.0   0.0  0.8649
  7   79.417       0  0.50   18.0   5.0  0.00    0.0   0.0  0.2500
  8  566.500       0  2.40  112.0  26.2  0.00    0.0   0.0  5.7600
  9   74.983       0  0.50   20.0   4.0  0.00    0.0   0.0  0.2500
 10  116.117       0  0.60   25.0   6.2  0.00    0.0   0.0  0.3600
 11  540.933       1  2.40  112.0  26.2  2.40  112.0  26.2  5.7600
 12  589.067       1  2.00  100.0  18.6  2.00  100.0  18.6  4.0000
 13  280.100       1  1.20   55.3  13.1  1.20   55.3  13.1  1.4400
 14  235.033       1  1.50   48.0  10.0  1.50   48.0  10.0  2.2500
 15  127.167       1  0.93   24.8   6.2  0.93   24.8   6.2  0.8649
 16  120.750       1  0.93   24.8   6.2  0.93   24.8   6.2  0.8649
 17   90.317       1  0.50   18.0   5.0  0.50   18.0   5.0  0.2500
 18  660.483       1  2.40  112.0  26.2  2.40  112.0  26.2  5.7600
 19   83.150       1  0.50   20.0   4.0  0.50   20.0   4.0  0.2500
 20  131.817       1  0.60   25.0   6.2  0.60   25.0   6.2  0.3600

Row      C10     C11
  1  12544.0  686.44
  2  10000.0  345.96
  3   3058.1  171.61
  4   2304.0  100.00
  5    615.0   38.44
  6    615.0   38.44
  7    324.0   25.00
  8  12544.0  686.44
  9    400.0   16.00
 10    625.0   38.44
 11  12544.0  686.44
 12  10000.0  345.96
 13   3058.1  171.61
 14   2304.0  100.00
 15    615.0   38.44
 16    615.0   38.44
 17    324.0   25.00
 18  12544.0  686.44
 19    400.0   16.00
 20    625.0   38.44

MTB > Correlation c1 c2 c3 c4 c5 c6 c7 c8 c9 c10 c11.
Correlations (Pearson)

          mins  female   swim   bike    run     C6     C7     C8
female   0.089
swim     0.951   0.000
bike     0.984   0.000  0.973
run      0.962   0.000  0.965  0.985
C6       0.510   0.792  0.432  0.420  0.417
C7       0.584   0.716  0.480  0.494  0.487  0.982
C8       0.564   0.726  0.470  0.479  0.487  0.980  0.993
C9       0.956   0.000  0.985  0.979  0.982  0.426  0.483  0.478
C10      0.975   0.000  0.954  0.989  0.983  0.412  0.488  0.478
C11      0.928   0.000  0.932  0.954  0.985  0.403  0.471  0.479

            C9    C10
C10      0.982
C11      0.974  0.975

d) There are many correlations above .9 here. These indicate that collinearity is a problem and that many regressions will give coefficients that are insignificant or have unreasonable signs.

II. Do at least 4 of the following 7 Problems (at least 15 each) (or do sections adding to at least 60 points. Anything extra you do helps, and grades wrap around). Show your work! State $H_0$ and $H_1$ where applicable. Use a significance level of 5% unless noted otherwise.

Note: These problems involve comparing population means, variances, proportions or medians. To do this you use sample means, variances or proportions. If you look at a problem and tell me that the sample means, variances, proportions or medians differ without incorporating them in a test, you are wasting both your time and mine, and it is possible that my annoyance will affect how I grade the rest of your exam, since I will now suspect that you have no idea what a significant difference is or what we mean by a statistical test!

1. a. Premiums on a group of 11 closed-end mutual funds were as follows. (These are in per cent, but that shouldn’t affect your analysis.) Test the hypothesis that the mean is 3 per cent using (i) either a test ratio or a critical value and (ii) a confidence interval. (6)

+4.7 -0.7 +5.3 +9.2 -0.3 -0.3 +5.0 +0.4 -1.9 +0.5 -3.1

b. Test that the following data (i) has a Poisson distribution (6) and (ii) has a Poisson distribution with a mean of 4.5 (6). If you do both parts, do only one with a chi-square method.
x   0   1   2   3   4   5   6   7
O  23  19  42  60  89  79  48  40

Solution: a) From Table 3 of the Syllabus Supplement:

Interval for: Mean ($\sigma$ unknown)
Confidence Interval: $\mu = \bar{x} \pm t_{\alpha/2}\, s_{\bar{x}}$, $DF = n - 1$
Hypotheses: $H_0: \mu = \mu_0$, $H_1: \mu \ne \mu_0$
Test Ratio: $t = \dfrac{\bar{x} - \mu_0}{s_{\bar{x}}}$
Critical Value: $\bar{x}_{cv} = \mu_0 \pm t_{\alpha/2}\, s_{\bar{x}}$

Here $H_0: \mu = 3$, $H_1: \mu \ne 3$, $n = 11$, $\mu_0 = 3$, $DF = n - 1 = 10$, $\alpha = .05$ and $t^{(n-1)}_{\alpha/2} = t^{(10)}_{.025} = 2.228$.

    x     x^2
 +4.7   22.09
 -0.7    0.49
 +5.3   28.09
 +9.2   84.64
 -0.3    0.09
 -0.3    0.09
 +5.0   25.00
 +0.4    0.16
 -1.9    3.61
 +0.5    0.25
 -3.1    9.61
 18.8  174.12

Since $\sum x = 18.8$ and $\sum x^2 = 174.12$, we find $\bar{x} = \dfrac{\sum x}{n} = \dfrac{18.8}{11} = 1.7091$, $s^2 = \dfrac{\sum x^2 - n\bar{x}^2}{n - 1} = \dfrac{174.12 - 11(1.7091)^2}{10} = 14.1989$, $s = \sqrt{14.1989} = 3.7681$ and $s_{\bar{x}} = \dfrac{s}{\sqrt{n}} = \sqrt{\dfrac{14.1989}{11}} = \sqrt{1.2908} = 1.1361$.

(i) Test Ratio: $t = \dfrac{\bar{x} - \mu_0}{s_{\bar{x}}} = \dfrac{1.7091 - 3}{1.1361} = -1.136$. This is in the ‘accept’ region between $\pm 2.228$, so do not reject $H_0$.

Critical Value: Since this is a 2-sided test, $\bar{x}_{cv} = \mu_0 \pm t_{\alpha/2}\, s_{\bar{x}} = 3 \pm 2.228(1.1361) = 3 \pm 2.5312$, or 0.469 to 5.531. This means that we reject $H_0$ if the sample mean is above 5.531 or below 0.469. Since $\bar{x} = 1.7091$ is between these critical values, do not reject $H_0$.

(ii) Confidence Interval: Since this is a two-sided test, $\mu = \bar{x} \pm t_{\alpha/2}\, s_{\bar{x}} = 1.7091 \pm 2.228(1.1361) = 1.7091 \pm 2.5312$, or $-0.822 \le \mu \le 4.240$. This does not contradict $H_0: \mu = 3$, because 3 is between -0.822 and 4.240, so do not reject $H_0$.

b) (i) $H_0$: Poisson. Since the parameter is unknown, the chi-squared method is the only possible method. To find the mean, sum $xO$ and divide by $n$, which is the sum of $O$. The actual comparison can be done either by summing $\dfrac{(E - O)^2}{E}$ or by summing $\dfrac{O^2}{E}$ and subtracting $n$.

  x    O    xO
  0   23     0
  1   19    19
  2   42    84
  3   60   180
  4   89   356
  5   79   395
  6   48   288
  7   40   280
     400  1602

so $\bar{x} = \dfrac{\sum xO}{\sum O} = \dfrac{1602}{400} = 4.005$. We look up probabilities in the Poisson table for a mean of 4.0 (these are in the column labeled $p$) and multiply them by $n$ to get $E$.
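The table lookup described above can also be done by computing the Poisson probabilities directly. The sketch below is illustrative and not part of the original solution; it assumes the table entry for a mean of 4.0 was used (which matches the tabled p for x = 0, 0.018316), pools everything above 7 into an "8+" cell, and takes the 5% chi-squared critical value for 7 degrees of freedom, 14.0671, from the solution text.

```python
import math

observed = [23, 19, 42, 60, 89, 79, 48, 40]   # O for x = 0, 1, ..., 7
n = sum(observed)                              # 400
sample_mean = sum(x * o for x, o in enumerate(observed)) / n   # 1602 / 400


def poisson_pmf(x, mean):
    """P(X = x) for a Poisson distribution with the given mean."""
    return math.exp(-mean) * mean ** x / math.factorial(x)


TABLE_MEAN = 4.0   # assumption: sample mean 4.005 rounded to the tabled mean
probs = [poisson_pmf(x, TABLE_MEAN) for x in range(len(observed))]
probs.append(1 - sum(probs))        # open-ended "8+" cell
obs = observed + [0]                # nothing was observed above 7
expected = [n * p for p in probs]

# Two equivalent forms of the chi-squared statistic.
chi_sq = sum((e - o) ** 2 / e for o, e in zip(obs, expected))
shortcut = sum(o ** 2 / e for o, e in zip(obs, expected)) - n

df = len(obs) - 1 - 1               # cells - 1 - one estimated parameter
CHI_SQ_CRIT = 14.0671               # 5% value for 7 df, from the solution text
print(f"mean = {sample_mean}, chi-sq = {chi_sq:.3f}, df = {df}")
print("reject H0" if chi_sq > CHI_SQ_CRIT else "do not reject H0")
```

The two forms agree because the expected frequencies sum to n, so either column of the worksheet can be used as a check on the other.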
  x    O         E       E-O   (E-O)^2  (E-O)^2/E    O^2/E         p
  0   23    7.3264  -15.6736   245.662    33.5310   72.205  0.018316
  1   19   29.3052   10.3052   106.197     3.6238   12.319  0.073263
  2   42   58.6100   16.6100   275.892     4.7073   30.097  0.146525
  3   60   78.1468   18.1468   329.306     4.2139   46.067  0.195367
  4   89   78.1468  -10.8532   117.792     1.5073  101.361  0.195367
  5   79   62.5172  -16.4828   271.683     4.3457   99.829  0.156293
  6   48   41.6784   -6.3216    39.963     0.9588   55.280  0.104196
  7   40   23.8160  -16.1840   261.922    10.9977   67.182  0.059540
 8+    0   20.4520   20.4520   418.284    20.4520    0.000  0.051130
     400  399.9998    0.0012               84.3388  484.339

So $\chi^2 = \sum \dfrac{(E - O)^2}{E} \approx 84.34$, or equivalently $\chi^2 = \sum \dfrac{O^2}{E} - n = 484.339 - 400 = 84.339$. Since there are 9 rows in the comparison and we have used the data to estimate 1 parameter, $df = 9 - 1 - 1 = 7$ and $\chi^{2(7)}_{.05} = 14.0671$. Since the computed $\chi^2$ is far above this value, we reject $H_0$.

(ii) $H_0$: Poisson(4.5). We use the Kolmogorov-Smirnov method, though the chi-squared method could also be used if you did not do part (i). The column $F_e$ is the cumulative distribution from the Poisson table with a mean of 4.5.

  x    O  Cumulative O       Fo       Fe      D
  0   23            23   .05750   .01111  .0464
  1   19            42   .10500   .06110  .0439
  2   42            84   .21000   .17358  .0364
  3   60           144   .36000   .34230  .0177
  4   89           233   .58250   .53210  .0504
  5   79           312   .78000   .70293  .0771
  6   48           360   .90000   .83105  .0690
  7   40           400  1.00000   .91341  .0866
 8+    0           400  1.00000  1.00000  .0000
     400

From the Kolmogorov-Smirnov table, the critical value for a 5% significance level is $\dfrac{1.36}{\sqrt{400}} = .0680$. Since the largest number in $D$ is above this value, we reject $H_0$.

Exam continues in 252z9943.
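The Kolmogorov-Smirnov comparison in part (ii) above can be sketched in a few lines. This is an illustration rather than the original working: it computes the Poisson(4.5) cumulative distribution directly instead of reading it from a table, and it uses the large-sample 5% critical value 1.36/sqrt(n) quoted in the solution. Variable names are illustrative.

```python
import math

observed = [23, 19, 42, 60, 89, 79, 48, 40]   # O for x = 0, 1, ..., 7
n = sum(observed)
MEAN_H0 = 4.5                                  # mean specified by the null hypothesis


def poisson_pmf(x, mean):
    """P(X = x) for a Poisson distribution with the given mean."""
    return math.exp(-mean) * mean ** x / math.factorial(x)


fo = 0.0          # observed cumulative relative frequency
fe = 0.0          # cumulative probability under H0
d_values = []
for x, o in enumerate(observed):
    fo += o / n
    fe += poisson_pmf(x, MEAN_H0)
    d_values.append(abs(fo - fe))

d_max = max(d_values)
critical = 1.36 / math.sqrt(n)   # 5% critical value as quoted in the solution
reject = d_max > critical

for x, d in enumerate(d_values):
    print(f"x = {x}: D = {d:.4f}")
print(f"max D = {d_max:.4f}, critical value = {critical:.4f}, reject H0: {reject}")
```

Only the largest gap between the two cumulative distributions matters, so the test needs no pooling of cells and no estimate of expected frequencies.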