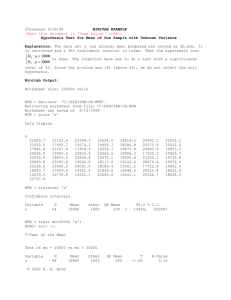

252y0581 1/4/06 KEY

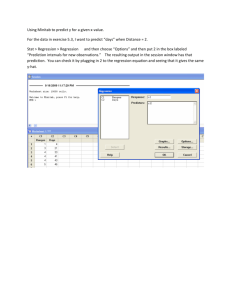
