Document 15930405

advertisement

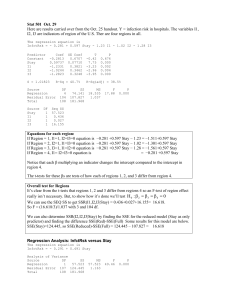

5/01/03 252y0342

Introduction

This is long, but that's because I gave a relatively thorough explanation of everything that I did. When I worked it out in the classroom, it was much, much shorter. The easiest sections were probably 1a, 2a, 2b, 2c, 7b, 7c, and 7d in Part I. There are some very easy sections in Part I if you just follow suggestions and look at p-values and R-squared. After doing the above, I might have computed the spare parts in 3b in Part II.

Spare Parts Computation:

$\bar{x} = \frac{\sum x}{n} = \frac{2922}{11} = 265.636$

$\bar{y} = \frac{\sum y}{n} = \frac{13028}{11} = 1184.36$

$SS_x = \sum x^2 - n\bar{x}^2 = 1019346 - 11(265.636)^2 = 243158.7$

$S_{xy} = \sum xy - n\bar{x}\bar{y} = 4419959 - 11(265.636)(1184.36) = 959263.8$

$SS_y = \sum y^2 - n\bar{y}^2 = 19891990 - 11(1184.36)^2 = 4462195.3$

And not recomputed them every time I needed them in Problems 4 and 5! Only then would I have tried the multiple regression.

Many of you seem to have no idea what a statistical test is. We have been doing them every day. The most common examples of this were in Part II.

Problem 1b: $\bar{p}_3 = \frac{8}{115} = .069565$ and $\bar{p}_2 = \frac{10}{103} = .09709$. Hey look! They're different! Whoopee! And you think that you will get credit for this? Whether these two proportions come from the same population or not, chances are they will be somewhat different. You need one of the three statistical tests shown in the solution to show that they are significantly different.

Problem 3c: Many of you started with $s_x^2 = \frac{\sum x^2 - n\bar{x}^2}{n-1} = \frac{1019346 - 11(265.636)^2}{10} = \frac{243158.7}{10} = 24315.87$ and $s = \sqrt{24315.87} = 155.93$. This is fine and you got some credit for knowing how to compute a sample variance, though you probably had already computed the numerator somewhere else in this exam. But then you told me that this wasn't 200. Where was your test?

5/06/03 252y0342

ECO252 QBA2
FINAL EXAM
May 7, 2003
Name: KEY
Hour of Class Registered (Circle)

I. (18 points) Do all the following. Note that answers without reasons receive no credit.
A researcher wishes to explain the selling price of a house in thousands on the basis of its assessed valuation, whether it was new and the time period. 'New' is 1 if the house is new construction, zero otherwise. The researcher assembles the following data for a random sample of 30 home sales. Use $\alpha = .10$ in this problem. That's why p-values above .10 mean that the null hypothesis of insignificance is not rejected.

    ---------- 4/25/2003 9:58:00 PM ----------
    Welcome to Minitab, press F1 for help.
    MTB > Retrieve "C:\Documents and Settings\RBOVE\My Documents\Drive D\MINITAB\2x03421.MTW".
    Retrieving worksheet from file: C:\Documents and Settings\RBOVE\My Documents\Drive D\MINITAB\2x0342-1.MTW
    # Worksheet was saved on Fri Apr 25 2003

    Results for: 2x0342-1.MTW

    MTB > print c1 - c4

    Data Display

    Row   Price   Value  New  Time
      1   69.00   66.28    0     1
      2  115.50   86.31    0     2
      3  100.80   84.78    1     2
      4   96.90   79.74    1     3
      5   72.00   65.54    0     4
      6   61.90   59.93    0     4
      7   97.00   79.98    1     4
      8   87.50   75.22    0     5
      9   96.90   81.88    1     5
     10   81.50   72.94    0     5
     11   69.34   60.80    0     6
     12   97.90   81.61    1     6
     13   96.00   79.11    0     7
     14   92.00   77.96    0     9
     15   94.10   78.17    1    10
     16  101.90   80.24    1    10
     17  109.50   85.88    1    10
     18   88.65   74.03    0    11
     19   93.00   75.27    0    11
     20   83.00   74.31    0    11
     21  106.70   84.36    0    12
     22   97.90   77.90    1    12
     23   97.30   79.85    1    12
     24   90.50   74.92    0    12
     25   95.90   79.07    1    12
     26  113.90   85.61    0    13
     27   94.50   76.50    1    14
     28   86.50   72.78    0    14
     29   91.50   72.43    0    17
     30   93.75   76.64    0    17

1. Looking for a place to start, the researcher does individual regressions of price against the individual independent variables.
a. Explain why the researcher concludes from the regressions that valuation ('value') is the most important independent variable. Consider the values of $R^2$ and the significance tests on the slope of the equation. (2)
b. What kind of variable is 'new'? Explain why the regression of 'price' against 'new' is equivalent to a test of the equality of 2 sample means, and what the conclusion would be.
(2)

Solution: a) Note that the p-values for the slopes in the first two regressions are below 10%, indicating a significant slope. The regression against 'Time,' however, shows a p-value above 10% for the slope, indicating that time alone is a poor explanatory variable. If we compare the regression against 'Value' with the regression against 'New,' we find a much higher R-sq for 'Value,' which indicates that this independent variable does a much better job of explaining 'Price' than 'New.'

b) 'New' is a dummy variable: it is one when something is true and zero when it is false. The equation Price = 88.5 + 9.93 New only gives us two values for 'Price.' If the house is not new, the predicted price is 88.5 (thousand), and if the house is new, using the values from the 'Coef' column, the predicted price is 88.458 + 9.926 = 98.4 (thousand). Remember that a regression predicts the average value of a dependent variable for a given value of the independent variable, so these are predicted means for old and new houses respectively, and the fact that the p-value is .031, below 10%, indicates that the difference is significant.

    MTB > regress c1 1 c2

    Regression Analysis: Price versus Value

    The regression equation is
    Price = - 44.2 + 1.78 Value

    Predictor      Coef   SE Coef       T      P
    Constant    -44.172     7.346   -6.01  0.000
    Value       1.78171   0.09546   18.66  0.000

    S = 3.475   R-Sq = 92.6%   R-Sq(adj) = 92.3%

The p-value below .10 shows us that the slope is significant.

    Analysis of Variance
    Source           DF      SS      MS       F      P
    Regression        1  4206.7  4206.7  348.37  0.000
    Residual Error   28   338.1    12.1
    Total            29  4544.8

    Unusual Observations
    Obs  Value   Price     Fit  SE Fit  Residual  St Resid
      6   59.9  61.900  62.606   1.719    -0.706    -0.23 X
     11   60.8  69.340  64.156   1.642     5.184     1.69 X

    X denotes an observation whose X value gives it large influence.
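The claim in 1b), that regressing 'Price' on the dummy 'New' simply reproduces the two group means, can be checked by hand. The following is a minimal Python sketch under stated assumptions, not the Minitab procedure used in the exam: the helper name `simple_ols` is hypothetical, and the lists are the Price, Value and New columns of the worksheet as printed above.

```python
from statistics import mean

# Price, Value and New columns of the 30-row worksheet.
price = [69.00, 115.50, 100.80, 96.90, 72.00, 61.90, 97.00, 87.50, 96.90, 81.50,
         69.34, 97.90, 96.00, 92.00, 94.10, 101.90, 109.50, 88.65, 93.00, 83.00,
         106.70, 97.90, 97.30, 90.50, 95.90, 113.90, 94.50, 86.50, 91.50, 93.75]
value = [66.28, 86.31, 84.78, 79.74, 65.54, 59.93, 79.98, 75.22, 81.88, 72.94,
         60.80, 81.61, 79.11, 77.96, 78.17, 80.24, 85.88, 74.03, 75.27, 74.31,
         84.36, 77.90, 79.85, 74.92, 79.07, 85.61, 76.50, 72.78, 72.43, 76.64]
new = [0, 0, 1, 1, 0, 0, 1, 0, 1, 0, 0, 1, 0, 0, 1,
       1, 1, 0, 0, 0, 0, 1, 1, 0, 1, 0, 1, 0, 0, 0]


def simple_ols(x, y):
    """Least-squares line y = b0 + b1*x; returns (b0, b1, r_squared)."""
    xbar, ybar = mean(x), mean(y)
    ss_x = sum((xi - xbar) ** 2 for xi in x)
    ss_y = sum((yi - ybar) ** 2 for yi in y)
    s_xy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    b1 = s_xy / ss_x
    b0 = ybar - b1 * xbar
    return b0, b1, s_xy ** 2 / (ss_x * ss_y)


b0_value, b1_value, r2_value = simple_ols(value, price)
b0_new, b1_new, r2_new = simple_ols(new, price)

# Group means of Price for old (New = 0) and new (New = 1) houses.
mean_old = mean(p for p, d in zip(price, new) if d == 0)
mean_new = mean(p for p, d in zip(price, new) if d == 1)

print(f"Price on Value: b0={b0_value:.3f}, b1={b1_value:.5f}, R-sq={r2_value:.3f}")
print(f"Price on New:   b0={b0_new:.3f}, b1={b1_new:.3f}, R-sq={r2_new:.3f}")
print(f"Mean old={mean_old:.3f}, mean new={mean_new:.3f}")
```

With a single 0/1 regressor the intercept is the mean of the old houses and the slope is the difference between the two means, which is why the t test on the slope plays the role of a two-sample test of means.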
    MTB > regress c1 1 c3

    Regression Analysis: Price versus New

    The regression equation is
    Price = 88.5 + 9.93 New

    Predictor     Coef   SE Coef      T      P
    Constant    88.458     2.759  32.07  0.000
    New          9.926     4.362   2.28  0.031

    S = 11.70   R-Sq = 15.6%   R-Sq(adj) = 12.6%

    Analysis of Variance
    Source           DF      SS     MS     F      P
    Regression        1   709.3  709.3  5.18  0.031
    Residual Error   28  3835.5  137.0
    Total            29  4544.8

    Unusual Observations
    Obs   New   Price    Fit  SE Fit  Residual  St Resid
      2  0.00  115.50  88.46    2.76     27.04     2.38R
      6  0.00   61.90  88.46    2.76    -26.56    -2.33R
     26  0.00  113.90  88.46    2.76     25.44     2.24R

    R denotes an observation with a large standardized residual

    MTB > regress c1 1 c4

    Regression Analysis: Price versus Time

    The regression equation is
    Price = 86.4 + 0.698 Time

    Predictor     Coef   SE Coef      T      P
    Constant    86.355     4.942  17.47  0.000
    Time        0.6980    0.5057   1.38  0.178

    S = 12.33   R-Sq = 6.4%   R-Sq(adj) = 3.0%

The p-value above .10 shows us that the slope is insignificant.

    Analysis of Variance
    Source           DF      SS     MS     F      P
    Regression        1   289.6  289.6  1.91  0.178
    Residual Error   28  4255.2  152.0
    Total            29  4544.8

    Unusual Observations
    Obs  Time   Price    Fit  SE Fit  Residual  St Resid
      2   2.0  115.50  87.75    4.07     27.75     2.38R
      6   4.0   61.90  89.15    3.27    -27.25    -2.29R

    R denotes an observation with a large standardized residual

    MTB > regress c1 2 c2 c4;
    SUBC> dw;
    SUBC> vif.

2. The researcher now adds time. Compare this regression with the regression with Value alone. Are the coefficients significant? Does this explain the variation in Y better than the regression with value alone? What would the predicted selling price be for an old house with a valuation of 80 in time 17? (3)

Solution: a) This is working out beautifully. R-sq rose, as it almost always does. R-sq adjusted went up, which is better news. The low p-value associated with the ANOVA indicates a generally successful regression. The low p-value (.008 is less than 1%) on the coefficient of 'Time' indicates that the coefficient is highly significant.
Both other coefficients are even more significant, as shown by their low p-values.

b) The regression equation is Price = - 45.0 + 1.75 Value + 0.368 Time, so, for the values given above, Price = - 45.0 + 1.75(80) + 0.368(17) = -45.0 + 140 + 6.3 = 101.3 (thousand).

    Regression Analysis: Price versus Value, Time

    The regression equation is
    Price = - 45.0 + 1.75 Value + 0.368 Time

    Predictor      Coef   SE Coef      T      P  VIF
    Constant    -44.988     6.553  -6.87  0.000
    Value       1.75060   0.08576  20.41  0.000  1.0
    Time         0.3680    0.1281   2.87  0.008  1.0

    S = 3.097   R-Sq = 94.3%   R-Sq(adj) = 93.9%

    Analysis of Variance
    Source           DF      SS      MS       F      P
    Regression        2  4285.8  2142.9  223.46  0.000
    Residual Error   27   258.9     9.6
    Total            29  4544.8

    Source  DF  Seq SS
    Value    1  4206.7
    Time     1    79.2

    Unusual Observations
    Obs  Value    Price      Fit  SE Fit  Residual  St Resid
      2   86.3  115.500  106.842   1.385     8.658     3.13R
     11   60.8   69.340   63.656   1.474     5.684     2.09R
     20   74.3   83.000   89.146   0.680    -6.146    -2.03R

    R denotes an observation with a large standardized residual

    Durbin-Watson statistic = 2.73

3. The researcher now adds the variable 'new.' Remember that there is nothing wrong with a negative coefficient unless there is some reason why it should not be negative.
a. What two reasons would I find to doubt that this regression is an improvement on the regression with just value and time by just looking at the t tests and the sign of the coefficients? What does the change in $R^2$ adjusted tell me about this regression? (3)
b. We have done 5 ANOVAs so far. What was the null hypothesis in these ANOVAs and what does the one where the null hypothesis was accepted tell us? (2)
c. What selling price does this equation predict for an old home with a valuation of 80 in time 17? What percentage difference is this from the selling price predicted in the regression with just time and value? (2)
d. The last two regressions have a Durbin-Watson statistic computed. What did this test for, what should our conclusion be, and why is it important? (3)
e.
The column marked VIF (variance inflation factor) is a test for (multi)collinearity. The rule of thumb is that if any of these exceeds 5, we have a multicollinearity problem. None does. What is multicollinearity and why am I worried about it? (2)
f. Do an F test to show whether the regression with 'value', 'time' and 'new' is an improvement over the regression with 'value' alone. (3)

Solution: a) Note that the p-value of the coefficient of 'New' is well above 10%, indicating that the coefficient is not significant. The negative sign on 'New' may also give us problems, since we usually expect new construction to be more expensive. It is possible, however, that the assessors are systematically giving higher valuations to newer housing. R-sq did go up, but it almost always goes up. R-sq adjusted fell, indicating that the explanation is not really better.

b) The null hypothesis in each ANOVA is that there is no linear relation between the independent variables and the dependent variable. All but one of the five ANOVAs gave us low p-values, so that hypothesis was rejected. The high p-value on the regression against 'Time' alone indicates that this variable alone is no better than the average value of 'Price' in predicting 'Price.'

c) Remember that we previously predicted that a home with a valuation of 80 (thousand) in time 17 would have Price = 101.3 (thousand). Our new equation gives Price = - 47.7 + 1.79 Value + 0.351 Time - 1.22 New = - 47.7 + 1.79(80) + 0.351(17) - 1.22(0) = -47.7 + 143.2 + 6.0 = 101.5 (thousand), a change in price of about 0.2%.

d) The Durbin-Watson statistic tests for a pattern (first-order autocorrelation) in the residuals. If there is autocorrelation, we could make a better prediction by including time patterns of price variation. In this case $n = 30$ and $k = 3$, giving us, on the 5% table in the text, $d_L = 1.21$ and $d_U = 1.65$, so that $4 - d_U = 2.35$ and $4 - d_L = 2.79$, while the regression printout says "Durbin-Watson statistic = 2.60". The diagram in the outline reads

    0 --(+)-- d_L --(?)-- d_U --(0)-- 2 --(0)-- 4-d_U --(?)-- 4-d_L --(-)-- 4

where + marks significant positive autocorrelation, ? an inconclusive zone, 0 no significant autocorrelation and - significant negative autocorrelation. Since the value given by Minitab is between $4 - d_U$ and $4 - d_L$, it falls in an inconclusive zone, so we cannot say that we have significant autocorrelation. In the previous regression, the D-W statistic was 2.73, $n = 30$ and $k = 2$, giving us, on the 5% table in the text, $d_L = 1.28$ and $d_U = 1.57$, so that $4 - d_L = 2.72$. The statistic is right at the edge of the negative autocorrelation zone, so that result is borderline at best.

e) Multicollinearity is close correlation between the independent variables; it makes accurate values of the coefficients hard to get.

f) The current regression has the ANOVA:

    Source           DF      SS      MS       F      P
    Regression        3  4294.0  1431.3  148.42  0.000
    Residual Error   26   250.7     9.6
    Total            29  4544.8

    Source  DF  Seq SS
    Value    1  4206.7
    Time     1    79.2
    New      1     8.2

The regression against 'Value' alone gave:

    Source           DF      SS      MS       F      P
    Regression        1  4206.7  4206.7  348.37  0.000
    Residual Error   28   338.1    12.1
    Total            29  4544.8

We can itemize the regression sum of squares in the current regression by using either the sequential sums of squares in the current regression or the regression sum of squares in the 'Value' alone regression.

    Source             DF      SS      MS       F
    Value               1  4206.7  4206.7  438.20
    2 more variables    2    87.4    43.7    4.55
    Residual Error     26   250.7     9.6
    Total              29  4544.8

The appropriate F test tests 4.55 against $F_{.05}^{(2,26)} = 3.37$ from the F table. Since our computed F is larger than the table F, we reject the null hypothesis that the two new independent variables have no explanatory value.

    MTB > regress c1 3 c2 c4 c3;
    SUBC> dw;
    SUBC> vif.
    Regression Analysis: Price versus Value, Time, New

    The regression equation is
    Price = - 47.7 + 1.79 Value + 0.351 Time - 1.22 New

    Predictor      Coef   SE Coef      T      P  VIF
    Constant    -47.675     7.190  -6.63  0.000
    Value       1.79394   0.09804  18.30  0.000  1.3
    Time         0.3508    0.1298   2.70  0.012  1.0
    New          -1.218     1.322  -0.92  0.366  1.3

    S = 3.105   R-Sq = 94.5%   R-Sq(adj) = 93.8%

    Analysis of Variance
    Source           DF      SS      MS       F      P
    Regression        3  4294.0  1431.3  148.42  0.000
    Residual Error   26   250.7     9.6
    Total            29  4544.8

    Source  DF  Seq SS
    Value    1  4206.7
    Time     1    79.2
    New      1     8.2

    Unusual Observations
    Obs  Value    Price      Fit  SE Fit  Residual  St Resid
      2   86.3  115.500  107.862   1.777     7.638     3.00R
     11   60.8   69.340   63.502   1.487     5.838     2.14R
     20   74.3   83.000   89.492   0.778    -6.492    -2.16R

    R denotes an observation with a large standardized residual

    Durbin-Watson statistic = 2.60

II. Do at least 4 of the following 7 Problems (at least 15 each) (or do sections adding to at least 60 points. Anything extra you do helps, and grades wrap around). Show your work! State $H_0$ and $H_1$ where applicable. Use a significance level of 5% unless noted otherwise. Do not answer questions without citing appropriate statistical tests. Remember: 1) Data must be in order for Lilliefors. Make sure that you do not cross up x and y in regressions.

1. (Berenson et. al. 1220) A firm believes that less than 15% of people remember their ads. A survey is taken to see what recall occurs with the following results (in these problems calculating proportions won't help you unless you do a statistical test):

    Medium       Mag   TV  Radio  Total
    Remembered    25   10      8     43
    Forgot        73   93    107    273
    Total         98  103    115    316

a. Test the hypothesis that the recall rate is less than 15% by using proportions calculated from the 'Total' column. Find a p-value for this result. (5)
b. Test the hypothesis that the proportion recalling was lower for Radio than TV. (4)
c. Test to see if there is a significant difference in the proportion that remembered according to the medium. (6)
d.
The Marascuilo procedure says that if (i) equality is rejected in c) and (ii) $|\bar{p}_2 - \bar{p}_3| > \sqrt{\chi^2}\, s_{\Delta p}$, where the chi-squared is what you used in c) and the standard deviation is what you would use in a confidence interval solution to b), you can say that you have a significant difference between TV and Radio. Try it! (5)

Solution: I have never seen so many people lose their common sense as did on this problem. Many of you seemed to think that the answer to c) was the answer to a) in spite of the fact that .15 appeared nowhere in your answer. An A student tried at one point to compare the total fraction that forgot with the total fraction that remembered, even though the method she used was intended to compare fractions of two different groups and the two fractions she compared could only have been the same if they were both .5, since they had to add to one.

a) From the formula table:

    Interval for:         Proportion
    Confidence interval:  $p = \bar{p} \pm z_{\alpha/2}\, s_p$, with $s_p = \sqrt{\bar{p}\bar{q}/n}$ and $\bar{q} = 1 - \bar{p}$
    Hypotheses:           $H_0: p = p_0$, $H_1: p \ne p_0$
    Test ratio:           $z = (\bar{p} - p_0)/\sigma_p$, with $\sigma_p = \sqrt{p_0 q_0/n}$ and $q_0 = 1 - p_0$
    Critical value:       $p_{cv} = p_0 \pm z_{\alpha/2}\, \sigma_p$

$H_1: p < .15$. It is an alternate hypothesis because it does not contain an equality. The null hypothesis is thus $H_0: p \ge .15$. Initially, assume $\alpha = .05$ and note that $n = 316$ and $x = 43$, so that $\bar{p} = \frac{x}{n} = \frac{43}{316} = .1361$ and $\sigma_p = \sqrt{\frac{p_0 q_0}{n}} = \sqrt{\frac{.15(.85)}{316}} = \sqrt{.0004035} = .02009$. This is a one-sided test and $z_\alpha = z_{.05} = 1.645$. This problem can be done in one of three ways.

(i) The test ratio is $z = \frac{\bar{p} - p_0}{\sigma_p} = \frac{.1361 - .15}{.02009} = -0.692$. Make a diagram of a normal curve with a mean at zero and a 'reject' zone below $-z_\alpha = -z_{.05} = -1.645$. Since $z = -0.692$ is not in the 'reject' zone, do not reject $H_0$. We cannot say that the proportion who recall is significantly below 15%. We can use this to get a p-value. Since our alternate hypothesis is $H_1: p < .15$, we want a down-side value, i.e. $P(\bar{p} \le .1361) = P\left(z \le \frac{.1361 - .15}{.02009}\right) = P(z \le -0.69) = P(z \le 0) - P(-0.69 \le z \le 0) = .5 - .2549 = .2451$. Since the p-value is above the significance level, do not reject $H_0$. Make a diagram. Draw a Normal curve with a mean at .15 and represent the p-value by the area below .1361, or draw a Normal curve with a mean at zero and represent the p-value by the area below -0.69.

(ii) Since the alternative hypothesis says $H_1: p < .15$, we need a critical value that is below .15. We use $p_{cv} = p_0 - z_\alpha \sigma_p = .15 - 1.645(.02009) = .1170$. Make a diagram of a normal curve with a mean at .15 and a 'reject' zone below .1170. Since $\bar{p} = .1361$ is not in the 'reject' zone, do not reject $H_0$. We cannot say that the proportion is significantly below 15%.

(iii) To do a confidence interval we need $s_p = \sqrt{\frac{\bar{p}\bar{q}}{n}} = \sqrt{\frac{.1361(.8639)}{316}} = \sqrt{.000372} = .01928$. To make the 2-sided confidence interval, $p = \bar{p} \pm z_{\alpha/2}\, s_p$, into a 1-sided interval, go in the same direction as $H_1: p < .15$. We get $p \le \bar{p} + z_\alpha s_p = .1361 + 1.645(.01928) = .1678$. The interval $p \le .1678$ does not contradict the null hypothesis.

b) We are comparing $\bar{p}_3 = \frac{8}{115} = .069565$, $n_3 = 115$ and $\bar{p}_2 = \frac{10}{103} = .09709$, $n_2 = 103$. From the formula table:

    Interval for:         Difference between proportions, $\Delta p = p_1 - p_2$
    Confidence interval:  $\Delta p = \Delta\bar{p} \pm z_{\alpha/2}\, s_{\Delta p}$, with $\Delta\bar{p} = \bar{p}_1 - \bar{p}_2$, $s_{\Delta p} = \sqrt{\frac{\bar{p}_1\bar{q}_1}{n_1} + \frac{\bar{p}_2\bar{q}_2}{n_2}}$ and $\bar{q} = 1 - \bar{p}$
    Hypotheses:           $H_0: \Delta p = \Delta p_0$, $H_1: \Delta p \ne \Delta p_0$, with $\Delta p_0 = p_{01} - p_{02}$ or $\Delta p_0 = 0$
    Test ratio:           $z = (\Delta\bar{p} - \Delta p_0)/\sigma_{\Delta p}$
                          If $\Delta p_0 \ne 0$: $\sigma_{\Delta p} = \sqrt{\frac{p_{01}q_{01}}{n_1} + \frac{p_{02}q_{02}}{n_2}}$, or use $s_{\Delta p}$
                          If $\Delta p_0 = 0$: $\sigma_{\Delta p} = \sqrt{\bar{p}_0\bar{q}_0\left(\frac{1}{n_1} + \frac{1}{n_2}\right)}$, with $\bar{p}_0 = \frac{n_1\bar{p}_1 + n_2\bar{p}_2}{n_1 + n_2}$
    Critical value:       $\Delta p_{cv} = \Delta p_0 \pm z_{\alpha/2}\, \sigma_{\Delta p}$

$s_{\Delta p} = \sqrt{\frac{\bar{p}_3\bar{q}_3}{n_3} + \frac{\bar{p}_2\bar{q}_2}{n_2}} = \sqrt{\frac{.069565(.930435)}{115} + \frac{.09709(.90291)}{103}} = \sqrt{.00056283 + .00085108} = \sqrt{.0014139} = .037602$

$\Delta\bar{p} = \bar{p}_3 - \bar{p}_2 = -.02752$, $\bar{p}_0 = \frac{n_3\bar{p}_3 + n_2\bar{p}_2}{n_3 + n_2} = \frac{115(.069565) + 103(.09709)}{115 + 103} = \frac{8 + 10}{115 + 103} = .08257$, $\alpha = .05$, $z_\alpha = z_{.05} = 1.645$. Note that $\bar{q} = 1 - \bar{p}$ and that $\bar{q}$ and $\bar{p}$ are between 0 and 1.

$\sigma_{\Delta p} = \sqrt{\bar{p}_0\bar{q}_0\left(\frac{1}{n_3} + \frac{1}{n_2}\right)} = \sqrt{.08257(.91743)\left(\frac{1}{115} + \frac{1}{103}\right)} = \sqrt{.00139415} = .037338$

Our hypotheses are $H_0: p_3 \ge p_2$ and $H_1: p_3 < p_2$, or $H_0: p_3 - p_2 \ge 0$ and $H_1: p_3 - p_2 < 0$, or $H_0: \Delta p \ge 0$ and $H_1: \Delta p < 0$. There are three ways to do this problem. Only one is needed.

(i) Test Ratio: $z = \frac{\Delta\bar{p} - \Delta p_0}{\sigma_{\Delta p}} = \frac{-.02752 - 0}{.037338} = -0.7371$. Make a diagram showing a 'reject' region below -1.645. Since -0.7371 is above this value, do not reject $H_0$.
(ii) Critical Value: $\Delta p_{cv} = \Delta p_0 \pm z_{\alpha/2}\, \sigma_{\Delta p}$ becomes $\Delta p_{cv} = \Delta p_0 - z_\alpha \sigma_{\Delta p} = 0 - 1.645(.037338) = -.061421$. Make a diagram showing a 'reject' region below -0.06142. Since $\Delta\bar{p} = -.02752$ is not below this value, do not reject $H_0$.

(iii) Confidence Interval: $\Delta p = \Delta\bar{p} \pm z_{\alpha/2}\, s_{\Delta p}$ becomes $\Delta p \le \Delta\bar{p} + z_\alpha s_{\Delta p} = -.02752 + 1.645(.037602) = .03434$. Since $\Delta p \le .03434$ does not contradict $\Delta p \ge 0$, do not reject $H_0$.

c) $DF = (r-1)(c-1) = (1)(2) = 2$, $\chi^{2(2)}_{.05} = 5.9915$. $H_0$: Homogeneous, or $p_1 = p_2 = p_3$. $H_1$: Not homogeneous, i.e. not all ps are equal.

    O        Mag   TV  Radio  Total     p_r
    Remem     25   10      8     43  .13608
    Forgot    73   93    107    273  .86392
    Total     98  103    115    316  1.0000

    E            Mag        TV     Radio    Total      p_r
    Remem    13.3358   14.0162   15.6492   43.000   .13608
    Forgot   84.6642   88.9838   99.3508  273.000   .86392
    Total    98.0000   103.000   115.000  316.000  1.00000

The proportions in rows, $p_r$, are used with column totals to get the items in E. Note that row and column sums in E are the same as in O. (Note that $\chi^2 = 17.4689 = 333.469 - 316$ is computed two different ways here; only one way is needed.)

    Row      O         E       E - O   (E - O)^2   (E - O)^2/E     O^2/E
    1       25   13.3358    -11.6642     136.053       10.2020    46.866
    2       73   84.6642     11.6642     136.053        1.6070    62.943
    3       10   14.0162      4.0162      16.130        1.1508     7.135
    4       93   88.9838     -4.0162      16.130        0.1813    97.198
    5        8   15.6492      7.6492      58.510        3.7389     4.090
    6      107   99.3508     -7.6492      58.510        0.5889   115.238
    Total  316   316.000      0.0000                   17.4689   333.469

Since the $\chi^2$ computed here is greater than the $\chi^2$ from the table, we reject $H_0$.

d) The Marascuilo procedure says that if (i) equality is rejected in c) and (ii) $|\bar{p}_2 - \bar{p}_3| > \sqrt{\chi^2}\, s_{\Delta p}$, where the chi-squared is what you used in c) and the standard deviation is what you would use in a confidence interval solution to b), you can say that you have a significant difference between TV and Radio. OK. We already have $DF = (r-1)(c-1) = (1)(2) = 2$, $\chi^{2(2)}_{.05} = 5.9915$,

$s_{\Delta p} = \sqrt{\frac{\bar{p}_3\bar{q}_3}{n_3} + \frac{\bar{p}_2\bar{q}_2}{n_2}} = \sqrt{\frac{.069565(.930435)}{115} + \frac{.09709(.90291)}{103}} = \sqrt{.00056283 + .00085108} = \sqrt{.0014139} = .037602$

and $\Delta\bar{p} = \bar{p}_3 - \bar{p}_2 = -.02752$. I guess we really should use $\sqrt{\chi^{2(2)}_{.025}} = \sqrt{7.3778} = 2.7162$ and $\sqrt{\chi^2}\, s_{\Delta p} = 2.7162(.037602) = .10213$.
Since $|\bar{p}_2 - \bar{p}_3| = .02752$ is obviously smaller than this, we do not have a significant difference in these 2 proportions.

2. (Berenson et. al. 1142) A manager is inspecting a new type of battery. These are subjected to 4 different pressure levels and their time to failure is recorded. The manager knows from experience that such data is not normally distributed. Ranks are provided.

                          PRESSURE
    Use    low  rank  normal  rank  high  rank  whee!  rank
    1      8.2    11     7.9     9   6.2     4    5.3     1
    2      8.3    12     8.4    13   6.5     5    5.8     2
    3      9.4    15    10.0    17   7.3     7    6.1     3
    4      9.6    16    11.1    18   7.8     8    6.9     6
    5     11.9    19    12.5    20   9.1    14    8.0    10

a. At the 5% level analyze the data on the assumption that each column represents a random sample. Do the column medians differ? (5)
b. Rerank the data appropriately and repeat a) on the assumption that the data is non-normal but cross classified by use. (5)
c. This time I want to compare high pressure (H) against low - moderate pressure (L). I will write out the numbers 1-20 and label them according to pressure.

    1  2  3  4  5  6  7  8  9  10  11  12  13  14  15  16  17  18  19  20
    H  H  H  H  H  H  H  H  L  H   L   L   L   H   L   L   L   L   L   L

Do a runs test to see if the H's and L's appear randomly. This is called a Wald-Wolfowitz test for the equality of means in two nonnormal samples. The null hypothesis is that the sequence is random and the means are equal. What is your conclusion? (5)

Solution: a) This is a Kruskal-Wallis test, equivalent to one-way ANOVA when the underlying distribution is non-normal. $H_0$: Columns come from the same distribution or medians are equal. I am basically copying the outline. There are $n = 20$ data items, so rank them from 1 to 20. Let $n_i$ be the number of items in column $i$ and $SR_i$ be the rank sum of column $i$.

    Use     low  rank  normal  rank  high  rank  whee!  rank
    1       8.2    11     7.9     9   6.2     4    5.3     1
    2       8.3    12     8.4    13   6.5     5    5.8     2
    3       9.4    15    10.0    17   7.3     7    6.1     3
    4       9.6    16    11.1    18   7.8     8    6.9     6
    5      11.9    19    12.5    20   9.1    14    8.0    10
    SR_i           73            77          38           22

To check the ranking, note that the sum of the four rank sums is 73 + 77 + 38 + 22 = 210, and that the sum of the first $n$ numbers is $\frac{n(n+1)}{2} = \frac{20(21)}{2} = 210$.

Now, compute the Kruskal-Wallis statistic

$H = \frac{12}{n(n+1)}\sum_i \frac{SR_i^2}{n_i} - 3(n+1) = \frac{12}{20(21)}\left[\frac{73^2}{5} + \frac{77^2}{5} + \frac{38^2}{5} + \frac{22^2}{5}\right] - 3(21) = \frac{12}{420}\cdot\frac{1}{5}(5329 + 5929 + 1444 + 484) - 63 = 12.3486$.

If the size of the problem is larger than those shown in Table 9, use the $\chi^2$ distribution, with $df = m - 1 = 3$, where $m$ is the number of columns. Compare $H$ with $\chi^{2(3)}_{.05} = 7.81475$. Since $H$ is larger than $\chi^2_{.05}$, reject the null hypothesis.

b) This is a Friedman test, equivalent to two-way ANOVA with one observation per cell when the underlying distribution is non-normal. $H_0$: Columns come from the same distribution or medians are equal. Note that the only difference between this and the Kruskal-Wallis test is that the data is cross-classified in the Friedman test.

    Use     low  rank  normal  rank  high  rank  whee!  rank
    1       8.2     4     7.9     3   6.2     2    5.3     1
    2       8.3     3     8.4     4   6.5     2    5.8     1
    3       9.4     3    10.0     4   7.3     2    6.1     1
    4       9.6     3    11.1     4   7.8     2    6.9     1
    5      11.9     3    12.5     4   9.1     2    8.0     1
    SR_i           16            19          10            5

Assume that $\alpha = .05$. In the data, the pressures are represented by $c = 4$ columns, and the uses by $r = 5$ rows. In each row the numbers are ranked from 1 to $c = 4$. For each column, compute $SR_i$, the rank sum of column $i$. To check the ranking, note that the sum of the four rank sums is 16 + 19 + 10 + 5 = 50, and that the sum of the $c$ numbers in a row is $\frac{c(c+1)}{2}$. However, there are $r$ rows, so we must multiply the expression by $r$. So we have $\sum SR_i = \frac{rc(c+1)}{2} = \frac{5(4)(5)}{2} = 50$.

Now compute the Friedman statistic

$\chi_F^2 = \frac{12}{rc(c+1)}\sum_i SR_i^2 - 3r(c+1) = \frac{12}{5(4)(5)}\left(16^2 + 19^2 + 10^2 + 5^2\right) - 3(5)(5) = \frac{12}{100}(256 + 361 + 100 + 25) - 75 = 14.04$.

Since the size of the problem is larger than those shown in Table 10, use the $\chi^2$ distribution, with $df = c - 1$, where $c$ is the number of columns. Again, $\chi^{2(3)}_{.05} = 7.81475$. Since our statistic is larger than $\chi^2_{.05}$, reject the null hypothesis.

c)
This time I want to compare high pressure (H) against low - moderate pressure (L). I will write out the numbers 1-20 and label them according to pressure.

    1  2  3  4  5  6  7  8  9  10  11  12  13  14  15  16  17  18  19  20
    H  H  H  H  H  H  H  H  L  H   L   L   L   H   L   L   L   L   L   L

If we do a runs test, $n = 20$, $r = 6$, $n_1 = 10$ and $n_2 = 10$. Can you see why $r = 6$? I have separated the runs to help you: HHHHHHHH | L | H | LLL | H | LLLLLL. The runs test table gives us critical values of 6 and 16. The directions on the table say to reject randomness if $r \le 6$ or $r \ge 16$. Since $r = 6$, we reject the null hypothesis: the sequence is not random, so we conclude that the means are not equal.

3. A researcher studies the relationship of numbers of subsidiaries and numbers of parent companies in 11 metropolitan areas and finds the following:

            parents  subsidiaries
    Area       x          y          x^2         xy         y^2
    1         658       2602       432964    1712116     6770404
    2         396       1709       156816     676764     2920681
    3         357       1852       127449     661164     3429904
    4         266       1223        70756     325318     1495729
    5         231        875        53361     202125      765625
    6         223        666        49729     148518      443556
    7         207       1519        42849     314433     2307361
    8         156        884        24336     137904      781456
    9         146        477        21316      69642      227529
    10        143        564        20449      80652      318096
    11        139        657        19321      91323      431649
    Total    2922      13028      1019346    4419959    19891990

a. Do Spearman's rank correlation between x and y and test it for significance (6)
b. Compute the sample correlation between x and y and test it for significance (6)
c. Compute the sample standard deviation of x and test to see if it equals 200 (4)

Solution: a) First rank x and y into $r_x$ and $r_y$ respectively. (As usual, people tried to compute rank correlations without ranking. I warned you!) Compute $d = r_x - r_y$ and $d^2$.

            parents  subsidiaries
    Area       x          y        r_x   r_y   d = r_x - r_y   d^2
    1         658       2602        11    11         0           0
    2         396       1709        10     9         1           1
    3         357       1852         9    10        -1           1
    4         266       1223         8     7         1           1
    5         231        875         7     5         2           4
    6         223        666         6     4         2           4
    7         207       1519         5     8        -3           9
    8         156        884         4     6        -2           4
    9         146        477         3     1         2           4
    10        143        564         2     2         0           0
    11        139        657         1     3        -2           4
    Total                           66    66         0          32

$\sum d = 0$ is a check on the correctness of the ranking.
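The ranking table above can be reproduced mechanically. This is a minimal Python sketch, assuming the usual rank-correlation formula $r_s = 1 - \frac{6\sum d^2}{n(n^2-1)}$ and no tied values (true for this data set); the helper name `ranks` is hypothetical.

```python
# Parents (x) and subsidiaries (y) for the 11 metropolitan areas.
x = [658, 396, 357, 266, 231, 223, 207, 156, 146, 143, 139]
y = [2602, 1709, 1852, 1223, 875, 666, 1519, 884, 477, 564, 657]


def ranks(values):
    """Rank from 1 (smallest) to n (largest); assumes there are no ties."""
    ordered = sorted(values)
    return [ordered.index(v) + 1 for v in values]


rx, ry = ranks(x), ranks(y)
d = [a - b for a, b in zip(rx, ry)]   # rank differences, should sum to zero
sum_d2 = sum(di ** 2 for di in d)
n = len(x)
r_s = 1 - 6 * sum_d2 / (n * (n ** 2 - 1))

print("sum of d   =", sum(d))
print("sum of d^2 =", sum_d2)
print(f"r_s        = {r_s:.4f}")
```

The value of `r_s` still has to be compared with the rank correlation table for $n = 11$; the sketch only does the arithmetic.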
From the outline: $r_s = 1 - \frac{6\sum d^2}{n(n^2 - 1)} = 1 - \frac{6(32)}{11(11^2 - 1)} = 1 - \frac{192}{11(121 - 1)} = 1 - 0.1455 = 0.8545$.

The $n = 11$ line from the rank correlation table has

    n    alpha = .050    .025    .010    .005
    11          .5273   .6091   .7000   .7818

If you tested $H_0: \rho_s \le 0$ against $H_1: \rho_s > 0$ at the 5% level, reject the null hypothesis of no relationship if $r_s$ is above .5273, or, if you tested $H_0: \rho_s = 0$ against $H_1: \rho_s \ne 0$ at the 5% level, reject the null hypothesis if $r_s$ is above .6091. So, in this case we have a significant rank correlation.

b) We need the usual spare parts. From above $n = 11$, $\sum x = 2922$, $\sum y = 13028$, $\sum x^2 = 1019346$, $\sum xy = 4419959$ and $\sum y^2 = 19891990$. (In spite of the fact that most column computations were done for you, many of you wasted time and energy doing them over again.)

Spare Parts Computation:

$\bar{x} = \frac{\sum x}{n} = \frac{2922}{11} = 265.636$, $\bar{y} = \frac{\sum y}{n} = \frac{13028}{11} = 1184.36$

$SS_x = \sum x^2 - n\bar{x}^2 = 1019346 - 11(265.636)^2 = 243158.7$

$S_{xy} = \sum xy - n\bar{x}\bar{y} = 4419959 - 11(265.636)(1184.36) = 959263.8$

$SS_y = \sum y^2 - n\bar{y}^2 = 19891990 - 11(1184.36)^2 = 4462195.3$

The simple sample correlation coefficient is $r = \frac{\sum XY - n\bar{X}\bar{Y}}{\sqrt{\left(\sum X^2 - n\bar{X}^2\right)\left(\sum Y^2 - n\bar{Y}^2\right)}}$, the square root of

$R^2 = \frac{\left(\sum XY - n\bar{X}\bar{Y}\right)^2}{\left(\sum X^2 - n\bar{X}^2\right)\left(\sum Y^2 - n\bar{Y}^2\right)} = \frac{S_{xy}^2}{SS_x\, SS_y} = \frac{(959263.8)^2}{(243158.7)(4462195.3)} = .8480828$,

so $r = \sqrt{.8480828} = .9209$. From the outline, if we want to test $H_0: \rho_{xy} = 0$ against $H_1: \rho_{xy} \ne 0$ and x and y are normally distributed, we use

$t^{(n-2)} = \frac{r}{\sqrt{\frac{1 - r^2}{n - 2}}} = \frac{.9209}{\sqrt{\frac{1 - .8481}{11 - 2}}} = \frac{.9209}{\sqrt{.01688}} = \frac{.9209}{.12993} = 7.088$.

Since $t^{(9)}_{.025} = 2.262$, we reject $H_0$. Note that R-squared is always between 0 and 1 and that correlations are always between -1 and +1.

c) From the formula table (but the outline is better):

    Interval for:         Variance, small sample
    Confidence interval:  $\sigma^2 = \frac{(n-1)s^2}{\chi^2_{.5 \mp .5\alpha}}$
    Hypotheses:           $H_0: \sigma^2 = \sigma_0^2$, $H_1: \sigma^2 \ne \sigma_0^2$
    Test ratio:           $\chi^2 = \frac{(n-1)s^2}{\sigma_0^2}$
    Critical value:       $s^2_{cv} = \frac{\chi^2_{.5 \pm .5\alpha}\, \sigma_0^2}{n-1}$

    Interval for:         Variance, large sample
    Confidence interval:  $\sigma = \frac{s\sqrt{2DF}}{\pm z_{\alpha/2} + \sqrt{2DF}}$
    Hypotheses:           $H_0: \sigma^2 = \sigma_0^2$, $H_1: \sigma^2 \ne \sigma_0^2$
    Test ratio:           $z = \sqrt{2\chi^2} - \sqrt{2DF - 1}$

We already know $n = 11$ and $SS_x = \sum x^2 - n\bar{x}^2 = 1019346 - 11(265.636)^2 = 243158.7$. Assume $\alpha = .05$.

$s_x^2 = \frac{\sum x^2 - n\bar{x}^2}{n - 1} = \frac{SS_x}{n - 1} = \frac{243158.7}{10} = 24315.87$, so $s = \sqrt{24315.87} = 155.93$.

Our hypotheses are $H_0: \sigma = 200$ and $H_1: \sigma \ne 200$. Only the test ratio method is normally used. Then $\chi^2 = \frac{(n-1)s^2}{\sigma_0^2} = \frac{10(24315.87)}{200^2} = 6.0790$, with $DF = n - 1 = 10$, $\chi^{2(10)}_{.025} = 20.4832$ and $\chi^{2(10)}_{.975} = 3.2470$. Make a diagram. Show a curve with a mean at 10 and rejection zones (shaded) above $\chi^{2(10)}_{.025} = 20.4832$ and below $\chi^{2(10)}_{.975} = 3.2470$. Since your value of $\chi^2$ is between the two critical values, do not reject $H_0$.

4. Data from the previous page is repeated:

            parents  subsidiaries
    Area       x          y          x^2         xy         y^2
    1         658       2602       432964    1712116     6770404
    2         396       1709       156816     676764     2920681
    3         357       1852       127449     661164     3429904
    4         266       1223        70756     325318     1495729
    5         231        875        53361     202125      765625
    6         223        666        49729     148518      443556
    7         207       1519        42849     314433     2307361
    8         156        884        24336     137904      781456
    9         146        477        21316      69642      227529
    10        143        564        20449      80652      318096
    11        139        657        19321      91323      431649
    Total    2922      13028      1019346    4419959    19891990

a. Test the hypothesis that the correlation between x and y is .7 (5)
b. Test the hypothesis that x has the Normal distribution. (9)
c. Test the hypothesis that x and y have equal variances. (4)

Solution: a) From the previous page we know $n = 11$ and

$R^2 = \frac{\left(\sum XY - n\bar{X}\bar{Y}\right)^2}{\left(\sum X^2 - n\bar{X}^2\right)\left(\sum Y^2 - n\bar{Y}^2\right)} = \frac{S_{xy}^2}{SS_x\, SS_y} = \frac{(959263.8)^2}{(243158.7)(4462195.3)} = .8480828$, so $r = \sqrt{.8480828} = .9209$.

From the Correlation section of the outline: if we are testing $H_0: \rho_{xy} = \rho_0$ against $H_1: \rho_{xy} \ne \rho_0$, and $\rho_0 \ne 0$, the test is quite different. We need to use Fisher's z-transformation. Let $\tilde{z} = \frac{1}{2}\ln\left(\frac{1 + r}{1 - r}\right)$. This has an approximate mean of $\mu_z = \frac{1}{2}\ln\left(\frac{1 + \rho_0}{1 - \rho_0}\right)$ and a standard deviation of $s_z = \sqrt{\frac{1}{n - 3}}$, so that $t^{(n-2)} = \frac{\tilde{z} - \mu_z}{s_z}$. (Note: To get ln, the natural log, compute the log to the base 10 and divide by .434294482.)

Test $H_0: \rho_{xy} = 0.7$ against $H_1: \rho_{xy} \ne 0.7$ when $n = 11$, $r = .9209$, $r^2 = .8481$ and $\alpha = .05$.

$\tilde{z} = \frac{1}{2}\ln\left(\frac{1 + r}{1 - r}\right) = \frac{1}{2}\ln\left(\frac{1 + .9209}{1 - .9209}\right) = \frac{1}{2}\ln\left(\frac{1.9209}{0.0791}\right) = \frac{1}{2}\ln(24.28445) = \frac{1}{2}(3.18984) = 1.5949$

$\mu_z = \frac{1}{2}\ln\left(\frac{1 + \rho_0}{1 - \rho_0}\right) = \frac{1}{2}\ln\left(\frac{1 + .7}{1 - .7}\right) = \frac{1}{2}\ln\left(\frac{1.7}{0.3}\right) = \frac{1}{2}\ln(5.66670) = \frac{1}{2}(1.73460) = 0.86730$

$s_z = \sqrt{\frac{1}{n - 3}} = \sqrt{\frac{1}{11 - 3}} = \sqrt{\frac{1}{8}} = 0.35355$

Finally $t = \frac{\tilde{z} - \mu_z}{s_z} = \frac{1.5949 - 0.86730}{0.35355} = 2.058$. Compare this with $\pm t^{(n-2)}_{\alpha/2} = \pm t^{(9)}_{.025} = \pm 2.262$. Since 2.058 lies between these two values, do not reject the null hypothesis.

Note: To do the above with logarithms to the base 10, try $\tilde{z}_{10} = \frac{1}{2}\log\left(\frac{1 + r}{1 - r}\right)$. This has an approximate mean of $\mu_{z10} = \frac{1}{2}\log\left(\frac{1 + \rho_0}{1 - \rho_0}\right)$ and a standard deviation of $s_{z10} = \sqrt{\frac{0.18861}{n - 3}}$, so that $t^{(n-2)} = \frac{\tilde{z}_{10} - \mu_{z10}}{s_{z10}}$.

b) From the previous page we know $n = 11$, $\bar{x} = \frac{\sum x}{n} = \frac{2922}{11} = 265.636$, $SS_x = \sum x^2 - n\bar{x}^2 = 1019346 - 11(265.636)^2 = 243158.7$ and $s_x^2 = \frac{SS_x}{n - 1} = \frac{243158.7}{10} = 24315.87$, so $s = \sqrt{24315.87} = 155.93$.

Use the setup in Problem E9 or E10. The best method to use here is Lilliefors because the data is not stated by intervals, the distribution for which we are testing is Normal, and the parameters of the distribution are unknown. We begin by putting the data in order and computing $z = \frac{x - \bar{x}}{s}$ (actually $t$) and proceed as in the Kolmogorov-Smirnov method. For example, in the second row $z = \frac{143 - 265.636}{155.93} = -0.79$. $O = 1$ because there is only one number in each interval, so each value of $\frac{O}{n}$ is $\frac{1}{11} = .0909$. Since the highest number in the interval represented by Row 2 is $z = -0.79$, $F_e = F(-0.79) = P(z \le -0.79) = P(z \le 0) - P(-0.79 \le z \le 0) = .5 - .2852 = .2148$.

    Row     x      z    O    O/n     F_o   F_e computation     F_e   D = |F_e - F_o|
    1     139  -0.81    1  .0909   .0909   .5 - .2910        .2090   .1181
    2     143  -0.79    1  .0909   .1818   .5 - .2852        .2148   .0330
    3     146  -0.77    1  .0909   .2727   .5 - .2794        .2206   .0521
    4     156  -0.70    1  .0909   .3636   .5 - .2580        .2420   .1216
    5     207  -0.38    1  .0909   .4545   .5 - .1480        .3520   .1025
    6     223  -0.27    1  .0909   .5455   .5 - .1064        .3936   .1519
    7     231  -0.22    1  .0909   .6364   .5 - .0871        .4129   .2235
    8     266   0.00    1  .0909   .7273   .5                .5000   .2273
    9     357   0.59    1  .0909   .8182   .5 + .2224        .7224   .0958
    10    396   0.84    1  .0909   .9091   .5 + .2995        .7995   .1096
    11    658   2.52    1  .0909  1.0000   .5 + .4941        .9941   .0059
    Total              11  0.9999

From the Lilliefors table for $\alpha = .05$ and $n = 11$, the critical value is .249. Since the maximum deviation (.2273) is below the critical value, we do not reject $H_0$.
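The Lilliefors table in 4b) can be recomputed as follows. This is a minimal Python sketch, assuming the same simplified comparison the table uses ($F_e$ against the cumulative $F_o$ at each data point) and an exact Normal cdf built from `math.erf` instead of a rounded z table, so the deviations differ from the hand computation in the third decimal place. The critical value .249 is simply the table value quoted in the text.

```python
import math

# Parents (x) for the 11 areas, put in order as Lilliefors requires.
x = sorted([658, 396, 357, 266, 231, 223, 207, 156, 146, 143, 139])
n = len(x)

xbar = sum(x) / n
s = math.sqrt(sum((xi - xbar) ** 2 for xi in x) / (n - 1))  # sample std. dev.


def normal_cdf(z):
    """Standard Normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


# F_o is the observed cumulative proportion i/n; F_e is the Normal cdf at z.
deviations = []
for i, xi in enumerate(x, start=1):
    z = (xi - xbar) / s
    f_o = i / n
    f_e = normal_cdf(z)
    deviations.append(abs(f_e - f_o))

d_max = max(deviations)
critical = 0.249  # Lilliefors table value for alpha = .05, n = 11 (from the text)

print(f"xbar = {xbar:.3f}, s = {s:.2f}, max D = {d_max:.4f}")
print("reject H0" if d_max > critical else "do not reject H0")
```

A fuller Kolmogorov-Smirnov style computation would also compare $F_e$ with the cumulative proportion just before each point; the sketch deliberately follows the layout of the table in the solution instead.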
c) From the previous page $SS_y = \sum y^2 - n\bar y^2 = 19891990 - 11(1184.36)^2 = 4462195.3$, so
$s_y^2 = \frac{\sum y^2 - n\bar y^2}{n-1} = \frac{SS_y}{n-1} = \frac{4462195.3}{10} = 446219.53$ and $s_x^2 = \frac{\sum x^2 - n\bar x^2}{n-1} = \frac{SS_x}{n-1} = \frac{243158.7}{10} = 24315.87$.
If we follow 252meanx4 in the outline: Our hypotheses are $H_0: \sigma_x^2 = \sigma_y^2$ and $H_1: \sigma_x^2 \ne \sigma_y^2$. $DF_x = n-1 = 10$ and $DF_y = n-1 = 10$. Since the table is set up for one-sided tests, if we wish to test $H_0: \sigma_x^2 = \sigma_y^2$, we must do two separate one-sided tests. First test $\frac{s_x^2}{s_y^2} = \frac{24315.87}{446219.53} = 0.0545$ against $F^{(DF_x, DF_y)}_{.025} = F^{(10,10)}_{.025} = 3.72$ and then test $\frac{s_y^2}{s_x^2} = \frac{1}{.0545} = 18.351$ against $F^{(DF_y, DF_x)}_{.025} = F^{(10,10)}_{.025} = 3.72$. If either test is failed, we reject the null hypothesis. Since 18.351 is larger than this critical value, reject $H_0$.

5. Data from the previous page is repeated:

Area   parents   subsidiaries
          x           y           x^2         xy          y^2
  1      658        2602        432964     1712116     6770404
  2      396        1709        156816      676764     2920681
  3      357        1852        127449      661164     3429904
  4      266        1223         70756      325318     1495729
  5      231         875         53361      202125      765625
  6      223         666         49729      148518      443556
  7      207        1519         42849      314433     2307361
  8      156         884         24336      137904      781456
  9      146         477         21316       69642      227529
 10      143         564         20449       80652      318096
 11      139         657         19321       91323      431649
Sum     2922       13028       1019346     4419959    19891990

a. Compute a simple regression of subsidiaries against parents as the independent variable. (5)
b. Compute $s_e$. (3)
c. Predict how many subsidiaries will appear in a city with 60 parent corporations. (1)
d. Make your prediction in c) into a confidence interval. (3)
e. Compute $s_{b_0}$ and make it into a confidence interval for $\beta_0$. (3)
f. Do an ANOVA for this regression and explain what it says about $\beta_1$. (3)

Solution: We need the usual spare parts. From above $n = 11$, $\sum x = 2922$, $\sum y = 13028$, $\sum xy = 4419959$, $\sum x^2 = 1019346$ and $\sum y^2 = 19891990$.
$\bar x = \frac{\sum x}{n} = \frac{2922}{11} = 265.636$
$SS_x = \sum x^2 - n\bar x^2 = 1019346 - 11(265.636)^2 = 243158.7$
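Spare Parts Computation (repeated from previous page):
$\bar y = \frac{\sum y}{n} = \frac{13028}{11} = 1184.36$
$S_{xy} = \sum xy - n\bar x\bar y = 4419959 - 11(265.636)(1184.36) = 959263.8$
$SS_y = \sum y^2 - n\bar y^2 = 19891990 - 11(1184.36)^2 = 4462195.3$

a) $b_1 = \frac{S_{xy}}{SS_x} = \frac{\sum xy - n\bar x\bar y}{\sum x^2 - n\bar x^2} = \frac{959263.8}{243158.7} = 3.9450$
$b_0 = \bar y - b_1\bar x = 1184.36 - 3.9450(265.636) = 136.42$
$\hat Y = b_0 + b_1 x$ becomes $\hat Y = 136.42 + 3.9450x$.

b) We already know that $SST = SS_y = \sum y^2 - n\bar y^2 = 19891990 - 11(1184.36)^2 = 4462195.3$ and that
$R^2 = \frac{(\sum XY - n\bar X\bar Y)^2}{(\sum X^2 - n\bar X^2)(\sum Y^2 - n\bar Y^2)} = \frac{S_{xy}^2}{SS_x\,SS_y} = \frac{(959263.8)^2}{(243158.7)(4462195.3)} = .8480828$.
$SSR = b_1 S_{xy} = b_1(\sum xy - n\bar x\bar y) = 3.9450(959263.8) = 3784295.7$ or $SSR = b_1 S_{xy} = R^2\,SST = .8480828(4462195.3) = 3784311$.
$SSE = SST - SSR = 4462195.3 - 3784311 = 677884.3$
$s_e^2 = \frac{SSE}{n-2} = \frac{677884.3}{9} = 75320.5$
or $s_e^2 = \frac{(\sum y^2 - n\bar y^2) - b_1^2(\sum x^2 - n\bar x^2)}{n-2} = \frac{4462195.3 - (3.9450)^2(243158.7)}{9} = \frac{677910}{9} = 75323.3$.
So $s_e = \sqrt{75320.5} = 274.446$ ($s_e$ is always positive!)

c) If $\hat Y = 136.42 + 3.9450x$ and $x = 60$, the prediction is $\hat Y = 136.42 + 3.9450(60) = 373.1$.

d) From the outline, the Confidence Interval is $\mu_{Y_0} = \hat Y_0 \pm t\,s_{\hat Y}$, where $s_{\hat Y}^2 = s_e^2\left[\frac{1}{n} + \frac{(X_0 - \bar X)^2}{\sum X^2 - n\bar X^2}\right]$. In this formula, for some specific $X_0$, $\hat Y_0 = b_0 + b_1 X_0$. Here $X_0 = 60$, $\hat Y_0 = 373.1$, $\bar X = 265.636$ and $n = 11$. Then
$s_{\hat Y}^2 = s_e^2\left[\frac{1}{n} + \frac{(X_0 - \bar X)^2}{\sum X^2 - n\bar X^2}\right] = 75320.5\left[\frac{1}{11} + \frac{(60 - 265.636)^2}{243158.7}\right] = 75320.5(.26481) = 19945.6$
and $s_{\hat Y} = \sqrt{19945.6} = 141.229$, so that, if $t^{(n-2)}_{\alpha/2} = t^{(9)}_{.025} = 2.262$, the confidence interval is $\mu_{Y_0} = \hat Y_0 \pm t\,s_{\hat Y} = 373.1 \pm 2.262(141.2) = 373 \pm 319$. This represents a confidence interval for the average value that Y will take when $x = 60$ and is proportionally rather gigantic because we have picked a point fairly far from the data that was actually experienced.

e) The outline says
$s_{b_0}^2 = s_e^2\left[\frac{1}{n} + \frac{\bar X^2}{\sum X^2 - n\bar X^2}\right] = 75320.5\left[\frac{1}{11} + \frac{(265.636)^2}{243158.7}\right] = 75320.5(0.38110) = 28704.64$, so $s_{b_0} = \sqrt{28704.64} = 169.42$.
So the interval is $\beta_0 = b_0 \pm t\,s_{b_0} = 136.42 \pm 2.262(169.42) = 136 \pm 383$. Because this interval includes zero, the intercept is not significant.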
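The spare-parts approach in a) through e) translates directly into code. The following is a minimal sketch, not the course's official method: the function names `simple_regression` and `mean_response_half_width` are invented for illustration, and the critical value 2.262 is taken from the text rather than computed. Because the script does not round $\bar x$ and $\bar y$, its sums of squares match the Minitab output slightly better than the hand calculation does.

```python
from math import sqrt


def simple_regression(x, y):
    """Least-squares fit of y on x using the 'spare parts' SSx, SSy, Sxy."""
    n = len(x)
    xbar = sum(x) / n
    ybar = sum(y) / n
    ssx = sum(v * v for v in x) - n * xbar ** 2
    ssy = sum(v * v for v in y) - n * ybar ** 2
    sxy = sum(a * b for a, b in zip(x, y)) - n * xbar * ybar
    b1 = sxy / ssx
    b0 = ybar - b1 * xbar
    sse = ssy - b1 * sxy            # SSE = SST - SSR, with SSR = b1 * Sxy
    se = sqrt(sse / (n - 2))        # standard error of the estimate
    return {"n": n, "xbar": xbar, "ssx": ssx, "b0": b0, "b1": b1, "se": se}


def mean_response_half_width(fit, x0, t_crit):
    """Half-width of the confidence interval for the mean of Y at x0."""
    s_yhat = fit["se"] * sqrt(1 / fit["n"] + (x0 - fit["xbar"]) ** 2 / fit["ssx"])
    return t_crit * s_yhat


# Data from Problem 5: parents (x) and subsidiaries (y).
parents = [658, 396, 357, 266, 231, 223, 207, 156, 146, 143, 139]
subsidiaries = [2602, 1709, 1852, 1223, 875, 666, 1519, 884, 477, 564, 657]

fit = simple_regression(parents, subsidiaries)
y_hat_60 = fit["b0"] + fit["b1"] * 60
half_width = mean_response_half_width(fit, 60, t_crit=2.262)  # t(9), .025 tail, from the text

print(f"b0 = {fit['b0']:.2f}, b1 = {fit['b1']:.4f}, se = {fit['se']:.1f}")
print(f"prediction at x = 60: {y_hat_60:.1f} +/- {half_width:.0f}")
```

Computing the spare parts once and reusing them, as the function does, is the same economy the introduction recommends for Problems 4 and 5.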
f) We can do an ANOVA table as follows:

Source        SS     DF     MS     F
Regression    SSR    1      MSR    MSR/MSE
Error         SSE    n-2    MSE
Total         SST    n-1

Source        SS         DF    MS         F
Regression    3784311     1    3784311    50.243
Error          677884     9      75320
Total         4462195    10

Note that $F^{(1,9)}_{.05} = 5.12$ so we reject the null hypothesis that x and y are unrelated. This is the same as saying that $H_0: \beta_1 = 0$ is false.

The Minitab output for this problem follows:

The regression equation is
subno = 136 + 3.94 parno

Predictor     Coef      SE Coef     T       P
Constant      136.4     169.4       0.81    0.441
parno         3.9450    0.5566      7.09    0.000

S = 274.4    R-Sq = 84.8%    R-Sq(adj) = 83.1%

Analysis of Variance
Source            DF    SS         MS         F        P
Regression         1    3784219    3784219    50.24    0.000
Residual Error     9     677882      75320
Total             10    4462101

Unusual Observations
Obs    parno    subno     Fit       SE Fit    Residual    St Resid
  1    658      2602.0    2732.2    233.5     -130.2      -0.90 X
  7    207      1519.0     953.0     89.0      566.0       2.18R

R denotes an observation with a large standardized residual
X denotes an observation whose X value gives it large influence.

6. A chain has the following data on prices, promotion expenses and sales of one product. (You can do $\sum x_1 x_2$): Computation of this sum is in red.
It might help to divide $y$, $x_1 y$ and $x_2 y$ by 10 and $y^2$ by 100.

Store   sales   price   promotion
          y      x1       x2       x1^2     x2^2       y^2         x1 y      x1 x2     x2 y
  1      3842    59      200      3481     40000     14760964    226678    11800     768400
  2      3754    59      400      3481    160000     14092516    221486    23600    1501600
  3      5000    59      600      3481    360000     25000000    295000    35400    3000000
  4      1916    79      200      6241     40000      3671056    151364    15800     383200
  5      3224    79      200      6241     40000     10394176    254696    15800     644800
  6      2618    79      400      6241    160000      6853924    206822    31600    1047200
  7      3746    79      600      6241    360000     14032516    295934    47400    2247600
  8      3825    79      600      6241    360000     14630625    302175    47400    2295000
  9      1096    99      200      9801     40000      1201216    108504    19800     219200
 10      1882    99      400      9801    160000      3541924    186318    39600     752800
 11      2159    99      400      9801    160000      4661281    213741    39600     863600
 12      2927    99      600      9801    360000      8567329    289773    59400    1756200
Sum     35989   968     4800     80852   2240000    121407527   2752491   387200   15479600

$\bar y = 2999.08$, $\bar x_1 = 80.6667$ and $\bar x_2 = 400.000$.

a. Do a multiple regression of sales against $x_1$ and $x_2$. (10)
b. Compute $R^2$ and $R^2$ adjusted for degrees of freedom. Use a regression ANOVA to test the usefulness of this regression. (6)
d. Use your regression to predict sales when price is 79 cents and promotion expenses are $200. (2)
e. Use the directions in the outline to make this estimate into a confidence interval and a prediction interval. (4)
f. If the regression of Price alone had the following output:

The regression equation is
sales = 7391 - 54.4 price

Predictor     Coef      SE Coef     T        P
Constant      7391      1133        6.52     0.000
price         -54.44    13.81      -3.94     0.003

S = 726.2    R-Sq = 60.9%    R-Sq(adj) = 56.9%

Analysis of Variance
Source            DF    SS          MS         F        P
Regression         1     8200079    8200079    15.55    0.003
Residual Error    10     5273437     527344
Total             11    13473517

Do an F-test to see if adding $x_2$ helped. (4).
The next page is blank - please show your work.

I suggested that we divide y values by 10 and y squared by 100 to make computations more tractable. No one did, so I have redone this on the following page. After a year of statistics, too many of you decided that x1 y ? x1 x2 and did something similar for some of the other sums. Where have you been?

Solution: a) (With y divided by 10) $n = 12$, $\sum y = 3598.90$, $\sum x_1 = 968$, $\sum x_2 = 4800$, $\sum x_1^2 = 80852$, $\sum x_2^2 = 2240000$, $\sum y^2 = 1214075.27$, $\sum x_1 y = 275249.1$, $\sum x_2 y = 1547960.0$ and you should have found $\sum x_1 x_2 = 387200$. First, we compute $\bar y = \frac{\sum y}{n} = \frac{3598.90}{12} = 299.908$, $\bar x_1 = \frac{\sum x_1}{n} = \frac{968}{12} = 80.6667$, and $\bar x_2 = \frac{\sum x_2}{n} = \frac{4800}{12} = 400.00$. Then, we compute or copy our spare parts:
$SST = SS_y = \sum y^2 - n\bar y^2 = 1214075.27 - 12(299.908)^2 = 134738$ *
$S_{x_1 y} = \sum x_1 y - n\bar x_1\bar y = 275249.1 - 12(80.6667)(299.908) = -15062$
$S_{x_2 y} = \sum X_2 Y - n\bar X_2\bar Y = 1547960.0 - 12(400)(299.908) = 108401$
$SS_{x_1} = \sum x_1^2 - n\bar x_1^2 = 80852 - 12(80.6667)^2 = 2766$ *
$SS_{x_2} = \sum X_2^2 - n\bar X_2^2 = 2240000 - 12(400)^2 = 320000$ *
and $S_{x_1 x_2} = \sum X_1 X_2 - n\bar X_1\bar X_2 = 387200 - 12(80.6667)(400) = 0$.
* indicates quantities that must be positive.
Then we substitute these numbers into the Simplified Normal Equations:
$\sum X_1 Y - n\bar X_1\bar Y = b_1(\sum X_1^2 - n\bar X_1^2) + b_2(\sum X_1 X_2 - n\bar X_1\bar X_2)$
$\sum X_2 Y - n\bar X_2\bar Y = b_1(\sum X_1 X_2 - n\bar X_1\bar X_2) + b_2(\sum X_2^2 - n\bar X_2^2)$,
which are
$-15062 = 2766 b_1 + 0 b_2$
$108401 = 0 b_1 + 320000 b_2$
and solve them as two equations in two unknowns for $b_1$ and $b_2$. Because of the zero $S_{x_1 x_2}$ term, these are extremely unusual normal equations, so do not use them to study for exams. The first equation can be written as $2766 b_1 = -15062$ so $b_1 = \frac{-15062}{2766} = -5.445$. The second is $320000 b_2 = 108401$. This means that $b_2 = \frac{108401}{320000} = 0.33875$. Finally we get $b_0$ by solving $b_0 = \bar Y - b_1\bar X_1 - b_2\bar X_2 = 299.908 + 5.445(80.6667) - 0.33875(400) = 299.908 + 439.230 - 135.500 = 603.64$. Thus our equation is $\hat Y = b_0 + b_1 X_1 + b_2 X_2 = 603.64 - 5.445 X_1 + 0.33875 X_2$.

a) (The way most of you did it) $n = 12$, $\sum y = 35989$, $\sum x_1 = 968$, $\sum x_2 = 4800$, $\sum x_1^2 = 80852$, $\sum x_2^2 = 2240000$, $\sum y^2 = 121407527$, $\sum x_1 y = 2752491$, $\sum x_2 y = 15479600$ and you should have found $\sum x_1 x_2 = 387200$. First, we compute $\bar y = \frac{\sum y}{n} = \frac{35989}{12} = 2999.08$, $\bar x_1 = \frac{\sum x_1}{n} = \frac{968}{12} = 80.6667$, and $\bar x_2 = \frac{\sum x_2}{n} = \frac{4800}{12} = 400.00$. Then, we compute or copy our spare parts:
$SST = SS_y = \sum y^2 - n\bar y^2 = 121407527 - 12(2999.08)^2 = 13473757$ *
$S_{x_1 y} = \sum x_1 y - n\bar x_1\bar y = 2752491 - 12(80.6667)(2999.08) = -150620$
$S_{x_2 y} = \sum X_2 Y - n\bar X_2\bar Y = 15479600 - 12(400)(2999.08) = 1084016$
$SS_{x_1} = \sum x_1^2 - n\bar x_1^2 = 80852 - 12(80.6667)^2 = 2766$ *
$SS_{x_2} = \sum X_2^2 - n\bar X_2^2 = 2240000 - 12(400)^2 = 320000$ *
and $S_{x_1 x_2} = \sum X_1 X_2 - n\bar X_1\bar X_2 = 387200 - 12(80.6667)(400) = 0$.
* indicates quantities that must be positive.
Before we got to this point some of you decided to compute the coefficients as if this were simple regression. Unless I saw some evidence that you knew that $S_{x_1 x_2}$ was zero, you got no credit. For a more conventional solution see exam 252y0341.
Then we substitute these numbers into the Simplified Normal Equations:
$\sum X_1 Y - n\bar X_1\bar Y = b_1(\sum X_1^2 - n\bar X_1^2) + b_2(\sum X_1 X_2 - n\bar X_1\bar X_2)$
$\sum X_2 Y - n\bar X_2\bar Y = b_1(\sum X_1 X_2 - n\bar X_1\bar X_2) + b_2(\sum X_2^2 - n\bar X_2^2)$,
which are
$-150620 = 2766 b_1 + 0 b_2$
$1084016 = 0 b_1 + 320000 b_2$
and solve them as two equations in two unknowns for $b_1$ and $b_2$. Because of the zero $S_{x_1 x_2}$ term, these are extremely unusual normal equations, so do not use them to study for exams. The first equation can be written as $2766 b_1 = -150620$ so $b_1 = \frac{-150620}{2766} = -54.454$. The second is $320000 b_2 = 1084016$. This means that $b_2 = \frac{1084016}{320000} = 3.38755$. Finally we get $b_0$ by solving $b_0 = \bar Y - b_1\bar X_1 - b_2\bar X_2 = 2999.08 + 54.454(80.6667) - 3.38755(400) = 2999.08 + 4392.6245 - 1355.02 = 6036.68$. Thus our equation is $\hat Y = b_0 + b_1 X_1 + b_2 X_2 = 6036.68 - 54.454 X_1 + 3.38755 X_2$.

b) (The way I did it) $SSE = SST - SSR$ and $SSR = b_1 S_{x_1 y} + b_2 S_{x_2 y} = (-5.445)(-15062) + 0.33875(108401) = 82013 + 36721 = 118734$. $SST = SS_y = \sum y^2 - n\bar y^2 = 134738$, so $SSE = 134738 - 118734 = 16004$ and $R^2 = \frac{SSR}{SST} = \frac{118734}{134738} = 0.881$. If we use $\bar R^2$, which is $R^2$ adjusted for degrees of freedom, $\bar R^2 = \frac{(n-1)R^2 - k}{n-k-1} = \frac{11(0.881) - 2}{9} = .855$.
Here $n - k - 1 = 12 - 2 - 1 = 9$. The ANOVA reads:

Source            DF    SS        MS         F        F.05
Regression         2    118734    59367.0    33.39    4.26
Residual Error     9     16004     1778.2
Total             11    134738

Since our computed F is larger than the table F, we reject the hypothesis that X and Y are unrelated.

b) (The way most of you did it) $SSE = SST - SSR$ and $SSR = b_1 S_{x_1 y} + b_2 S_{x_2 y} = (-54.454)(-150620) + 3.38755(1084016) = 8201861.5 + 3672158.4 = 11874020$. $SST = SS_y = \sum y^2 - n\bar y^2 = 13473757$, so $SSE = 13473757 - 11874020 = 1599737$ and $R^2 = \frac{SSR}{SST} = \frac{11874020}{13473757} = 0.881$. If we use $\bar R^2$, which is $R^2$ adjusted for degrees of freedom, $\bar R^2 = \frac{(n-1)R^2 - k}{n-k-1} = \frac{11(0.881) - 2}{9} = .855$. The ANOVA reads:

Source            DF    SS          MS           F        F.05
Regression         2    11874020    5937010      33.40    4.26
Residual Error     9     1599737     177748.56
Total             11    13473757

Since our computed F is larger than the table F, we reject the hypothesis that X and Y are unrelated.

c) $X_1 = 79$, $X_2 = 200$.
(My way) $\hat Y = 603.64 - 5.445(79) + 0.33875(200) = 603.64 - 430.155 + 67.75 = 241.235$
(Your way) $\hat Y = 6036.68 - 54.454(79) + 3.38755(200) = 6036.68 - 4301.87 + 677.51 = 2412.32$

d) We need to find $s_e$. The best way to do this is to do an ANOVA or remember that $s_e^2 = \frac{SSE}{n-k-1}$, and that you got $SSE = 13473757 - 11874020 = 1599737$. $s_e^2 = \frac{1599737}{9} = 177748.56$, so $s_e = 421.60$. $t^{(9)}_{.025} = 2.262$. The outline says that an approximate confidence interval is $\mu_{Y_0} = \hat Y_0 \pm t\frac{s_e}{\sqrt n} = 2412.32 \pm 2.262\frac{421.6}{\sqrt{12}} = 2412 \pm 275$ and an approximate prediction interval is $Y_0 = \hat Y_0 \pm t\,s_e = 2412.32 \pm 2.262(421.6) = 2412 \pm 954$.

e) We can copy the Analysis of Variance in the question.

Source            DF    SS          MS         F        P
Regression         1     8200079    8200079    15.55    0.003
Residual Error    10     5273437     527344
Total             11    13473517

But we just got

Source            DF    SS          MS           F        F.05
Regression         2    11874020    5937010      33.40    4.26
Residual Error     9     1599737     177748.56
Total             11    13473757

Let's use our SST and itemize this as

Source            DF    SS          MS           F        F.05
X1                 1     8200079    8200079      46.13    F(1,9) = 5.12
X2                 1     3673941    3673941      20.67    F(1,9) = 5.12
Residual Error     9     1599737     177748.56
Total             11    13473757

The appropriate F to look at is opposite X2.
Since 20.67 is above the table value, reject the null hypothesis of no relationship. Yes, the added variable helped.

I also ran this on the computer.

Regression Analysis: sales versus price, promotion

The regression equation is
sales = 6036 - 54.4 price + 3.39 promotion

Predictor     Coef       SE Coef     T        P
Constant      6035.7     722.7       8.35     0.000
price         -54.442    8.020      -6.79     0.000
promotio      3.3875     0.7457      4.54     0.001

S = 421.8    R-Sq = 88.1%    R-Sq(adj) = 85.5%

Analysis of Variance
Source            DF    SS          MS         F        P
Regression         2    11872129    5936065    33.36    0.000
Residual Error     9     1601387     177932
Total             11    13473517

Source      DF    Seq SS
price        1    8200079
promotio     1    3672050

Unusual Observations
Obs    price    sales    Fit     SE Fit    Residual    St Resid
  5    79.0     3224     2412    193       812         2.16R

R denotes an observation with a large standardized residual

7. The Lees present the following data on college students' summer wages vs. years of work experience blocked by location.

          Years of Work Experience
Region      1      2      3
  1        16     19     24
  2        21     20     21
  3        18     21     22
  4        14     21     25

a. Do a 2-way ANOVA on these data and explain what hypotheses you test and what the conclusions are. (9) (Or do a 1-way ANOVA for 6 points.) The following column sums are done for you: $\sum x_1 = 69$, $\sum x_2 = 81$, $n_1 = 4$, $n_2 = 4$, $\sum x_1^2 = 1217$ and $\sum x_2^2 = 1643$. So $\bar x_1 = 17.25$ and $\bar x_2 = 20.25$.
b. Do a test of the equality of the means in columns 2 and 3 assuming that the columns are random samples from Normal populations with equal variances (4).
c. Assume that columns 2 and 3 do not come from a Normal distribution and are not paired data and do a test for equal medians. (4)
d. Test the following data for uniformity. $n = 20$.

Category    1    2    3    4    5
Numbers     0    2    0   10    8

Solution: a) This problem was on the last hour exam.
2-way ANOVA (Blocked by region). 's' indicates that the null hypothesis is rejected.

Region      Exper 1    Exper 2    Exper 3     sum      count    mean       Sum of squares    mean^2
             x1         x2         x3
  1          16.0       19.0       24.0       59.00      3      19.6667        1193           386.78
  2          21.0       20.0       21.0       62.00      3      20.6667        1282           427.11
  3          18.0       21.0       22.0       61.00      3      20.3333        1249           413.44
  4          14.0       21.0       25.0       60.00      3      20.0000        1262           400.00
Sum          69.0       81.0       92.0      242.00             20.1667        4986          1627.33
n_j           4      +   4      +   4      =  12.00
mean         17.25      20.25      23.00
SS           1217    +  1643    +  2126    =   4986
mean^2      297.56   + 410.06   +   529    =  1236.63

From the above $\sum x = 242$, $n = 12$, $\bar x = \frac{\sum x}{n} = \frac{242}{12} = 20.17$, $\sum x_{ij}^2 = 4986$, $\sum \bar x_{i.}^2 = 1627.33$, $\sum \bar x_{.j}^2 = 1236.63$ and
$SST = \sum x_{ij}^2 - n\bar x^2 = 4986 - 12(20.17)^2 = 4986 - 4881.95 = 104.05$.
$SSC = \sum n_j \bar x_{.j}^2 - n\bar x^2 = 4(1236.63) - 12(20.17)^2 = 4946.52 - 4881.95 = 64.57$. This is SSB in a one-way ANOVA.
$SSR = \sum n_i \bar x_{i.}^2 - n\bar x^2 = 3(1627.33) - 12(20.17)^2 = 4881.99 - 4881.95 = 0.04$
($SSW = SST - SSC - SSR = 39.44$)

Source                  SS        DF    MS         F        F.05                 H0
Rows (Regions)            0.04     3     0.0133    0.002    F(3,6) = 4.76  ns    Row means equal
Columns (Experience)     64.57     2    32.285     4.91     F(2,6) = 5.14  s     Column means equal
Within (Error)           39.44     6     6.573
Total                   104.05    11

So the results characterized by years of experience (column means) are significantly different.
Note that if you did this as a 1-way ANOVA, the SS and DF in the Rows line in the table would be added to the Within line.

Computer version:

Two-way ANOVA: C40 versus C41, C42

Analysis of Variance for C40
Source    DF    SS        MS       F       P
C41        3      1.67     0.56    0.09    0.964
C42        2     66.17    33.08    5.25    0.048
Error      6     37.83     6.31
Total     11    105.67

Note that the 'ns' on the ANOVA on the last page seems to be due to rounding.

b) The data that we can use is repeated here. $\alpha = .05$, $\bar x_1 = 17.25$, $s_1^2 = \frac{1217 - 4(17.25)^2}{3} = 8.91667$, $n_1 = 4$, $\bar x_3 = 23.00$, $s_3^2 = \frac{2126 - 4(23.00)^2}{3} = 3.3333$, $n_3 = 4$.
Our hypotheses are $H_0: \mu_1 = \mu_2$ and $H_1: \mu_1 \ne \mu_2$, or $H_0: \Delta = 0$ and $H_1: \Delta \ne 0$.
Test Ratio Method: $\Delta\bar x = \bar x_1 - \bar x_3 = 17.25 - 23.00 = -5.75$, $DF = n_1 + n_2 - 2 = 4 + 4 - 2 = 6$, $t^{(6)}_{.025} = 2.447$.
$\hat s_p^2 = \frac{(n_1 - 1)s_1^2 + (n_2 - 1)s_2^2}{n_1 + n_2 - 2} = \frac{3(8.91667) + 3(3.3333)}{6} = \frac{26.75 + 10.00}{6} = 6.1250$
$s_{\Delta\bar x} = \hat s_p\sqrt{\frac{1}{n_1} + \frac{1}{n_2}} = \sqrt{6.1250\left(\frac{1}{4} + \frac{1}{4}\right)} = \sqrt{6.1250(0.5)} = \sqrt{3.0625} = 1.750$
$t = \frac{\Delta\bar x - \Delta_0}{s_{\Delta\bar x}} = \frac{-5.75 - 0}{1.750} = -3.286$. Since this is below $-2.447$, reject the null hypothesis.

c) The null hypothesis is $H_0$: Columns come from same distribution or medians are equal.
The data are repeated in order. The second number in each column is the rank of the number among the 8 numbers in the two groups.

  x     rank       x     rank
 14     1         21     4.5
 16     2         22     6
 18     3         24     7
 21     4.5       25     8
Sum    10.5             25.5

Since this refers to medians instead of means and if we assume that the underlying distribution is not Normal, we use the nonparametric (rank test) analogue to comparison of two sample means of independent samples, the Wilcoxon-Mann-Whitney Test. Note that data is not cross-classified so that the Wilcoxon Signed Rank Test is not applicable. $H_0: \eta_1 = \eta_2$, $H_1: \eta_1 \ne \eta_2$. We get $T_L = 10.5$ and $T_U = 25.5$. Check: Since the total amount of data is $4 + 4 = 8 = n$, $10.5 + 25.5$ must equal $\frac{n(n+1)}{2} = \frac{8(9)}{2} = 36$. They do.
For a 5% two-tailed test with $n_1 = 4$ and $n_2 = 4$, Table 6 says that the critical values are 11 and 25. We accept the null hypothesis in a 2-sided test if the smaller of the two rank sums lies between the critical values. The lower of the two rank sums, $W = 10.5$, is not between these values, so reject $H_0$.

d) This is basically Problem E11. This is the problem I used to use to introduce Kolmogorov-Smirnov. I stopped because everyone seemed to assume that all K-S tests were tests of uniformity.
Five different formulas are used for a new cola. Ten tasters are asked to sample all five formulas and to indicate which one they preferred. The sponsors of the test assume that equal numbers will prefer each formula - this is the assumption of uniformity. Instead none prefer Formulas 1 and 3; 1 person prefers Formula 2; 5 people prefer Formula 4 and 4 people prefer Formula 5. Test the responses for uniformity.
Solution: $H_0$: Uniformity or $H_0: p_1 = p_2 = p_3 = p_4 = p_5$, where $p_i$ is the proportion that favor cola Formula $i$. Since uniformity means that we expect equal numbers in each group, we can fill the $E$ column with fours or just fill the next column with $p_i = \frac{1}{5}$.
Cola     O     O/n    Fo     E     E/n    Fe     D
  1      0     0      0      4     .2     .2     .2
  2      2     .1     .1     4     .2     .4     .3
  3      0     0      .1     4     .2     .6     .5
  4     10     .5     .6     4     .2     .8     .2
  5      8     .4    1.0     4     .2    1.0     0
Total   20    1.0           20    1.0

The maximum deviation is 0.5. From the Kolmogorov-Smirnov table for $n = 20$, the critical values are

alpha    .20     .10     .05     .01
CV       .232    .265    .294    .352

If we fit 0.5 into this pattern, it must have a p-value of less than .01, or we may simply note that if $\alpha = .05$, 0.5 is larger than .294 so we reject $H_0$. Can you see why it would be impossible to do this problem by the chi-squared method?
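The cumulative-distribution comparison in the table above is easy to script. This is a minimal sketch: the helper name `ks_max_deviation` is hypothetical, and the critical value .294 is copied from the Kolmogorov-Smirnov table quoted in the text rather than computed.

```python
from itertools import accumulate


def ks_max_deviation(observed, expected_probs):
    """Largest |Fe - Fo| between expected and observed cumulative proportions."""
    n = sum(observed)
    fo = list(accumulate(o / n for o in observed))  # observed cumulative
    fe = list(accumulate(expected_probs))            # expected cumulative
    return max(abs(e - o) for e, o in zip(fe, fo))


observed = [0, 2, 0, 10, 8]   # counts for cola formulas 1 to 5, n = 20
uniform = [0.2] * 5           # H0: each formula is preferred by 1/5

d_max = ks_max_deviation(observed, uniform)
KS_CV_05_N20 = 0.294          # critical value for alpha = .05, n = 20, from the text
reject = d_max > KS_CV_05_N20

print(f"max deviation = {d_max:.3f}; reject H0: {reject}")
```

The same helper works for any hypothesized distribution over ordered categories; only `expected_probs` changes.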