Document 15930301

advertisement

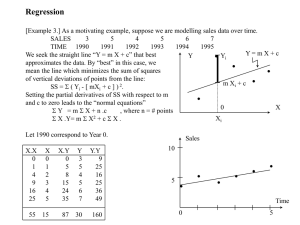

252solnI1 11/9/07 (Open this document in 'Page Layout' view!) I. LINEAR REGRESSION-Confidence Intervals and Tests 1. Confidence Intervals for 2. Tests for b1 . b1 . 3. Confidence Intervals and Tests for b0 Text 13.40-13.42, 13.49 [13.35-13.37, 13.43] (13.35-13.37, 13.43) (In 13.42[13.37] do test for 4. Prediction and Confidence Intervals for b0 as well) y Text 13.55-13.56, 13.58 [13.49-13.51] (13.47-13.49) This document includes exercises 13.35 to 13.43. ----------------------------------------------------------------------------------------------------------------------------- ---- Problems involving confidence intervals and tests for the coefficients of a simple regression. Answers below are heavily edited versions of the answers in the Instructor’s Solution Manual. Note that your text uses S YX for the standard error, which I call s e . The sum S xy and the covariance s xy xy nxy , which was introduced last term, are not the same thing. n 1 Exercise 13.40 [13.35 in 9th and 10th]: You have found n 18, b1 4.5 and sb1 1.5. a) What is the t ratio for a significance test? b) At a 95% confidence level, what are the critical values of t? c) What is your statistical decision about significance? d) construct a 95% confidence interval for the slope. s2 1 e . 1 b1 t sb Solution: From the outline s b21 s e2 df n 2 1 2 X 2 nX 2 SS x H : b 10 0 1 10 To test use t 1 . Remember 10 is most often zero – and if the null hypothesis is H : s b1 10 1 1 false in that case we say that 1 is significant. Given: n 18, b1 4.5 and sb1 1.5. H 0 : 1 0 . a)` t b1 0 4.5 16 2.120 . 3.00 b) df n 2 18 2 16 . .05 . This is a 2-sided test so use t .025 s b1 1.5 16 c) Make a diagram. The rejection regions are above t n2 t .025 2.120 and below 2 16 t n2 t.025 2.120 . Since 3.00 is in the upper rejection region, reject the null hypothesis. which is 2 equivalent to saying that the regression equation is useful. d) 1 b1 t sb1 4.5 2.1201.5 4.5 3.18 . So 1.32 1 7.68 . This is equivalent to saying the 2 coefficient is significant, since the interval does not include zero. 252solnI1 11/9/07 Exercise 13.41 [13.36 in 8th and 9th]: If SSR 60, SSE 40 and n 20 a) Find the F statistic for the ANOVA. b) Find the critical value of F for .05 . c) Make a statistical decision d) Calculate the correlation assuming that b1 is negative. e) Test the correlation for significance. Solution: If k is the number of independent variables (1 in this section), the ANOVA table we can get from this is below. We know SSR 60, SSE 40 , and n 20. We assume .05 . Source SS DF MS F MSR MSR SSR F k Regression SSR k MSE SSE MSE Error(Residual) n k 1 SSE n k 1 Total SST n 1 If we fill in the data above. We get the following. Source SS DF MS F SSR MSR MSR F k Regression 60 1 MSE SSE MSE Error(Residual) n k 1 40 n k 1 Total 100 20 1 If we divide SS by DF and MSR by MSE, we can finish the table. Source SS DF MS F F 1 , 18 MSR 60 F 27 F.05 4.41 Regression 60 1 MSE 2.222 18 Error(Residual) 40 Total 100 19 SSE MSR 60 40 2.222 . F 27 . So we have a) MSR SSR 60 60 . s e2 MSE 18 k 1 n k 1 MSE 2.222 1,18 4.41 from the H 0 : Re gression is useless - this is equivalent to H 0 : 1 0 if k 1. b) We get F.05 table. c) Our rejection zone is above 4.41 and since Fcalc F , we reject the null hypothesis. d) From the outline R 2 SSR 60 0.60 . Remember r 2 R 2 and the correlation has the same sign as SST 100 the simple regression coefficient. So r .60 .7746 . e) According to the text and the outline if we want to test H 0 : xy 0 against H1 : xy 0 and x and y are r normally distributed, we use t n 2 sr t r 1 r 2 n2 .7746 . The value of our test ratio is 5.1962 . This is a two-sided test and the rejection zones are above 1 .6 1 r 2 18 n2 n2 16 16 t t.025 2.120 and below t n2 t.025 2.120 . Since -5.1962 is in the lower rejection zone, 2 r 2 there is a significant correlation between x and y. 252solnI1 11/9/07 Exercise13.42 [13.37 in 8th and 9th}: For the Petfood problems test the slope for significance and construct a 95% confidence interval for the slope. Solution: Recall that in problems 13.4 and 13.16 x had spare parts: S xy n 12 xy nx y 384 1212.52.375 27.75 , SS x 2250 1212 .5 375 , SST SS y 2 S xy x 150 12.5 , y y 28.5 2.375 . We y 2 n 12 x 2 nx 2 ny 70 .69 122.375 3.0025 2 2 27 .75 0.074 (the slope), b0 y b1 x 2.375 0.074 12.5 1.45 (the intercept). SS x 375 Y b0 b1 x became Y 1.45 0.074 x . SSR b1 S xy 0.07427.75 2.0535. b1 SSR 2.0535 .6839 . SSE SST SSR 3.0025 2.0535 0.9490 . SST 3.0025 SSE 0.9490 0.09490 . The standard error (of the estimate) is s e S YX 0.09490 .3081 . a) s e2 n 2 12 2 R2 So s b21 s e2 s2 e .09490 0.0002531 sb 0.0002531 .01591 1 2 2 SS x 375 X nX 1 1 2 X2 .09490 1 12 .5 .04745 sb 0.004745 .21783 s b20 s e2 0 12 375 n X 2 nX 2 b 0 b 0 0.074 1.450 t b1 1 4.651 t b0 0 6.6566 We test each of these against s b1 .01591 s b0 .217831 t n2 t 10 2.228 . Make a diagram with zero in the middle and an upper ‘reject’ zone above 2.228 and 2 .025 a lower reject zone below -2.228. Since both t-ratios are in the upper ‘reject’ zone, we reject the null hypothesis and say that both coefficients are significant. b) The confidence intervals for the two coefficients are 1 b1 t sb1 0.074 2.2280.01591 0.074 .0354 and 0.039 1 0.109 2 0 b0 t 2 sb0 1.450 2.2280.21783 1.45 0.49 . So 0.96 1 1.94 . This is equivalent to saying the coefficients are significant, since the intervals do not include zero. Remember the printout given in 252solnG1. Regression Analysis: Sales versus Space The regression equation is Sales = 1.45 + 0.0740 Space Predictor Constant Space S = 0.3081 Coef 1.4500 0.07400 SE Coef 0.2178 0.01591 R-Sq = 68.4% T 6.66 4.65 P 0.000 0.001 R-Sq(adj) = 65.2% Analysis of Variance Source Regression Residual Error Total DF 1 10 11 SS 2.0535 0.9490 3.0025 MS 2.0535 0.0949 F 21.64 P 0.001 252solnI1 11/9/07 Notice the F and t ratios are those given above and that the p-values associated with them are essentially zero. We are then saying that we will reject the null hypotheses of no significance at any reasonable significance level. The F test and the t-test on the slope are essentially the same test. Note that 21.64 is 6.66 1,10 t 10 2 . squared and that F.05 .025 Exercise 13.49 in 10th edition only: I’m afraid that you need to read the statement of the problem in the text. From the Instructor’s Solution Manual 13.49 (a) Sears, Roebuck and Company’s stock moves only 60.3% as much as the overall market and is much less volatile. LSI Logic’s stock moves 142.1% more than the overall market and is considered as extremely volatile. The stocks of Disney Company, Ford Motor Company and IBM, move 10.9%, 34% and 44.9% respectively more than the overall market and are considered somewhat more volatile than the market. (b) Investors can use the beta value as a measure of the volatility of a stock to assess its risk. See (b) in problem below. Exercise13.43 in 8th and 9th : From the Instructor’s Solution Manual 13.43 (a) For Proctor and Gamble, the estimated value of its stock will increase by 0.53% on average when the S & P 500 index increases by 1%. For Ford Motor Company, the estimated value of its stock will increase by 0.77% on average when the S & P 500 index increases by 1%. For IBM, the estimated value of its stock will increase by 0.94% on average when the S & P 500 index increases by 1%. For Immunex Corp, the estimated value of its stock will increase by 1.34% on average when the S & P 500 index increases by 1%. For LSI Logic, the estimated value of its stock will increase by 2.04% on average when the S & P 500 index increases by 1%. (b) A stock is riskier than the market if the estimated absolute value of the beta exceeds one. This can be used to gauge the volatility of a stock in relative to how the market behaves in general. 252solnI1 11/9/07 James T. McClave, P. George Benson and Terry Sincich, Statistics for Business and Economics, 8th ed. , Prentice Hall, 2001, the text used in 2003, had some more problems of this type if you want more practice. Exercise10.31: From the outline s b21 s e2 1 b1 t sb1 , where df n 2 . 2 a) Given b1 31, s e 3, SS x s b21 x 2 s2 e and a Confidence Interval for b1 is X 2 nX 2 SS x 1 nx 2 = 35 and n 10 . s e2 32 0.25714 . s b 0.25714 0.5071 . 1 SS x 35 8 8 2.306 and t .05 1.860 df n 2 10 2 8 so t .025 So we find for a 90% interval 1 b1 t sb1 31 1.860 0.5071 31 0.94 or 30.06 to 31.94. 2 And for a 95% interval 1 31 2.306 0.5071 31 1.17 or 29.83 to 32.17. b) Given b1 64 , SSE 1960 , SS x x 2 nx 2 = 30 and n 14 . 2 s SSE 1960 163 .3333 163 .3333 s b21 e 5.4444 . s b 5.4444 2.3333 . 1 n2 12 SS x 30 12 12 df n 2 12 so t .025 2.179 and t .05 1.782 s e2 So we find for a 90% interval 1 64 1.782 2.3333 64 4.168 or 59.84 to 68.16. And for a 95% interval 1 64 2.179 2.3333 64 5.08 or 58.92 to 69.08. c) Given b1 8.4, SSE 146 , SS x x 2 nx 2 = 64 and n 20 . s2 SSE 146 8.1111 8.1111 s b21 e 0.12674 . s b 0.12674 0.35600 . 1 n 2 18 SS x 64 18 18 1.734 2.101 and t .05 df n 2 18 so t .025 s e2 So we find for a 90% interval 1 8.4 1.734 0.35600 8.4 0.617 . And for a 95% interval 1 8.4 2.1010.35600 8.4 0.748 . Note that if the null hypothesis is H 0 : 1 0 (or that the slope is insignificant), The confidence intervals above show that it is false. 252solnI1 11/9/07 Exercise 10.32: a) Scattergram Exercise 10.32 y 8 3 Y = 0.535714 + 0.821429X R-Squared = 0.727 -2 0 1 2 3 4 5 6 x b) Row 1 2 3 4 5 6 7 y x 1 3 3 1 4 7 2 21 1 4 3 2 5 6 0 21 x2 1 16 9 4 25 36 0 91 xy 1 12 9 2 20 42 0 86 x 21, y 21, x 91, y 89, xy 86, and 2 2 y2 1 9 9 1 16 49 4 89 x x 21 3 , y y 21 3 n 7 n 7 Spare Parts: S xy xy nx y 86 733 = 23. SS x SS y x y S xy 2 nx 2 = 91 732 = 28. 2 ny 2 89 732 = 26. 23 0.82143 SS x 28 b0 y b1 x 3 0.82143 3 0.53571 . So the equation is Y 0.5357 0.82143 x b1 n 7. c) To plot the regression line note that for x 0, Y 0.5357 0.821430 0.5357, and for x 6, Y 0.5357 0.821436 5.4641. The line is plotted above. d) To see if x contributes to y, test H 10 : 1 0 against H 11 : 1 0 . Also test H 00 : 0 0 against H 01 : 0 0 . e) SSE SST SSR SS y b1 S xy . So s e2 s b21 SSE SS y b1 S xy 26 0.8214 23 1.4216 n2 n2 5 s e2 1.4216 0.0508 . s b 0.0508 0.2253 . From the outline 1 SS x 28 1 2 2 X2 s e2 1 X 1.4216 1 3 0.6600 . s 0.6600 0.8124 . s b20 s e2 b0 n X 2 nX 2 n SS x 7 28 We use these in the t-tests. df n 2 7 2 5 . Make a diagram. Show an almost normal curve with a 5 5 2.571 . 2.571 and t .025 95% 'accept' region between t .025 252solnI1 11/9/07 H 0 : 1 10 b 10 From the Outline - To test use t 1 , where, as usual, 10 0 . So s b1 H 1 : 1 10 b 10 0.8214 0 t 1 3.646 . Since this is in our 'reject' region, reject the null hypothesis and s b1 0.2253 conclude that the slope is significant. H 0 : 0 00 b 00 b 00 0.5375 0 0.6616 . To test use t 0 , where 00 0 . So t 0 .8124 H : s b0 s b0 00 1 0 Since this is not in our 'reject' region, do not reject the null hypothesis and conclude that the intercept is insignificant. The Minitab instructions for this problem follow: #To make the graph MTB > %Fitline c1 c2; SUBC> Confidence 95.0; SUBC> Title 'Exercise 10.32'. Executing from file: W:\WMINITAB\MACROS\Fitline.MAC Macro is running ... please wait #To do the regression MTB > regress c1 on 1 c2 Regression Analysis The regression equation is y = 0.536 + 0.821 x Predictor Constant x Coef 0.5357 0.8214 s = 1.192 Stdev 0.8124 0.2253 R-sq = 72.7% t-ratio 0.66 3.65 p 0.539 0.015 R-sq(adj) = 67.2% Analysis of Variance SOURCE Regression Error Total DF 1 5 6 SS 18.893 7.107 26.000 MS 18.893 1.421 F 13.29 p 0.015 Note that the computer got the same coefficients and values of t that we did. Exercise 10.33: Data comes from the previous problem. 5 5 1.476 and t .01 3.365 df n 2 5 so t .10 So we find, for a 80% interval for the slope, 1 b1 t sb1 0.821 1.476 0.2253 0.82 0.33 or 0.49 2 to 1.15 and for the intercept 0 b0 t sb0 0.5357 1.476 0.8124 0.54 1.20 or -0.66 to 1.74 (This 2 interval includes zero.). And , for a 98% interval for the slope, 1 b1 t sb1 0.821 3.365 0.2253 0.82 0.76 or 0.06 to 2 1.58 and for the intercept 0 b0 t sb0 0.5357 3.365 0.8124 0.54 2.73 or -2.19 to 3.27 (This 2 interval includes zero.). 7 252solnI1 11/9/07 Exercise 10.42†: (10.40 in the old book) a) Scattergram. Exercise 10.42 y 100 50 Y = 44.1305 + 0.236617X R-Squared = 0.086 0 10 20 30 40 50 60 70 80 x b) Row 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 y x x2 xy y2 40 12 144 480 1600 73 71 5041 5183 5329 95 70 4900 6650 9025 60 81 6561 4860 3600 81 43 1849 3483 6561 27 50 2500 1350 729 53 42 1764 2226 2809 66 18 324 1188 4356 25 35 1225 875 625 63 82 6724 5166 3969 70 20 400 1400 4900 47 81 6561 3807 2209 80 40 1600 3200 6400 51 33 1089 1683 2601 32 45 2025 1440 1024 50 10 100 500 2500 52 65 4225 3380 2704 30 20 400 600 900 42 21 441 882 1764 839 1037 47873 48353 63605 n 19, x y x 1037 44.1579 , n 19 y 839 54.5789 n 19 Spare Parts: S xy xy nx y 2561 .26 . SS x SS y x y S xy 2 nx 2 10824 .5 . 2 ny 2 7006 .63 SST 2561 .26 0.2366 SS x 10824 .5 b0 y b1 x 54.5789 0.2366 44.1579 44.131 . Notice that this is almost the same as the mean of y. So the equation is Y 44 .1 0.237 x . As in Exercise 10.15 SS xy 2 2561 .26 2 .086 . On R2 SS x SS y 10824 .57006 .63 a zero to one scale, this is pathetic! b1 The Minitab routine below was used to calculate the solution to this problem. #252sols regress c1 on 1 c2 let c3=c2*c2 let c4=c1*c2 let c5=c1*c1 let k1=sum(c1) let k2=sum(c2) let k3=sum(c3) let k4=sum(c4) let k5=sum(c5) print c1-c5 let k17=count(c1) let k21=k2/k17 let k22=k1/k17 let k18=k21*k21*k17 Fakes simple OLS regression by hand #Put y #c3 is #c5 is #k1 is #k2 is #k3 is #k4 is #k5 is in c1, x in c2 x squared, c4 is xy y squared sum of y sum of x sum of x squared sum of xy sum of y squared #k17 is n #k21 is mean of x #k22 is mean of y 8 252solnI1 11/9/07 let k18=k3-k18 let k19=k22*k22*k17 let k19=k5-k19 let k20=k21*k22*k17 let k20=k4-k20 print k1-k5 print k17-k22 end #k18 is SSx #k19 is SSy #k20 is SSxy c) SSE SST SSR SS y b1 S xy . So SS y b1 S xy 7006 .63 0.2366 2561 .26 SSE 376 .5080 19 .40 . About 95% of n2 n2 17 managerial successes indices should lie within two standard deviations of the regression line. Twice this standard deviation is about 38.80. d) The low value of R 2 should make us suspicious that the regression explains very little. The scattergram is extremely diffuse, leading us to feel that there is not much of a pattern to the data. e) There are several ways to go about formally checking whether these interactions affect the index of managerial success:. (i) If we use the method for checking the significance of the slope used in the last problem, H 0 : 1 0 s2 b 0 0.2366 0 376 .5080 . - To test use t 1 1.27 . s b21 e s b1 SS x 2561 .66 376 .5080 H 1 : 1 0 se 2561 .66 df n 2 19 2 17 . Make a diagram. Show an almost normal curve with a 95% 'accept' region 17 17 between t .025 2.110 and t .025 2.110 . Since 1.27 is between these two values, we cannot reject the null hypothesis and must conclude that the slope is not significant. 1 2 H : 0 X2 s e2 1 X and that to test 0 0 Note: Recall that s b20 s e2 use H1 : 0 0 n SS x n X 2 nX 2 b0 0 t 4.71 . Since this is in our 'reject' region, reject the null hypothesis and conclude that the s b0 intercept is significant. (ii) Run the regression on the computer. The Minitab results are MTB > regress c1 on 1 c2 Regression Analysis The regression equation is y = 44.1 + 0.237 x Predictor Constant x Coef 44.130 0.2366 s = 19.40 Stdev 9.362 0.1865 R-sq = 8.6% t-ratio 4.71 1.27 p 0.000 0.222 R-sq(adj) = 3.3% Analysis of Variance SOURCE Regression Error Total DF 1 17 18 SS 606.0 6400.6 7006.6 MS 606.0 376.5 F 1.61 p 0.222 The p-value for the slope is 0.222. Since this is above our 5% significance level, do not reject the null hypothesis that the slope is insignificant. (iii) The ANOVA is another version of the test in (ii). You already know that SST is 7006.6. you can get the regression sum of squares, SSR, by computing SSR b1 S xy 0.23662561.26 606.0 or, because 9 252solnI1 11/9/07 SSR , SSR R 2 SST . You can get SSE SST SSR. . The degrees of freedom for a simple SST regression sum of squares, SSR, is 1 and for the SST is n 1 18, leaving df n 2 19 2 17 for SSE. The F value of 1.61 has 1 and 17 degrees of freedom and is equal to the square of the t that we 2 computed in (I). If you look up F 17 4.45, you will find that it is equal to t 17 2.110 2 . Notice that R2 .05 .025 the p-value is again 22.2%. In other words, in simple regression, the F test duplicates the t-test on the slope. 17 f) You already know that t .025 2.110 . So we find, for a 95% interval for the slope, 1 b1 t 2 sb1 0.2366 2.110 0.1865 0.24 0.39 or -0.15 to 0.63 (Notice that this interval includes zero so that the slope is not significant.) . For the intercept 0 b0 t 2 sb0 44.13 2.110 0.9.362 44.13 19.75 or 24.4 to 63.9 (This interval does not include zero.).. 10