Brief Notes #11 Linear Regression


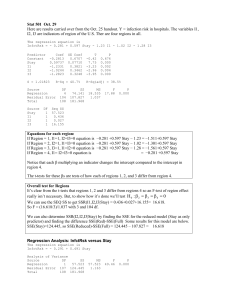

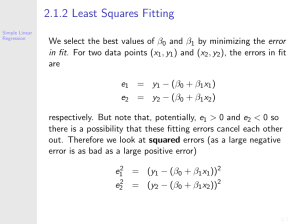

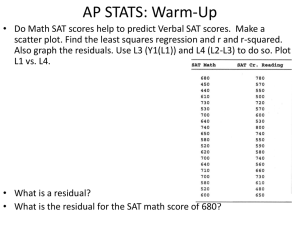

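(a) Simple Linear Regression

• Model: $Y = \beta_0 + \beta_1\, g(X) + \varepsilon$, with $\varepsilon \sim (0, \sigma^2)$, i.e. mean 0 and variance $\sigma^2$. Taking $g(X)$ itself as the regressor and renaming it $X$:

$$Y = \beta_0 + \beta_1 X + \varepsilon$$

• Data: $(X_i, Y_i)$, with $i = 1, \dots, n$:

$$Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i$$

[Figure: scatter plot of the data with the fitted line $\hat{Y} = \hat\beta_0 + \hat\beta_1 X$, marking at a point $x_i$ the deviations $(y_i - \hat{y}_i)$, $(\hat{y}_i - \bar{y})$ and $(y_i - \bar{y})$.]

• Least Squares Estimation of $(\beta_0, \beta_1)$

Find $(\hat\beta_0, \hat\beta_1)$ such that

$$\sum_i (Y_i - \hat{Y}_i)^2 = \min, \quad \text{where } \hat{Y}_i = \hat\beta_0 + \hat\beta_1 X_i .$$

• Solution

$$\hat\beta_1 = \frac{S_{XY}}{S_{XX}}, \qquad \hat\beta_0 = \bar{Y} - \hat\beta_1 \bar{X} = \bar{Y} - \frac{S_{XY}}{S_{XX}}\,\bar{X},$$

where

$$\bar{X} = \frac{1}{n}\sum_i X_i, \quad \bar{Y} = \frac{1}{n}\sum_i Y_i, \quad S_{XX} = \sum_i (X_i - \bar{X})^2, \quad S_{XY} = \sum_i (X_i - \bar{X})(Y_i - \bar{Y}).$$

• Properties of $(\hat\beta_0, \hat\beta_1)$ for $\varepsilon_i \sim$ iid $N(0, \sigma^2)$

$$
\begin{bmatrix} \hat\beta_0 \\ \hat\beta_1 \end{bmatrix}
\sim N\!\left(
\begin{bmatrix} \beta_0 \\ \beta_1 \end{bmatrix},\;
\sigma^2
\begin{bmatrix}
\dfrac{1}{n} + \dfrac{\bar{X}^2}{S_{XX}} & -\dfrac{\bar{X}}{S_{XX}} \\[2ex]
-\dfrac{\bar{X}}{S_{XX}} & \dfrac{1}{S_{XX}}
\end{bmatrix}
\right)
$$

• Properties of Residuals, $e_i = Y_i - \hat{Y}_i$

- $\sum_i e_i = \sum_i e_i X_i = \sum_i e_i \hat{Y}_i = 0$
- $SS_e = \sum_i (Y_i - \hat{Y}_i)^2$ = the residual sum of squares.

$$\frac{SS_e}{\sigma^2} \sim \chi^2_{n-2} \;\Rightarrow\; E[SS_e] = \sigma^2 (n - 2)$$

$$\hat\sigma^2 = \frac{SS_e}{n - 2} = MS_e \quad \text{(mean square error)}$$

$$\hat\sigma = \sqrt{MS_e} = \text{"standard error of regression"}$$

• Significance of Regression

Let $S_{YY} = \sum_i (Y_i - \bar{Y})^2$ = total sum of squares.

Property: $S_{YY} = SS_e + SS_R$, where

$$SS_e = \sum_i (Y_i - \hat{Y}_i)^2 = \text{residual sum of squares},$$

$$SS_R = \sum_i (\hat{Y}_i - \bar{Y})^2 = \text{sum of squares explained by the regression}.$$

Also, $SS_e$ and $SS_R$ are statistically independent.

Notice: if $\beta_1 = 0$, then $\dfrac{SS_R}{\sigma^2} \sim \chi^2_1$.

Definition:

$$R^2 = \frac{SS_R}{S_{YY}} = 1 - \frac{SS_e}{S_{YY}}, \quad \text{the coefficient of determination of the regression.}$$

• Hypothesis Testing for the Slope $\beta_1$

1. $H_0: \beta_1 = \beta_{10}$ against $H_1: \beta_1 \neq \beta_{10}$ (t-test)

Property:

$$t(\beta_1) = \frac{\hat\beta_1 - \beta_1}{\sqrt{MS_e / S_{XX}}} \sim t_{n-2}$$

⇒ Accept $H_0$ at significance level $\alpha$ if $|t(\beta_{10})| < t_{n-2,\,\alpha/2}$.

2. $H_0: \beta_1 = 0$ against $H_1: \beta_1 \neq 0$ (F-test)

From the distributional properties and independence of $SS_R$ and $SS_e$, and under $H_0$,

$$F = \frac{SS_R / 1}{SS_e / (n - 2)} \sim F_{1,\,n-2}$$

⇒ Accept $H_0$ if $F < F_{1,\,n-2,\,\alpha}$.

Notice that for $H_0: \beta_1 = 0$, the t-test and the F-test are equivalent.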
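The formulas of part (a) can be checked numerically. The following is a minimal sketch in plain Python, not part of the original notes: the function name `simple_linear_regression` and the data values are made up for illustration. It computes the least-squares estimates, the sums of squares, R², and the t and F statistics for H0: β1 = 0.

```python
import math

def simple_linear_regression(x, y):
    """Least-squares fit of Y = b0 + b1*X + e using the S_XX, S_XY formulas."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_yy = sum((yi - y_bar) ** 2 for yi in y)      # total sum of squares

    b1 = s_xy / s_xx                # slope estimate
    b0 = y_bar - b1 * x_bar         # intercept estimate

    y_hat = [b0 + b1 * xi for xi in x]
    resid = [yi - yh for yi, yh in zip(y, y_hat)]
    ss_e = sum(e ** 2 for e in resid)               # residual sum of squares
    ss_r = sum((yh - y_bar) ** 2 for yh in y_hat)   # explained sum of squares
    ms_e = ss_e / (n - 2)                           # mean square error

    return {
        "b0": b0, "b1": b1, "residuals": resid,
        "SSe": ss_e, "SSR": ss_r, "SYY": s_yy, "MSe": ms_e,
        "R2": 1.0 - ss_e / s_yy,
        "t": b1 / math.sqrt(ms_e / s_xx),   # t statistic for H0: beta1 = 0
        "F": ss_r / ms_e,                   # F statistic for H0: beta1 = 0
    }

# Made-up illustrative data (not from the notes)
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1, 11.9]

fit = simple_linear_regression(x, y)
print("b0, b1, R2:", fit["b0"], fit["b1"], fit["R2"])
print("t^2 and F :", fit["t"] ** 2, fit["F"])
```

The last line prints t² next to F, which illustrates the equivalence of the two tests for H0: β1 = 0. Comparing the statistics with critical values would need t and F quantiles from a table or a statistics library, which this sketch leaves out.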
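(b) Multiple Linear Regression

• Model: $Y = \beta_0 + \sum_{j=1}^{k} \beta_j\, g_j(X) + \varepsilon$, with $\varepsilon \sim (0, \sigma^2)$

$$\Rightarrow\; Y = \beta_0 + \sum_{j=1}^{k} \beta_j X_j + \varepsilon$$

• Data: $(Y_i, \mathbf{X}_i)$, with $i = 1, \dots, n$:

$$Y_i = \beta_0 + \sum_{j=1}^{k} \beta_j X_{ij} + \varepsilon_i, \quad i = 1, \dots, n$$

Let

$$
\mathbf{Y} = \begin{bmatrix} Y_1 \\ \vdots \\ Y_n \end{bmatrix}, \quad
\boldsymbol\beta = \begin{bmatrix} \beta_0 \\ \beta_1 \\ \vdots \\ \beta_k \end{bmatrix}, \quad
\boldsymbol\varepsilon = \begin{bmatrix} \varepsilon_1 \\ \vdots \\ \varepsilon_n \end{bmatrix}, \quad
\mathbf{H} = \begin{bmatrix}
1 & X_{11} & X_{12} & \cdots & X_{1k} \\
\vdots & \vdots & \vdots & & \vdots \\
1 & X_{n1} & X_{n2} & \cdots & X_{nk}
\end{bmatrix}
$$

$$\Rightarrow\; \mathbf{Y} = \mathbf{H}\boldsymbol\beta + \boldsymbol\varepsilon$$

• Least Squares Estimation

$$e_i = Y_i - \hat{Y}_i = Y_i - \hat\beta_0 - \sum_{j=1}^{k} \hat\beta_j X_{ij}$$

$$\mathbf{e} = \begin{bmatrix} e_1 \\ \vdots \\ e_n \end{bmatrix} = \mathbf{Y} - \mathbf{H}\hat{\boldsymbol\beta}$$

$$SS_e = \sum_i e_i^2 = \mathbf{e}^T\mathbf{e} = (\mathbf{Y} - \mathbf{H}\hat{\boldsymbol\beta})^T(\mathbf{Y} - \mathbf{H}\hat{\boldsymbol\beta}) = \mathbf{Y}^T\mathbf{Y} - 2\,\mathbf{Y}^T\mathbf{H}\hat{\boldsymbol\beta} + \hat{\boldsymbol\beta}^T\mathbf{H}^T\mathbf{H}\hat{\boldsymbol\beta}$$

$$\frac{d\,SS_e(\boldsymbol\beta)}{d\boldsymbol\beta} = 0 \;\Rightarrow\; \hat{\boldsymbol\beta} = (\mathbf{H}^T\mathbf{H})^{-1}\mathbf{H}^T\mathbf{Y}$$

• Properties of $\hat{\boldsymbol\beta}$ (if $\varepsilon_i \sim$ iid $N(0, \sigma^2)$)

$$\hat{\boldsymbol\beta} \sim N\!\left(\boldsymbol\beta,\; \sigma^2 (\mathbf{H}^T\mathbf{H})^{-1}\right)$$

• Properties of Residuals

$$\frac{SS_e}{\sigma^2} \sim \chi^2_{n-k-1} \;\Rightarrow\; \hat\sigma^2 = \frac{SS_e}{n - k - 1} = MS_e$$

$$S_{YY} = SS_e + SS_R, \qquad R^2 = 1 - \frac{SS_e}{S_{YY}}$$

• Hypothesis Testing

Let $\boldsymbol\beta = \begin{bmatrix} \boldsymbol\beta_1 \\ \boldsymbol\beta_2 \end{bmatrix}$, where $\boldsymbol\beta_1$ has $\tau_1$ components and $\boldsymbol\beta_2$ has $\tau_2 = k - \tau_1$ components. We want to test $H_0: \boldsymbol\beta_2 = \mathbf{0}$ against $H_1: \boldsymbol\beta_2 \neq \mathbf{0}$ (at least one component of $\boldsymbol\beta_2$ is non-zero).

The procedure is as follows:

- Fit the complete regression model and calculate $SS_R$ and $SS_e$;
- Fit the reduced model with $\boldsymbol\beta_2 = \mathbf{0}$, and calculate $SS_{R_1}$;
- Let $SS_{2|1} = SS_R - SS_{R_1}$ = extra sum of squares due to $\boldsymbol\beta_2$ when $\boldsymbol\beta_1$ is in the regression.
- Distributional property of $SS_{2|1}$. Under $H_0$,

$$\frac{SS_{2|1}}{\sigma^2} \sim \chi^2_{\tau_2}$$

Also, $SS_{2|1}$ and $SS_e$ are independent. Therefore,

$$F = \frac{SS_{2|1} / \tau_2}{SS_e / (n - k - 1)} \sim F_{\tau_2,\, n-k-1}$$

⇒ Accept $H_0$ if $F < F_{\tau_2,\, n-k-1,\, \alpha}$.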
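The matrix estimator and the extra-sum-of-squares test of part (b) can be sketched with NumPy. This is an illustrative sketch under stated assumptions, not the notes' own implementation: the helper names `fit_least_squares` and `partial_f_statistic` are hypothetical, the data are synthetic, and `np.linalg.lstsq` is swapped in for the explicit formula (HᵀH)⁻¹HᵀY, with which it agrees when H has full column rank. Both models are assumed to contain the intercept, so S_YY is the same for both and SS_{2|1} = SS_R − SS_{R1} equals SS_e(reduced) − SS_e(full).

```python
import numpy as np

def fit_least_squares(H, Y):
    """Return (beta_hat, SSe) for the model Y = H beta + e."""
    # lstsq minimizes ||Y - H beta||^2 without forming (H^T H)^{-1} explicitly.
    beta_hat, *_ = np.linalg.lstsq(H, Y, rcond=None)
    resid = Y - H @ beta_hat
    return beta_hat, float(resid @ resid)

def partial_f_statistic(H_full, H_reduced, Y):
    """F statistic for H0: the coefficients dropped from H_full are all zero."""
    n, p_full = H_full.shape              # p_full = k + 1 (intercept included)
    tau2 = p_full - H_reduced.shape[1]    # number of coefficients under test
    _, sse_full = fit_least_squares(H_full, Y)
    _, sse_reduced = fit_least_squares(H_reduced, Y)
    ss_extra = sse_reduced - sse_full     # SS_{2|1}, the extra sum of squares
    return (ss_extra / tau2) / (sse_full / (n - p_full))

# Synthetic data (an assumption for illustration): k = 3 regressors, of which
# only the first actually influences Y.
rng = np.random.default_rng(0)
n = 30
X = rng.normal(size=(n, 3))
Y = 1.0 + 2.0 * X[:, 0] + rng.normal(scale=0.5, size=n)

H = np.column_stack([np.ones(n), X])      # design matrix with intercept column
beta_hat, ss_e = fit_least_squares(H, Y)

# Test H0: beta_2 = beta_3 = 0, keeping the intercept and beta_1 in the model.
F = partial_f_statistic(H, H[:, :2], Y)
print("beta_hat:", beta_hat)
print("MSe:", ss_e / (n - H.shape[1]))
print("partial F (tau2 = 2):", F)
```

The statistic would then be compared with the critical value F_{τ2, n−k−1, α}, taken from an F table or a statistics library.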