Boeing’s Language Understanding Engine (BLUE) and its performance on the STEP Shared Task

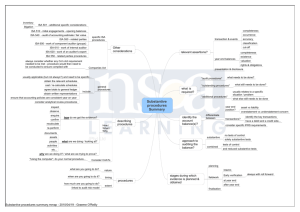

Boeing’s Language Understanding Engine (BLUE) and its performance on the STEP Shared Task
Peter Clark, Phil Harrison (Boeing Phantom Works)

What is a Semantic Representation?
(2.1) "Cervical cancer is caused by a virus."
“Leftist”: semantic structure close to syntactic structure, needs more downstream interpretation
causes(virus01,c-cancer01)
“Rightist”: semantics more explicit
forall c isa(c,c-cancer) → exists e,v isa(e,event), isa(v,virus), involves(e,v), causes(e,c)
forall c isa(c,c-cancer) → exists v isa(v,virus), causes(v,c)

Semantic Formalism
Ground (Skolemized) first-order logic assertions
No quantification (left for downstream processing)
“An object is thrown from a cliff.”
isa(object01,object_n1), isa(cliff01,cliff_n1), isa(throw01,throw_v1), object(throw01,object01), origin(throw01,cliff01).
Embedding used for modals
(6.3) "The farm hopes to make a profit"
isa(farm01, farm_n1), ….. agent(hope01,farm01), object(hope01,[ agent(make02,farm01), object(make02,profit02)])

BLUE’s Pipeline
Input: “An object is thrown from a cliff”
Stages: Parser & LF Generator → Initial Logic Generator → Word Sense Disambiguation → Semantic Role Labeling → Coreference Resolution → Metonymy Resolution → Structural Reorganization
Resource: Linguistic and World Knowledge
Output: isa(object01,object_n1), isa(cliff01,cliff_n1), isa(throw01,throw_v1), object(throw01,object01), origin(throw01,cliff01).
As a graph: Throw (object: Object, origin: Cliff)

1. Parsing/Logical Form Generation
Parsing: SAPIR, a bottom-up chart parser, guided by
a hand-coded cost function
“tuples”: examples of attachment preferences
(VPN "fall" "onto" "floor")
(VPN "flow" "into" "body")
(NN "lead" "iodide")
(NPN "force" "on" "block")
(NPN "speed" "at" "point")
(NPN "chromosome" "in" "cell")
(NPN "force" "during" *)
(NPN "distance" "from" *)
(NPN "distance" "to" *)
Several hundred tuples authored by hand; 53 million generated automatically
Logical Form: classical compositional rule-based approach

2. Initial logic generation
“An object is thrown from a cliff”
Logical form:
(DECL ((VAR _X1 "an" "object") (VAR _X2 "a" "cliff")) (S (PRESENT) NIL "throw" _X1 (PP "from" _X2)))
Initial logic:
"object"(object01), "cliff"(cliff01), "throw"(throw01), sobject(throw01,object01), "from"(throw01,cliff01).
Final logic:
isa(object01,object_n1), isa(cliff01,cliff_n1), isa(throw01,throw_v1), object(throw01,object01), origin(throw01,cliff01).

3. Subsequent Processing

3.1. Word Sense Disambiguation
Naïve use of WordNet (70% ok)
For applications using the CLib ontology, WordNet word senses are then mapped to CLib concepts
[Diagram: lexical term “object” → WordNet (word sense) → CLib Ontology (concept); concept labels shown: Physical-Object, Goal]

3.2. Semantic Role Labeling
~40 semantic roles (from Univ. Texas)
Assigned using a hand-built database of (~100) rules
IF X “of” Y and X is Physical-Object and Y is Material THEN X material Y
Example: Throw (sobject: Object, “from”: Cliff) becomes Throw (object: Object, origin: Cliff)
Moderate performance

3.3. Coreference
Same name = same object
Same/subsuming noun phrase = same object: “The red block fell. The block that had fallen was damaged.”
Ordinal reference: “A block is on a block. The second block is larger.”

3.4. Metonymy resolution
Mainly with CLib KB
“H2O reacts with NaCl”
Before: React (raw-material: H2O, raw-material: NaCl)
After: React (raw-material: Chemical with basic-unit H2O, raw-material: Chemical with basic-unit NaCl)

3.5. Structural Reorganization
[Diagram: three reorganization examples]
“the height of the cliff”: Cliff, height, Height-Value (property)
“the box contains a block”: Contain (subject: Box, object: Block) becomes Box (content: Block)
“10 kg”: Weight-Value with value (:pair 10 kg)

3.5. Structural Reorganization: “be” and “have”
(a) equality: “the color of the block is red”
Be (subject: Color of Block, object: Red) becomes Block (color: Red)
(b) other relation: “the block is red”
Be (subject: Block, object: Red) becomes Block (color: Red)
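As a rough illustration of the step from initial logic to final logic described above, the following Python sketch applies a naïve first-sense word sense assignment and a tiny role-mapping table to the “An object is thrown from a cliff” example. All names here (`WORDS`, `RELATIONS`, `ROLE_RULES`, `naive_sense`, `to_final_logic`) are hypothetical and not part of BLUE. In particular, the slides describe SRL rules that are conditioned on concept types, whereas this sketch assumes a flat lookup for brevity.

```python
# Initial logic for "An object is thrown from a cliff", taken from the slide.
# Word predicates: Skolem instance -> (word, part of speech).
WORDS = {
    "object01": ("object", "n"),
    "cliff01": ("cliff", "n"),
    "throw01": ("throw", "v"),
}
# Syntactic relations between instances.
RELATIONS = [
    ("sobject", "throw01", "object01"),
    ("from", "throw01", "cliff01"),
]

# Hypothetical, heavily simplified role table (assumption: no type conditions).
ROLE_RULES = {"sobject": "object", "from": "origin"}


def naive_sense(word, pos):
    """Pick the first sense for the word, written in the slide's word_pos1 style."""
    return f"{word}_{pos}1"


def to_final_logic(words, relations):
    """Turn word predicates and syntactic relations into final-logic strings."""
    facts = [f"isa({inst},{naive_sense(w, p)})" for inst, (w, p) in words.items()]
    for rel, head, dep in relations:
        role = ROLE_RULES.get(rel)
        if role is None:
            # No rule fires: keep the quoted word as the predicate.
            facts.append(f'"{rel}"({head},{dep})')
        else:
            facts.append(f"{role}({head},{dep})")
    return facts


print(", ".join(to_final_logic(WORDS, RELATIONS)) + ".")
```

When no rule applies, the sketch leaves the quoted preposition in place, which mirrors the unassigned `“of”(training01,dog1)` output shown for example (4.1).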
What does BLUE do, and how did it fare?

Predicate Argument Structure / Semantic Roles
Vocabulary of ~40 semantic relations (from UTexas)
Uses a small set of hand-coded SRL rules, e.g., IF “of”(object,material) THEN material(object,material)
Occasionally will not make an assignment
“An object is thrown from a cliff.”
object(throw01,object01), origin(throw01,cliff01).
(5.1) “I have a problem”
has-part(i01,problem01).
(4.1) “..training of dogs…”
“of”(training01,dog1).

Attachment Correctness (or structural completeness/correctness)
Use of “world knowledge” tuples to guide parsing
Frequently right, sometimes not
Occasional loss in the final logic
(5.6) "Initially it was suspected that…": “Initially” lost
(5.4) "When the gun crew was killed they were crouching unnaturally, which suggested that they knew that an explosion would happen.": parse but no logic out

Anaphora and Reference Resolution
“an object…the object…”
Cross-POS: “falls…the fall…”, “high…the height…”
No pronoun resolution: “lacks it” → object(lack01,it01)
No propositional coreference: “Cancer is caused by a virus. That has been known…”
Unmentioned referent: “…a restaurant…the waiter…”
Not across names: “Greenslow…the farm…”

Word Sense Disambiguation
Naïve translation to WordNet (but 70% ok)
(3.1) "John went into a restaurant."
isa(John01, John_n1), agent(go01,John01), destination(go01,restaurant01).
Callout on John_n1: Saint John

Quantification
Treated as ground facts (“leftists” vs. “rightists”)
“every”, “some” in initial LF, but not final logic
(2.1) "Cervical cancer is caused by a virus."
isa(cancer01,cancer_n1), isa(cervical01,cervical_a1), isa(virus01,virus_n1), causes(virus01,cancer01), mod(cancer01,cervical01).

Negation, Modals, Conditionals, Disjunction
Negation: sentence polarity marked
(5.2) "I am not ready yet."
sentence-polarity(sentence,negative), "be"(i02,ready01).
Modals: embedded structures
(4.5) "We would like our school to work similarly..."
agent(like01,we01). object(like01,[ agent(work01,school01), manner(work01,similarly01)])
Conditionals
Disjunction

Conjunction
BLUE multiplies out coordinates
(3.4) "The atmosphere was warm and friendly"
"be"(atmosphere01, warm01). "be"(atmosphere01, friendly01).
(4.3) "They visited places in England and France"
object(visit01,place01), is-inside(place01,England01), is-inside(place01,France01).

Tense and Aspect
Recorded in LF, not output in final logical form
(4.5) "We would like our school to work similarly to the French ones..."
;;; Intermediate logical form (LF):
(DECL ((VAR _X1 "we" NIL) (VAR _X2 "our" "school")) (S (WOULD) _X1 "like" _X2 (DECL ((VAR _X3 "the" (PLUR "one") (AN "French" "one") ….

Plurals
Recorded in LF, not output in final logical form
(4.5) "We would like our school to work similarly to the French ones..."
;;; Intermediate logical form (LF):
(DECL ((VAR _X1 "we" NIL) (VAR _X2 "our" "school")) (S (WOULD) _X1 "like" _X2 (DECL ((VAR _X3 "the" (PLUR "one") (AN "French" "one") ….
Collectives: use number-of-elements predicate
(4.2) "seven people developed"
isa(person01, person_n1), agent(develop01, person01), number-of-elements(person01,7).

Comparison Phrases
Will do “-er” comparatives (not in shared task)
"The cost was greater than ten dollars"
isa(cost01,cost_n1), value(quantity01,[10,dollar_n1]), greater-than(cost01,quantity01).
Won’t do “similarly to”
(4.5) "We would like our school to work similarly to the French ones..."
agent(work01,school04), manner(work01,similarly01), destination(work01,one01),
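The “multiplying out” of coordinates described under Conjunction can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `multiply_out` and `show` are hypothetical helper names, and facts are modeled as plain (relation, head, dependent) tuples rather than whatever internal structure BLUE uses.

```python
def multiply_out(relation, head, coordinates):
    """Return one (relation, head, coordinate) fact per coordinated element."""
    return [(relation, head, coordinate) for coordinate in coordinates]


def show(fact):
    """Render a fact tuple in the slide's predicate(arg1,arg2) notation."""
    relation, head, dependent = fact
    return f"{relation}({head},{dependent})"


# (3.4) "The atmosphere was warm and friendly"
warm_friendly = multiply_out('"be"', "atmosphere01", ["warm01", "friendly01"])

# (4.3) "They visited places in England and France"
england_france = multiply_out("is-inside", "place01", ["England01", "France01"])

for fact in warm_friendly + england_france:
    print(show(fact))
```

Note that in the (4.3) case the same `place01` instance appears in both resulting facts, exactly as in the output shown on the slide.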
Time
Tense and aspect recorded but not output
Some temporal prepositions and phrases recognized
(4.4) "ensures operation until 1999"
time-ends(ensure01, year1999).
(5.3) "...yelled. Then the propellant exploded"
next-event(yell01,explode01).
(5.4) "When they were killed, they were crouching"
time-at(crouch01,kill01).
But not all: (7.1) "the 1930s", (7.2) "the early 1970s", (7.4) "mid-'80s" (misparsed as an adjective), and (7.3) "the past 30 years"

Measurement Expressions
(1.1) "125 m"
value(height01,[125,m_n1]).

Questions
5 types recognized: true/false, find value, find identity, find subtypes, how many
(1.4) "What is the duration?"
query-for(duration01).

Clarity (to a naïve reader)
(3.1) "John went into a restaurant."
isa(John01, John_n1), isa(restaurant01,restaurant_n1), isa(go01,go_v1), named(John01,["John"]), agent(go01,John01), destination(go01,restaurant01).
(3.3) "The waiter took the order."
isa(waiter01,waiter_n1), isa(order01,tell_v4), isa(take01,take_v1), agent(take01,waiter01), object(take01,order01).

Summary
BLUE:
Formalism: Skolemized first-order logic predicates; embedding for modals
Mechanism: Parser → LF generator → initial logic → post-processing
Performance: Many sentences are mostly right; most sentences have something wrong
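The formalism summarized above (Skolemized ground facts, with embedding for modals) can be mimicked with ordinary Python data structures. The sketch below is an assumption-laden illustration, not BLUE's implementation: `SkolemNamer` and `render` are hypothetical names, the per-word two-digit counter is a guess at how instance names such as `object01` might be produced, and an embedded proposition is modeled simply as a nested list of facts.

```python
from collections import defaultdict


class SkolemNamer:
    """Hand out fresh instance names such as object01, object02 (assumed scheme)."""

    def __init__(self):
        self.counts = defaultdict(int)

    def new(self, word):
        self.counts[word] += 1
        return f"{word}{self.counts[word]:02d}"


def render(fact):
    """Render a (predicate, arg, ...) tuple; list arguments are embedded facts."""
    predicate, *args = fact
    parts = []
    for arg in args:
        if isinstance(arg, list):
            parts.append("[" + ", ".join(render(f) for f in arg) + "]")
        else:
            parts.append(arg)
    return f"{predicate}({','.join(parts)})"


# (6.3) "The farm hopes to make a profit", with instance names copied from the slide.
hope_facts = [
    ("agent", "hope01", "farm01"),
    ("object", "hope01", [
        ("agent", "make02", "farm01"),
        ("object", "make02", "profit02"),
    ]),
]

for fact in hope_facts:
    print(render(fact))
```

Keeping the embedded proposition as a nested list means the content of the hope is not asserted as a top-level fact, which is the point of using embedding for modals.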