

Learning Language from its

Perceptual Context

Ray Mooney

Department of Computer Sciences

University of Texas at Austin

Joint work with

David Chen

Rohit Kate

Yuk Wah Wong


Current State of

Natural Language Learning

• Most current state-of-the-art NLP systems

are constructed by training on large

supervised corpora.

– Syntactic Parsing: Penn Treebank

– Word Sense Disambiguation: SenseEval

– Semantic Role Labeling: Propbank

– Machine Translation: Hansards corpus

• Constructing such annotated corpora is

difficult, expensive, and time-consuming.

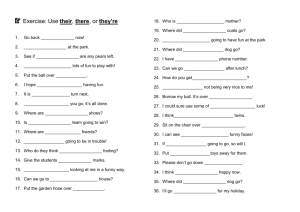

Semantic Parsing

• A semantic parser maps a natural-language

sentence to a complete, detailed semantic

representation: logical form or meaning

representation (MR).

• For many applications, the desired output is

immediately executable by another program.

• Two application domains:

– GeoQuery: A Database Query Application

– CLang: RoboCup Coach Language


GeoQuery:

A Database Query Application

• Query application for U.S. geography database

[Zelle & Mooney, 1996]

User: “How many states does the Mississippi run through?”

↓ Semantic Parsing

Query: answer(A, count(B, (state(B), C=riverid(mississippi), traverse(C,B)), A))

↓ DataBase

Answer: 10

CLang: RoboCup Coach Language

• In the RoboCup Coach competition, teams compete to

coach simulated soccer players.

• The coaching instructions are given in a formal

language called CLang

Coach: “If the ball is in our penalty area, then all our players except player 4 should stay in our half.”

↓ Semantic Parsing

CLang: ((bpos (penalty-area our)) (do (player-except our{4}) (pos (half our))))

[Figure: simulated soccer field]

Learning Semantic Parsers

• Manually programming robust semantic parsers

is difficult due to the complexity of the task.

• Semantic parsers can be learned automatically

from sentences paired with their logical form.

[Diagram: NL→MR training examples → Semantic-Parser Learner → Semantic Parser. At run time: Natural Language → Semantic Parser → Meaning Rep.]

Our Semantic-Parser Learners

• CHILL+WOLFIE (Zelle & Mooney, 1996; Thompson & Mooney,

1999, 2003)

– Separates parser-learning and semantic-lexicon learning.

– Learns a deterministic parser using ILP techniques.

• COCKTAIL (Tang & Mooney, 2001)

– Improved ILP algorithm for CHILL.

• SILT (Kate, Wong & Mooney, 2005)

– Learns symbolic transformation rules for mapping directly from NL to MR.

• SCISSOR (Ge & Mooney, 2005)

– Integrates semantic interpretation into Collins’ statistical syntactic parser.

• WASP (Wong & Mooney, 2006; 2007)

– Uses syntax-based statistical machine translation methods.

• KRISP (Kate & Mooney, 2006)

– Uses a series of SVM classifiers employing a string-kernel to iteratively build

semantic representations.


WASP

A Machine Translation Approach to Semantic Parsing

• Uses statistical machine translation

techniques

– Synchronous context-free grammars (SCFG)

(Wu, 1997; Melamed, 2004; Chiang, 2005)

– Word alignments (Brown et al., 1993; Och &

Ney, 2003)

• Hence the name: Word Alignment-based

Semantic Parsing



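A Unifying Framework for

Parsing and Generation

[Diagram: Synchronous Parsing as the unifying framework. Machine translation maps natural languages to natural languages; semantic parsing maps natural languages to formal languages; tactical generation maps formal languages to natural languages; compiling (Aho & Ullman, 1972) maps formal languages to formal languages.]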

Synchronous Context-Free Grammars

(SCFG)

• Developed by Aho & Ullman (1972) as a

theory of compilers that combines syntax

analysis and code generation in a single

phase.

• Generates a pair of strings in a single

derivation.


Synchronous Context-Free Grammar

Production Rule

QUERY → What is CITY / answer(CITY)

Natural language side: What is CITY

Formal language side: answer(CITY)

Synchronous Context-Free Grammar

Derivation

QUERY → What is CITY / answer(CITY)

CITY → the capital CITY / capital(CITY)

CITY → of STATE / loc_2(STATE)

STATE → Ohio / stateid('ohio')

Natural language: What is the capital of Ohio

Formal language: answer(capital(loc_2(stateid('ohio'))))
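The derivation can be sketched in a few lines of Python. This is a minimal illustration, not WASP's implementation: the `derive` helper and the tuple encoding of rules are assumptions made for the example, and the sketch only handles rules with at most one nonterminal on the right-hand side, which is all this derivation needs.

```python
# Minimal sketch of an SCFG derivation: each rule rewrites the same
# nonterminal on the natural-language side and the formal-language side.
# The rule encoding and the derive() helper are illustrative assumptions.

RULES = [
    ("QUERY", "What is CITY", "answer(CITY)"),
    ("CITY", "the capital CITY", "capital(CITY)"),
    ("CITY", "of STATE", "loc_2(STATE)"),
    ("STATE", "Ohio", "stateid('ohio')"),
]


def derive(start, rules):
    """Apply the rules in order, rewriting the leftmost matching nonterminal."""
    nl = [start]   # natural-language side, as a token list
    mr = start     # formal-language side, as a string
    for lhs, nl_rhs, mr_rhs in rules:
        i = nl.index(lhs)                # position of the nonterminal on the NL side
        nl[i:i + 1] = nl_rhs.split()     # rewrite it on the NL side
        mr = mr.replace(lhs, mr_rhs, 1)  # rewrite it on the MR side
    return " ".join(nl), mr


sentence, meaning = derive("QUERY", RULES)
print(sentence)  # What is the capital of Ohio
print(meaning)   # answer(capital(loc_2(stateid('ohio'))))
```

Both strings come out of one pass over the same rule sequence, which is the sense in which a synchronous grammar generates a pair of strings in a single derivation.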

Probabilistic Parsing Model

d1: derivation of “capital of Ohio” yielding capital(loc_2(stateid('ohio')))

CITY → capital CITY / capital(CITY)

CITY → of STATE / loc_2(STATE)

STATE → Ohio / stateid('ohio')

Probabilistic Parsing Model

d2: derivation of “capital of Ohio” yielding capital(loc_2(riverid('ohio')))

CITY → capital CITY / capital(CITY)

CITY → of RIVER / loc_2(RIVER)

RIVER → Ohio / riverid('ohio')

Probabilistic Parsing Model

d1: capital(loc_2(stateid('ohio')))

CITY → capital CITY / capital(CITY), weight 0.5

CITY → of STATE / loc_2(STATE), weight 0.3

STATE → Ohio / stateid('ohio'), weight 0.5

Pr(d1|capital of Ohio) = exp(0.5 + 0.3 + 0.5) / Z = exp(1.3) / Z

d2: capital(loc_2(riverid('ohio')))

CITY → capital CITY / capital(CITY), weight 0.5

CITY → of RIVER / loc_2(RIVER), weight 0.05

RIVER → Ohio / riverid('ohio'), weight 0.5

Pr(d2|capital of Ohio) = exp(0.5 + 0.05 + 0.5) / Z = exp(1.05) / Z

The rule weights λ are summed along each derivation; Z is the normalization constant.
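As a worked check of the two probabilities, here is a small Python sketch. It assumes, purely for illustration, that d1 and d2 are the only derivations of the phrase, so Z is just the sum of their two exponentiated scores; in the full model Z would range over every derivation of the sentence.

```python
import math

# Rule weights as shown for the two derivations of "capital of Ohio".
d1 = [0.5, 0.3, 0.5]    # capital(CITY), loc_2(STATE), stateid('ohio')
d2 = [0.5, 0.05, 0.5]   # capital(CITY), loc_2(RIVER), riverid('ohio')


def derivation_probs(derivations):
    """Log-linear model: Pr(d | sentence) = exp(sum of rule weights) / Z."""
    scores = [math.exp(sum(weights)) for weights in derivations]
    z = sum(scores)  # ASSUMPTION: Z sums over only the derivations passed in
    return [s / z for s in scores]


p1, p2 = derivation_probs([d1, d2])
print(round(p1, 3), round(p2, 3))  # 0.562 0.438
```

Under that assumption the state reading of “Ohio” is preferred over the river reading, driven entirely by the 0.3 versus 0.05 weight on the `loc_2` rule.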

Overview of WASP

Training: Training set, {(e,f)}, and unambiguous CFG of MRL → Lexical acquisition → Lexicon, L (an SCFG) → Parameter estimation → SCFG parameterized by λ

Testing: Input sentence, e' → Semantic parsing → Output MR, f'

Tactical Generation

• Can be seen as the inverse of semantic parsing

NL: The goalie should always stay in our half

MR: ((true) (do our {1} (pos (half our))))

Semantic parsing maps the sentence to the MR; tactical generation maps the MR back to the sentence.

Generation by Inverting WASP

• Same synchronous grammar is used for

both generation and semantic parsing.

Semantic parsing: input NL, output MRL

Tactical generation: input MRL, output NL

QUERY → What is CITY / answer(CITY)

NL side: What is CITY

MRL side: answer(CITY)

Learning Language from

Perceptual Context

• Children do not learn language from

annotated corpora.

• Neither do they learn language from just

reading the newspaper, surfing the web, or

listening to the radio.

• The natural way to learn language is to

perceive language in the context of its use

in the physical and social world.

• This requires inferring the meaning of

utterances from their perceptual context.


Language Grounding

• The meanings of many words are grounded in our

perception of the physical world: red, ball, cup, run, hit,

fall, etc.

– Symbol Grounding: Harnad (1990)

• Even many abstract words and meanings are metaphorical

abstractions of terms grounded in the physical world: up,

down, over, in, etc.

– Lakoff and Johnson’s Metaphors We Live By

• It’s difficult to put my ideas into words.

• Interest in competitions is up.

• Most work in NLP tries to represent meaning without any

connection to perception or to the physical world,

circularly defining the meanings of words in terms of other

words or meaningless symbols with no firm foundation.

“Mary is on the phone”

???


Ambiguous Supervision for Learning

Semantic Parsers

• A computer system simultaneously exposed to

perceptual contexts and natural language utterances

should be able to learn the underlying language

semantics.

• We consider ambiguous training data of sentences

associated with multiple potential MRs.

– Siskind (1996) uses this type of “referentially uncertain”

training data to learn meanings of words.

• Extracting meaning representations from perceptual

data is a difficult unsolved problem.

– Our system directly works with symbolic MRs.


Ambiguous Training Example

Sentence: “Mary is on the phone”

Candidate meanings (the correct one is unknown):

Ironing(Mommy, Shirt)

Carrying(Daddy, Bag)

Working(Sister, Computer)

Talking(Mary, Phone)

Sitting(Mary, Chair)

Next Ambiguous Training Example

Sentence: “Mommy is ironing a shirt”

Candidate meanings (the correct one is unknown):

Ironing(Mommy, Shirt)

Working(Sister, Computer)

Talking(Mary, Phone)

Sitting(Mary, Chair)

Ambiguous Supervision for Learning

Semantic Parsers contd.

• Our model of ambiguous supervision

corresponds to the type of data that will be

gathered from a temporal sequence of

perceptual contexts with occasional

language commentary.

• We assume each sentence has exactly one

meaning in its perceptual context.

– Recently extended to handle sentences with no

meaning in their perceptual context.

• Each meaning is associated with at most

one sentence.

Sample Ambiguous Corpus

Sentences:

Daisy gave the clock to the mouse.

Mommy saw that Mary gave the hammer to the dog.

The dog broke the box.

John gave the bag to the mouse.

The dog threw the ball.

Candidate MRs:

gave(daisy, clock, mouse)

ate(mouse, orange)

ate(dog, apple)

saw(mother, gave(mary, dog, hammer))

broke(dog, box)

gave(woman, toy, mouse)

gave(john, bag, mouse)

threw(dog, ball)

runs(dog)

saw(john, walks(man, dog))

Each sentence is linked to its candidate MRs, which forms a bipartite graph.

KRISPER:

KRISP with EM-like Retraining

• Extension of KRISP that learns from

ambiguous supervision.

• Uses an iterative EM-like method to

gradually converge on a correct meaning

for each sentence.

KRISPER’s Training Algorithm

1. Assume every possible meaning for a sentence is correct

[Figure: the sample ambiguous corpus, with every sentence linked to all of its candidate MRs.]


KRISPER’s Training Algorithm

2. Resulting NL-MR pairs are weighted and given to KRISP

[Figure: the sample ambiguous corpus, with each sentence's links weighted uniformly: 1/2, 1/4, 1/5, 1/3, and 1/3 per link for the five sentences in order.]

KRISPER’s Training Algorithm

3. Estimate the confidence of each NL-MR pair using the resulting trained parser

[Figure: the sample ambiguous corpus, with each sentence-MR link labeled by a parser confidence between 0.11 and 0.97.]

KRISPER’s Training Algorithm

4. Use maximum weighted matching on a bipartite graph to find the best NL-MR pairs [Munkres, 1957]

[Figure: the sample ambiguous corpus, with each sentence-MR link labeled by a parser confidence between 0.11 and 0.97.]


KRISPER’s Training Algorithm

5. Give the best pairs to KRISP in the next iteration, and repeat until convergence

[Figure: the sample ambiguous corpus of sentences and candidate MRs.]
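Steps 2 and 4 of the training algorithm can be sketched as follows. This is a hedged illustration rather than KRISPER's code: the pairing of confidence values with particular links is made up (the flattened figure does not show which link carries which value), and the brute-force search over permutations is a stand-in for the Hungarian algorithm of Munkres (1957), suitable only for tiny graphs.

```python
from itertools import permutations

# Hypothetical confidences: values like those on the slide, but which link
# carries which value is an assumption made for this example.
confidence = {
    "Daisy gave the clock to the mouse.": {
        "gave(daisy, clock, mouse)": 0.92,
        "ate(mouse, orange)": 0.11,
    },
    "The dog broke the box.": {
        "broke(dog, box)": 0.85,
        "ate(dog, apple)": 0.14,
        "gave(daisy, clock, mouse)": 0.24,
    },
    "The dog threw the ball.": {
        "threw(dog, ball)": 0.97,
        "runs(dog)": 0.81,
        "broke(dog, box)": 0.34,
    },
}


def uniform_weights(candidates):
    """Step 2: weight each of a sentence's candidate MRs equally."""
    return {s: {m: 1.0 / len(ms) for m in ms} for s, ms in candidates.items()}


def best_matching(conf):
    """Step 4 by brute force: one MR per sentence, no MR used twice,
    maximizing total confidence. Stand-in for the Hungarian algorithm."""
    sentences = list(conf)
    mrs = sorted({m for links in conf.values() for m in links})
    best, best_score = {}, float("-inf")
    for choice in permutations(mrs, len(sentences)):
        pairs = list(zip(sentences, choice))
        if all(m in conf[s] for s, m in pairs):  # only use existing links
            score = sum(conf[s][m] for s, m in pairs)
            if score > best_score:
                best, best_score = dict(pairs), score
    return best


initial = uniform_weights(confidence)
matching = best_matching(confidence)
```

In a full loop, `matching` would become the training set for the next round of parser training, and the confidences would be re-estimated from the retrained parser until the matching stops changing.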

Results on Ambig-ChildWorld Corpus

[Figure: learning curves of best F-measure (0 to 100) against number of training examples (225, 450, 675, 900), with one curve each for no ambiguity and for level 1, level 2, and level 3 ambiguity.]

New Challenge:

Learning to Be a Sportscaster

• Goal: Learn from realistic data of natural

language used in a representative context

while avoiding difficult issues in computer

perception (i.e. speech and vision).

• Solution: Learn from textually annotated

traces of activity in a simulated

environment.

• Example: Traces of games in the Robocup

simulator paired with textual sportscaster

commentary.


Grounded Language Learning

in Robocup

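[Diagram: the Robocup Simulator feeds Simulated Perception, which produces Perceived Facts; a human Sportscaster supplies commentary (“Score!!!!”). The Grounded Language Learner takes both as input and learns an SCFG, which drives a Semantic Parser and a Language Generator that produces commentary (“Score!!!!”).]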

Robocup Sportscaster Trace

Natural Language Commentary:

purple7 passes the ball out to purple6

purple6 passes to purple2

purple2 makes a short pass to purple3

purple3 loses the ball to pink9

Meaning Representation (events in time order):

pass ( purple7 , purple6 )

ballstopped

kick ( purple6 )

pass ( purple6 , purple2 )

ballstopped

kick ( purple2 )

pass ( purple2 , purple3 )

kick ( purple3 )

badPass ( purple3 , pink9 )

turnover ( purple3 , pink9 )


Sportscasting Data

• Collected human textual commentary for the 4

Robocup championship games from 2001-2004.

– Avg # events/game = 2,613

– Avg # sentences/game = 509

• Each sentence matched to all events within

previous 5 seconds.

– Avg # MRs/sentence = 2.5 (min 1, max 12)

• Manually annotated with correct matchings of

sentences to MRs (for evaluation purposes only).
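The 5-second matching rule can be sketched in Python as below. The timestamps are made up, the `candidate_mrs` name is hypothetical, and treating both ends of the window as inclusive is an assumption; the slide only states that each sentence is matched to all events within the previous 5 seconds.

```python
def candidate_mrs(sentences, events, window=5.0):
    """Pair each sentence with every event in the `window` seconds before it.

    sentences: list of (timestamp, text); events: list of (timestamp, mr).
    """
    return {
        text: [mr for t_event, mr in events if t_sent - window <= t_event <= t_sent]
        for t_sent, text in sentences
    }


# Made-up timestamps in seconds; real traces come from the simulator.
events = [
    (10.0, "pass(purple7, purple6)"),
    (12.0, "ballstopped"),
    (13.0, "kick(purple6)"),
    (16.5, "pass(purple6, purple2)"),
]
sentences = [
    (11.0, "purple7 passes the ball out to purple6"),
    (17.0, "purple6 passes to purple2"),
]
ambiguous = candidate_mrs(sentences, events)
```

With these invented times the first sentence gets one candidate and the second gets three, which is the kind of ambiguity the average of 2.5 MRs per sentence reflects.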


WASPER

• WASP with EM-like retraining to handle

ambiguous training data.

• Same augmentation as added to KRISP to

create KRISPER.


KRISPER-WASP

• First iteration of EM-like training produces very

noisy training data (> 50% errors).

• KRISP is better than WASP at handling noisy

training data.

– SVM prevents overfitting.

– String kernel allows partial matching.

• But KRISP does not support language generation.

• First train KRISPER just to determine the best

NL→MR matchings.

• Then train WASP on the resulting unambiguously

supervised data.


WASPER-GEN

• In KRISPER and WASPER, the correct MR for

each sentence is chosen based on maximizing the

confidence of semantic parsing (NL→MR).

• Instead, WASPER-GEN determines the best

matching based on generation (MR→NL).

• Score each potential NL/MR pair by using the

currently trained WASP⁻¹ generator.

• Compute NIST MT score (alternative to BLEU

score) between the generated sentence and the

potential matching sentence.


Strategic Generation

• Generation requires not only knowing how

to say something (tactical generation) but

also what to say (strategic generation).

• For automated sportscasting, one must be

able to effectively choose which events to

describe.


Example of Strategic Generation

pass ( purple7 , purple6 )

ballstopped

kick ( purple6 )

pass ( purple6 , purple2 )

ballstopped

kick ( purple2 )

pass ( purple2 , purple3 )

kick ( purple3 )

badPass ( purple3 , pink9 )

turnover ( purple3 , pink9 )


Learning for Strategic Generation

• For each event type (e.g. pass, kick)

estimate the probability that it is described

by the sportscaster.

• Requires NL/MR matching that indicates

which events were described, but this is not

provided in the ambiguous training data.

– Use estimated matching computed by

KRISPER, WASPER or WASPER-GEN.

– Use a version of EM to determine the

probability of mentioning each event type just

based on strategic info.


EM for Strategic Generation

[Figure: each commentary sentence linked to its candidate events, with uniform initial link weights.]

purple7 passes the ball out to purple6: 1 link, weight 1

purple6 passes to purple2: 4 links, weight 1/4 each

purple2 makes a short pass to purple3: 4 links, weight 1/4 each

purple3 loses the ball to pink9: 5 links, weight 1/5 each

Candidate events, in time order: pass ( purple7 , purple6 ), ballstopped, kick ( purple6 ), pass ( purple6 , purple2 ), ballstopped, kick ( purple2 ), pass ( purple2 , purple3 ), kick ( purple3 ), badPass ( purple3 , pink9 ), turnover ( purple3 , pink9 )

EM for Strategic Generation

Estimate Generation Probs

Each probability is the sum of the weights on links to events of that type, divided by the number of events of that type.

P(pass)=(1+1/4+1/4+1/4+1/5)/3=0.65

P(ballstopped)=(1/4+1/4)/2=0.25

P(kick)=(1/4+1/4+1/5+1/5)/3=0.3

P(badpass)=0.2

P(turnover)=0.2

[Figure: the sentence-event graph with its initial uniform link weights.]

EM for Strategic Generation

Reassign link weights

[Figure: the sentence-event graph, with every link now carrying the probability of its event type: 0.65 for pass, 0.25 for ballstopped, 0.3 for kick, 0.2 for badPass, and 0.2 for turnover.]

P(pass)=0.65 P(ballstopped)=0.25 P(kick)=0.3 P(badpass)=0.2 P(turnover)=0.2

EM for Strategic Generation

Normalize link weights

[Figure: the sentence-event graph, with link weights rescaled so that each sentence's links sum to 1: 1.0 for the first sentence; 0.35 (pass), 0.14 (ballstopped), and 0.16 (kick) for the second and third; 0.39 (pass), 0.18 (kick), 0.12 (badPass), and 0.12 (turnover) for the fourth.]

P(pass)=0.65 P(ballstopped)=0.25 P(kick)=0.3 P(badpass)=0.2 P(turnover)=0.2

EM for Strategic Generation

Recalculate Generation Probs and Repeat Until Convergence

[Figure: the sentence-event graph with the normalized link weights.]
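The estimate, reassign, and normalize cycle can be sketched in Python. This is a minimal sketch under stated assumptions: the `em_strategic` and `event_type` names are hypothetical, the toy trace is invented rather than copied from the figure, and a fixed iteration count stands in for a real convergence test.

```python
from collections import defaultdict


def event_type(mr):
    """'pass(a, b)' -> 'pass'; 'ballstopped' -> 'ballstopped'."""
    return mr.split("(")[0].strip()


def em_strategic(events, links, iterations=10):
    """events: list of MR strings; links: sentence -> list of event indices."""
    # Start with uniform weights over each sentence's candidate events.
    weights = {s: {e: 1.0 / len(es) for e in es} for s, es in links.items()}
    counts = defaultdict(int)
    for mr in events:
        counts[event_type(mr)] += 1
    probs = {}
    for _ in range(iterations):
        # Estimate: total link weight per event type / number of such events.
        totals = defaultdict(float)
        for w in weights.values():
            for e, v in w.items():
                totals[event_type(events[e])] += v
        probs = {t: totals[t] / counts[t] for t in counts}
        # Reassign each link the probability of its event type, then
        # normalize so each sentence's links sum to 1.
        for s, w in weights.items():
            raw = {e: probs[event_type(events[e])] for e in w}
            z = sum(raw.values())
            weights[s] = {e: v / z for e, v in raw.items()}
    return probs, weights


# Invented toy trace, not the graph from the figure.
events = ["pass(a, b)", "ballstopped", "kick(b)", "pass(b, c)"]
links = {"a passes to b": [0], "b passes to c": [1, 2, 3]}
probs, weights = em_strategic(events, links)
```

On this toy trace the unambiguous first sentence raises the estimate for `pass`, which in turn pulls the second sentence's weight toward its own `pass` candidate on each iteration.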

Demo

• Game clip commentated using WASPER-GEN with EM-based strategic generation,

since this gave the best results for generation.

• FreeTTS was used to synthesize speech from

textual output.

Experimental Evaluation

• Generated learning curves by training on all

combinations of 1 to 3 games and testing on all

games not used for training.

• Baselines:

– Random Matching: WASP trained on random choice of

possible MR for each comment.

– Gold Matching: WASP trained on correct matching of MR

for each comment.

• Metrics:

– Precision: % of system’s annotations that are correct

– Recall: % of gold-standard annotations correctly produced

– F-measure: Harmonic mean of precision and recall
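The train/test protocol and the three metrics can be sketched as follows. The game labels and the example sentence-MR pairs are placeholders, and the function names are hypothetical; the formulas are the standard definitions of precision, recall, and their harmonic mean.

```python
from itertools import combinations


def train_test_splits(games, max_train=3):
    """Every way to train on 1..max_train games and test on the rest."""
    for k in range(1, max_train + 1):
        for train in combinations(games, k):
            test = tuple(g for g in games if g not in train)
            yield train, test


def precision_recall_f(predicted, gold):
    """Precision, recall, and F-measure over sets of (sentence, MR) pairs."""
    predicted, gold = set(predicted), set(gold)
    correct = len(predicted & gold)
    precision = correct / len(predicted) if predicted else 0.0
    recall = correct / len(gold) if gold else 0.0
    total = precision + recall
    f_measure = 2 * precision * recall / total if total else 0.0
    return precision, recall, f_measure


games = ["2001", "2002", "2003", "2004"]  # placeholder labels for the 4 games
splits = list(train_test_splits(games))
print(len(splits))  # 14

# Placeholder annotations: 4 predicted pairs, 3 of them in the gold standard.
predicted = {("s1", "m1"), ("s2", "m2"), ("s3", "m9"), ("s4", "m4")}
gold = {("s1", "m1"), ("s2", "m2"), ("s3", "m3"), ("s4", "m4"), ("s5", "m5")}
p, r, f = precision_recall_f(predicted, gold)
```

With four games there are 4 + 6 + 4 = 14 train/test splits, so each point on a learning curve averages over several runs.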

Evaluating Matching Accuracy

• Measure how accurately various methods

assign MRs to sentences in the ambiguous

training data.

• Use gold-standard matches to evaluate

correctness.

Results on Matching

Evaluating Semantic Parsing

• Measure how accurately learned parser

maps sentences to their correct meanings in

the test games.

• Use the gold-standard matches to determine

the correct MR for each sentence that has

one.

• Generated MR must exactly match gold-standard to count as correct.

Results on Semantic Parsing

Evaluating Tactical Generation

• Measure how accurately NL generator

produces English sentences for chosen MRs

in the test games.

• Use gold-standard matches to determine the

correct sentence for each MR that has one.

• Use NIST score to compare generated

sentence to the one in the gold-standard.

Results on Tactical Generation

Evaluating Strategic Generation

• In the test games, measure how accurately

the system determines which perceived

events to comment on.

• Compare the subset of events chosen by the

system to the subset chosen by the human

annotator (as given by the gold-standard

matching).

Results on Strategic Generation

Human Evaluation

(Quasi Turing Test)

• Asked 4 fluent English speakers to evaluate overall

quality of sportscasts.

• Randomly picked a 2-minute segment from each of the

4 games.

• Each human judge evaluated 8 commented game clips,

each of the 4 segments commented once by a human

and once by the machine when tested on that game.

• The 8 clips presented to each judge were shown in

random counter-balanced order.

• Judges were not told which ones were human or

machine generated.


Human Evaluation Metrics

Score | English Fluency | Semantic Correctness | Sportscasting Ability

5 | Flawless | Always | Excellent

4 | Good | Usually | Good

3 | Non-native | Sometimes | Average

2 | Disfluent | Rarely | Bad

1 | Gibberish | Never | Terrible

Results on Human Evaluation

Commentator | English Fluency | Semantic Correctness | Sportscasting Ability

Human | 3.94 | 4.25 | 3.63

Machine | 3.44 | 3.56 | 2.94

Immediate Future Directions

• Use strategic generation information to improve

resolution of ambiguous training data.

• Produce generation confidences (instead of NIST

scores) for scoring NL/MR matches in WASPER-GEN.

• Improve WASP’s ability to handle noisy training

data.

• Improve simulated perception to extract more

detailed and interesting symbolic facts from the

simulator.

Longer Term Future Directions

• Apply approach to learning situated

language in a computer video-game

environment (Gorniak & Roy, 2005)

– Teach game AIs how to talk to you!

• Apply approach to captioned images or

video using computer vision to extract

objects, relations, and events from real

perceptual data (Fleischman & Roy, 2007)

Conclusions

• Current language learning work uses

expensive, unrealistic training data.

• We have developed a language learning

system that can learn from language paired

with an ambiguous perceptual environment.

• We have evaluated it on the task of learning

to sportscast simulated Robocup games.

• The system learns to sportscast almost as

well as humans: judges rated it 0.5 to 0.7 points

below the human commentator on each 5-point scale.
