CS514: Intermediate Course in Operating Systems Lecture 26: Nov. 28

advertisement

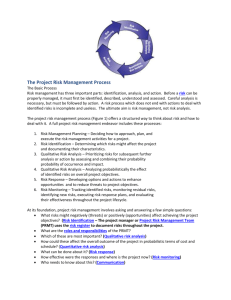

CS514: Intermediate Course in Operating Systems Professor Ken Birman Ben Atkin: TA Lecture 26: Nov. 28 Scalability • We’ve heard about many technologies • Looked at implementations and features and reliability issues – Illustrate with real systems • But what about scalability? Dimensions of Scalability • We often say that we want systems that “scale” • But what does scalability mean? • As with reliability & security, the term “scalability” is very much in the eye of the beholder Scalability • Marketing perspective: – Does a technology potentially reach large numbers of consumers? – Can the vendor sell it without deploying human resources in proportion to the size of the market? – Is the market accessible with finite resources? • Not our “worry” here in CS514, but in the real world you’ll need to keep this point of view in mind! Scalability • As a reliability question: – Suppose a system experiences some rate of disruptions r – How does r change as a function of the size of the system? • If r rises when the system gets larger we would say that the system scales poorly • Need to ask what “disruption” means, and what “size” means… Scalability • As a management question – Suppose it takes some amount of effort to set up the system – How does this effort rise for a larger configuration? – Can lead to surprising discoveries • E.g. the 2-machine demo is easy, but setup for 100 machines is extremely hard to define Scalability • As a question about throughput – Suppose the system can do t operations each second – Now I make the system larger • Does t increase as a function of system size? Decrease? • Is the behavior of the system stable, or unstable? Scalability • As a question about dependency on configuration – Many technologies need to know something about the network setup or properties – The larger the system, the less we know! – This can make a technology fragile, hard to configure, and hence poorly scalable Scalability • As a question about costs – Most systems have a basic cost • E.g. 2pc “costs” 3N messages – And many have a background overhead • E.g. gossip involves sending one message per round, receiving (on avg) one per round, and doing some retransmission work (rarely) • Can ask how these costs change as we make our system larger, or make the network noisier, etc Scalability • As a question about environments – Small systems are well-behaved – But large ones are more like the Internet • Packet loss rates and congestion can be problems • Performance gets bursty and erratic • More heterogeneity of connections and of machines on which applications run – The larger the environment, the nastier it may be! Scalability • As a pro-active question – How can we design for scalability? – We know a lot about technologies now – Are certain styles of system more scalable than others? Approaches • Many ways to evaluate systems: – – – – Experiments on the real system Emulation environments Simulation Theoretical (“analytic”) • But we need to know what we want to evaluate Dangers • “Lies, damn lies, and statistics” – It is much to easy to pick some random property of a system, graph it as a function of something, and declare success – We need sophistication in designing our evaluation or we’ll miss the point • Example: message overhead of gossip – Technically, O(n) – Does any process or link see this cost? • Perhaps not, if protocol is designed carefully Technologies we’ve seen • TCP/IP and O/S message-passing architectures like U-Net • RPC and client-server architectures • Transactions and nested transactions • Virtual synchrony and replication • Other forms of multicast • Object oriented architectures • Cluster management facilities How well do they scale? • Consider a web server • Life consists of responding to HTTP requests – RPC over TCP • Where are the costs? – TCP: lots of channels, raises costs • For example, “SO_LINGER” issue • Also uncoordinated congestion issue – Disk I/O bottleneck… Web server • Usual solution is to split server over many machines, spray requests • Jim Gray calls this a cloned architecture • Works best for unchanging data (so-called soft-state replication) Web server • Failure detection: a scaling limit – With very large server, failure detection gets hard • If a node crashes, how should we detect this? • Need for “witnesses”? A “jury”? • Notification architecture… • Modes of failure may grow with scale Web server • Sample scaling issue – Suppose failure detection is “slow” – With more nodes, we have • More failures • More opportunities for anomalous behavior that may disrupt response – Load balancing system won’t know about failures for a while… so it might send work to faulty nodes, creating a perception of down time! Porcupine • This system was a scalable email server built at U. Wash • It uses a partitioning scheme to spread load and a simple faulttolerance scheme • Papers show near-linear scalability in cluster size Porcupine • Does Porcupine scale? – Papers says “yes” – But in their work we can see a reconfiguration disruption when nodes fail or recover • With larger scale, frequency of such events will rise • And the cost is linear in system size – Very likely that on large clusters this overhead would become dominent! Transactions • Do transactional systems scale? – A complicated question – Scale in: • Number of users & patterns of use • Number of participating servers • Use or non-use of replication – Different mixes give different results Transactions • Argus didn’t scale all that well – This was the MIT system we discussed, with transactions in the language itself – High costs of 2PC and concurrency control motivated a whole series of work-arounds • Some worked well, like “lazy commit” • But others seemed awkward, like “top-level transactions” – But how typical is Argus? Transactions • Transactional replicated data – Not very scalable – Costly quorum reads and writes • Read > 1 nodes, write many too • And 2PC gets costly – Jim Gray wrote a paper on this • Concludes that naïve replication will certainly not scale Transactions • Yet transactions can scale in some dimensions – Many very, very large data server architectures have been proposed – Partitioned database architectures offer a way to scale on clusters – And queries against a database can scale if we clone the database Transactions • Gray talks about RAPS, RACS and Farms – Reliable Array of Partitioned/Cloned Servers – Farm is a set of such clusters • Little technology exists in support of this model – But the model is an attractive one – It forces database people to think in terms of scalability from the outset, by structuring their database with scalability as a primary goal! Replicated Data • Common to view reliable multicast as a tool for replicating data • Here, scalability is tied to data model – Virtual synchrony works best for small groups – Pbcast has a weaker replication model but scales much better for large ones • If our goals were stability of a video stream or some other application we might opt for other design points Air Force JBI • Goal of anywhere, anytime access to replicated data • Model selected assumes – Publish-subscribe communication – Objects represented using XML – XML ontology basis of a filtering scheme • Basically, think of objects as “forms” • Subscriber can fill in some values for fields where specific values are desired • Data is delivered where an object matches JBI has many subproblems • Name-space issue (the XML ontology used for filtering) • Online data delivery • Tracking of real-time state – Probably, need an in-core database at the user • Long-term storage – Some form of database that can be queried – Apparently automatic and self-organized Air Force JBI • Does this model scale? – Need to ask what properties are needed for applications • Real-time? Rapid response? Stability? • Guarantees of reliability? – Also need to ask what desired model of use would be • Specific applications? Or arbitrary ones? • Steady patterns of data need? Or dynamic? – And questions arise about the network Publish-subscribe • Does publish-subscribe scale? – In practice, publish-subscribe does poorly with large networks • Fragile, easily disrupted • Over-sensitive to network configuration • Behavior similar to that of SRM (high overheads in large networks) – But IBM Gryphon router does better Use Gryphon in JBI? • Raises a new problem – Not all data is being sent at all times – In fact most data lives in a “repository” • Must the JBI be capable of solving application-specific database layout problems in a general, automatic way? • Some data is sent in real-time, some sent in slow-motion. • Some data is only sent when requested – Gryphon only deals with the online part JBI Scalability • Points to the dilemma seen in many scalability settings – Systems are complex – Use patterns are complex – Very hard to pose the question in a way that can be answered convincingly How do people evaluate such questions? • Build one and evaluate it – But implementation could limit performance and might conclude the wrong thing • Evaluate component technology options (existing products) – But these were tuned for other uses and perhaps the JBI won’t match the underlying assumptions • Design a simulation – But only as good as your simulation… Two Side-by-Side Case Studies • American Advanced Automation System – Intended as replacement for air traffic control system – Needed because Pres. Reagan fired many controllers in 1981 – But project was a fiasco, lost $6B • French Phidias System – Similar goals, slightly less ambitious – But rolled out, on time and on budget, in 1999 Background • Air traffic control systems are using 1970’s technology • Extremely costly to maintain and impossible to upgrade • Meanwhile, load on controllers is rising steadily • Can’t easily reduce load Politics • Government wanted to upgrade the whole thing, solve a nagging problem • Controllers demanded various simplifications and powerful new tools • Everyone assumed that what you use at home can be adapted to the demands of an air traffic control center Technology • IBM bid the project, proposed to use its own workstations • These aren’t super reliable, so they proposed to adapt a new approach to “fault-tolerance” • Idea is to plan for failure – Detect failures when they occur – Automatically switch to backups Core Technical Issue? • Problem revolves around high availability • Waiting for restart not seen as an option: goal is 10sec downtime in 10 years • So IBM proposed a replication scheme much like the “load balancing” approach • IBM had primary and backup simply do the same work, keeping them in the same state Technology radar find tracks Identify flight Lookup record Plan actions Human action Conceptual flow of system IBM’s fault-tolerant process pair concept radar radar find find tracks tracks Identify Lookup Identify Lookup flight record flight record Plan Plan actions actions Human Human action action Why is this Hard? • The system has many “real-time” constraints on it – Actions need to occur promptly – Even if something fails, we want the human controller to continue to see updates • IBM’s technology – Based on a research paper by Flaviu Cristian – Each “process” replaced by a pair, but elements behave identically (duplicated actions are detected and automatically discarded) – But had never been used except for proof of concept purposes, on a small scale in the laboratory Politics • IBM’s proposal sounded good… – … and they were the second lowest bidder – … and they had the most aggressive schedule • So the FAA selected them over alternatives • IBM took on the whole thing all at once Disaster Strikes • Immediate confusion: all parts of the system seemed interdependent – To design part A I need to know how part B, also being designed, will work • Controllers didn’t like early proposals and insisted on major changes to design • Fault-tolerance idea was one of the reasons IBM was picked, but made the system so complex that it went on the back burner Summary of Simplifications • Focus on some core components • Postpone worry about faulttolerance until later • Try and build a simple version that can be fleshed out later … but the simplification wasn’t enough. Too many players kept intruding with requirements Crash and Burn • The technical guys saw it coming – Probably as early as one year into the effort – But they kept it secret (“bad news diode”) – Anyhow, management wasn’t listening (“they’ve heard it all before – whining engineers!”) • The fault-tolerance scheme didn’t work – Many technical issues unresolved • The FAA kept out of the technical issues – But a mixture of changing specifications and serious technical issues were at the root of the problems What came out? • In the USA, nothing. • The entire system was useless – the technology was of an all-or-nothing style and nothing was ready to deploy • British later rolled out a very limited version of a similar technology, late, with many bugs, but it does work… Contrast with French • They took a very incremental approach – Early design sought to cut back as much as possible – If it isn’t “mandatory” don’t do it yet – Focus was on console cluster architecture and fault-tolerance • They insisted on using off-the-shelf technology Contrast with French • Managers intervened in technology choices – For example, the vendor wanted to do a home-brew fault-tolerance technology – French insisted on a specific existing technology and refused to bid out the work until vendors accepted – A critical “good call” as it worked out Virtual Synchrony Model • Was supported by a commercially available software technology • Already proven in the field • Could solve the problem IBM was looking at… – But the French decided this was too ambitious – Limited themselves to “replicating” state of the consoles in a controller position – This is a simpler problem – basically, keeps the internal representations of the display consistent Learning by Doing • To gain experience with technology – They tested, and tested, and tested – Designed simple prototypes and played with them – Discovered that large cluster would perform poorly – But found a “sweet spot” and worked within it – This forced project to cut back on some goals • They easily could handle clusters of 3-5 machines • But abandoned hope of treating entire 150 machine network as a single big cluster – “scalability” concerns Testing • 9/10th of time and expense on any system is in – Testing – Debugging – Integration • Many projects overlook this • French planned conservatively Software Bugs • • • • Figure 1/10 lines in new code But as many as 1/250 lines in old code Bugs show up under stress Trick is to run a system in an unstressed mode • French identified “stress points” and designed to steer far from them • Their design also assumed that components would fail and automated the restart All of this worked! • Take-aways from French project? – Break big projects into little pieces – Do the critical core first, separately, and focus exclusively on it – Test, test, test – Don’t build anything you can possibly buy – Management was technically sophisticated enough to make some critical “calls” Scalability Conclusions? • Does XYZ scale? – Not a well-posed question! • Best to see scalability as a process, not a specific issue • Can learn a great deal from the French – Divide and conquer (limit scale) – Test to understand limits (and avoid them by miles)