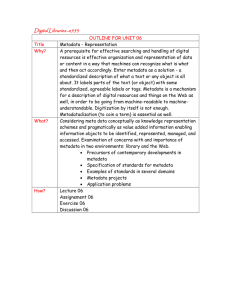

Discussion Class 9: Mixed Content and Metadata


Discussion Classes

Format:
• Question
• Ask a member of the class to answer.
• Provide opportunity for others to comment.

When answering:
• Stand up.
• Give your name. Make sure that the TA hears it.
• Speak clearly so that all the class can hear.

Suggestions:
• Do not be shy about presenting partial answers.
• Differing viewpoints are welcome.

Question 1: Mixed content

(a) What do the authors mean by "mixed content"?
(b) What is the traditional way to manage mixed content?
(c) Why is this a new problem?
(d) What relationship does this have to the results of the TREC ad hoc experiment?

Question 2: Mixed metadata

The authors of the paper state that mixed metadata is inevitable.

(a) What do they mean by "mixed metadata"?
(b) What technical and social reasons do they give for standardization being an illusion?

Question 3: Information discovery in a messy world

Web search engines have adapted to a very large scale. Other techniques, such as cross-domain metadata and federated searching, have failed to scale up.

• What new concepts and techniques have enabled this adaptation?
• What can we learn that is applicable to other information discovery tasks?

Question 4: User interfaces

(a) What (if any) is the relationship between metadata standards, user interfaces, and user training?
(b) How is it possible that free-text indexing, with unstructured queries, can sometimes be more effective than structured searching of high-quality indexes? What do the authors mean by, "Powerful user interfaces and networks bring human expertise into the information discovery loop"?

Question 5: New approaches

Explain the following new developments:

(a) Better understanding of how and why users seek information.
(b) Relationships and context information.
(c) Multi-modal information discovery.
(d) User interfaces for exploring information.

Question 6: Relevance v. importance

Traditional information retrieval measures effectiveness in terms of relevance.

(a) What is the fundamental assumption behind using precision and recall to measure effectiveness that does not apply with mixed content?
(b) Why is importance of documents a useful measure of ranking with mixed content?
(c) How does this relate to the user's information discovery task?

Question 7: Physical access to resources

(a) Why are the metadata and indexing requirements different for physical resources than for online resources?
(b) How do web search engines provide effective service to users despite the mixed content and the absence of good metadata? Would the same approaches work with physical resources, i.e., not online?

Question 8: Integration

The authors argue that content (data and metadata), search engines, and user interfaces need to be designed as a whole. What is the impact if they are developed separately?