MIPS Pipeline Hakim Weatherspoon CS 3410, Spring 2013 Computer Science

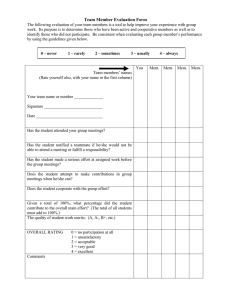


MIPS Pipeline
Hakim Weatherspoon
CS 3410, Spring 2013
Computer Science, Cornell University
See P&H Chapter 4.6

A Processor
Review: Single cycle processor
[Figure: single-cycle datapath with PC, +4 adder, instruction memory, control, register file, immediate extend, compare (=?), ALU, branch offset/target logic, and data memory (addr, din, dout)]

Review: Single Cycle Processor
Advantages
• A single cycle per instruction makes the logic and clock simple
Disadvantages
• Since instructions take different amounts of time to finish, memory and functional units are not efficiently utilized
• Cycle time is set by the longest delay
  – Load instruction
• Best possible CPI is 1
  – However, lower MIPS (millions of instructions per second) and a longer clock period (lower clock frequency); hence, lower performance

Review: Multi Cycle Processor
Advantages
• Better MIPS and a shorter clock period (higher clock frequency)
• Hence, better performance than the single cycle processor
Disadvantages
• Higher CPI than the single cycle processor

Pipelining: Want better Performance
• Want a small CPI (close to 1) with high MIPS and a short clock period (high clock frequency)
• CPU time = instruction count × CPI × clock cycle time

Single Cycle vs Pipelined Processor
See: P&H Chapter 4.5

The Kids
Alice, Bob
They don’t always get along…

The Bicycle

The Materials
Drill, Saw, Glue, Paint

The Instructions
N pieces, each built following the same sequence:
Saw, Drill, Glue, Paint

Design 1: Sequential Schedule
Alice owns the room
Bob can enter when Alice is finished
Repeat for remaining tasks
No possibility for conflicts

Sequential Performance
[Figure: time axis 1, 2, 3, 4, …]
Elapsed time for Alice: 4
Elapsed time for Bob: 4
Total elapsed time: 4*N
Latency:
Throughput:
Concurrency:
Can we do better?
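As a rough check on the numbers above, here is a minimal Python sketch of the sequential schedule and of the CPU time relation. The function names (`sequential_schedule`, `cpu_time`) and the sample workload (1000 instructions, CPI of 1, 800 ps cycle) are illustrative assumptions, not values from the lecture.

```python
def sequential_schedule(num_builders, steps_per_build=4):
    """Return (start, finish) times when one builder owns the room at a time.

    Time is counted in whole steps; a builder starts only after the previous
    builder has finished all of its steps (Saw, Drill, Glue, Paint).
    """
    schedule = []
    clock = 0
    for _ in range(num_builders):
        start = clock
        clock += steps_per_build
        schedule.append((start, clock))
    return schedule


def cpu_time(instruction_count, cpi, clock_cycle_time):
    """CPU time = instruction count x CPI x clock cycle time."""
    return instruction_count * cpi * clock_cycle_time


if __name__ == "__main__":
    n = 3  # hypothetical number of builders
    plan = sequential_schedule(n)
    for i, (start, finish) in enumerate(plan):
        print(f"builder {i}: start={start} finish={finish} elapsed={finish - start}")
    print("total elapsed:", plan[-1][1])  # equals 4 * n

    # Hypothetical workload: 1000 instructions, CPI of 1, 800 ps cycle.
    print("cpu time (ps):", cpu_time(1000, 1, 800))
```

Each builder's elapsed time stays at 4 no matter how many builders there are, while the total grows as 4*N, which is the cost the pipelined design tries to reduce.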
Design 2: Pipelined Design
Partition room into stages of a pipeline
[Figure: Dave, Carol, Bob, and Alice, one per stage]
One person owns a stage at a time
4 stages
4 people working simultaneously
Everyone moves right in lockstep

Pipelined Performance
[Figure: time axis 1, 2, 3, 4, 5, 6, 7, …]
Latency:
Throughput:
Concurrency:

Lessons
Principle: Throughput increased by parallel execution
Pipelining:
• Identify pipeline stages
• Isolate stages from each other
• Resolve pipeline hazards (Thursday)

A Processor
Review: Single cycle processor
[Figure: single-cycle datapath, repeated from the review]

A Processor
[Figure: the same datapath divided into five stages: Instruction Fetch, Instruction Decode, Execute (compute jump/branch targets), Memory, WriteBack]

Basic Pipeline
Five stage “RISC” load-store architecture
1. Instruction Fetch (IF)
   – get instruction from memory, increment PC
2. Instruction Decode (ID)
   – translate opcode into control signals and read registers
3. Execute (EX)
   – perform ALU operation, compute jump/branch targets
4. Memory (MEM)
   – access memory if needed
5. Writeback (WB)
   – update register file

Time Graphs
[Figure: time graph over clock cycles 1 to 9; five instructions (the first two labeled add and lw) each pass through IF, ID, EX, MEM, WB, each starting one cycle after the previous one]
Latency:
Throughput:
Concurrency:

Principles of Pipelined Implementation
• Break instructions across multiple clock cycles (five, in this case)
• Design a separate stage for the execution performed during each clock cycle
• Add pipeline registers (flip-flops) to isolate signals between different stages

Pipelined Processor
See: P&H Chapter 4.6

A Pipelined Processor
[Figure: pipelined datapath; the Instruction Fetch, Instruction Decode, Execute, Memory, and WriteBack stages are separated by the IF/ID, ID/EX, EX/MEM, and MEM/WB pipeline registers, which carry data (A, B, D, M, imm, PC+4) and control (ctrl) between stages]

IF
Stage 1: Instruction Fetch
Fetch a new instruction every cycle
• Current PC is index to instruction memory
• Increment the PC at end of cycle (assume no branches for now)
Write values of interest to pipeline register (IF/ID)
• Instruction bits (for later decoding)
• PC+4 (for later computing branch targets)
[Figure, built up over three slides: IF stage; PC drives the instruction memory addr (mc = 00 = read word); a +4 adder produces PC+4; new pc is chosen by pcsel among PC+4, pcreg, pcrel, and pcabs; inst and PC+4 are written to IF/ID]

ID
Stage 2: Instruction Decode
On every cycle:
• Read IF/ID pipeline register to get instruction bits
• Decode instruction, generate control signals
• Read from register file
Write values of interest to pipeline register (ID/EX)
• Control information, Rd index, immediates, offsets, …
• Contents of Ra, Rb
• PC+4 (for computing branch targets later)
[Figure: ID stage between IF/ID and ID/EX; decode, extend, and the register file (read ports Ra, Rb giving A, B; write port Rd, D, WE fed by result and dest); outputs ctrl, imm, A, B, PC+4]

EX
Stage 3: Execute
On every cycle:
• Read ID/EX pipeline register to get values and control bits
• Perform ALU operation
• Compute targets (PC+4+offset, etc.) in case this is a branch
• Decide if jump/branch should be taken
Write values of interest to pipeline register (EX/MEM)
• Control information, Rd index, …
• Result of ALU operation
• Value in case this is a memory store instruction
[Figure: EX stage between ID/EX and EX/MEM; ALU on A and B (or imm) producing D; + for pcrel and || for j (pcabs) targets; pcreg; branch? decision driving pcsel; B and ctrl passed along]

MEM
Stage 4: Memory
On every cycle:
• Read EX/MEM pipeline register to get values and control bits
• Perform memory load/store if needed
  – address is ALU result
Write values of interest to pipeline register (MEM/WB)
• Control information, Rd index, …
• Result of memory operation
• Pass result of ALU operation
[Figure: MEM stage between EX/MEM and MEM/WB; data memory with addr = D, din = B, dout = M, operation set by mc; D and ctrl passed along; branch? and target (pcrel, pcabs, pcreg) drive pcsel]

WB
Stage 5: Write-back
On every cycle:
• Read MEM/WB pipeline register to get values and control bits
• Select value and write to register file
[Figure: WB stage after MEM/WB; ctrl selects M or D as result, written to register dest]
[Figure: complete five-stage datapath; PC, +4, inst mem, IF/ID, register file (Ra, Rb, Rd), ID/EX, ALU, EX/MEM, data mem (addr, din, dout), MEM/WB, with OP and Rd carried through each pipeline register]

Administrivia
Required: partner for group project
Project1 (PA1) and Homework2 (HW2) are both out
PA1 Design Doc and HW2 due in one week, start early
Work alone on HW2, but in group for PA1
Save your work!
• Save often. Verify file is non-zero. Periodically save to Dropbox, email.
• Beware of Mac OS X 10.5 (Leopard) and 10.6 (Snow Leopard)
Use your resources
• Lab Section, Piazza.com, Office Hours, Homework Help Session,
• Class notes, book, Sections, CSUGLab

Administrivia
Check online syllabus/schedule
• http://www.cs.cornell.edu/Courses/CS3410/2013sp/schedule.html
Slides and Reading for lectures
Office Hours
Homework and Programming Assignments
Prelims (in evenings):
• Tuesday, February 26th
• Thursday, March 28th
• Thursday, April 25th
Schedule is subject to change

Collaboration, Late, Re-grading Policies
“Black Board” Collaboration Policy
• Can discuss approach together on a “black board”
• Leave and write up solution independently
• Do not copy solutions
Late Policy
• Each person has a total of four “slip days”
• Max of two slip days for any individual assignment
• Slip days deducted first for any late assignment, cannot selectively apply slip days
• For projects, slip days are deducted from all partners
• 20% deducted per day late after slip days are exhausted
Regrade policy
• Submit written request to lead TA, and lead TA will pick a different grader
• Submit another written request, lead TA will regrade directly
• Submit yet another written request for professor to regrade.
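Back to the pipeline. The time graph from the Basic Pipeline slides (one instruction entering IF per cycle, each advancing one stage per cycle) can be generated mechanically. What follows is a minimal Python sketch that assumes no hazards or stalls. `time_graph`, `total_cycles`, and `render` are illustrative names, and `i3` to `i5` stand in for the instructions the slide's graph leaves unlabeled.

```python
STAGES = ["IF", "ID", "EX", "MEM", "WB"]


def time_graph(instructions):
    """Map each instruction to {cycle: stage}, assuming no hazards or stalls.

    Instruction i (0-based) enters IF in cycle i + 1 and advances one stage
    per cycle.
    """
    graph = []
    for i, name in enumerate(instructions):
        row = {i + 1 + s: stage for s, stage in enumerate(STAGES)}
        graph.append((name, row))
    return graph


def total_cycles(num_instructions):
    """Cycles until the last instruction leaves WB (0 for an empty program)."""
    if num_instructions == 0:
        return 0
    return num_instructions + len(STAGES) - 1


def render(graph):
    """Return the time graph as text, one row per instruction."""
    last = total_cycles(len(graph))
    lines = ["      " + "".join(f"{c:>5}" for c in range(1, last + 1))]
    for name, row in graph:
        cells = "".join(f"{row.get(c, ''):>5}" for c in range(1, last + 1))
        lines.append(f"{name:<6}" + cells)
    return "\n".join(lines)


if __name__ == "__main__":
    program = ["add", "lw", "i3", "i4", "i5"]  # i3..i5 are placeholder names
    print(render(time_graph(program)))
    n = len(program)
    print("latency per instruction:", len(STAGES), "cycles")
    print("total cycles:", total_cycles(n))
    print("average throughput:", n / total_cycles(n), "instructions/cycle")
```

With five instructions the sketch reports nine cycles in total, matching the nine clock cycles on the slide's time axis. As the program grows, the average throughput approaches one instruction per cycle even though each instruction still takes five cycles from IF to WB.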
Example: Sample Code (Simple)
Assume eight-register machine
Run the following code on a pipelined datapath

add   r3 r1 r2     ; reg 3 = reg 1 + reg 2
nand  r6 r4 r5     ; reg 6 = ~(reg 4 & reg 5)
lw    r4 20(r2)    ; reg 4 = Mem[reg2+20]
add   r5 r2 r5     ; reg 5 = reg 2 + reg 5
sw    r7 12(r3)    ; Mem[reg3+12] = reg 7

Slides thanks to Sally McKee

Example: Sample Code (Simple)

add   r3, r1, r2;
nand  r6, r4, r5;
lw    r4, 20(r2);
add   r5, r2, r5;
sw    r7, 12(r3);

MIPS instruction formats
All MIPS instructions are 32 bits long and come in 3 formats

R-type   op (6 bits) | rs (5 bits) | rt (5 bits) | rd (5 bits) | shamt (5 bits) | func (6 bits)
I-type   op (6 bits) | rs (5 bits) | rt (5 bits) | immediate (16 bits)
J-type   op (6 bits) | immediate (target address) (26 bits)

MIPS Instruction Types
Arithmetic/Logical
• R-type: result and two source registers, shift amount
• I-type: 16-bit immediate with sign/zero extension
Memory Access
• load/store between registers and memory
• word, half-word and byte operations
Control flow
• conditional branches: pc-relative addresses
• jumps: fixed offsets, register absolute

Time Graphs
[Figure: time graph over clock cycles 1 to 9 for add, nand, lw, add, sw; each instruction passes through IF, ID, EX, MEM, WB, each starting one cycle after the previous one]
Latency:
Throughput:
Concurrency:

[Figure: pipelined datapath for the example; PC, +4 adder, instruction memory, register file (R0 to R7, read ports regA and regB giving valA and valB), extend, ALU, data memory, and muxes, separated by the IF/ID, ID/EX, EX/MEM, and MEM/WB pipeline registers; instruction bits 11-15, 16-20, and 26-31 feed the dest and op fields; all pipeline registers start at 0]

[Figures: datapath snapshots at Time 1 through Time 9. The instruction in each stage, as labeled on the snapshots:]

Time   IF            ID            EX            MEM           WB
1      add 3 1 2
2      nand 6 4 5    add 3 1 2
3      lw 4 20(2)    nand 6 4 5    add 3 1 2
4      add 5 2 5     lw 4 20(2)    nand 6 4 5    add 3 1 2
5      sw 7 12(3)    add 5 2 5     lw 4 20(2)    nand 6 4 5    add 3 1 2
6                    sw 7 12(3)    add 5 2 5     lw 4 20(2)    nand 6 4 5
7      nop           nop           sw 7 12(3)    add 5 2 5     lw 4 20(2)
8      nop           nop           nop           sw 7 12(3)    add 5 2 5
9      nop           nop           nop           nop           sw 7 12(3)

No more instructions are fetched from Time 6 on.
Register file as shown in the snapshots: initially R0..R7 = 0 36 9 12 18 7 41 22; R3 becomes 45 at Time 5, R6 becomes -3 at Time 6, R4 becomes 99 at Time 7 (loaded from address 29), and R5 becomes 16 at Time 8. At Time 8 the sw writes 22 to data memory address 57.

Pipelining Recap
Powerful technique for masking latencies
• Logically, instructions execute one at a time
• Physically, instructions execute in parallel
  – Instruction level parallelism
Abstraction promotes decoupling
• Interface (ISA) vs. implementation (Pipeline)
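To tie the recap back to the sample code, here is a minimal Python sketch that steps the five instructions through IF, ID, EX, MEM, and WB one cycle at a time and records the register file after each cycle. The initial register values and Mem[29] = 99 are read off the slide snapshots. The tuple encoding of instructions, the `simulate` function, and the per-instruction `latch` dictionaries are illustrative assumptions, and the sketch has no hazard handling.

```python
# Initial state read off the Time 1 snapshot; Mem[29] = 99 is the value the
# slides show the lw returning. The rest of memory is assumed empty.
INITIAL_REGS = [0, 36, 9, 12, 18, 7, 41, 22]  # R0..R7
INITIAL_MEM = {29: 99}

# (op, x, y, z): add/nand use (dest, srcA, srcB); lw/sw use (reg, base, offset).
PROGRAM = [
    ("add", 3, 1, 2),    # reg 3 = reg 1 + reg 2
    ("nand", 6, 4, 5),   # reg 6 = ~(reg 4 & reg 5)
    ("lw", 4, 2, 20),    # reg 4 = Mem[reg 2 + 20]
    ("add", 5, 2, 5),    # reg 5 = reg 2 + reg 5
    ("sw", 7, 3, 12),    # Mem[reg 3 + 12] = reg 7
]


def simulate(program, regs, mem):
    """Return (history, mem); history holds (cycle, register tuple) pairs."""
    regs, mem = list(regs), dict(mem)
    latch = [{} for _ in program]  # values each instruction carries along
    history = []
    for cycle in range(1, len(program) + 5):
        # Older instructions are visited first, so a write-back in a cycle
        # is visible to a younger instruction's register read in that cycle.
        for i, (op, x, y, z) in enumerate(program):
            stage = cycle - (i + 1)  # 0=IF, 1=ID, 2=EX, 3=MEM, 4=WB
            v = latch[i]
            if stage == 1:    # ID: read registers
                if op in ("add", "nand"):
                    v["A"], v["B"] = regs[y], regs[z]
                else:         # lw/sw: base register, plus the store value
                    v["A"], v["B"] = regs[y], regs[x]
            elif stage == 2:  # EX: ALU operation or address computation
                if op == "add":
                    v["D"] = v["A"] + v["B"]
                elif op == "nand":
                    v["D"] = ~(v["A"] & v["B"])
                else:
                    v["D"] = v["A"] + z
            elif stage == 3:  # MEM: load or store at the ALU result
                if op == "lw":
                    v["M"] = mem[v["D"]]
                elif op == "sw":
                    mem[v["D"]] = v["B"]
            elif stage == 4:  # WB: update the register file
                if op in ("add", "nand"):
                    regs[x] = v["D"]
                elif op == "lw":
                    regs[x] = v["M"]
        history.append((cycle, tuple(regs)))
    return history, mem


if __name__ == "__main__":
    history, mem = simulate(PROGRAM, INITIAL_REGS, INITIAL_MEM)
    for cycle, regs in history:
        print(f"Time {cycle}: R0..R7 = {regs}")
    print("Mem[57] =", mem[57])
```

Under these assumptions the register file reaches 0 36 9 45 99 16 -3 22 and Mem[57] holds 22, the same final state as executing the five instructions one at a time, even though up to five of them are in flight at once.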