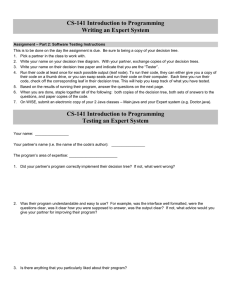

Efficient User-Level Networking in Java Chi-Chao Chang

Efficient User-Level Networking in Java

Chi-Chao Chang

Dept. of Computer Science

Cornell University

(joint work with Thorsten von Eicken and the Safe Language Kernel group)

Goal

High-performance cluster computing with safe languages

parallel and distributed applications

communication support for operating systems

Use off-the-shelf technologies

User-level network interfaces (UNIs)

direct, protected access to network devices

inexpensive clusters

U-Net (Cornell), Shrimp (Princeton), FM (UIUC), Hamlyn (HP)

Virtual Interface Architecture (VIA): emerging UNI standard

Java

safe: “better C++”

“write once run everywhere”

growing interest for high-performance applications (Java Grande)

Make the performance of UNIs available from Java

JAVIA: a Java interface to VIA

Why a Java Interface to UNI?

Different approach for providing communication support for Java

Traditional “front-end” approach

pick favorite abstraction (sockets, RMI, MPI) and Java VM

write a Java front-end to custom or existing native libraries

good performance, re-use proven code

magic in native code, no common solution

Javia: exposes UNI to Java

minimizes amount of unverified code

isolates bottlenecks in data transfer

1. automatic memory management

2. object serialization

[Figure: software stack, top to bottom: Apps; RMI, RPC; Sockets; Active Messages, MPI, FM; UNI; Networking Devices. The upper layers are labeled Java, the lower layers C.]

Contribution I

PROBLEM: lack of control over object lifetime/location due to GC

EFFECT: conventional techniques (data copying and buffer pinning) yield 10% to 40% hit in array throughput

SOLUTION: jbufs: explicit, safe buffer management in Java

SUPPORT: modifications to GC

RESULT: BW within 1% of hardware, independent of xfer size

[Figure: two plots of array throughput (MB/s, 0 to 80) vs. transfer size (0 to 32 Kbytes). "Array Throughput": raw vs. conventional techniques 1 to 4. "Array Throughput with Jbufs": raw vs. jbufs.]

Contribution II

PROBLEM: linked, typed objects

EFFECT: serialization >> send/recv overheads (~1000 cycles)

SOLUTION: jstreams: in-place object unmarshaling

SUPPORT: object layout information

RESULT: serialization ~ send/recv overheads; unmarshaling overhead independent of object size

[Figure: readObject per-object overhead (cycles, 0 to 35000) vs. object size (bytes); series: Serial (MS JVM5.0), Serial (Marmot), jstream/Java, jstream/C.]

Outline

Background

UNI: Virtual Interface Architecture

Java

Experimental Setup

Javia Architecture

Javia-I: native buffers (baseline)

Javia-II: jbufs (buffer management) and jstreams (marshaling)

Summary and Conclusions

UNI in a Nutshell

Enabling technology for networks of workstations

direct, protected access to networking devices

Traditional

all communication via OS

VIA

connections between virtual interfaces (Vi)

apps send/recv through Vi, simple mux in NI

OS only involved in setting up Vis

Generic Architecture

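implemented in hardware, software or both

[Figure: traditional architecture (all traffic through the OS to the NI) next to VIA (applications hold virtual interfaces, V, that reach the NI directly; the OS sits beside the data path).]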

VI Structures

Key Data Structures

user buffers

buffer descriptors <addr, len>: layout exposed to user

send/recv queues: only through API calls

Structures are pinned to physical memory

address translation in adapter

[Figure: application memory holds the library, buffers, descriptors, sendQ and recvQ; the adapter reaches them by DMA and is notified through doorbells.]

Key Points

direct DMA access to buffers/descr in user-space

application must allocate, use, re-use, free all buffers/desc

alloc&pin, unpin&free are expensive operations, but re-use is cheap

Java Storage Safety

class Buffer {

byte[] data;

Buffer(int n) { data = new byte[n]; }

}

No control over object placement

Buffer buf = new Buffer(1024);

cannot pin after allocation: GC can move objects

No control over de-allocation

buf = null;

drop all references, call or wait for GC;

Result: additional data copying in communication path

Java Type Safety

Cannot forge a reference to a Java object

e.g. cannot cast between byte arrays and objects

No control over object layout

field ordering is up to the Java VM

objects have runtime metadata

casting with runtime checks

Object o = (Object) new Buffer(1024); /* up cast: OK */

Buffer buf = (Buffer) o; /* down cast: runtime check */

array bounds check

for (int i = 0; i < 1024; i++) buf.data[i] = (byte) i; /* int to byte needs an explicit cast */

Result: expensive object marshaling

[Figure: memory layout behind buf: a Buffer object (vtable, lock) pointing to a byte[] object (vtable, lock, length 1024, elements 0, 1, 2, ...).]
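
The two runtime checks named on this slide can be observed directly. The sketch below is illustrative only: the class name TypeSafetyDemo and its method names are made up for this example, and Buffer simply mirrors the class from the Java Storage Safety slide.

```java
// Illustrative sketch: TypeSafetyDemo and its method names are hypothetical.
class TypeSafetyDemo {
    // Mirrors the Buffer class shown on the earlier slide.
    static class Buffer {
        byte[] data;
        Buffer(int n) { data = new byte[n]; }
    }

    // A down cast compiles, but the JVM checks the object's real class at run time.
    static boolean badDownCastIsRejected() {
        Object o = "not a buffer";
        try {
            Buffer b = (Buffer) o;      // runtime check fails here
            return b == null;           // not reached
        } catch (ClassCastException e) {
            return true;
        }
    }

    // Every array store is bounds-checked against the array's length field.
    static boolean outOfBoundsIsRejected() {
        Buffer buf = new Buffer(1024);
        try {
            buf.data[1024] = 1;         // valid indices are 0..1023
            return false;
        } catch (ArrayIndexOutOfBoundsException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println("bad down cast rejected: " + badDownCastIsRejected());
        System.out.println("out-of-bounds store rejected: " + outOfBoundsIsRejected());
    }
}
```

Both checks rely on the per-object metadata (class pointer, array length) that the slide lists, which is why received bytes cannot simply be reinterpreted as objects.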

Marmot

Java System from Microsoft Research

not a VM

static compiler: bytecode (.class) to x86 (.asm)

linker: asm files + runtime libraries -> executable (.exe)

no dynamic loading of classes

most Dragon book opts, some OO and Java-specific opts

Advantages

source code

good performance

two types of non-concurrent GC (copying, conservative)

native interface “close enough” to JNI

Example: Cluster @ Cornell

Configuration

8 P-II 450MHz, 128MB RAM

8 1.25 Gbps Giganet GNN-1000 adapter

one Giganet switch

total cost: ~ $30,000 (w/university discount)

GNN1000 Adapter

mux implemented in hardware

device driver for VI setup

VIA interface in user-level library (Win32 dll)

no support for interrupt-driven reception

Base-line pt-2-pt Performance

14us r/t latency, 16us with switch

over 100MBytes/s peak, 85MBytes/s with switch

Outline

Background

Javia Architecture

Javia-I: native buffers (baseline)

Javia-II: jbufs and jstreams

Summary and Conclusions

Javia: General Architecture

Java classes + C library

Javia-I

baseline implementation

array transfers only

no modifications to Marmot

native library: buffer mgmt + wrapper calls to VIA

Javia-II

array and object transfers

buffer mgmt in Java

special support from Marmot

native library: wrapper calls to VI

[Figure: layered architecture, top to bottom: Apps; Javia classes (Java, on Marmot); Javia C library; Giganet VIA library; GNN1000 Adapter.]

Javia-I: Exploiting Native Buffers

Basic Asynch Send/Recv

buffers/descr in native library

Java send/recv ticket rings mirror VI queues

# of descr/buffers == # tickets in ring

[Figure, Java side: byte array ref in the GC heap, send/recv ticket ring, Vi.]

Send Critical Path

get free ticket from ring

copy from array to buffer

free ticket

Recv Critical Path

obtain corresponding ticket in ring

copy data from buffer to array

free ticket from ring

[Figure, C side: descriptor, send/recv queue, and buffer inside VIA, below the Java/C boundary.]
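
The send and receive critical paths above can be sketched as a single-process loopback. This is not the Javia-I code: the class TicketRingSketch, its Ticket type, and the method names are assumptions made for illustration, plain byte arrays stand in for pinned native buffers, and a second arraycopy stands in for the adapter's DMA transfer.

```java
import java.util.ArrayDeque;

// Hypothetical loopback model of the Javia-I ticket rings; all names are illustrative.
class TicketRingSketch {
    static final class Ticket {
        final byte[] buffer;   // stands in for a pinned native buffer
        int len;
        Ticket(int size) { buffer = new byte[size]; }
    }

    private final ArrayDeque<Ticket> freeSend = new ArrayDeque<>();
    private final ArrayDeque<Ticket> freeRecv = new ArrayDeque<>();
    private final ArrayDeque<Ticket> filledRecv = new ArrayDeque<>();

    // One buffer per ticket, as on the slide (# buffers == # tickets in ring).
    TicketRingSketch(int tickets, int bufSize) {
        for (int i = 0; i < tickets; i++) {
            freeSend.add(new Ticket(bufSize));
            freeRecv.add(new Ticket(bufSize));
        }
    }

    // Send path: get free ticket, copy array -> buffer, free ticket on completion.
    boolean send(byte[] data, int off, int len) {
        Ticket s = freeSend.peek();
        Ticket r = freeRecv.peek();
        if (s == null || r == null || len > s.buffer.length) return false;
        freeSend.poll();
        freeRecv.poll();
        System.arraycopy(data, off, s.buffer, 0, len);     // the copy Javia-I pays for
        System.arraycopy(s.buffer, 0, r.buffer, 0, len);   // stand-in for the DMA transfer
        r.len = len;
        filledRecv.add(r);
        freeSend.add(s);                                   // send completed
        return true;
    }

    // Recv path: obtain the filled ticket, copy buffer -> array, free the ticket.
    byte[] recv() {
        Ticket r = filledRecv.poll();
        if (r == null) return null;
        byte[] out = new byte[r.len];
        System.arraycopy(r.buffer, 0, out, 0, r.len);
        freeRecv.add(r);
        return out;
    }

    public static void main(String[] args) {
        TicketRingSketch ring = new TicketRingSketch(2, 16);
        ring.send(new byte[] {1, 2, 3}, 0, 3);
        System.out.println("received " + ring.recv().length + " bytes");
    }
}
```

The two arraycopy calls on the Java side (into the send buffer, out of the receive buffer) are the per-byte costs that the later jbufs design sets out to remove.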

Javia-I: Variants

Two Send Variants:

Sync Send + Copy

goal: bypass send ring

one ticket

array -> buffer copy

wait until send completes

Sync Send + Pin:

goal: bypass send ring, avoid copy

pin array on the fly

wait until send completes

unpin after send

One Recv Variant:

No-Post Recv + Alloc

goal: bypass recv ring

allocate array on the fly, copy data

[Figure: byte array ref in the GC heap, send/recv ticket ring, and Vi on the Java side; descriptor, send/recv queue, and buffer in VIA on the C side.]

Javia-I: Performance

Basic Costs:

VIA pin + unpin = (10 + 10)us

Marmot: native call = 0.28us, locks = 0.25us, array alloc = 0.75us

Latency: N = transfer size in bytes

raw: 16.5us + (25ns) * N

pin(s): 38.0us + (38ns) * N

copy(s): 21.5us + (42ns) * N

copy(s)+alloc(r): 18.0us + (55ns) * N

BW: 75% to 85% of raw, 6KByte switch over between copy and pin
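
The linear latency models above are easy to evaluate for a concrete transfer size. The sketch below is a worked calculation only; the class and method names (LatencyModel, latencyUs, crossoverBytes) are made up for this example, and the coefficients are the ones quoted on the slide. Setting the pin(s) and copy(s) lines equal gives (38.0 - 21.5) us / (42 - 38) ns per byte = 4125 bytes, which is a latency crossover under these fitted lines and should not be confused with the 6 KByte bandwidth switch-over reported above.

```java
// Worked arithmetic for the slide's latency fits; names are illustrative.
class LatencyModel {
    // latency in microseconds = fixed cost (us) + per-byte cost (ns) * n / 1000
    static double latencyUs(double fixedUs, double perByteNs, int n) {
        return fixedUs + perByteNs * n / 1000.0;
    }

    // Transfer size in bytes at which two linear models predict the same latency.
    static double crossoverBytes(double fixedA, double nsA, double fixedB, double nsB) {
        return (fixedA - fixedB) * 1000.0 / (nsB - nsA);
    }

    public static void main(String[] args) {
        String[] names = {"raw", "pin(s)", "copy(s)", "copy(s)+alloc(r)"};
        double[] fixedUs = {16.5, 38.0, 21.5, 18.0};
        double[] perByteNs = {25, 38, 42, 55};
        int n = 1024;
        for (int i = 0; i < names.length; i++) {
            System.out.printf("%-18s %.1f us at N=%d%n",
                    names[i], latencyUs(fixedUs[i], perByteNs[i], n), n);
        }
        System.out.printf("pin(s)/copy(s) latency crossover: %.0f bytes%n",
                crossoverBytes(38.0, 38, 21.5, 42));
    }
}
```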

[Figure: latency (us, 0 to 400) vs. transfer size (0 to 8 Kbytes) and bandwidth (MB/s, 0 to 80) vs. transfer size (0 to 32 Kbytes); series: raw, copy(s), pin(s), copy(s)+alloc(r), pin(s)+alloc(r).]

jbufs

Lessons from Javia-I

managing buffers in C introduces copying and/or pinning overheads

can be implemented in any off-the-shelf JVM

Motivation

eliminate excess per-byte costs in latency

improve throughput

jbuf: exposes communication buffers to Java programmers

1. lifetime control: explicit allocation and de-allocation of jbufs

2. efficient access: direct access to jbuf as primitive-typed arrays

3. location control: safe de-allocation and re-use by controlling whether or not a jbuf is part of the GC heap

jbufs: Lifetime Control

public class jbuf {

public static jbuf alloc(int bytes);/* allocates jbuf outside of GC heap */

public void free() throws CannotFreeException; /* frees jbuf if it can */

}

[Figure: a C pointer refers to the jbuf, which sits outside the GC heap.]

1. jbuf allocation does not result in a Java reference to it

cannot directly access the jbuf through the wrapper object

2. jbuf is not automatically freed if there are no Java references to it

free has to be explicitly called

jbufs: Efficient Access

public class jbuf {

/* alloc and free omitted */

public byte[] toByteArray() throws TypedException;/*hands out byte[] ref*/

public int[] toIntArray() throws TypedException; /*hands out int[] ref*/

. . .

}

[Figure: a Java byte[] ref from the GC heap points into the jbuf.]

3. (Memory Safety) jbuf remains allocated as long as there are array references to it

when can we ever free it?

4. (Type Safety) jbuf cannot have two differently typed references to it at any given time

when can we ever re-use it (e.g. change its reference type)?

jbufs: Location Control

public class jbuf {

/* alloc, free, toArrays omitted */

public void unRef(CallBack cb); /* app intends to free/re-use jbuf */

}

Idea: Use GC to track references

unRef: application claims it has no references into the jbuf

jbuf is added to the GC heap

GC verifies the claim and notifies application through callback

application can now free or re-use the jbuf

Required GC support: change scope of GC heap dynamically

[Figure: a jbuf and its Java byte[] ref shown relative to the GC heap in three stages, separated by unRef and callBack.]

jbufs: Runtime Checks

[Figure: jbuf state diagram. States: Unref, ref<p>, to-be-unref<p>. Transition labels: alloc, free, to<p>Array, unRef, GC*, plus the combined labels "to<p>Array, GC" and "to<p>Array, unRef".]

Type safety: ref and to-be-unref states parameterized by primitive type

GC* transition depends on the type of garbage collector

non-copying: transition only if all refs to array are dropped before GC

copying: transition occurs after every GC
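
The state rules on this slide can be sketched as a small state machine. This is a simulation under stated assumptions, not the Marmot implementation: JbufStateSketch, Prim, and gc(boolean) are names invented for the example, no memory is actually allocated outside the heap, IllegalStateException stands in for the TypedException and CannotFreeException of the jbuf interface, and the collector is modeled by a call that says whether array references survived. For a copying collector, which per the slide makes the transition after every GC, the call would always pass false.

```java
// Hypothetical model of the jbuf runtime checks; no real off-heap memory is involved.
class JbufStateSketch {
    enum State { UNREF, REF, TO_BE_UNREF, FREED }
    enum Prim { BYTE, INT }

    private State state = State.UNREF;   // state right after alloc
    private Prim type;                   // the <p> in ref<p> and to-be-unref<p>
    private Runnable callback;

    State state() { return state; }

    // to<p>Array: the first call fixes the type; later calls must use the same type.
    void toArray(Prim p) {
        if (state == State.FREED) throw new IllegalStateException("jbuf was freed");
        if (state == State.UNREF) { type = p; state = State.REF; return; }
        if (type != p) throw new IllegalStateException("jbuf is typed as " + type);
    }

    // unRef: the application claims it holds no more references into the jbuf.
    void unRef(Runnable cb) {
        if (state != State.REF && state != State.TO_BE_UNREF)
            throw new IllegalStateException("nothing to un-reference in " + state);
        callback = cb;
        state = State.TO_BE_UNREF;
    }

    // GC*: the collector verifies the claim and, if it holds, notifies the application.
    void gc(boolean arrayStillReachable) {
        if (state != State.TO_BE_UNREF || arrayStillReachable) return;
        state = State.UNREF;
        type = null;
        Runnable cb = callback;
        callback = null;
        if (cb != null) cb.run();
    }

    // free: only legal once no typed references can exist.
    void free() {
        if (state != State.UNREF) throw new IllegalStateException("cannot free in " + state);
        state = State.FREED;
    }

    public static void main(String[] args) {
        JbufStateSketch j = new JbufStateSketch();
        j.toArray(Prim.BYTE);
        j.unRef(() -> System.out.println("callback: jbuf can be freed or re-used"));
        j.gc(false);
        j.free();
        System.out.println("final state: " + j.state());
    }
}
```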

Javia-II: Exploiting jbufs

Send/recv with jbufs

explicit pinning/unpinning of jbufs

tickets point to pinned jbufs

critical path: synchronized access to rings, but no copies

Additional checks

send posts allowed only if jbuf is in ref<p> state

recv posts allowed only if jbuf is in unref or ref<p> state

no outstanding send/recv posts in to-be-unref<p> state

[Figure: jbuf (with its state and array refs from the GC heap), send/recv ticket ring, and Vi on the Java side; descriptor and send/recv queue in VIA on the C side.]

Javia-II: Performance

Basic Costs

allocation = 1.2us, to*Array = 0.8us, unRefs = 2.5 us

Latency (n = xfer size)

raw: 16.5us + (0.025us) * n

jbufs: 20.5us + (0.025us) * n

pin(s): 38.0us + (0.038us) * n

copy(s): 21.5us + (0.042us) * n

BW: within margin of error (< 1%)

[Figure: latency (us, 0 to 400) vs. transfer size (0 to 8 Kbytes) and bandwidth (MB/s, 0 to 80) vs. transfer size (0 to 32 Kbytes); series: raw, jbufs, copy, pin.]

Parallel Matrix Multiplication

Goal: validate jbufs flexibility and performance in Java apps

matrices represented as array of jbufs (each jbuf accessed as array of doubles)

A, B, C distributed across processors (block columns)

comm phase: processor sends local portion of A to right neighbor, recv new A from left neighbor

comp phase: Cloc = Cloc + Aloc * Bloc’

[Figure: C += A * B, each matrix split into block columns owned by p0 p1 p2 p3.]

Preliminary Results

no fancy instruction scheduling in Marmot

no fancy cache-conscious optimizations

single processor, 128x128: only 15 Mflops

cluster, 128x128

comm time about 10% of total time

Impact of Jbufs will increase as #flops increase

[Figure: scaling plot, both axes 1 to 8, x-axis Procs; series: linear, jbufs, copy, pin.]
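
The comm/comp structure above can be checked with a single-process simulation. This is a sketch under assumptions, not the benchmark code: RingMatMulSketch and its multiply method are invented names, ordinary double[][] matrices replace the arrays of jbufs, and "sending A to the right neighbor" is modeled by rotating which block column of A each simulated processor currently holds. It assumes the processor count p divides the matrix dimension.

```java
// Single-process simulation of the ring algorithm; names and data layout are illustrative.
class RingMatMulSketch {
    // a, b are n x n; processor q owns block column q (width n / p) of A, B and C.
    static double[][] multiply(double[][] a, double[][] b, int p) {
        int n = a.length;
        int w = n / p;                       // width of one block column
        double[][] c = new double[n][n];
        int[] held = new int[p];             // held[q]: which block column of A is at processor q
        for (int q = 0; q < p; q++) held[q] = q;

        for (int step = 0; step < p; step++) {
            // comp phase: Cloc += Aloc * (the matching w x w block of Bloc)
            for (int q = 0; q < p; q++) {
                int k = held[q];
                for (int i = 0; i < n; i++) {
                    for (int j = q * w; j < (q + 1) * w; j++) {
                        double sum = 0;
                        for (int t = k * w; t < (k + 1) * w; t++) sum += a[i][t] * b[t][j];
                        c[i][j] += sum;
                    }
                }
            }
            // comm phase: every processor passes its A block to the right neighbor
            int[] next = new int[p];
            for (int q = 0; q < p; q++) next[(q + 1) % p] = held[q];
            held = next;
        }
        return c;
    }

    public static void main(String[] args) {
        double[][] a = {{1, 2}, {3, 4}};
        double[][] b = {{5, 6}, {7, 8}};
        double[][] c = multiply(a, b, 2);
        System.out.println(java.util.Arrays.deepToString(c));
    }
}
```

After p steps every processor has seen every block column of A exactly once, so its block column of C is complete; each step pairs one computation phase with one neighbor exchange.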

Active Messages

Goal: Exercise jbuf mgmt

Implemented subset of AM-II over Javia+jbufs:

maintains a pool of free recv jbufs

when msg arrives, jbuf is passed to the handler

AM calls unRef on jbuf after handler invocation

if pool is empty, either alloc more jbufs or invoke GC

no copying in critical path, deferred to GC-time if needed

class First extends AMHandler {

private int first;

void handler(AMJbuf buf, …) {

int[] tmp = buf.toIntArray();

first = tmp[0];

}

}

class Enqueue extends AMHandler {

private Queue q;

void handler(AMJbuf buf, …) {

int[] tmp = buf.toIntArray();

q.enq(tmp);

}

}

AM: Preliminary Numbers

[Figure: latency (us, 0 to 200) vs. message size (0 to 8 Kbytes) and bandwidth (MBps, 0 to 80) vs. message size (0 to 32 Kbytes); series: raw, Javia+jbufs, Javia+copy, AM.]

Summary

AM latency about 15 us higher than Javia

synch access to buffer pool, endpoint header, flow control checks, handler id lookup

room for improvement

AM BW within 5% of peak for 16KByte messages

jstreams

Goal: efficient transmission of arbitrary objects

assumption: optimizing for homogeneous hosts and Java systems

Idea: “in-place” unmarshaling

defer copying and allocation to GC-time if needed

jstream

R/W access to jbuf through object stream API

no changes in Javia-II architecture

[Figure: “typical” path (writeObject, NETWORK, readObject) compared with “in-place” readObject.]

jstream: Implementation

writeObject

deep-copy of object, breadth-first

deals with cyclic data structures

replace object metadata (e.g. vtable) with 64-bit class descriptor

readObject

depth-first traversal from beginning of stream

swizzle pointers, type-checking, array-bounds checking

replace class descriptors with metadata

Required support

some object layout information (e.g. per-class pointer-tracking info)

Minimal changes to existing stub compilers (e.g. rmic)

jstream implements JDK2.0 ObjectStream API
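
The write and read steps above can be illustrated with a toy wire format. This is a sketch under several assumptions, not the jstream implementation: JstreamSketch, Node, NODE_DESCRIPTOR, and the four-int record layout are all invented for the example. writeObject does a breadth-first deep copy that handles cycles and replaces references with record indices; readObject checks the class descriptor, bounds-checks every reference, and swizzles indices back into pointers. Unlike jstreams, the read side uses two linear passes instead of a depth-first traversal and allocates fresh objects, since plain Java has no way to reuse the received bytes in place.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.IdentityHashMap;
import java.util.List;
import java.util.Map;

// Toy marshaling sketch; the record layout and all names are hypothetical.
class JstreamSketch {
    static final class Node {
        int value;
        Node left, right;
        Node(int value) { this.value = value; }
    }

    static final int NODE_DESCRIPTOR = 0x4E4F4445; // made-up class descriptor
    static final int RECORD = 4;                   // descriptor, value, left ref, right ref
    static final int NULL_REF = -1;

    // Breadth-first deep copy; the identity map makes shared and cyclic nodes appear once.
    static int[] writeObject(Node root) {
        Map<Node, Integer> index = new IdentityHashMap<>();
        List<Node> order = new ArrayList<>();
        ArrayDeque<Node> queue = new ArrayDeque<>();
        if (root != null) { index.put(root, 0); order.add(root); queue.add(root); }
        while (!queue.isEmpty()) {
            Node n = queue.poll();
            for (Node child : new Node[] { n.left, n.right }) {
                if (child != null && !index.containsKey(child)) {
                    index.put(child, order.size());
                    order.add(child);
                    queue.add(child);
                }
            }
        }
        int[] stream = new int[order.size() * RECORD];
        for (int i = 0; i < order.size(); i++) {
            Node n = order.get(i);
            stream[i * RECORD] = NODE_DESCRIPTOR;  // replaces the object's metadata
            stream[i * RECORD + 1] = n.value;
            stream[i * RECORD + 2] = n.left == null ? NULL_REF : index.get(n.left);
            stream[i * RECORD + 3] = n.right == null ? NULL_REF : index.get(n.right);
        }
        return stream;
    }

    // Type-check each record, then swizzle record indices back into references.
    static Node readObject(int[] stream) {
        if (stream.length % RECORD != 0) throw new IllegalArgumentException("truncated stream");
        int count = stream.length / RECORD;
        Node[] nodes = new Node[count];
        for (int i = 0; i < count; i++) {
            if (stream[i * RECORD] != NODE_DESCRIPTOR)
                throw new IllegalArgumentException("unknown class descriptor");
            nodes[i] = new Node(stream[i * RECORD + 1]);
        }
        for (int i = 0; i < count; i++) {
            nodes[i].left = resolve(nodes, stream[i * RECORD + 2]);
            nodes[i].right = resolve(nodes, stream[i * RECORD + 3]);
        }
        return count == 0 ? null : nodes[0];
    }

    private static Node resolve(Node[] nodes, int ref) {
        if (ref == NULL_REF) return null;
        if (ref < 0 || ref >= nodes.length) throw new IllegalArgumentException("reference out of bounds");
        return nodes[ref];
    }

    public static void main(String[] args) {
        Node a = new Node(1);
        a.left = new Node(2);
        a.left.right = a;                          // cycle back to the root
        Node copy = readObject(writeObject(a));
        System.out.println("cycle preserved: " + (copy.left.right == copy));
    }
}
```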

jstreams: Safety

[Figure: jstream state diagram. States: Unref, Unref w/obj, Ref, to-be unref. Transition labels: alloc, free, writeObject, clearWrite, readObject, clearRead, GC*, plus the combined labels "writeObject, GC" and "readObject, GC". Annotations on the states: only recv posts allowed; only send posts allowed; no outstanding send/recv posts; no send/recv posts allowed.]

jstream: Performance

[Figure: per-object overhead (us, 0 to 80) vs. object size (16 and 160 bytes), one panel for readObject and one for writeObject; series: Serial (MS JVM5.0), Serial (Marmot), jstream/Java, jstream/C.]

Status

Implementation Status

Javia-I and II complete

jbufs and jstreams integrated with Marmot copying collector

Current Work

finish implementation of AM-II

full implementation of Java RMI

integrate jbufs and jstreams with conservative collector

more investigation into deferred copying in higher-level protocols

Related Work

Fast Java RMI Implementations

Manta (Vrije U): compiler support for marshaling, Panda communication system

34 us null, 51 Mbytes/s (85% of raw) on PII-200/Myrinet, JDK1.4

KaRMI (Karlsruhe): ground-up implementation

117 us null, Alpha 500, Para-station, JDK1.4

Other front-end approaches

Java front-end for MPI (IBM), Java-to-PVM interface (GaTech)

Microsoft J-Direct

“pinned” arrays defined using source-level annotations

JIT produces code to “redirect” array access: expensive

Comm System Design in Safe Languages (e.g. ML)

Fox Project (CMU): TCP/IP layer in ML

Ensemble (Cornell): Horus in ML, buffering strategies, data path optimizations

Summary

High-Performance Communication in Java: Two problems

buffer management in the presence of GC

object marshaling

Javia: Java Interface to VIA

uses native buffers as baseline implementation

jbufs: safe, explicit control over buffer placement and lifetime,

eliminates bottlenecks in critical path

jstreams: jbuf extension for fast, in-place unmarshaling of objects

Concluding Remarks

building blocks for Java apps and communication software

should be integral part of a high-performance Java system

Javia-I: Interface

package cornell.slk.javia;

public class ViByteArrayTicket {

private byte[] data; private int len, off, tag;

/* public methods to set/get fields */

}

public class Vi { /* connection to remote Vi */

public void sendPost(ViByteArrayTicket t); /* asynch send */

public ViByteArrayTicket sendWait(int timeout);

public void recvPost(ViByteArrayTicket t); /* async recv */

public ViByteArrayTicket recvWait(int timeout);

public void send(byte[] b, int len, int off, int tag); /* sync send */

public byte[] recv(int timeout); /* post-less recv */

}

Javia-II: Interface

package cornell.slk.javia;

public class ViJbuf extends jbuf {

public ViJbufTicket register(Vi vi); /* reg + pin jbuf */

public void deregister(ViJbufTicket t); /* unreg + unpin jbuf */

}

public class ViJbufTicket {

private ViJbuf buf; private int len, off, tag;

}

public class Vi {

public void sendBufPost(ViJbufTicket t); /* asynch send */

public ViJbufTicket sendBufWait(int usecs);

public void recvBufPost(ViJbufTicket t); /* async recv */

public ViJbufTicket recvBufWait(int usecs);

}

Jbufs: Implementation

alloc/free: Win32 VirtualAlloc, VirtualFree

to{Byte,Int,...}Array: no alloc/copying

clearRefs:

modification to stop-and-copy Cheney scan GC

clearRef adds a jbuf to that list

after GC, traverse list to invoke callbacks, delete list

[Figure: jbuf descriptor (baseAddr, native desc ptr) and array layout (vtable, lock, length, array body).]

[Figure: heap before and after GC. Before: Stack + Global roots, from-space, ref’d jbufs, to-space. After: Stack + Global roots, to-space, from-space, unref’d jbufs.]

State-of-the-Art Matrix Multiplication

332 MHz PowerPC 604e

[Figure: bar chart of MFLOPS (axis 0 to 350) for plain, nocheck, blocking, unrolling, scalar, fma, C++, ESSL; labeled values: 4.9, 199.9, 314.2.]

Courtesy: IBM Research