Reliable Distributed Systems Scalability

Scalability

Today we’ll focus on how things scale

Basically: look at a property that matters

Make something “bigger”

Like the network, the number of groups, the

number of members, the data rate

Then measure the property and see impact

Often we can “hope” that no slowdown

would occur. But what really happens?

Stock Exchange Problem:

Sometimes, someone is slow…

Most members are

healthy….

… but one is slow

i.e. something is contending with the red process,

delaying its handling of incoming messages…

With a slow receiver, throughput

collapses as the system scales up

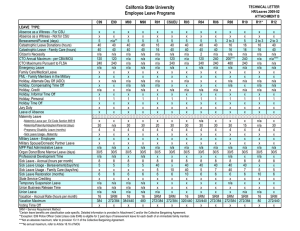

[Figure: Virtually synchronous Ensemble multicast protocols. Average throughput on nonperturbed members versus perturb rate (0 to 0.9), plotted for group sizes 32, 64, and 96.]

Why does this happen?

Superficially, because data for the slow

process piles up in the sender’s buffer,

causing flow control to kick in

(prematurely)

But why does the problem grow worse as

a function of group size, with just one

“red” process?

Small perturbations happen all the time

Broad picture?

Virtual synchrony works well under bursty

loads

And it scales to fairly large systems (SWX

uses a hierarchy to reach ~500 users)

From what we’ve seen so far, this is about as good

as it gets for reliability

Recall that stronger reliability models like Paxos

are costly and scale far worse

Desired: steady throughput under heavy load

and stress

Protocols famous for

scalability

Scalable reliable multicast (SRM)

Reliable Multicast Transport Protocol (RMTP)

On-Tree Efficient Recovery using Subcasting (OTERS)

Several others: TMP, MFTP, MFTP/EC...

But when stability is tested under stress, every

one of these protocols collapses just like virtual

synchrony!

Example: Scalable Reliable

Multicast (SRM)

Originated in work on Wb and Mbone

Idea is to do “local repair” if messages are lost; various optimizations keep load low and repair costs localized

Wildly popular for internet “push,” seen

as solution for Internet radio and TV

But receiver-driven reliability model

lacks “strong” reliability guarantees

Local Repair Concept

[Animation: a packet is lost on its way to some receivers. Receipt of a subsequent packet triggers a NACK for the missing packet, and a retransmission follows. Other processes receive useless NAKs and duplicate repairs.]

Limitations?

SRM runs in the application, not the router, hence IP multicasts of NACKs and retransmissions tend to reach many or all processes

Lacking knowledge of who should receive

each message, SRM has no simple way to

know when a message can be garbage

collected at the application layer

Probabilistic rules to suppress duplicates

In practice?

As the system grows large the

“probabilistic suppression” fails

More and more NAKs are sent in duplicate

And more and more duplicate data messages are sent as multiple receivers respond to the same NAK

Why does this happen?

Visualizing how SRM collapses

Think of sender as the hub of a wheel

Messages depart in all directions

Loss can occur at many places “out there” and they could be

far apart…

Hence NAK suppression won’t work

Causing multiple NAKs

And the same reasoning explains why any one NAK is likely

to trigger multiple retransmissions!

Experiments have confirmed that SRM overheads

soar with deployment size

Every message triggers many NAKs and many

retransmissions until the network finally melts down

Dilemma confronting

developers

Application is extremely critical: stock

market, air traffic control, medical

system

Hence need a strong model, guarantees

But these applications often have a

soft-realtime subsystem

Steady data generation

May need to deliver over a large scale

Today: introduce a new design point

Bimodal multicast (pbcast) is reliable in a sense

that can be formalized, at least for some

networks

Generalization for larger class of networks should be

possible but maybe not easy

Protocol is also very stable under steady load

even if 25% of processes are perturbed

Scalable in much the same way as SRM

Environment

Will assume that most links have known

throughput and loss properties

Also assume that most processes are

responsive to messages in bounded

time

But can tolerate some flakey links and

some crashed or slow processes.

Start by using unreliable multicast to rapidly

distribute the message. But some messages

may not get through, and some processes may

be faulty. So initial state involves partial

distribution of multicast(s)

Periodically (e.g. every 100ms) each process

sends a digest describing its state to some

randomly selected group member. The digest

identifies messages. It doesn’t include them.

Recipient checks the gossip digest against its

own history and solicits a copy of any missing

message from the process that sent the gossip

Processes respond to solicitations received

during a round of gossip by retransmitting the

requested message. The round lasts much longer

than a typical RPC time.
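The gossip steps above can be sketched in a few lines of Python. This is a minimal illustration, not the pbcast implementation: the names `Process`, `gossip_round`, and `run_until_complete` are hypothetical, solicitation and retransmission are collapsed into a single in-memory copy, and processes take their turns one after another rather than concurrently.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Process:
    name: str
    # message id -> payload, for every multicast this process currently holds
    history: dict = field(default_factory=dict)

    def digest(self):
        """Identify the held messages without including their bodies."""
        return set(self.history)

    def receive_gossip(self, sender):
        """Compare the sender's digest with our history and fetch what we lack."""
        missing = sender.digest() - self.digest()
        for msg_id in sorted(missing):
            # Solicitation and retransmission are collapsed into one step here.
            self.history[msg_id] = sender.history[msg_id]
        return missing


def gossip_round(processes, rng):
    """Each process sends its digest to one randomly selected other member."""
    for sender in processes:
        target = rng.choice([p for p in processes if p is not sender])
        target.receive_gossip(sender)


def run_until_complete(processes, all_ids, rng, max_rounds=100):
    """Return the number of rounds until every process holds every message id."""
    for round_no in range(1, max_rounds + 1):
        gossip_round(processes, rng)
        if all(p.digest() == all_ids for p in processes):
            return round_no
    return None
```

To try it, give one process the initial multicast (for example `procs[0].history = {1: "a", 2: "b"}`) and call `run_until_complete(procs, {1, 2}, random.Random(1))`; the return value is the number of rounds the repair took in that run.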

Delivery? Garbage Collection?

Deliver a message when it is in FIFO order

Garbage collect a message when you believe

that no “healthy” process could still need a

copy (we used to wait 10 rounds, but now

are using gossip to detect this condition)

Match parameters to intended environment

Need to bound costs
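A rough sketch of the delivery and garbage collection rules, assuming a single sender that numbers its multicasts from 0. The class `FifoReceiver` and the parameter `gc_rounds` are hypothetical; the sketch uses the older fixed rule (wait a set number of rounds) rather than gossip-based detection, and it never gives up on a gap, which a real protocol would have to do.

```python
class FifoReceiver:
    """FIFO delivery for one sender, with round-based garbage collection."""

    def __init__(self, gc_rounds=10):
        self.gc_rounds = gc_rounds  # rounds to keep a delivered message
        self.next_seq = 0           # next sequence number to deliver
        self.buffer = {}            # seq -> (payload, round in which it arrived)
        self.delivered = []         # payloads handed to the application, in order

    def receive(self, seq, payload, current_round):
        if seq in self.buffer or seq < self.next_seq:
            return  # duplicate of something already held or delivered
        self.buffer[seq] = (payload, current_round)
        # Deliver every message that is now in FIFO order.
        while self.next_seq in self.buffer:
            self.delivered.append(self.buffer[self.next_seq][0])
            self.next_seq += 1

    def collect_garbage(self, current_round):
        """Drop delivered messages that have been held for gc_rounds rounds."""
        expired = [s for s, (_, r) in self.buffer.items()
                   if s < self.next_seq and current_round - r >= self.gc_rounds]
        for s in expired:
            del self.buffer[s]
        return sorted(expired)
```

Delivered messages stay in the buffer until collected so that the process can still answer solicitations for them.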

Worries:

Someone could fall behind and never catch

up, endlessly loading everyone else

What if some process has lots of stuff

others want and they bombard him with

requests?

What about scalability in buffering and in

list of members of the system, or costs of

updating that list?

Optimizations

Request retransmissions most recent

multicast first

Idea is to “catch up quickly” leaving at

most one gap in the retrieved sequence

Optimizations

Participants bound the amount of data

they will retransmit during any given

round of gossip. If too much is solicited

they ignore the excess requests
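One way to picture the retransmission bound: a process gathers the solicitations that arrived in a round and serves them until a per-round budget is used up. The function name, the byte budget, and the choice to serve the newest messages first (mirroring the most-recent-first request order) are assumptions made for this sketch.

```python
def select_retransmissions(solicited, sizes, budget_bytes):
    """Pick which solicited messages to resend in this round of gossip.

    solicited: iterable of sequence numbers requested by peers
    sizes: dict mapping sequence number -> message size in bytes
    budget_bytes: hypothetical per-round limit on retransmitted data
    """
    chosen, used = [], 0
    # Serve the most recent multicasts first (an assumption of this sketch).
    for seq in sorted(set(solicited), reverse=True):
        if seq not in sizes:
            continue  # we do not hold this message
        if used + sizes[seq] > budget_bytes:
            break  # budget exhausted: ignore the excess requests
        chosen.append(seq)
        used += sizes[seq]
    return chosen
```

Requests that are dropped are not lost for good: the requester still lacks the message, so it can solicit it again after a later gossip digest.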

Optimizations

Label each gossip message with the sender's gossip round number

Ignore solicitations that carry an expired round number, reasoning that they arrived very late and hence are probably no longer correct
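The round-number check is small enough to show directly. The `Solicitation` record and its field names are hypothetical; the only rule taken from the slide is that a request tagged with an earlier round is ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Solicitation:
    requester: str
    msg_id: int
    gossip_round: int  # round number copied from the gossip message being answered


def accept_solicitation(request, current_round):
    """Serve a request only if it refers to the responder's current gossip round."""
    return request.gossip_round == current_round
```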

Optimizations

Don’t retransmit same message twice in

a row to any given destination (the

copy may still be in transit hence

request may be redundant)

Optimizations

Use IP multicast when retransmitting a

message if several processes lack a copy

For example, if solicited twice

Also, if a retransmission is received from “far

away”

Tradeoff: excess messages versus low latency

Use regional TTL to restrict multicast scope

Scalability

Protocol is scalable except for its use of

the membership of the full process

group

Updates could be costly

Size of list could be costly

In large groups, would also prefer not

to gossip over long high-latency links

Can extend pbcast to solve both

Could use IP multicast to send initial message. (Right now, we have a tree-structured alternative, but to use it, need to know the membership)

Tell each process only about some

subset k of the processes, k << N

Keeps costs constant.
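A partial membership view can be sketched as follows. How views are chosen and refreshed is not described here, so the uniform random choice below is an assumption, and the function names are hypothetical.

```python
import random


def make_partial_views(members, k, rng):
    """Give each member a random view of k other members, with k << N."""
    views = {}
    for m in members:
        others = [x for x in members if x != m]
        views[m] = rng.sample(others, min(k, len(others)))
    return views


def pick_gossip_target(member, views, rng):
    """Choose a gossip target from the member's own view only."""
    return rng.choice(views[member])
```

Each process then stores and updates k entries instead of N, which is what keeps the membership cost independent of group size.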

Router overload problem

Random gossip can overload a central

router

Yet information flowing through this

router is of diminishing quality as rate

of gossip rises

Insight: constant rate of gossip is

achievable and adequate

[Figure: senders and receivers in clusters joined by a link with limited bandwidth (1500Kbits) and a high noise rate; bandwidth of the other links is 10Mbits.]

[Figure: load on the WAN link (msgs/sec) and latency to delivery (ms), comparing uniform and hierarchical gossip; x-axes are time (sec) and group size.]

Hierarchical Gossip

Weight gossip so that probability of

gossip to a remote cluster is smaller

Can adjust weight to have constant load

on router

Now propagation delays rise… but just

increase rate of gossip to compensate
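The weighting can be illustrated with a small calculation. Assume each of the processes in a cluster sends one gossip message per round and the aim is a fixed expected number of messages crossing the router; the function names and the `wan_budget` parameter are hypothetical.

```python
import random


def remote_gossip_probability(cluster_size, wan_budget):
    """Chance that a process gossips across the router in a given round.

    With cluster_size processes each sending one gossip message per round,
    the expected number crossing the router is cluster_size * p. Fixing that
    product at wan_budget keeps the router load constant as the cluster grows.
    """
    return min(1.0, wan_budget / cluster_size)


def pick_target(local_peers, remote_peers, p_remote, rng):
    """Gossip to a remote peer with probability p_remote, else to a local one."""
    if remote_peers and rng.random() < p_remote:
        return rng.choice(remote_peers)
    return rng.choice(local_peers)
```

Because p shrinks as the cluster grows, a message takes more rounds to cross between clusters, which is the rise in propagation delay that a faster gossip rate is meant to offset.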

[Figure: two panels plotted against group size (0 to 30), one in msgs/sec and one showing propagation time (ms), each comparing uniform, hierarchical, and fast hierarchical gossip.]

Remainder of talk

Show results of formal analysis

We developed a model (won’t do the math

here -- nothing very fancy)

Used model to solve for expected reliability

Then show more experimental data

Real question: what would pbcast “do”

in the Internet? Our experience: it

works!

Idea behind analysis

Can use the mathematics of epidemic

theory to predict reliability of the

protocol

Assume an initial state

Now look at result of running B rounds

of gossip: converges exponentially

quickly towards atomic delivery
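The convergence claim can be illustrated with a mean-field recurrence. This is not the model used in the analysis; it is a simplified estimate that assumes every process holding the message gossips to `fanout` uniformly random peers per round, with no message loss and no failures.

```python
def expected_spread(n, initial, rounds, fanout=1):
    """Mean-field estimate of how many of n processes hold a message per round."""
    holders = float(initial)
    trace = [holders]
    for _ in range(rounds):
        # Chance that a given process without the message is missed by every
        # digest sent this round (each holder gossips to `fanout` random peers).
        miss = (1.0 - 1.0 / (n - 1)) ** (fanout * holders)
        holders += (n - holders) * (1.0 - miss)
        trace.append(holders)
    return trace
```

Once most processes hold the message, the expected number still missing it shrinks by roughly a constant factor each round, which is the exponential convergence the slide refers to.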

[Figure: Pbcast bimodal delivery distribution. p{#processes=k} on a log scale (1.E+00 down to 1.E-30) versus the number of processes to deliver pbcast (0 to 50). Annotations: either the sender fails… or data gets through w.h.p.]

Failure analysis

Suppose someone tells me what they

hope to “avoid”

Model as a predicate on final system

state

Can compute the probability that pbcast

would terminate in that state, again

from the model

Two predicates

Predicate I: A faulty outcome is one

where more than 10% but less than

90% of the processes get the multicast

… Think of a probabilistic Byzantine

General’s problem: a disaster if many

but not most troops attack

Two predicates

Predicate II: A faulty outcome is one where

roughly half get the multicast and failures

might “conceal” true outcome

… this would make sense if using pbcast to

distribute quorum-style updates to replicated

data. The costly hence undesired outcome is

the one where we need to rollback because

outcome is “uncertain”

Two predicates

Predicate I: More than 10% but less than

90% of the processes get the multicast

Predicate II: Roughly half get the multicast

but crash failures might “conceal” outcome

Easy to add your own predicate. Our

methodology supports any predicate over

final system state
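A predicate over the final system state can be written as an ordinary function and applied to a delivery distribution. In this sketch the distribution is a plain dictionary supplied by the caller, and the reading of Predicate II (the delivered count lies within the number of crashed processes of one half) is one possible interpretation, not the definition used in the analysis.

```python
def predicate_one(delivered, total):
    """Faulty if more than 10% but less than 90% of processes got the multicast."""
    fraction = delivered / total
    return 0.10 < fraction < 0.90


def predicate_two(delivered, total, crashed):
    """Faulty if roughly half delivered and crashes could conceal the outcome.

    Assumed reading: the delivered count is within `crashed` of total / 2.
    """
    return abs(delivered - total / 2) <= crashed


def failure_probability(distribution, predicate):
    """Sum the probability of every final state that the predicate marks faulty.

    distribution: dict mapping number of processes that delivered -> probability
    predicate: function taking that number and returning True for a faulty state
    """
    return sum(p for k, p in distribution.items() if predicate(k))
```

A custom predicate is added the same way: write a function of the final state and pass it to `failure_probability`.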

[Figure: Scalability of Pbcast reliability. P{failure} (log scale) versus #processes in system (10 to 60), for Predicate I and Predicate II.]

[Figure: Effects of fanout on reliability. P{failure} (log scale) versus fanout (1 to 10), for Predicate I and Predicate II.]

[Figure: Fanout required for a specified reliability. Fanout (4 to 9) versus #processes in system (20 to 50), for Predicate I at 1E-8 reliability and Predicate II at 1E-12 reliability.]

Figure 5: Graphs of analytical results (Pbcast bimodal delivery distribution; Scalability of Pbcast reliability; Effects of fanout on reliability; Fanout required for a specified reliability)

Discussion

We see that pbcast is indeed bimodal

even in worst case, when initial

multicast fails

Can easily tune parameters to obtain

desired guarantees of reliability

Protocol is suitable for use in

applications where bounded risk of

undesired outcome is sufficient

Model makes assumptions...

These are rather simplistic

Yet the model seems to predict behavior in

real networks, anyhow

In effect, the protocol is not merely robust to

process perturbation and message loss, but

also to perturbation of the model itself

Speculate that this is due to the incredible

power of exponential convergence...

Experimental work

SP2 is a large network

Nodes are basically UNIX workstations

Interconnect is basically an ATM network

Software is standard Internet stack (TCP, UDP)

We obtained access to as many as 128 nodes

on Cornell SP2 in Theory Center

Ran pbcast on this, and also ran a second

implementation on a real network

Example of a question

Create a group of 8 members

Perturb one member in style of Figure 1

Now look at “stability” of throughput

Measure rate of received messages during

periods of 100ms each

Plot histogram over life of experiment
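The measurement described above can be sketched as follows, assuming arrival timestamps in milliseconds. The function name and the return format are choices made for this illustration.

```python
from collections import Counter


def throughput_histogram(arrival_times, window=100):
    """Count messages per window and histogram those per-window counts.

    arrival_times: message arrival timestamps, in the same unit as `window`
    window: length of each measurement period (100 ms in the experiment)
    Returns a dict mapping messages-per-window -> fraction of windows.
    """
    if not arrival_times:
        return {}
    start = min(arrival_times)
    end = max(arrival_times)
    n_windows = int((end - start) / window) + 1
    per_window = [0] * n_windows
    for t in arrival_times:
        per_window[int((t - start) / window)] += 1
    counts = Counter(per_window)
    return {rate: c / n_windows for rate, c in sorted(counts.items())}
```

A steady protocol concentrates the histogram around one rate; a protocol that stalls and then bursts spreads it out, with many empty windows.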

Source to dest latency distributions

[Figure: Histogram of throughput for pbcast and Histogram of throughput for Ensemble's FIFO Virtual Synchrony Protocol. Probability of occurrence versus inter-arrival spacing (ms), for the traditional protocol and for pbcast, each with .05 and .45 sleep probability.]

Notice that in practice, bimodal multicast is fast!

Now revisit Figure 1 in detail

Take 8 machines

Perturb 1

Pump data in at varying rates, look at

rate of received messages

Revisit our original scenario with

perturbations (32 processes)

[Figure: High bandwidth and low bandwidth comparisons of pbcast performance at faulty and correct hosts. Average throughput versus perturb rate (0.1 to 0.9), for the traditional protocol and pbcast, measured at perturbed and unperturbed hosts.]

Throughput variation as a

function of scale

[Figure: mean and standard deviation of pbcast throughput (msgs/sec) versus perturb rate (0 to 0.5), for 16-member, 96-member, and 128-member groups; a fourth panel plots the standard deviation of pbcast throughput against process group size.]

Impact of packet loss on

reliability and retransmission rate

[Figure: Pbcast background overhead with 25% of processes perturbed. Retransmitted messages (%) versus perturb rate (0 to 0.5), for 8, 16, 64, and 128 nodes.]

[Figure: Pbcast with system-wide message loss, high and low bandwidth. Average throughput of receivers versus system-wide drop rate (0 to 0.2), for group sizes 8, 32, 64, and 96 at high bandwidth (hbw) and low bandwidth (lbw).]

Notice that when network becomes overloaded, healthy processes experience packet loss!

What about growth of

overhead?

Look at messages other than original

data distribution multicast

Measure worst case scenario: costs at

main generator of multicasts

Side remark: all of these graphs look

identical with multiple senders or if

overhead is measured elsewhere….

[Figure: average overhead (msgs/sec) versus perturb rate (0.1 to 0.5), in four panels: 8 nodes with 2 perturbed processes, 16 nodes with 4, 64 nodes with 16, and 128 nodes with 32.]

Growth of Overhead?

Clearly, overhead does grow

We know it will be bounded except for

probabilistic phenomena

At peak, load is still fairly low

Pbcast versus SRM, 0.1%

packet loss rate on all links

[Figure: PBCAST and SRM with system wide constant noise, on tree and star topologies. Requests/sec received and repairs/sec received versus group size (0 to 100), for SRM, adaptive SRM, Pbcast, and Pbcast-IPMC.]

Pbcast versus SRM: link

utilization

[Figure: PBCAST and SRM with system wide constant noise, tree topology. Link utilization on an outgoing link from the sender and on an incoming link to the sender versus group size (0 to 100), for Pbcast, Pbcast-IPMC, SRM, and Adaptive SRM.]

Pbcast versus SRM: 300 members on a 1000-node tree, 0.1% packet loss rate

[Figure: Pbcast and SRM with 0.1% system wide constant noise, 1000-node tree topology. Requests/sec received and repairs/sec received versus group size (0 to 120), for SRM, adaptive SRM, Pbcast, and Pbcast-IPMC.]

Pbcast versus SRM: Interarrival spacing (500 nodes, 300 members, 1.0% packet loss)

[Figure: 1% system wide noise, 500 nodes, 300 members. Percentage of occurrences versus latency (seconds, 0 to 0.5), in three panels: pbcast-grb and srm, with x-axes latency at node level and latency after FIFO ordering.]

Real Data: Spinglass on a 10Mbit

ethernet (35 Ultrasparc’s)

[Figure: #msgs versus time (0 to 100 sec), in two panels: injected noise with the retransmission limit disabled, and injected noise with the retransmission limit re-enabled.]

Networks structured as

clusters

[Figure: senders and receivers in clusters joined by a link with limited bandwidth (1500Kbits) and a high noise rate; bandwidth of the other links is 10Mbits.]

Delivery latency in a 2-cluster LAN,

50% noise between clusters, 1% elsewhere

[Figure: partitioned network, 50% noise between clusters, 1% system wide noise, n=80. Percentage of occurrences versus latency (seconds, 0 to 6), in panels for pbcast-grb, srm, and srm adaptive; x-axes are latency at node level and latency after FIFO ordering.]

Requests/repairs and latencies

with bounded router bandwidth

[Figure: limited bandwidth on router, 1% system wide constant noise. Requests/sec received and repairs/sec received versus group size (0 to 120), for pbcast-grb and srm.]

[Figure: limited bandwidth on router, noise=1%, n=100. Percentage of occurrences versus latency (seconds, 0 to 20), in panels for pbcast-grb and srm; x-axes are latency at node level and latency after FIFO ordering.]

Discussion

Saw that stability of protocol is exceptional

even under heavy perturbation

Overhead is low and stays low with system

size, bounded even for heavy perturbation

Throughput is extremely steady

In contrast, virtual synchrony and SRM both

are fragile under this sort of attack

Programming with pbcast?

Most often would want to split

application into multiple subsystems

Use pbcast for subsystems that generate

regular flow of data and can tolerate

infrequent loss if risk is bounded

Use stronger properties for subsystems

with less load and that need high

availability and consistency at all times

Programming with pbcast?

In stock exchange, use pbcast for pricing but

abcast for “control” operations

In hospital use pbcast for telemetry data but

use abcast when changing medication

In air traffic system use pbcast for routine

radar track updates but abcast when pilot

registers a flight plan change

Our vision: One protocol side-by-side with the other

Use virtual synchrony for replicated

data and control actions, where strong

guarantees are needed for safety

Use pbcast for high data rates, steady

flows of information, where longer term

properties are critical but individual

multicast is of less critical importance

Summary

New data point in a familiar spectrum

Virtual synchrony

Bimodal probabilistic multicast

Scalable reliable multicast

Demonstrated that pbcast is suitable for

analytic work

Saw that it has exceptional stability