Independent Study Summary 3/22/05 B. Golden


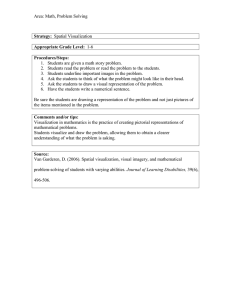

Presentation Methodology: Introduction

- Why use visualizations?
  - To facilitate user comprehension
  - To convey the complexity and intricacy of performance data
  - To help bridge the gap between raw performance data and performance improvements
- When to use visualizations?
  - On-line: visualization while the application is running (can slow down execution significantly)
  - Post mortem: after execution (usually based on trace data gathered at runtime)
- What to visualize?
  - Interactive displays to guide the user, not rationalize
  - Default visualizations should provide high-level views
  - Low-level information should be easily accessible

Visualization Concepts and Principles

- Context
  - Perspective: the point of view from which information is presented
  - Semantic context: the relationship between performance information and user data constructs
  - Sub-view mapping: a mapping between a subset of graphical views
- Scaling
  - Multidimensional/multivariate representation: a representation of data with many attributes per data point
  - Macroscopic/microscopic views: the level of detail represented by a given view
  - Micro/macro composition: showing both local detail and global structure
  - Adaptive display: the adjustment of a display's characteristics in response to data size
  - Display manipulation: interactive modification of a display (e.g., zooming, scrolling)
  - Composite view: synthesis of two or more views into a single view
- Comparison
  - Multiple views: the presentation of data from multiple perspectives
  - Small multiples: a series of images indexed by changes in other performance data (e.g., animation)
  - Cross-execution views: comparison of performance information from various program executions
- Extraction of information
  - Reduction and filtering: representing raw data by statistical summaries
  - Clustering: multivariate statistical analysis and presentation techniques for grouping or categorizing related data points
  - Encoding and abstracting: using graphical attributes (color, shape, size, etc.) to convey information
  - Separating information: differentiation among layers of information through color, highlighting, foreground/background, etc.

General Approaches to Performance Visualization

- General categories
  - Program specific: an application-specific way to show how computation progresses by animating data structures
  - System oriented: focus on the impact the application has on the system
  - System/application independent: depict performance data for a variety of systems and applications (see figure)
  - Meta-tools: facilitate the development of custom visualization tools
- Other categories
  - On-line: visualization during execution
    - Can be intrusive
    - Volume of information may be too large to interpret without playback functionality
    - Allows the user to observe only the interesting parts of execution
  - Post mortem: visualization after execution
    - Large trace files
    - Easier to implement
    - Users are accustomed to this format

Specific Features of Existing Visualization Strategies

- Animation
  - Has been employed by various tools to assist in program execution replay
  - Communication operations are the most commonly animated events
  - Viewing data dynamically may illuminate bottlenecks more efficiently
- Program graphs
  - A generalized picture of the whole system
  - Paradyn has this functionality
- Gantt charts
  - De facto standard for displaying inter-process communication
- Data access displays
  - Each cell of the 2D display is devoted to an element of the array
  - Color distinguishes between local/remote and read/write
- Critical path analysis
  - Concerned with identifying the program regions that contribute most to program execution time
  - Construct a graph that depicts synchronization and communication dependencies among the processes in the program

Summary of Visualizations

| Visualization Name | Advantages | Disadvantages | Include in the PAT | Used For |
| --- | --- | --- | --- | --- |
| Animation | Adds another dimension to visualizations | CPU intensive | Yes | Various |
| Program Graphs (N-ary tree) | Built-in zooming; integration of high- and low-level data | Difficult to see interprocess data | Maybe | Comprehensive program visualization |
| Gantt Charts (time histogram; timeline) | Ubiquitous; intuitive | Not as applicable to shared memory as to message passing | Yes | Communication graphs |
| Data Access Displays (2D array) | Provide detailed information regarding the dynamics of shared data | Narrow focus; users may not be familiar with this type of visualization | Maybe | Data structure visualization |
| Kiviat Diagrams | Provide an easy way to represent statistical data | Can be difficult to understand | Maybe | Various statistical data (processor utilization, cache miss rates, etc.) |
| Event Graph Displays (timeline) | Can be used to display multiple data types (event-based) | Mostly provide only high-level information | Maybe | Inter-process dependency |

Evaluation of User Interfaces

- General guidelines
  - Visualization should guide, not rationalize
  - Scalability is crucial
  - Color should inform, not entertain
  - Visualization should be interactive
  - Visualizations should provide meaningful labels
  - Default visualization should provide useful information
  - Avoid showing too much detail
  - Visualization controls should be simple
- GOMS
  - Goals, Operators, Methods, and Selection Rules
  - Formal user interface evaluation technique
  - A way to characterize a set of design decisions from the point of view of the user
  - A description of what the user must learn; may be the basis for reference documentation
  - The knowledge is described in a form that can actually be executed (there have been several fairly successful attempts to implement GOMS analysis in software, e.g., GLEAN)
  - There are various incarnations of GOMS with different assumptions, useful for more specific analyses (KLM, CMN-GOMS, NGOMSL, CPM-GOMS, etc.)

Simple GOMS Example

GOMS model for OS X

    Method for goal: delete a file.
      Step 1. Accomplish goal: drag file to trash.
      Step 2. Return with goal accomplished.

    Method for goal: move a file.
      Step 1. Accomplish goal: drag file to destination.
      Step 2. Return with goal accomplished.

GOMS model for UNIX

    Method for goal: delete a file.
      Step 1. Recall that command verb is "rm -f".
      Step 2. Think of directory name and file name and retain as first filespec.
      Step 3. Accomplish goal: enter and execute a command.
      Step 4. Return with goal accomplished.

    Method for goal: copy a file.
      Step 1. Recall that command verb is "cp".
      Step 2. Think of source directory name and file name and retain as first filespec.
      Step 3. Think of destination directory name and retain as second filespec.
      Step 4. Accomplish goal: enter and execute a command.
      Step 5. Return with goal accomplished.
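Since GOMS knowledge is described in a form that can be executed, the two models in the example can be written down as data and compared mechanically. The following is only a minimal sketch: the `Method` class, the `MODELS` dictionary, and the `summarize` helper are hypothetical names introduced for illustration, and counting steps is a crude stand-in for a real GOMS analysis, which would weight individual operators rather than treat every step equally.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Method:
    """One GOMS method: a goal plus the ordered steps that accomplish it."""
    goal: str
    steps: List[str] = field(default_factory=list)

    def step_count(self) -> int:
        return len(self.steps)


# Steps transcribed from the example; the data layout itself is an assumption.
MODELS: Dict[str, List[Method]] = {
    "OS X": [
        Method("delete a file", [
            "Accomplish goal: drag file to trash.",
            "Return with goal accomplished.",
        ]),
        Method("move a file", [
            "Accomplish goal: drag file to destination.",
            "Return with goal accomplished.",
        ]),
    ],
    "UNIX": [
        Method("delete a file", [
            'Recall that command verb is "rm -f".',
            "Think of directory name and file name and retain as first filespec.",
            "Accomplish goal: enter and execute a command.",
            "Return with goal accomplished.",
        ]),
        Method("copy a file", [
            'Recall that command verb is "cp".',
            "Think of source directory name and file name and retain as first filespec.",
            "Think of destination directory name and retain as second filespec.",
            "Accomplish goal: enter and execute a command.",
            "Return with goal accomplished.",
        ]),
    ],
}


def summarize(models: Dict[str, List[Method]]) -> Dict[str, int]:
    """Return the total number of steps across all methods of each model."""
    return {
        name: sum(method.step_count() for method in methods)
        for name, methods in models.items()
    }


if __name__ == "__main__":
    for name, total in summarize(MODELS).items():
        print(f"{name}: {total} steps")
```

Under this rough measure the UNIX model requires more steps, most of them recall and retention steps that the drag-and-drop methods avoid. Note that the two models do not cover identical goals (move versus copy), so the totals are indicative only.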
Conclusion

- Plan for development
  - Develop a preliminary interface that provides the functionality required by the user while conforming to the visualization guidelines presented previously
  - After the preliminary design is complete, elicit user feedback
  - During periods where user contact is unavailable, we may be able to use GOMS analysis or another formal interface evaluation technique

Other Tasks

- Usability survey update
  - Posts on MPI user discussion lists
  - Email to all the UPC/SHMEM users we know
  - One response so far
  - We should be able to get paper copies of the 30 responses from the APART survey
  - Gmail invites used to elicit more responses
  - Other potential avenues
    - Existing performance tool mailing lists
    - Use our other contacts (developers, etc.)
- Literature search
  - Usability
  - Regarding tool development