Integrated Data Analysis and Visualization Group 5 Report DOE Data Management Workshop

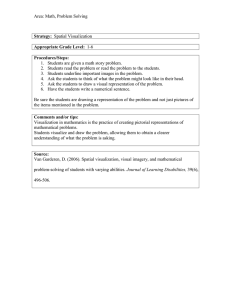

DOE Data Management Workshop, May 24-26, 2004, Chicago, IL

Group 5 Participants
• Wes Bethel, LBNL
• George Michaels, PNNL
• John Blondin, NC State
• Habib Najm, SNL
• Julian Bunn, Caltech
• John van Rosendale, DOE/HQ
• George Chin, PNNL
• Nagiza Samatova, ORNL
• Chris Ding, LBNL
• David Schissel, General Atomics
• Irwin Gaines, FNAL
• Gary Strand, NCAR
• Chandrika Kamath, LLNL
• Todd Smith, Geospiza, Inc.
• James Kohl, ORNL

Outline
• What is integrated data analysis and visualization? Why do we care?
• Data Complexity & Implications
• Applications-driven capabilities
• Technology gaps to address these capabilities
• General Recommendations
• Conclusions

The Curse of Ultrascale Computation and High-throughput Experimentation
• Computational and experimental advances enable the capture of complex natural phenomena on a scale not possible just a few years ago.
• With this opportunity comes a new problem: the petabyte quantities of data produced.
• As a result, answers to fundamental questions about the nature of the universe largely remain hidden in these data.
• How can we enable scientists to analyze and visualize these raw data to extract knowledge?
Tony’s Scenario
(Diagram: an end-to-end scenario mapped onto three software layers.)
• Task steps: Data Select, Data Access, Correlate, Render, Display
• Query: Select (density, pressure) From astro-data Where (step=101) (x-velocity>Y); Sample (density, pressure)
• Scientific Process Automation Layer: Workflow Design & Execution (Select Data, Take Sample, Run analysis, Run viz filter, Visualize scatter plot)
• Data Mining & Analysis Layer: Analysis Tool and VIZ Tool, each with Read Data (buffer-name) and Write Data
• Storage Efficient Access Layer: Bitmap Index Selection (Use Bitmap (condition), Get variables (var-names, ranges)), Parallel HDF, PVFS
• Hardware, OS, and MSS (HPSS)

Integration Must Happen at Multiple Levels
To enable end-to-end system performance, the 80-20 rule, and novel discoveries, integration must happen:
• Between and within data flow levels (Workflows, Analysis & Viz, Access & Movement)
• Across geographically distributed resources
• Across multiple data scales and resolutions

Challenge of Data Massiveness: Drinking from the firehose
• Climate. Now: 20-40 TB per simulated year. 5 yrs: 100 TB/yr; 5-10 PB/yr.
• Fusion. Now: 100 Mbytes/15 min. 5 yrs: 1000 Mbytes/2 min with realtime comparison with the running experiment, 500 Mbits/sec guaranteed (QoS).
• High Energy Physics. Now: 1-10 PB data stored, Gigabit net. 5 yrs: 100 PB data, 100 Gbits/sec net.
• Chemistry (Combustion and Nanostructures). Now: 10-30 TB data. 5 yrs: 30-100 TB data, 10 Gbits/sec (John Sharf’s multicast stats, LBL).

Most of this Data will NEVER Be Touched with the current trends in technology

The amount of data stored online quadruples every 18 months, while processing power “only” doubles every 18 months. Unless the number of processors increases unrealistically rapidly, most of this data will never be touched.

Storage device capacity doubles every 9 months, while memory capacity doubles every 18 months (Moore’s law).
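
The growth rates quoted above can be turned into a rough estimate of how fast the gap widens. The following is a minimal sketch, assuming idealized exponential growth at exactly the stated doubling and quadrupling periods; the function names are illustrative and do not come from the workshop material.

```python
def growth_factor(months: float, factor: float, period_months: float) -> float:
    """Multiplicative growth after `months` when a quantity grows by
    `factor` every `period_months` (idealized exponential growth)."""
    return factor ** (months / period_months)


def data_per_unit_processing(months: float) -> float:
    """Stored data relative to processing power, normalized to 1.0 today.
    Assumes data quadruples and processing doubles every 18 months."""
    return growth_factor(months, 4.0, 18.0) / growth_factor(months, 2.0, 18.0)


def storage_per_unit_memory(months: float) -> float:
    """Storage capacity relative to memory capacity, normalized to 1.0 today.
    Assumes storage doubles every 9 months and memory every 18 months."""
    return growth_factor(months, 2.0, 9.0) / growth_factor(months, 2.0, 18.0)


if __name__ == "__main__":
    # Print both ratios for a few time horizons (in years).
    for years in (1.5, 3, 5, 10):
        m = years * 12
        print(f"{years:>4} yr: data/processing x{data_per_unit_processing(m):.1f}, "
              f"storage/memory x{storage_per_unit_memory(m):.1f}")
```

Under these assumptions both ratios double every 18 months, so after five years each unit of processing power or memory faces roughly ten times as much data as it does today.
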
Even if these rates of growth converge, memory latency is and will remain the rate-limiting step in data-intensive computations.

Operating systems struggle to handle files larger than a few GB. OS constraints and memory capacity determine data set file size and fragmentation.

Challenge of Breaking the Algorithmic Complexity Bottleneck
MS Data Rates: 100’s GB to 10’s TB/day (2004); 1.0’s PB/day (2008)

Algorithmic complexity:
• Calculate means: O(n)
• Calculate FFT: O(n log(n))
• Calculate SVD: O(r • c)
• Clustering algorithms: O(n^2)

| Data size, n | n | n log(n) | n^2 |
|---|---|---|---|
| 100B | 10^-10 sec. | 10^-10 sec. | 10^-8 sec. |
| 10KB | 10^-8 sec. | 10^-8 sec. | 10^-4 sec. |
| 1MB | 10^-6 sec. | 10^-5 sec. | 1 sec. |
| 100MB | 10^-4 sec. | 10^-3 sec. | 3 hrs |
| 10GB | 10^-2 sec. | 0.1 sec. | 3 yrs. |

For illustration, the chart assumes 10^-12 sec. of calculation time per data point.

Massive Data Sets are Naturally Distributed BUT Effectively Immoveable (Skillicorn, 2001)
• Bandwidth is increasing, but not at the same rate as stored data. Some parts of the world have high available bandwidth, BUT there are enough bottlenecks that high effective bandwidth is unachievable across heterogeneous networks.
• Latency for transmission at global distances is significant. Most of this latency is time-of-flight and so will not be reduced by technology.
• Data has a property similar to inertia: it is cheap to store and cheap to keep moving, but the transitions between these two states are expensive in time and hardware.
• Legal and political restrictions
• Social restrictions: data owners may grant access to their data, but only while retaining control of it.

Questions raised:
• Should we move computations to the data, rather than data to the computations?
• Should we cache the data close to analysis and viz.?
• Should we be smarter about reducing the size of the data while keeping the same or richer information content?

Challenge of High Dimensionality, Multi-Scale and Multi-Resolution
(Figure: axis label Time.)
The experiment paradigm is changing to statistically capture the complexity.
We will get maximum value when we explore three or more dimensions/scales in a single experiment. (From G. Michaels, PNNL)
But multi-scale and multi-resolution analysis and visualization are in their infancy!

Know Our Limits & Be Smart
Obligations are two-sided: CS and Apps.
• It is not humanly possible to browse a petabyte of data. Analysis must select views or reduce to quantities of interest rather than push more views past the user.
• Ultrascale Simulations: Must be smart about which probe combinations to see!
• Physical Experiments: Must be smart about probe placement!
• Can we browse a petabyte of data? To see 1 percent of a petabyte at 10 megabytes per second takes 35 8-hour days! (1 percent of a petabyte is 10 TB; at 10 MB/s that is 10^6 seconds, or about 278 hours.)
• Analysis of full context must select views or reduce to quantities of interest, in addition to fast rendering of data.
(Figure: one axis, "More data", runs from Megabytes through Gigabytes and Terabytes to Petabytes; the other, "More analysis", runs from No Analysis through Region Selection to Analysis-driven summarization.)

Frame of Context Differences Suggest Needs in Hardware and Software for Analysis and Visualization
• Arguably, visualization can be the most critical step of a simulation experiment. But it has to be in a full context.
• “I hear and I forget. I see and I believe. I do Visual Analysis and I understand.” (Confucius, 551-479 BC)
• Need hardware and software for Full Context analysis and visualization. (From G. Ostrouchov, ORNL)
(Figure: frames of context for the major steps of the space-time simulation scientific discovery process. Labels: Visualization, Time, Space, Simulation, Analysis, Storage.)

But Tony Still Has a Dream: Internet “Plug-ins” for Ultrascale Computing!
(Figure labels: Paraview, ASPECT.)

From Dreams to Achievable Application-driven Capabilities
The first step in Group 5 discussion
Representation by Applications:
• Climate
• Biology
• Combustion
• Fusion
• HENP
• Supernova
Technology Gaps Ranking:
• Research & Development: 3
• Hardening Technology: 2
• Deployment & Maintenance: 1

Capability #1: IDL-like SCALABLE, open source environment (J. Blondin)
High performance-enabling technologies:
• Parallel analysis and viz. algorithms (e.g. pVTK, pMatlab, parallel-R) (3-2)
• Portable implementation on HPC platforms (2-1)
• Hardware accelerated implementations (GPUs, FPGAs) (2-1)
• Parallel I/O libraries coupled with analysis and viz (ROMIO+pVTK, pNetCDF+Parallel-R) (2-1)
• Information visualization (3-2)
Interoperability-enabling technologies:
• Component architectures (CCA) (3-2)
• Core data models and data structures unification (3-2)
• Structural, semantic and syntactic mediation (3-2)
Scripting environments:
• IDL/Matlab-like high-level programming languages (3)
• Optimized (parallel, accelerated) functions (core libraries) (3)
• Simulation interfaces (3)
• Visualization interfaces (information, statistical and scientific visualization) (3-2)

Capability #2: Domain-specific libraries and tools
Same technologies as for Capability #1, plus more.
Novel algorithms for domain-specific analysis and visualization:
• Feature extraction/selection/tracking (e.g., ICA for climate) (3-2)
• New types of data (e.g. trees, networks) (3-2)
• Interpolation & transformation (3-2)
• Multi-scale/hierarchical features correlation (3)
Novel data models if necessary (3)

Capability #3: “Plug and Play” Analysis and Visualization Envs
• Community-specific data model(s) (3): standardization that still provides efficiency and flexibility
• Community-specific common APIs (3): unified yet extensible data structures
• Common component architectures (3-2)

“i”-ntegration vs.
“I”-ntegration

Component integration strategies should be assessed both within a single higher-level application and between multiple higher-level applications.
“i”-ntegration within the same application:
• Same set of data structures => brute-force check (at worst)
• Same language, execution and control model
• Scripting languages (TCL, Python, R)
• Run on the same cluster of machines
“I”-ntegration across multiple applications:
• Different data structures (unknown for future apps)
• Different execution & control models
• Different programming languages
• Run on different hosts
Data Format Transformations:
• File to App
• App_X to App_X
• App_X to App_Y
• App to File

Capability #4: Feature (region) detection, extraction, tracking
Efficient and effective data indexing (3-2) that:
• Supports unstructured data in files (e.g., bitmap indexing extension to AMR)
• Supports heterogeneous/non-scalar data (e.g., vector fields, protein sequence, protein function, pathway, network)
• Supports on-demand derived data (e.g. F(X)/G(Y)<5: entropy as a function of indexed density and pressure)
Information visualization (3-2)

Other Capabilities
• Remote, collaborative & interactive analysis and visualization (3-2): network-aware analysis and viz; novel means of hiding latency (e.g. caching via LoCi, view-dependent isosurfaces)
• Sensitivity & uncertainty quantification (3)
• Streaming analysis & viz (3): approx. multi-res. algorithms; data transformations on streaming data
• Annotation & provenance of analysis and visualization results: system-, analysis & viz-, and data-level metadata
• Verification and validation
• Comparative analysis and visualization
Cross-cutting capabilities:
• Integration of analysis and visualization with workflows
• Integration of analysis and visualization with data bases (e.g., query-based)

General Recommendations
• Encourage open source software
• Move out mature technologies (??)
• Encourage/force data model(s) & APIs standardization efforts?
• Do not expect scientists to develop their domain-specific components; rather, fund collaborative CS & Apps teams. This will assure more robust and reusable solutions and take the burden of CS tasks off scientists.

Conclusions
• Integration must occur at multiple levels
• Integration is more easily achievable within a community than across communities
• Community-based data model(s) and APIs are required for “Plug & Play”
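
To make the last conclusion concrete, here is a minimal sketch of what a shared data model and a common component API could look like inside a single application. Everything in it is a hypothetical illustration: the `Field` record, the `Component` signature, and the helpers `select_above`, `summarize_mean` and `run_pipeline` are assumptions made for this example, not an API proposed by the group.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Field:
    """Hypothetical shared data model: a named variable with its values
    and free-form metadata recording what has been done to it."""
    name: str
    values: List[float]
    metadata: Dict[str, str] = field(default_factory=dict)


# Hypothetical common API: every component maps a Field to a Field.
Component = Callable[[Field], Field]


def select_above(threshold: float) -> Component:
    """Build a selection component that keeps values above `threshold`."""
    def run(f: Field) -> Field:
        kept = [v for v in f.values if v > threshold]
        return Field(f.name, kept, {**f.metadata, "filter": f"> {threshold}"})
    return run


def summarize_mean(f: Field) -> Field:
    """Analysis component: reduce a field to its mean value."""
    mean = sum(f.values) / len(f.values) if f.values else float("nan")
    return Field(f"mean({f.name})", [mean], dict(f.metadata))


def run_pipeline(data: Field, components: List[Component]) -> Field:
    """Chain components; this works only because they share one data model."""
    for component in components:
        data = component(data)
    return data


if __name__ == "__main__":
    density = Field("density", [1.0, 2.0, 3.0, 4.0])
    result = run_pipeline(density, [select_above(2.0), summarize_mean])
    print(result.name, result.values, result.metadata)
```

Because every component accepts and returns the same structure, a new selection, analysis or rendering step can be added without touching the others. Across applications with different data structures, languages and hosts, the same chaining would additionally need the mediation and format transformations listed earlier.
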