Tuesday, Week 7, Part 1


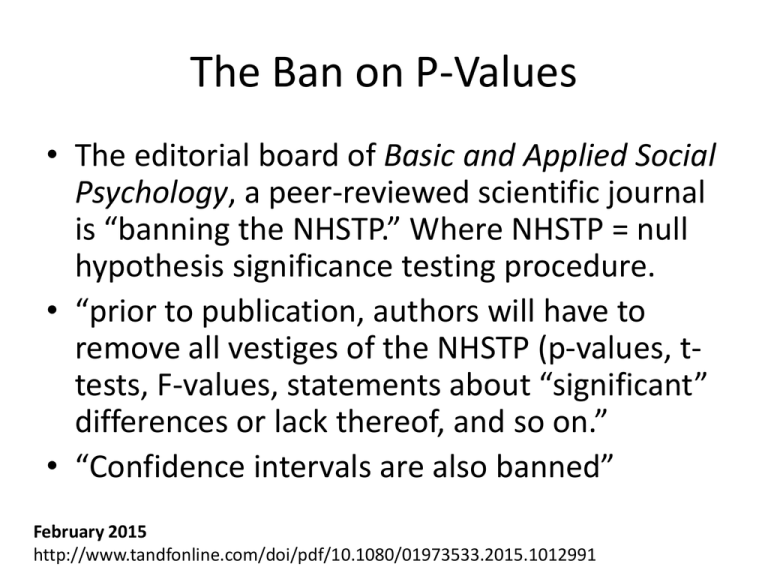

The Ban on P-Values
• The editorial board of Basic and Applied Social Psychology, a peer-reviewed scientific journal, is “banning the NHSTP,” where NHSTP = null hypothesis significance testing procedure.
• “Prior to publication, authors will have to remove all vestiges of the NHSTP (p-values, t-tests, F-values, statements about ‘significant’ differences or lack thereof, and so on).”
• “Confidence intervals are also banned.”
February 2015 http://www.tandfonline.com/doi/pdf/10.1080/01973533.2015.1012991

Häggström argues (http://haggstrom.blogspot.com/):
Intellectual suicide by the journal Basic and Applied Social Psychology
With all due respect, professors Trafimow and Marks, but this is moronic. The procedure they call NHSTP is not "invalid", and neither is the (closely related) use of confidence intervals. The only things about NHSTP and confidence intervals that are "invalid" are certain naive and inflated ideas about their interpretation, held by many statistically illiterate scientists. These misconceptions about NHSTP and confidence intervals are what should be fought, not NHSTP and confidence intervals themselves, which have been indispensable tools for the scientific analysis of empirical data during most of the 20th century, and remain so today.

Q: Why do so many colleges and grad schools teach p = .05?
A: Because that's still what the scientific community and journal editors use.
Q: Why do so many people still use p = 0.05?
A: Because that's what they were taught in college or grad school.
March 2016 http://amstat.tandfonline.com/doi/pdf/10.1080/00031305.2016.1154108

Debates
- multiple testing (Gelman and Loken 2014)
- “a p-value near 0.05 taken by itself offers only weak evidence against the null hypothesis” (Johnson, 2013)
- we did not address alternative hypotheses, error types, or power (among other things)
While the p-value can be a useful statistical measure, it is commonly misused and misinterpreted.
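Johnson's (2013) point in the Debates list, that a p-value near 0.05 is only weak evidence, can be made concrete in the simplest setting: a one-sided test of a normal mean with known variance. Assuming that setting, a "uniformly most powerful" Bayesian test with Bayes-factor threshold γ rejects the null when z > sqrt(2 ln γ), so matching it to a z-test at level α gives γ = exp(z_α²/2). The sketch below computes that γ with Python's standard library; the function name is hypothetical, and t-tests or two-sided tests give somewhat different numbers.

```python
import math
from statistics import NormalDist


def umpbt_evidence_threshold(alpha: float) -> float:
    """Bayes-factor threshold gamma at which a uniformly most powerful Bayesian
    test rejects exactly when a one-sided z-test at level alpha does.

    Assumes a one-sided test of a normal mean with known variance. In that
    setting the Bayesian test rejects when z > sqrt(2 * ln(gamma)), so equating
    sqrt(2 * ln(gamma)) with the z critical value gives gamma = exp(z**2 / 2).
    """
    z_crit = NormalDist().inv_cdf(1 - alpha)  # one-sided critical value
    return math.exp(z_crit ** 2 / 2)


for alpha in (0.05, 0.005):
    print(f"alpha = {alpha}: Bayes-factor threshold ~ {umpbt_evidence_threshold(alpha):.1f}")
```

Under these assumptions, α = 0.05 corresponds to a Bayes-factor threshold of roughly 3.9, inside the "between 3 and 5" range attributed to Johnson in the Hayden (2013) summary, while α = 0.005 corresponds to roughly 27.6.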
Essential Elements of the ASA Statement on Statistical Significance and P-values
March 2016 http://amstat.tandfonline.com/doi/pdf/10.1080/00031305.2016.1154108

Informally, a p-value is the probability under a specified statistical model that a statistical summary of the data (for example, the sample mean difference between two compared groups) would be equal to or more extreme than its observed value.

1. P-values can indicate how incompatible the data are with a specified statistical model. This incompatibility can be interpreted as casting doubt on or providing evidence against the null hypothesis or the underlying assumptions.
2. P-values do not measure the probability that the studied hypothesis is true, or the probability that the data were produced by random chance alone.
3. Scientific conclusions and business or policy decisions should not be based only on whether a p-value passes a specific threshold. The widespread use of “statistical significance” (generally interpreted as “p ≤ 0.05”) as a license for making a claim of a scientific finding (or implied truth) leads to considerable distortion of the scientific process.
4. Proper inference requires full reporting and transparency. Conducting multiple analyses of the data and reporting only those with certain p-values (typically those passing a significance threshold) renders the reported p-values essentially uninterpretable.
5. A p-value, or statistical significance, does not measure the size of an effect or the importance of a result.
6. By itself, a p-value does not provide a good measure of evidence regarding a model or hypothesis.
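The ASA's informal definition and principle 1 can be illustrated with a permutation test, one of several ways to compute a p-value for the sample mean difference between two groups. Here the "specified statistical model" is the assumption that the group labels are exchangeable (both groups come from the same distribution), and the p-value is the share of relabelings whose mean difference is equal to or more extreme than the observed one. This is a minimal sketch: the function name, the number of permutations, and the add-one correction are illustrative choices, not anything prescribed by the ASA statement.

```python
import random
from statistics import mean


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation p-value for a difference in sample means.

    Model assumed under the null: the group labels are exchangeable, i.e. both
    groups come from the same distribution. The p-value is estimated as the
    share of random relabelings whose absolute mean difference is equal to or
    more extreme than the observed one.
    """
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # random relabeling of the pooled observations
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            at_least_as_extreme += 1
    # Counting the observed labeling as one of the permutations keeps the
    # estimate strictly positive.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


print(permutation_p_value([10, 11, 12, 13, 14], [1, 2, 3, 4, 5]))
```

For the two groups in the example, only 2 of the 252 ways to split the ten values into two groups of five are as extreme as the observed split, so the estimate should land near 2/252 ≈ 0.008. Note that this number says how incompatible the data are with exchangeability; it is not the probability that the null hypothesis is true (principle 2).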
For the reasons summarized in the six principles, data analysis should not end with the calculation of a p-value when other approaches are appropriate and feasible. Other approaches:
- emphasize estimation over testing, such as confidence, credibility, or prediction intervals;
- Bayesian methods; alternative measures of evidence, such as likelihood ratios or Bayes factors;
- other approaches such as decision-theoretic modeling and false discovery rates.
All these measures and approaches rely on further assumptions, but they may more directly address the size of an effect (and its associated uncertainty) or whether the hypothesis is correct.

“The reproducibility crisis”
Replication with respect to failure to reproduce results

Replication studies: Bad copy
In the wake of high-profile controversies, psychologists are facing up to problems with replication.
Yong. Nature News. 16 May 2012

“The conduct of subtle experiments has much in common with the direction of a theatre performance,” says Daniel Kahneman, a Nobel-prizewinning psychologist at Princeton University in New Jersey. Trivial details such as the day of the week or the colour of a room could affect the results, and these subtleties never make it into methods sections.

In a survey of more than 2,000 psychologists, Leslie John, a consumer psychologist from Harvard Business School in Boston, Massachusetts, showed that more than 50% had waited to decide whether to collect more data until they had checked the significance of their results, thereby allowing them to hold out until positive results materialize. More than 40% had selectively reported studies that “worked”.

Brian Nosek, a social psychologist from the University of Virginia in Charlottesville, is bringing together a group of psychologists to try to reproduce every study published in three major psychological journals in 2008.
The teams will adhere to the original experiments as closely as possible and try to work with the original authors.
http://www.nature.com/news/replication-studies-bad-copy-1.10634

Weak statistical standards implicated in scientific irreproducibility
• Johnson developed a “uniformly most powerful” Bayesian test that defines the alternative hypothesis in a standard way, so that it “maximizes the probability that the Bayes factor in favor of the alternate hypothesis exceeds a specified threshold.”
• This threshold can be chosen so that Bayesian tests and frequentist tests will both reject the null hypothesis for the same test results.
• Johnson compared P values to Bayes factors. A P value of 0.05 or less, commonly considered evidence in support of a hypothesis in fields such as social science (in which non-reproducibility has become a serious issue), corresponds to Bayes factors of between 3 and 5, which are considered weak evidence to support a finding.
• Indeed, as many as 17–25% of such findings are probably false, Johnson calculates.
• He advocates for scientists to use more stringent P values of 0.005 or less to support their findings.
• He postulates that use of the 0.05 standard might account for most of the problem of non-reproducibility in science, even more than other issues such as biases and scientific misconduct.
Hayden, E.C. 2013. Weak statistical standards implicated in scientific irreproducibility: One-quarter of studies that meet commonly used statistical cutoff may be false. Nature News, 11 November.

“The reproducibility crisis” – over-reliance on statistical testing
• Test of an over-reliance on significance testing in research publication practices.
• 12 journal issues, all p-values, reliability tests
• P-values follow an exponential distribution.
• Residuals from the fitted curve in the 0.045–0.050 range were much larger than in other intervals: more p-values here!
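One mechanism that can produce an excess of p-values just below 0.05 is optional stopping: checking significance and collecting more data until p < 0.05, the practice reported by more than 50% of respondents in Leslie John's survey. The small simulation below is a sketch under stated assumptions: two groups with no true difference, both drawn from a standard normal distribution, and a z-test with known standard deviation 1 (a simplification so that only the standard library is needed). The function names and all parameter values (10 to 100 observations per group, a look every 10) are hypothetical and illustrative.

```python
import math
import random
from statistics import NormalDist, fmean

STANDARD_NORMAL = NormalDist()


def z_test_p(a, b):
    """Two-sided z-test p-value for a difference in means, assuming (for
    simplicity) that both populations have known standard deviation 1."""
    se = math.sqrt(1 / len(a) + 1 / len(b))
    z = (fmean(a) - fmean(b)) / se
    return 2 * (1 - STANDARD_NORMAL.cdf(abs(z)))


def false_positive_rate(peek, n_sims=2000, start=10, step=10, max_n=100,
                        alpha=0.05, seed=1):
    """Share of simulated studies with NO true effect that end up 'significant'."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        # Both groups come from the same N(0, 1) distribution: the null is true.
        a = [rng.gauss(0, 1) for _ in range(max_n)]
        b = [rng.gauss(0, 1) for _ in range(max_n)]
        if peek:
            # Optional stopping: test after every `step` observations per group
            # and stop at the first p < alpha (any() stops at the first hit).
            looks = range(start, max_n + 1, step)
        else:
            # Planned design: a single test at the final sample size.
            looks = [max_n]
        if any(z_test_p(a[:n], b[:n]) < alpha for n in looks):
            hits += 1
    return hits / n_sims


print("single planned test:", false_positive_rate(peek=False))
print("peeking every 10 obs:", false_positive_rate(peek=True))
```

The single planned test should reject close to the nominal 5% of the time, while ten looks at the data should push the false-positive rate to several times that (typically close to 0.19), even though no real effect exists. Because both runs use the same seed and the peeking design also includes the final look, the peeking rate can never be lower than the planned-test rate.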
Publication bias, over-emphasis on NHST, and “researcher degrees of freedom”:
– Repeated peeks
– Optional stopping
– Selective exclusion of outliers
– Selective use of covariates
• Bakker and Molenaar: researchers with p-values just below 0.05 are less likely to share data.
• “If false beliefs about p are partly to blame then one strategy may be to better educate researchers about the proper implementation of NHST and the benefit of complementary approaches such as likelihood analyses and Bayesian statistics.”
Masicampo and Lalande 2012. “A peculiar prevalence of p values just below 0.05.” The Quarterly Journal of Experimental Psychology.

“The reproducibility crisis” – code sharing
• Increasing transparency is one solution (open-source code, data archiving, etc.).
• Nature and Scientific Data already have code-sharing policies. http://www.nature.com/articles/sdata20154
• The problem is that reproducibility, as a tool for preventing poor research, comes in at the wrong stage of the research process (the end). While requiring reproducibility may deter people from committing outright fraud (a small group), it won't stop people who just don't know what they're doing with respect to data analysis (a much larger group).
http://simplystatistics.org/2015/02/12/is-reproducibility-as-effective-as-disclosure-lets-hope-not/

“The reproducibility crisis” – Publication bias
In the medical literature, positive outcomes are more likely to be reported than null results. Even when there is no real effect, on average one result in 20 will be “significant at P=0.05” by chance alone. If only positive findings are published, they may be mistakenly considered to be of importance. Because many studies contain long questionnaires collecting information on hundreds of variables, and measure a wide range of potential outcomes, several false positive findings are virtually guaranteed. The high volume and often contradictory nature of medical research findings, however, is not only because of publication bias.
A more fundamental problem is the widespread misunderstanding of the nature of statistical significance.
Jonathan A C Sterne and George Davey Smith. Sifting the evidence: what's wrong with significance tests? Another comment on the role of statistical methods. BMJ 2001; 322.

Replication in Ecology
• To what degree is replication possible in ecological research?
• As we move to larger and larger scales, replication becomes impossible.

On the emptiness of failed replications
Jason Mitchell, Harvard University
“unsuccessful experiments have no meaningful scientific value”
“What's more, it is clear that the null claim cannot be reinstated by additional negative observations; rounding up trumpet after trumpet of white swans does not rescue the claim that no non-white swans exist. This is because positive evidence has, in a literal sense, infinitely more evidentiary value than negative evidence.”

How is a “significant” finding different from a black swan?
1. Which of the arguments in the blog were most directly connected to concepts we've focused on in this class?
2. Which is your favorite argument, example, or insight from the blog?
3. What do you disagree with? Why?