Analysis of Large Graphs Community Detection By: KIM HYEONGCHEOL


Analysis of Large Graphs

Community Detection

By:

KIM HYEONGCHEOL

WALEED ABDULWAHAB YAHYA AL-GOBI

MUHAMMAD BURHAN HAFEZ

SHANG XINDI

HE RUIDAN

1

Overview

2

Introduction & Motivation

Graph cut criterion

Min-cut

Normalized-cut

Non-overlapping community detection

Spectral clustering

Deep auto-encoder

Overlapping community detection

BigCLAM algorithm

1

Introduction

Objective

Intro to Analysis of Large Graphs

KIM HYEONG CHEOL

Introduction

What is a graph?

Definition: an ordered pair G = (V, E)

A set V of vertices

A set E of edges
   An edge is a line of connection between two vertices
   Each edge is a 2-element subset of V

Types: undirected graph, directed graph, mixed graph, multigraph, weighted graph and so on

Introduction

Undirected graph

Edges have no orientation: Edge (x,y) = Edge (y,x)

The maximum number of edges is n(n-1)/2, reached when all pairs of vertices are connected to each other

Example: undirected graph G = (V, E)

V : {1,2,3,4,5,6}

E : {E(1,2), E(2,3), E(1,5), E(2,5), E(4,5), E(3,4), E(4,6)}
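The definition above can be sketched in a few lines of Python. This is only an illustration using the vertex and edge sets from the slide; the helper name `has_edge` is hypothetical. Storing each edge as a `frozenset` makes Edge (x,y) and Edge (y,x) the same object.

```python
from itertools import combinations

# Vertex and edge sets of the example undirected graph from the slide.
V = {1, 2, 3, 4, 5, 6}
E = {frozenset(e) for e in [(1, 2), (2, 3), (1, 5), (2, 5), (4, 5), (3, 4), (4, 6)]}


def has_edge(x, y):
    """Edges have no orientation, so (x, y) and (y, x) are the same edge."""
    return frozenset((x, y)) in E


n = len(V)
max_edges = n * (n - 1) // 2  # every pair of vertices connected
complete_edges = {frozenset(pair) for pair in combinations(V, 2)}

print(has_edge(1, 2), has_edge(2, 1))
print(len(E), "edges out of at most", max_edges)
```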

Introduction

The undirected large graph

E.g.) Social graph

A sampled user email-connectivity graph : http://research.microsoft.com/en-us/projects/S-GPS/

Graph of Harry Potter fanfiction

Adapted from http://colah.github.io/posts/2014-07-FFN-Graphs-Vis/

Q : What do these large graphs represent?

Motivation

Social graph : How do you feel?

VS

A sampled user email-connectivity graph : http://research.microsoft.com/en-us/projects/S-GPS/

Motivation

Graph of Harry Potter fanfiction : How do you feel?

VS

Adapted from http://colah.github.io/posts/2014-07-FFN-Graphs-Vis/

Motivation

If we can partition the graph, we can use the partitions to analyze it, as below

Motivation

Graph partition & community detection

Partition

Community

Q : How can we find the partitions?

2

Minimum-cut

Normalized-cut

Criterion : Graph partitioning

KIM HYEONG CHEOL

Criterion : Basic principle

Graph partitioning : A & B

A basic principle for graph partitioning

Minimize the number of between-group connections

Maximize the number of within-group connections

Criterion : Min-cut VS N-cut

Minimum-Cut vs Normalized-Cut

Min-cut: minimizes between-group connections only; it does not consider within-group connections (X)

N-cut: minimizes between-group connections and maximizes within-group connections

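Mathematical expression : Cut (A,B)

For considering between-group connections

cut(A,B) : the number of edges with one endpoint in A and the other in B

Mathematical expression : Vol (A)

For considering within-group connections

vol(A) : the total degree of the nodes in A, $vol(A) = \sum_{i \in A} d_i$

vol (A) = 5

vol (B) = 5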

Criterion : Min-cut

Minimize the number of between-group connections

$\min_{A,B} cut(A,B)$

[Figure: partition A, B] Cut(A,B) = 1 -> Minimum value

Criterion : Min-cut

[Figure: partition A, B] Cut(A,B) = 1

[Figure: alternative partition A, B] But, it looks more balanced…

How?

Criterion : N-cut

Minimize the number of between-group connections

Maximize the number of within-group connections

If we define ncut(A,B) as below,

$ncut(A,B) = \frac{cut(A,B)}{vol(A)} + \frac{cut(A,B)}{vol(B)}$

-> The minimum value of ncut(A,B) will produce more balanced partitions because it considers both principles

Methodology

Partition 1: Cut(A,B) = 1

$ncut(A,B) = \frac{1}{26} + \frac{1}{1} = 1.038..$

VS

Partition 2: Cut(A,B) = 2

$ncut(A,B) = \frac{2}{18} + \frac{2}{11} = 0.292..$
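The comparison between the two criteria can be reproduced on a small graph. The sketch below is an illustration only: it reuses the seven-edge example graph from the introduction rather than the graphs drawn on this slide, and it assumes that vol(A) is the total degree of the nodes in A and that ncut(A,B) = cut(A,B)/vol(A) + cut(A,B)/vol(B), which is consistent with the numbers above. The function names are hypothetical.

```python
# Seven-edge example graph from the introduction (nodes 1..6).
edges = [(1, 2), (2, 3), (1, 5), (2, 5), (4, 5), (3, 4), (4, 6)]


def cut(A, B):
    """Number of edges with one endpoint in A and the other in B."""
    return sum(1 for u, v in edges if (u in A and v in B) or (u in B and v in A))


def vol(A):
    """Total degree of the nodes in A (assumed definition of vol)."""
    return sum((u in A) + (v in A) for u, v in edges)


def ncut(A, B):
    """Normalized cut: the cut size relative to the volume of each side."""
    c = cut(A, B)
    return c / vol(A) + c / vol(B)


unbalanced = ({6}, {1, 2, 3, 4, 5})   # smallest possible cut, but lopsided
balanced = ({1, 2, 5}, {3, 4, 6})     # larger cut, more even volumes

for name, (A, B) in [("unbalanced", unbalanced), ("balanced", balanced)]:
    print(name, "cut =", cut(A, B), "ncut =", round(ncut(A, B), 3))
```

Min-cut alone prefers the partition that isolates node 6, while the normalized cut is lower for the balanced partition.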

Summary

What is the undirected large graph?

How can we get insight from the undirected large graph?

Graph partition & community detection

What were the methodologies for good graph partition?

Min-cut

Normalized-cut

3

Spectral Clustering

Deep GraphEncoder

Non-overlapping community detection:

Waleed Abdulwahab Yahya Al-Gobi

Finding Clusters

26

Nodes

Nodes

Network

How to identify such structure?

How to spilt the graph into two pieces?

Adjacency Matrix

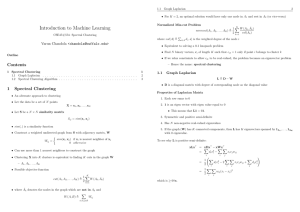

Spectral Clustering Algorithm

Three basic stages:

1) Pre-processing
   Construct a matrix representation of the graph

2) Decomposition
   Compute eigenvalues (λ) and eigenvectors (x) of the matrix
   The focus is on λ2 and its corresponding eigenvector x2

3) Grouping
   Assign points to two or more clusters, based on the new representation

Matrix Representations

Adjacency matrix (A):

n × n binary matrix

A = [a_ij], a_ij = 1 if there is an edge between node i and node j

        1   2   3   4   5   6
   1    0   1   1   0   1   0
   2    1   0   1   0   0   0
   3    1   1   0   1   0   0
   4    0   0   1   0   1   1
   5    1   0   0   1   0   1
   6    0   0   0   1   1   0

Matrix Representations

Degree matrix (D):

n × n diagonal matrix

D = [d_ii], d_ii = degree of node i

        1   2   3   4   5   6
   1    3   0   0   0   0   0
   2    0   2   0   0   0   0
   3    0   0   3   0   0   0
   4    0   0   0   3   0   0
   5    0   0   0   0   3   0
   6    0   0   0   0   0   2

Matrix Representations

Laplacian matrix (L): L = D - A

How can we use (L) to find good partitions of our graph?

What are the eigenvalues and eigenvectors of (L)?

We know: L · x = λ · x
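A minimal NumPy sketch of the three matrix representations for the six-node example graph. The edge list is read off the adjacency matrix on the slide, and the Laplacian is formed as L = D − A; variable names are illustrative.

```python
import numpy as np

# Edges of the six-node example graph (1-based node labels, as on the slides).
edges = [(1, 2), (1, 3), (1, 5), (2, 3), (3, 4), (4, 5), (4, 6), (5, 6)]
n = 6

# Adjacency matrix: a_ij = 1 if there is an edge between node i and node j.
A = np.zeros((n, n), dtype=int)
for i, j in edges:
    A[i - 1, j - 1] = 1
    A[j - 1, i - 1] = 1

# Degree matrix: diagonal matrix holding the degree of each node.
D = np.diag(A.sum(axis=1))

# Laplacian matrix.
L = D - A

print(L)
```

Every row of L sums to zero, which is why the all-ones vector is an eigenvector with eigenvalue 0.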

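Spectrum of Laplacian Matrix (L)

The Laplacian matrix (L) has:

Eigenvalues $\lambda_1, \lambda_2, \dots, \lambda_n$ where $\lambda_1 \le \lambda_2 \le \dots \le \lambda_n$

Eigenvectors $x_1, x_2, \dots, x_n$

Important properties:

Eigenvalues are non-negative real numbers

Eigenvectors are real and orthogonal

What is the trivial eigenpair?

$x = (1, \dots, 1)$, then $L \cdot x = 0$ and so $\lambda = \lambda_1 = 0$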

Best Eigenvector for partitioning

Second eigenvector $x_2$

Best eigenvector that represents the best quality of graph partitioning.

Let’s check the components of $x_2$ through $\lambda_2$

Fact: For symmetric matrix (L):

$\lambda_2 = \min_x x^T L x$

Minimum is taken under the constraints:

$x$ is a unit vector: that is $\sum_i x_i^2 = 1$

$x$ is orthogonal to the 1st eigenvector $(1, \dots, 1)$, thus: $\sum_i x_i \cdot 1 = \sum_i x_i = 0$

Details!

λ2 as optimization problem

Fact: For symmetric matrix (L):

$\lambda_2 = \min_x x^T L x$

What is the meaning of min $x^T L x$ on G?

Remember : L = D - A

$x^T L x = x^T D x - x^T A x$

$x^T D x = \sum_{i=1}^{n} d_i x_i^2 = \sum_{(i,j) \in E} (x_i^2 + x_j^2)$

$x^T A x = \sum_{(i,j) \in E} 2 x_i x_j$

$x^T L x = \sum_{(i,j) \in E} (x_i^2 + x_j^2 - 2 x_i x_j) = \sum_{(i,j) \in E} (x_i - x_j)^2$
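The identity between the quadratic form of the Laplacian and the sum of squared differences over edges can be checked numerically. This is a small sanity-check sketch on the six-node example graph with an arbitrary random vector; the seed and names are illustrative.

```python
import numpy as np

# Six-node example graph (1-based labels) and its Laplacian L = D - A.
edges = [(1, 2), (1, 3), (1, 5), (2, 3), (3, 4), (4, 5), (4, 6), (5, 6)]
n = 6
A = np.zeros((n, n))
for i, j in edges:
    A[i - 1, j - 1] = A[j - 1, i - 1] = 1.0
L = np.diag(A.sum(axis=1)) - A

# Any real vector works; a fixed seed keeps the run reproducible.
rng = np.random.default_rng(0)
x = rng.normal(size=n)

quadratic_form = x @ L @ x
edge_sum = sum((x[i - 1] - x[j - 1]) ** 2 for i, j in edges)

print(quadratic_form, edge_sum)
```

The two printed numbers agree up to floating-point rounding, for any choice of x.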

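λ2 as optimization problem

$\lambda_2 = \min_x \sum_{(i,j) \in E} (x_i - x_j)^2$

The minimum is over all labelings of nodes $i$ so that $\sum_i x_i = 0$

We want to assign values $x_i$ to nodes i such that few edges cross 0.

(we want $x_i$ and $x_j$ to subtract each other)

[Figure: nodes placed on a line at values $x_i$ and $x_j$ on either side of 0; balance to minimize]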

Spectral Partitioning Algorithm: Example

1) Pre-processing: Build Laplacian matrix L of the graph

        1   2   3   4   5   6
   1    3  -1  -1   0  -1   0
   2   -1   2  -1   0   0   0
   3   -1  -1   3  -1   0   0
   4    0   0  -1   3  -1  -1
   5   -1   0   0  -1   3  -1
   6    0   0   0  -1  -1   2

2) Decomposition: Find eigenvalues λ and eigenvectors x of the matrix L

   λ = (0.0, 1.0, 3.0, 3.0, 4.0, 5.0)

   X (one row per node, one column per eigenvector) =

   1    0.4   0.3  -0.5  -0.2  -0.4  -0.5
   2    0.4   0.6   0.4  -0.4   0.4   0.0
   3    0.4   0.3   0.1   0.6  -0.4   0.5
   4    0.4  -0.3   0.1   0.6   0.4  -0.5
   5    0.4  -0.3  -0.5  -0.2   0.4   0.5
   6    0.4  -0.6   0.4  -0.4  -0.4   0.0

Map vertices to corresponding components of x2:

   1    0.3
   2    0.6
   3    0.3
   4   -0.3
   5   -0.3
   6   -0.6

How do we now find the clusters?

Spectral Partitioning Algorithm: Example

3) Grouping:

Sort components of reduced 1-dimensional vector

Identify clusters by splitting the sorted vector in two

How to choose a splitting point?

Naïve approaches: split at 0 or median value

Split at 0:

   Cluster A: Positive points      Cluster B: Negative points
      1    0.3                        4   -0.3
      2    0.6                        5   -0.3
      3    0.3                        6   -0.6
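The three stages of the example can be sketched end to end with NumPy. This is an illustrative sketch, not the presenters' code: it uses `numpy.linalg.eigh`, which returns the eigenvalues of a symmetric matrix in ascending order, and applies the naïve split at 0. The sign of an eigenvector is arbitrary, so which side ends up "positive" may differ from the slide; the two groups themselves do not change.

```python
import numpy as np

# 1) Pre-processing: Laplacian of the six-node example graph (1-based labels).
edges = [(1, 2), (1, 3), (1, 5), (2, 3), (3, 4), (4, 5), (4, 6), (5, 6)]
n = 6
A = np.zeros((n, n))
for i, j in edges:
    A[i - 1, j - 1] = A[j - 1, i - 1] = 1.0
L = np.diag(A.sum(axis=1)) - A

# 2) Decomposition: eigenvalues in ascending order, eigenvectors as columns.
eigvals, eigvecs = np.linalg.eigh(L)
lambda2 = eigvals[1]
x2 = eigvecs[:, 1]

# 3) Grouping: naive split of the second eigenvector at 0.
cluster_a = {i + 1 for i in range(n) if x2[i] > 0}
cluster_b = {i + 1 for i in range(n) if x2[i] <= 0}

print("lambda2 =", round(float(lambda2), 3))
print("clusters:", sorted(cluster_a), sorted(cluster_b))
```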

Example: Spectral Partitioning

[Figure: components of x2; value of x2 plotted against rank in x2]

k-Way Spectral Clustering

How do we partition a graph into k clusters?

Two basic approaches:

Recursive bi-partitioning [Hagen et al., ’92]
   Recursively apply the bi-partitioning algorithm in a hierarchical divisive manner
   Disadvantages: Inefficient

Cluster multiple eigenvectors [Shi-Malik, ’00]
   Build a reduced space from multiple eigenvectors
   Commonly used in recent papers
   A preferable approach
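The second approach can be sketched as follows. Everything here is an assumption made for illustration: the toy graph (three 4-node cliques joined in a chain), the use of the unnormalized Laplacian, and the small deterministic k-means with farthest-point initialisation, which stands in for whatever clustering routine is used in practice.

```python
import numpy as np

# Toy graph (assumed): three 4-node cliques joined in a chain by bridge edges.
cliques = [range(0, 4), range(4, 8), range(8, 12)]
edges = [(i, j) for c in cliques for i in c for j in c if i < j]
edges += [(3, 4), (7, 8)]

n, k = 12, 3
A = np.zeros((n, n))
for i, j in edges:
    A[i, j] = A[j, i] = 1.0
L = np.diag(A.sum(axis=1)) - A

# Reduced space: one row per node, built from the k smallest eigenvectors.
_, eigvecs = np.linalg.eigh(L)
embedding = eigvecs[:, :k]


def kmeans(points, k, iters=20):
    """Lloyd's algorithm with a deterministic farthest-point initialisation."""
    centers = [points[0]]
    for _ in range(k - 1):
        dists = np.min([np.sum((points - c) ** 2, axis=1) for c in centers], axis=0)
        centers.append(points[np.argmax(dists)])
    centers = np.array(centers)
    for _ in range(iters):
        d = ((points[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d.argmin(axis=1)
        centers = np.array([points[labels == c].mean(axis=0) for c in range(k)])
    return labels


labels = kmeans(embedding, k)
clusters = {frozenset(np.flatnonzero(labels == c).tolist()) for c in range(k)}
print(sorted(sorted(c) for c in clusters))
```

On this toy graph the rows of the embedding are nearly constant within each clique, so clustering them recovers the three cliques.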

4

Spectral Clustering

Deep GraphEncoder

Deep GraphEncoder [Tian et al., 2014]

Muhammad Burhan Hafez

Autoencoder

Architecture:

[Figure: autoencoder with encoder layers E1, E2 and decoder layers D1, D2]

Reconstruction loss:

Autoencoder & Spectral Clustering

Simple theorem (Eckart-Young-Mirsky theorem):

Let A be any matrix, with singular value decomposition (SVD) $A = U \Sigma V^T$

Let $A_k = U \Sigma_k V^T$ be the decomposition where we keep only the k largest singular values

Then, $A_k$ is the best rank-k approximation of A: $A_k = \arg\min_{rank(B) \le k} \|A - B\|_F$

Note: If A is symmetric positive semi-definite, its singular values are its eigenvalues & U = V = eigenvectors.

Result (1):

Spectral Clustering ⇔ matrix reconstruction
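The theorem can be checked numerically with a truncated SVD. The sketch below uses a random matrix and illustrative names (`A_k`, `err`); it verifies that the Frobenius error of the rank-k truncation equals the root of the sum of the squared discarded singular values, and that an arbitrary other rank-k matrix does no better.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.normal(size=(6, 5))

# Full (thin) SVD, singular values sorted in descending order.
U, s, Vt = np.linalg.svd(A, full_matrices=False)

# Keep only the k largest singular values.
k = 2
A_k = U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]

# Reconstruction error of the truncation, and the value the theorem predicts.
err = np.linalg.norm(A - A_k, "fro")
predicted = np.sqrt(np.sum(s[k:] ** 2))

# Some other rank-k matrix, for comparison.
B = rng.normal(size=(6, k)) @ rng.normal(size=(k, 5))
err_other = np.linalg.norm(A - B, "fro")

print(err, predicted, err_other)
```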

Autoencoder & Spectral Clustering (cont’d)

43

Autoencoder case:

based on previous theorem, where X = U Σ VT and K is the hidden layer size

Result (2):

Autoencoder ⇔ matrix reconstruction

Deep GraphEncoder | Algorithm

Clustering with GraphEncoder:

1. Learn a nonlinear embedding of the original graph by deep autoencoder (cf. the eigenvectors corresponding to the K smallest eigenvalues of the graph Laplacian matrix).

2. Run k-means algorithm on the embedding to obtain clustering result.

Deep GraphEncoder | Efficiency

Approx. guarantee:

Cut found by Spectral Clustering and Deep GraphEncoder is at most 2 times away from the optimal.

Computational Complexity:

Spectral Clustering: Θ(n^3), due to eigenvalue decomposition (EVD)

GraphEncoder: Θ(ncd)
   c : avg degree of the graph
   d : max # of hidden layer nodes

Deep GraphEncoder | Flexibility

Sparsity constraint can be easily added.

Improving the efficiency (storage & data processing).

Improving clustering accuracy.

[Equation: original objective function + sparsity constraint]

5

BigCLAM: Introduction

Overlapping Community Detection

SHANG XINDI

Non-overlapping Communities

[Figure: network and its adjacency matrix (nodes × nodes)]

Non-overlapping vs Overlapping

Facebook Network

Social communities: High school, Summer internship, Stanford (Basketball), Stanford (Squash)

Nodes: Facebook Users

Edges: Friendships

Overlapping Communities

Edge density in the overlaps is higher!

[Figure: network and its adjacency matrix]

Assumption

Community membership strength matrix $F$ ($\ge 0$): rows are nodes, columns are communities

$F_{vA}$ … strength of $v$’s membership to $A$

$F_u$ … vector of community membership strengths of $u$

$P_C(u,v)$ : probability that u and v are connected according to community C

$P_C(u,v) = 1 - \exp(-F_{uC} \cdot F_{vC})$

$P(u,v)$ : at least one common community $C$ links the nodes:

$P(u,v) = 1 - \prod_C (1 - P_C(u,v)) = 1 - \exp(-F_u \cdot F_v^T)$
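A small sketch of the edge-probability model. The membership strengths in `F` are made-up values for three hypothetical nodes and two communities; the code checks that combining the per-community probabilities ("at least one community links the nodes") gives the same value as the closed form 1 − exp(−F_u · F_v).

```python
import math

# Hypothetical membership strengths: rows are nodes, columns are communities A, B.
F = {
    "u": [1.2, 0.0],
    "v": [0.5, 0.8],
    "w": [0.0, 1.5],
}


def p_community(Fu, Fv, c):
    """Probability that community c alone links the two nodes."""
    return 1.0 - math.exp(-Fu[c] * Fv[c])


def p_edge(Fu, Fv):
    """Closed form: 1 - exp(-Fu . Fv)."""
    return 1.0 - math.exp(-sum(a * b for a, b in zip(Fu, Fv)))


def p_edge_product(Fu, Fv):
    """Same probability via 'at least one community links the nodes'."""
    no_link = 1.0
    for c in range(len(Fu)):
        no_link *= 1.0 - p_community(Fu, Fv, c)
    return 1.0 - no_link


for a, b in [("u", "v"), ("v", "w"), ("u", "w")]:
    print(a, b, round(p_edge(F[a], F[b]), 4), round(p_edge_product(F[a], F[b]), 4))
```

Nodes u and w share no community, so their connection probability under this model is 0.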

Detecting Communities with MLE

$P(u,v|F)$ : probability that u and v are connected

$G(V,E)$ : the given social graph

Maximize likelihood to find best F

$P(u,v|F)$:

   0    0.9  0.9  0
   0.9  0    0.9  0
   0.9  0.9  0    0.9
   0    0.1  0.9  0

$G(V,E)$:

   0  1  1  0
   1  0  1  0
   1  1  0  1
   0  0  1  0

Detecting Communities with MLE

Maximum Likelihood Estimation

Given: Data $X$

Assumption: Data is generated by some model $f(\Theta)$

   $f$ … model --- $P(u,v)$
   $\Theta$ … model parameters --- $F$

Estimate the likelihood $P_f(X|\Theta)$: the probability that the model $f$ (with parameters $\Theta$) generated the data

BigCLAM

Given a network $G(V,E)$, estimate $P(G|F)$:

$P(G|F) = \prod_{(u,v) \in E} P(u,v) \prod_{(u,v) \notin E} (1 - P(u,v))$

where: $P(u,v) = 1 - \exp(-F_u \cdot F_v^T)$

Maximize $P(G|F)$: $\arg\max_F P(G|F)$

Yang, Jaewon, and Jure Leskovec. "Overlapping community detection at scale: a nonnegative matrix factorization approach." Proceedings of the sixth ACM international conference on Web search and data mining. ACM, 2013.

BigCLAM

Many times we take the logarithm of the likelihood, and call it log-likelihood: $l(F) = \log P(G|F)$

Goal: Find $F$ that maximizes $l(F)$:

$l(F) = \sum_{(u,v) \in E} \log(1 - \exp(-F_u F_v^T)) - \sum_{(u,v) \notin E} F_u F_v^T$
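A sketch of the log-likelihood as a function of F. Taking the logarithm of the likelihood turns each edge into a term log(1 − exp(−F_u · F_v)) and each non-edge into a term −F_u · F_v. The graph is the four-node example (0-based labels here) and the matrix `F` is a made-up, strictly positive guess, chosen so that no edge has probability exactly 0.

```python
import math
from itertools import combinations


def log_likelihood(F, edges):
    """Log-likelihood l(F) = log P(G | F) under the BigCLAM edge model."""
    edge_set = {frozenset(e) for e in edges}
    total = 0.0
    for u, v in combinations(range(len(F)), 2):
        dot = sum(a * b for a, b in zip(F[u], F[v]))
        if frozenset((u, v)) in edge_set:
            total += math.log(1.0 - math.exp(-dot))  # edge: log P(u, v)
        else:
            total -= dot                             # non-edge: log(1 - P(u, v))
    return total


# Four-node example graph, 0-based node labels.
edges = [(0, 1), (0, 2), (1, 2), (2, 3)]

# Hypothetical membership strengths for two communities.
F = [[1.0, 0.1], [1.0, 0.1], [1.0, 1.0], [0.1, 1.0]]

print(log_likelihood(F, edges))
```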

5

BigCLAM: How to optimize parameter F ?

Additional reading: state of the art methods

Overlapping Community Detection

He Ruidan

BigCLAM: How to find F

Model parameter: community membership strength matrix F

Each row vector Fu in F is the community membership strength of node u in the graph

BigCLAM v1.0: How to find F

Block coordinate gradient ascent: update Fu for each u with the other Fv fixed

Compute the gradient of a single row Fu:

$\nabla l(F_u) = \sum_{v \in N(u)} F_v \frac{\exp(-F_u F_v^T)}{1 - \exp(-F_u F_v^T)} - \sum_{v \notin N(u)} F_v$

Coordinate gradient ascent: iterate over the rows of F

The sum over the neighbors N(u) takes constant time; the sum over the non-neighbors takes O(n) time

This is slow! Computing the gradient of one row takes linear time O(n)

As we solve this for each node u and there are n nodes in total, the overall time complexity is O(n^2)

Cannot be applied to large graphs with millions of nodes

BigCLAM v2.0: How to find F

However, we notice that:

$\sum_{v \notin N(u)} F_v = \sum_v F_v - F_u - \sum_{v \in N(u)} F_v$

Usually, the average degree of a node in a graph can be treated as constant, so with a cached $\sum_v F_v$ the gradient of a row takes constant time to compute

Therefore, the time complexity to update matrix F is reduced to O(n)
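The speed-up can be illustrated by computing the gradient of one row in two ways. This is a sketch following the gradient in Yang and Leskovec's paper, where each neighbor's row is weighted by exp(−F_u·F_v)/(1 − exp(−F_u·F_v)) and the rows of all non-neighbors are subtracted. The function names, the toy graph, the values in `F`, and the step size are assumptions for illustration. The fast version replaces the sum over non-neighbors with a cached column sum minus the rows of u and its neighbors.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def edge_weight(Fu, Fv):
    """exp(-Fu.Fv) / (1 - exp(-Fu.Fv)): weight of a neighbor's row."""
    e = math.exp(-dot(Fu, Fv))
    return e / (1.0 - e)


def grad_naive(F, nbrs, u):
    """Gradient of l(F_u), looping over all n nodes: O(n) per row."""
    g = [0.0] * len(F[u])
    for v in range(len(F)):
        if v == u:
            continue
        scale = edge_weight(F[u], F[v]) if v in nbrs[u] else -1.0
        for c in range(len(g)):
            g[c] += scale * F[v][c]
    return g


def grad_fast(F, nbrs, u, col_sums):
    """Same gradient, touching only the neighbors of u: O(deg(u)) per row."""
    # -(sum over non-neighbors) = F_u - sum_v F_v + (sum over neighbors)
    g = [F[u][c] - col_sums[c] for c in range(len(F[u]))]
    for v in nbrs[u]:
        scale = edge_weight(F[u], F[v]) + 1.0
        for c in range(len(g)):
            g[c] += scale * F[v][c]
    return g


# Toy graph (0-based labels) and a hypothetical strictly positive F.
edges = [(0, 1), (0, 2), (1, 2), (2, 3)]
F = [[1.0, 0.1], [0.9, 0.2], [0.8, 0.9], [0.1, 1.1]]
nbrs = [set() for _ in F]
for a, b in edges:
    nbrs[a].add(b)
    nbrs[b].add(a)
col_sums = [sum(row[c] for row in F) for c in range(2)]  # cached sum_v F_v

# One projected gradient-ascent step on row 0 (step size is an assumption).
step = 0.01
g0 = grad_fast(F, nbrs, 0, col_sums)
new_row = [max(0.0, F[0][c] + step * g0[c]) for c in range(2)]
print(g0, new_row)
```

In a full implementation the cached column sums would be updated after every row update, so that they stay consistent with F.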


5

BigCLAM: How to optimize parameter F ?

Additional reading: state of the art methods

Overlapping Community Detection

He Ruidan

Graph Representation

Representation learning of graph nodes.

Try to represent each node as a numerical vector. Given a graph, the vectors should be learned automatically.

Learning objective: the representation vectors of nodes that share similar connections are close to each other in the vector space.

After the representation of each node is learnt, community detection can be modeled as a clustering / classification problem.

Graph Representation

70

Graph representation using neural networks / deep learning

B. Perozzi, R. Al-Rfou, and S. Skiena. Deepwalk: Online learning of social

representations. In SIGKDD, pages 701–710. ACM, 2014.

J. Tang, M. Qu, M. Wang, M. Zhang, J. Yan, and Q. Mei. Line: Large-scale

information network embedding. In WWW. ACM, 2015.

F. Tian, B. Gao, Q. Cui, E. Chen, and T.-Y. Liu. Learning deep representations for

graph clustering. In AAAI, 2014.

Summary

71

Introduction & Motivation

Graph cut criterion

Min-cut

Normalized-cut

Non-overlapping community detection

Spectral clustering

Deep auto-encoder

Overlapping community detection

BigCLAM algorithm

72

Appendix

Details!

Facts about the Laplacian L

(a) All eigenvalues are ≥ 0

(b) $x^T L x = \sum_{ij} L_{ij} x_i x_j \ge 0$ for every $x$

(c) $L = N^T \cdot N$

That is, $L$ is positive semi-definite

Proof:

(c)⇒(b): $x^T L x = x^T N^T N x = (Nx)^T (Nx) \ge 0$, as it is just the square of the length of $Nx$

(b)⇒(a): Let $\lambda$ be an eigenvalue of $L$ with eigenvector $x$. Then by (b) $x^T L x \ge 0$, so $x^T L x = x^T \lambda x = \lambda x^T x$ ⇒ $\lambda \ge 0$

(a)⇒(c): is also easy! Do it yourself.
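Fact (c) can be made concrete with one possible choice of N. The slide does not define N; the sketch below assumes it is the oriented edge-vertex incidence matrix (one row per edge, with +1 and −1 at the two endpoints), and checks on the six-node example graph that N^T N equals D − A and that the eigenvalues are non-negative.

```python
import numpy as np

# Six-node example graph (1-based labels).
edges = [(1, 2), (1, 3), (1, 5), (2, 3), (3, 4), (4, 5), (4, 6), (5, 6)]
n = 6

# Assumed N: oriented incidence matrix, one row per edge.
N = np.zeros((len(edges), n))
for row, (i, j) in enumerate(edges):
    N[row, i - 1] = 1.0
    N[row, j - 1] = -1.0

L_from_N = N.T @ N

# Laplacian built directly as D - A, for comparison.
A = np.zeros((n, n))
for i, j in edges:
    A[i - 1, j - 1] = A[j - 1, i - 1] = 1.0
L = np.diag(A.sum(axis=1)) - A

eigenvalues = np.linalg.eigvalsh(L)
print(np.allclose(L_from_N, L), eigenvalues.round(3))
```

The orientation chosen for each edge does not matter: flipping the sign of a row of N leaves N^T N unchanged.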

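Details!

$\lambda_2 = \min_x x^T M x$

Proof:

Write $x$ in axes of eigenvectors $w_1, w_2, \dots, w_n$ of $M$. So, $x = \sum_i^n \alpha_i w_i$

Then we get: $Mx = \sum_i \alpha_i M w_i = \sum_i \alpha_i \lambda_i w_i$

So, what is $x^T M x$?

$x^T M x = (\sum_i \alpha_i w_i)^T (\sum_i \alpha_i \lambda_i w_i) = \sum_{ij} \alpha_i \lambda_j \alpha_j w_i^T w_j = \sum_i \alpha_i \lambda_i \alpha_i w_i^T w_i = \sum_i \lambda_i \alpha_i^2$

(using $w_i^T w_j = 0$ if $i \ne j$, 1 otherwise)

To minimize this over all unit vectors x orthogonal to $w_1$ = min over choices of $(\alpha_1, \dots, \alpha_n)$ so that:

$\sum_i \alpha_i^2 = 1$ (unit length), $\alpha_1 = 0$ (orthogonal to $w_1$)

To minimize this, set $\alpha_2 = 1$ and so $\sum_i \lambda_i \alpha_i^2 = \lambda_2$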