(a) Describe the concept of register renaming. Use a simple... to illustrate your explanation. Question 1.

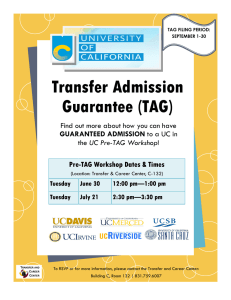

advertisement

Question 1.

(a) Describe the concept of register renaming. Use a simple program example to illustrate your explanation.

Each time an instruction that writes into a register is dispatched for execution, the register is given a new identification tag. Subsequent instructions that use the register as an operand also get the latest tag. When an instruction has executed, its result emerges from the arithmetic unit carrying the destination register's tag, and is delivered to every waiting instruction that has this tag as an operand. When a waiting instruction has received all its operands, it enters the arithmetic unit for execution.

E.g. before renaming:

I1: mov r1, 0
I2: add r2, r3, r1
I3: mul r1, r3, 3
I4: sub r4, r1, r7
I5: div r2, r2, r1

Attach a tag to each register. The tag is incremented each time an instruction that modifies the register is dispatched, and all subsequent references to the same register receive the most up-to-date tag. After renaming:

I1: mov r1-1, 0
I2: add r2-1, r3-1, r1-1
I3: mul r1-2, r3-1, 3
I4: sub r4-1, r1-2, r7-1
I5: div r2-2, r2-1, r1-2

The Tomasulo (or equivalent) pipeline ensures that the same register with different tags (e.g. r1-1 and r1-2) behaves as two different registers.
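A minimal C sketch of the tagging scheme, assuming 8 architectural registers; the Instr struct and rename_instr function are invented for illustration. Unlike the listing above, which labels the never-written r3 as r3-1, the sketch gives every register tag 0 before its first write, so r3 appears as r3-0. Sources are tagged before the destination's tag is bumped, which is what makes div r2, r2, r1 read r2-1 and write r2-2.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define NUM_REGS 8   /* assumption: architectural registers r0..r7 */
#define IMM (-1)     /* marks an operand slot that holds an immediate */

/* Hypothetical three-operand instruction: dst <- src[0] op src[1]. */
typedef struct {
    const char *op;
    int dst;
    int src[2];      /* register numbers, or IMM */
    int imm;         /* value used by the IMM slot */
    int nsrc;        /* number of source slots in use (1 or 2) */
} Instr;

/* Rename one instruction, in program order. Sources read the CURRENT tag of
   their register; only then does the destination receive a fresh tag. */
void rename_instr(const Instr *in, int tag[NUM_REGS], char *out, size_t n)
{
    char srcs[64] = "";
    size_t len = 0;

    assert(in->nsrc >= 1 && in->nsrc <= 2);
    for (int i = 0; i < in->nsrc; i++) {
        int r = in->src[i];
        if (r == IMM)
            len += (size_t)snprintf(srcs + len, sizeof srcs - len, ", %d", in->imm);
        else
            len += (size_t)snprintf(srcs + len, sizeof srcs - len, ", r%d-%d", r, tag[r]);
    }
    tag[in->dst]++;   /* new tag for the register being written */
    snprintf(out, n, "%s r%d-%d%s", in->op, in->dst, tag[in->dst], srcs);
}
```

Feeding I1 to I5 through rename_instr in program order, with one shared tag array, produces mov r1-1, 0 / add r2-1, r3-0, r1-1 / mul r1-2, r3-0, 3 / sub r4-1, r1-2, r7-0 / div r2-2, r2-1, r1-2.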


(b) Explain why register renaming prevents Read-Write hazard, and why it does not completely prevent Write-Write hazard.

The result of a write has a different tag from the operand tag of earlier instructions that use the same register. Hence the new result does not replace the operand of the earlier instruction.

E.g. if I3 executes before I2, there is no problem: I2 reads r1-1 while I3 writes r1-2, and these are effectively different registers.

Two writes to the same register have different tags, and their results are delivered to different consumer instructions, so they cannot be confused. If I3 completes before I1, I2 receives nothing for r1 from I3, since I3 broadcasts its result for r1-2 while I2 is waiting for r1-1. Likewise, when I1 completes, I4 and I5 do not receive an erroneous r1 from I1, since they are waiting for r1-2 and I1 produces r1-1. So the read-write (WAR) hazard is resolved.

But if I3 completes before I1 and updates r1 with r1-2, and later I1 completes and updates r1 with r1-1, r1 is left holding the older value (WAW hazard). Hence the register updates must be re-ordered, which can be done using the tags.

The results for r1-2 and r1-1 are written to the re-order buffer (ROB):

ROB after I3 completes (I1's value is not yet available):

Destination  Tag  Value    Committed
r1           2    y        n
r1           1    pending  n

After I1 completes:

Destination  Tag  Value  Committed
r1           2    y      n
r1           1    x      n

I1's result is committed to r1:

Destination  Tag  Value  Committed
r1           2    y      n
r1           1    x      y

I3's result is committed to r1. WAW hazard eliminated:

Destination  Tag  Value  Committed
r1           2    y      y
r1           1    x      y

We can optimize this by writing only the most up-to-date copy of r1 in the ROB to r1, i.e. the copy with the highest tag.

However, this has serious complications for interrupt processing. For example, if the process is interrupted at I2, then even though r1-2 and r1-1 are both in the ROB, the correct value in r1 is r1-1, not r1-2. An optimization that skips r1-1 in favor of r1-2 will therefore leave the wrong value in r1 if an interrupt occurs at I2.
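A minimal C sketch of the in-order commit shown in the ROB tables, assuming a 4-entry circular buffer and integer register values; the names Rob, rob_alloc, rob_complete and rob_commit are invented for illustration. Because commit only ever retires the oldest finished entry, stopping after any commit leaves the register file exactly as if the program had run up to that instruction, which is the property the interrupt discussion relies on.

```c
#include <assert.h>
#include <stdbool.h>

#define ROB_SIZE 4   /* assumption: tiny circular buffer */
#define NUM_REGS 8   /* assumption: r0..r7 */

typedef struct {
    int dest;        /* architectural destination register, e.g. 1 for r1 */
    int tag;         /* rename tag, e.g. 2 for r1-2 */
    int value;
    bool done;       /* result has been produced */
} RobEntry;

typedef struct {
    RobEntry e[ROB_SIZE];
    int head;                /* oldest instruction not yet committed */
    int count;
    int regs[NUM_REGS];      /* architectural register file */
} Rob;

/* Reserve an entry at dispatch time, strictly in program order. */
int rob_alloc(Rob *r, int dest, int tag)
{
    assert(r->count < ROB_SIZE);
    int slot = (r->head + r->count) % ROB_SIZE;
    r->e[slot] = (RobEntry){ dest, tag, 0, false };
    r->count++;
    return slot;
}

/* Execution finishes, possibly out of program order. */
void rob_complete(Rob *r, int slot, int value)
{
    r->e[slot].value = value;
    r->e[slot].done = true;
}

/* Commit up to max finished entries, from the head only, so registers are
   updated in program order. Returns how many entries were committed. */
int rob_commit(Rob *r, int max)
{
    int n = 0;
    while (n < max && r->count > 0 && r->e[r->head].done) {
        const RobEntry *h = &r->e[r->head];
        r->regs[h->dest] = h->value;
        r->head = (r->head + 1) % ROB_SIZE;
        r->count--;
        n++;
    }
    return n;
}
```

For the I1/I3 example: when I3 completes first nothing can commit, because I1 at the head is unfinished; once I1 completes, committing one entry at a time sets r1 to x and then to y.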


Question 2

(a) File processing programs often use both indexed addressing mode and displacement addressing mode; explain by providing one example of each. (Note: this question is specifically in the file processing context. Other examples are not relevant. You are not required to provide program examples. Descriptive explanations are fine.)

Main gist:

Indexed addressing is useful for accessing arrays.

Displacement addressing is useful for accessing particular records.

Why:

Indexed addressing encodes the base address in the instruction and the index in a register. Accessing the next element is accomplished just by incrementing the register by 1. The final address is base + index * size, where size is the size of the data item in words or bytes.

Displacement addressing encodes the displacement in the instruction and the base address in a register. Hence it is convenient mainly for accessing single items/records. The final address is base + displ * size, where size is the size of a data item in words or bytes.

The two addressing modes are not generally interchangeable (e.g. you cannot emulate indexed mode by encoding the base in the displacement field of the instruction and placing the index in the register), because the displacement field of a displacement-mode instruction is generally shorter than the base field of an indexed-mode instruction.

Hence indexed mode is useful for accessing an array of records, while displacement mode is useful for accessing a particular record.
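A minimal C sketch of the two effective-address formulas, assuming byte addresses and a hypothetical file buffer of fixed-size records; the function names are invented for illustration. It scales the displacement by size exactly as the formula above does; many real ISAs (MIPS lw, for instance) apply the displacement unscaled.

```c
#include <assert.h>
#include <stdint.h>

/* Indexed mode: base address in the instruction, index in a register.
   Walking through an array of records only needs index_reg++ per iteration. */
uint32_t ea_indexed(uint32_t base_in_instr, uint32_t index_reg, uint32_t size)
{
    return base_in_instr + index_reg * size;
}

/* Displacement mode: base address in a register, short displacement in the
   instruction. Selects one item at a fixed offset from wherever the register points. */
uint32_t ea_displacement(uint32_t base_reg, uint32_t displ_in_instr, uint32_t size)
{
    return base_reg + displ_in_instr * size;
}
```

For a hypothetical buffer of 64-byte records at 0x1000, ea_indexed(0x1000, 3, 64) gives 0x10C0, the start of record 3; with a register holding 0x10C0, ea_displacement(0x10C0, 2, 4) gives 0x10C8, word 2 (counting from 0) of that record.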


(b) Explain why RISC processors normally use the Execute stage of the pipeline to perform address computation.

Primary reason: Savings in hardware.

Why:

In a RISC architecture, arithmetic instructions (e.g. add, sub, mul, div, shl, shr, and, or, not) cannot access memory, and hence do not require address computation. The arithmetic unit can be dedicated entirely to executing the instruction.

Instructions that require address computation (e.g. load, store) cannot perform arithmetic (e.g. add, sub), and hence the arithmetic unit is used solely for computing the address.

So there is no need for a separate arithmetic unit for address computation; the unit in the EX stage can be re-used. Economical.

Downside: the effective (memory) address is known only at the end of the EX stage.


Question 3

(a) Explain the idea of “set-associative” in cache, translation-lookaside buffer or other devices that use the idea.

Side issues (not related to question)

Direct-mapped cache:

The rightmost n bits of an address or VPN are used to index a cache with 2^n blocks. The data (or PPN) for this address (or VPN) then goes to the indexed block. The bits to the left of these index bits are used as “tags” to determine a cache hit/miss. E.g. for a DM cache with n = 4:

Data for address 001101 goes to block 13, tag 00
Data for address 001010 goes to block 10, tag 00
Data for address 011101 goes to block 13, tag 01
Data for address 111001 goes to block 9, tag 11

Good: Simple to implement.

Bad: Frequent collisions resulting in a poor hit rate. E.g. repeatedly alternating accesses to locations 001101 and 011101 (i.e. access 001101, then 011101, then 001101, then 011101) will result in a hit rate of 0.
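A minimal C sketch of the direct-mapped lookup, assuming the 6-bit addresses and n = 4 of the example, written in hex (001101 = 0x0D, 001010 = 0x0A, 011101 = 0x1D, 111001 = 0x39); DmCache, dm_index, dm_tag and dm_access are invented names.

```c
#include <assert.h>
#include <stdbool.h>

#define N_INDEX_BITS 4                   /* n = 4, as in the example */
#define N_BLOCKS (1u << N_INDEX_BITS)    /* 2^n blocks */

typedef struct {
    bool valid[N_BLOCKS];
    unsigned tag[N_BLOCKS];
} DmCache;

unsigned dm_index(unsigned addr) { return addr & (N_BLOCKS - 1); }  /* rightmost n bits */
unsigned dm_tag(unsigned addr)   { return addr >> N_INDEX_BITS; }   /* bits to the left */

/* Look up addr. On a miss the indexed block is simply overwritten:
   a direct-mapped cache has no choice of where to put the data. */
bool dm_access(DmCache *c, unsigned addr)
{
    unsigned i = dm_index(addr), t = dm_tag(addr);
    if (c->valid[i] && c->tag[i] == t)
        return true;
    c->valid[i] = true;
    c->tag[i] = t;
    return false;
}
```

Alternating between 0x0D and 0x1D never hits, because both map to block 13 and keep evicting each other.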

Fully associative Cache/TLB:

The entire address/VPN is stored as a tag in any block of the cache. When an address/VPN is presented, all blocks are searched in parallel for a matching tag. On a hit, data is read out of/written to that block.

Good: Flexibility to implement clever replacement algorithms to maximize hit rate.

Bad: Needs a comparator for every block. Becomes too big and slow when too many blocks are involved; hence limited to small caches.


Actual Answer:

Set-Associative Cache/TLB:

A compromise between DM and FA. The rightmost m bits of the address are used to index a set of blocks, and blocks within this set are fully associative. There are 2^m sets, with a certain number of blocks in each set. E.g. if m = 3, there are 8 sets. Suppose each set has 2 blocks. Then:

Data for address 001101 goes to set 5 block 0, tag 001
Data for address 001010 goes to set 2 block 0, tag 001
Data for address 011101 goes to set 5 block 1, tag 011
Data for address 111001 goes to set 1 block 0, tag 111

To access, take the rightmost 3 bits (e.g. 101b = 5), then search both blocks in set 5 for the tag 001. If found, hit; else miss.

Good: Has the flexibility offered by FA in allocating blocks to maximize hit rate, yet only requires as many comparators as there are blocks in each set. So if each set has 2 blocks, we need only 2 comparators. Fast, cheap.

Bad: Less associativity (and a lower hit rate) than FA. More complex than DM.
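A minimal C sketch of the 2-way, 8-set example, assuming the same 6-bit addresses in hex and least-recently-used replacement within a set (the text does not say which replacement policy is used); SaCache, sa_set, sa_tag and sa_access are invented names. Each lookup makes only WAYS tag comparisons, one per block of the selected set.

```c
#include <assert.h>
#include <stdbool.h>

#define SET_BITS 3                 /* m = 3, so 2^3 = 8 sets */
#define N_SETS (1u << SET_BITS)
#define WAYS 2                     /* blocks per set */

typedef struct {
    bool valid[N_SETS][WAYS];
    unsigned tag[N_SETS][WAYS];
    unsigned lru[N_SETS];          /* assumption: 2-way LRU, way to replace next */
} SaCache;

unsigned sa_set(unsigned addr) { return addr & (N_SETS - 1); }  /* rightmost m bits */
unsigned sa_tag(unsigned addr) { return addr >> SET_BITS; }

/* Returns the way holding addr after the access; *hit reports hit or miss. */
int sa_access(SaCache *c, unsigned addr, bool *hit)
{
    unsigned s = sa_set(addr), t = sa_tag(addr);
    for (int w = 0; w < WAYS; w++) {
        if (c->valid[s][w] && c->tag[s][w] == t) {
            c->lru[s] = 1 - w;     /* the other way is now least recently used */
            *hit = true;
            return w;
        }
    }
    int w = (int)c->lru[s];        /* miss: fill the least recently used way */
    c->valid[s][w] = true;
    c->tag[s][w] = t;
    c->lru[s] = 1 - w;
    *hit = false;
    return w;
}
```

With two ways, 001101 and 011101 sit side by side in set 5, so the alternating pattern that never hit in the direct-mapped cache hits every time after the two initial misses.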


(b) In a multiprocessor shared-memory system, one processor may invalidate data in another processor. Explain why this is useful, and describe one example of such invalidation going through it step by step from the initial condition making invalidation necessary to subsequent events canceling the invalidation.

When a memory block is shared and has multiple copies in different caches, a processor that modifies its copy must invalidate the other copies, so that the other processors obtain the latest values when they next use the block instead of using outdated copies. The events are (note: this is NOT the MESI protocol, but an imaginary one):

(1) Processor A first uses block X, which is tagged Exclusive.
(2) Processor B also uses block X, and both copies are tagged Shared.
(3) Processor A modifies its copy, which is again marked Exclusive and is written through to memory, while B's copy is marked Invalid.
(4) An access by B to its copy causes a cache miss, and the latest copy is fetched from memory. Both copies are now marked Shared.
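A minimal C sketch of the four events, modeling the imaginary protocol above with two caches, a single one-word block X, write-through on every write and invalidation of all other copies; System, cpu_read and cpu_write are invented names, and real protocols such as MESI differ in detail.

```c
#include <assert.h>

typedef enum { INVALID, SHARED, EXCLUSIVE } State;

#define NCPU 2   /* assumption: processor A = 0, processor B = 1 */

typedef struct {
    int memory;             /* memory copy of block X */
    State state[NCPU];      /* each cache's state for block X */
    int data[NCPU];         /* each cache's copy of X */
} System;

/* Read of X by processor p. Returns 1 on a cache miss, 0 on a hit. */
int cpu_read(System *s, int p, int *value)
{
    int miss = (s->state[p] == INVALID);
    if (miss) {
        s->data[p] = s->memory;            /* fetch the latest copy from memory */
        int other_valid = 0;
        for (int q = 0; q < NCPU; q++) {
            if (q != p && s->state[q] != INVALID) {
                s->state[q] = SHARED;      /* another holder: both become Shared */
                other_valid = 1;
            }
        }
        s->state[p] = other_valid ? SHARED : EXCLUSIVE;
    }
    *value = s->data[p];
    return miss;
}

/* Write of X by processor p: write through and invalidate every other copy. */
void cpu_write(System *s, int p, int value)
{
    s->data[p] = value;
    s->memory = value;                     /* write-through */
    s->state[p] = EXCLUSIVE;
    for (int q = 0; q < NCPU; q++)
        if (q != p)
            s->state[q] = INVALID;         /* others must re-fetch on next use */
}
```

Running events (1) to (4) in order leaves A Exclusive after (1), both Shared after (2), A Exclusive and B Invalid after (3), and both Shared again after (4), with B now holding the new value.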


Question 4

(a) Explain why the Ethernet protocol is not suitable for linking processors with memory modules, which usually go through a bus or switch.

Main point: Transfers to/from memory are usually small and frequent. Hence low overhead, low latency and predictable timing are important.

Characteristics of Ethernet:

i) High overheads: Ethernet has a complex packet and framing structure, and requires line encoding before transmitting and decoding upon receiving (e.g. Manchester encoding in classic 10 Mb/s Ethernet). All these add to the overheads.
Buses and switches generally use plain +5 V/0 V binary levels. Routing is done once and data is sent rapidly.

ii) Slow: Ethernet is a serial medium, transmitting 1 bit at a time.
Buses and switches are parallel architectures, with widths matching the machine word size (or more!). Hence they are fast to transmit.

iii) Ethernet arbitration is by contention. A station monitors the line until it is quiet, then transmits. As it transmits, it continues to monitor for collisions. If a collision occurs, the transmitting stations back off for a random period of time and try again. Hence latencies are unpredictable.
Buses and switches, once set up and routed properly, have fixed latencies (bus propagation delay + switch latencies).

iv) Contention-based protocols also cause bandwidth to decay rapidly if many stations (CPUs) are transmitting (sending to/receiving from memory). At worst, it can decay to almost 0 bps.
Buses and switches, once set up and routed, are dedicated channels between CPU and memory.
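Point iii can be made concrete with the retry rule classic Ethernet (IEEE 802.3 CSMA/CD) uses, truncated binary exponential backoff: after the n-th collision a station waits a random number of slot times between 0 and 2^min(n, 10) - 1, and the frame is abandoned after 16 attempts. A minimal C sketch, using rand() purely for illustration; backoff_slots is an invented name and the slot duration itself is not modeled.

```c
#include <assert.h>
#include <stdlib.h>

#define BACKOFF_LIMIT 10   /* exponent cap in truncated binary exponential backoff */
#define ATTEMPT_LIMIT 16   /* the frame is dropped after this many attempts */

/* Slot times to wait after the n-th consecutive collision (n >= 1),
   or -1 if the frame should be abandoned. rand() is for illustration only. */
long backoff_slots(int n)
{
    if (n >= ATTEMPT_LIMIT)
        return -1;
    int k = n < BACKOFF_LIMIT ? n : BACKOFF_LIMIT;
    long range = 1L << k;          /* uniform choice from 0 .. 2^k - 1 */
    return rand() % range;
}
```

Because every collision widens the random window, the delay seen by any one CPU depends on how many others are contending and on chance, which is exactly the unpredictable latency that memory traffic cannot tolerate.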


(b) A polynomial a + bz + cz^2 + dz^3 + ez^4 + fz^5 + gz^6 + hz^7 + ... may be computed on an N-node distributed machine in O(log N) steps as follows:

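(each node multiplies its coefficient by z, z^2 and z^4 in successive steps, according to the binary digits of its index)

node 0: a
node 1: b * z
node 2: c * z^2
node 3: d * z * z^2
node 4: e * z^4
node 5: f * z * z^4
node 6: g * z^2 * z^4
node 7: h * z * z^2 * z^4
..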

followed by a summation which takes log N steps, as shown in a tutorial. Outline a simple program doing the first part shown above. There is no need to show the rest of the program, which is the summation part. The two parts are quite similar, as the computation in each iteration depends on a binary digit of the node index.

Analysis:

At each step, the current power v (initially z) is squared. This is so that we can compute z * z to give us z^2, z * z^2 to give us z^3, z^2 * z^2 to give us z^4, z * z^4 to give us z^5, etc.

We also need to multiply in the coefficients at some point. This can be done at any time as long as it is done only once; here r starts as the node's coefficient, and r is multiplied by the current power v whenever I is odd, i.e. whenever the current binary digit of the node index is 1.

The table below shows how it works:


Node  Start          After step 1     After step 2        After step 3
P0    I=0 r=a        I=0 r=a          I=0 r=a             I=0 r=a
P1    I=1 r=b v=z    I=0 r=bz         I=0 r=bz            I=0 r=bz
P2    I=2 r=c v=z    I=1 r=c v=z^2    I=0 r=cz^2          I=0 r=cz^2
P3    I=3 r=d v=z    I=1 r=dz v=z^2   I=0 r=dz^3          I=0 r=dz^3
P4    I=4 r=e v=z    I=2 r=e v=z^2    I=1 r=e v=z^4       I=0 r=ez^4
P5    I=5 r=f v=z    I=2 r=fz v=z^2   I=1 r=fz v=z^4      I=0 r=fz^5
P6    I=6 r=g v=z    I=3 r=g v=z^2    I=1 r=gz^2 v=z^4    I=0 r=gz^6
P7    I=7 r=h v=z    I=3 r=hz v=z^2   I=1 r=hz^3 v=z^4    I=0 r=hz^7

The algorithm takes log2 N steps to complete (3 steps for N = 8).


Program:

r = coeff;      // depends on the node: node 0 has coeff = a, node 1 has coeff = b, etc.
v = z;
I = nodenum();  // get node number
while (I > 0)
{
    if (odd(I))
        r = r * v;
    v = v * v;
    I = I / 2;
}
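A runnable C sketch of the program above, assuming real-valued coefficients and simulating the N nodes one after another on a single machine; node_term and poly_eval are invented names, and the final sum is a plain loop rather than the log N tree summation from the tutorial.

```c
#include <assert.h>

/* Work done by the node with index `node`: r ends as coeff * z^node, using one
   squaring of v per binary digit of the node index. */
double node_term(double coeff, double z, unsigned node)
{
    double r = coeff;
    double v = z;          /* v = z^(2^k) at step k */
    unsigned i = node;
    while (i > 0) {
        if (i & 1u)        /* current binary digit of the node index is 1 */
            r = r * v;
        v = v * v;
        i = i / 2;
    }
    return r;
}

/* Sequential stand-in for the N-node machine: each iteration plays one node.
   Assumption: a simple loop replaces the parallel summation phase. */
double poly_eval(const double coeff[], unsigned n, double z)
{
    double sum = 0.0;
    for (unsigned node = 0; node < n; node++)
        sum += node_term(coeff[node], z, node);
    return sum;
}
```

With a..h = 1..8 and z = 2, node 7 computes 8 * 2^7 = 1024 in three loop iterations, and the terms sum to 1793.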


Question 5

(a) Describe the use of parity checks in storage systems and ATM switches. For each example, describe the situation when the parity checks are needed to solve a problem, and how this solution is carried out.

Storage systems:

Parity is computed by adding (bitwise XOR, i.e. addition modulo 2) across the same block on all disks, and across all blocks within the same disk. When a single disk or block fails, it is recovered by subtracting the sum of all the other disks/blocks from the parity (with XOR, subtraction is the same operation as addition). Recovery of multiple disks is also possible (see tutorial question).
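A minimal C sketch of one dimension of the scheme (parity across the same block of every disk), assuming 4 data disks, 8-byte blocks and XOR parity; compute_parity and recover_disk are invented names. The parity across blocks within one disk works the same way along the other dimension.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define NDISKS 4   /* assumption: number of data disks */
#define BLOCK 8    /* assumption: bytes per block */

/* Parity block = XOR (bitwise sum modulo 2) of the same block on every data disk. */
void compute_parity(uint8_t data[NDISKS][BLOCK], uint8_t parity[BLOCK])
{
    for (size_t b = 0; b < BLOCK; b++) {
        parity[b] = 0;
        for (size_t d = 0; d < NDISKS; d++)
            parity[b] ^= data[d][b];
    }
}

/* Rebuild the block of failed disk `lost`. XOR is its own inverse, so
   "subtracting" the surviving disks from the parity is XOR-ing them in. */
void recover_disk(uint8_t data[NDISKS][BLOCK], const uint8_t parity[BLOCK], size_t lost)
{
    for (size_t b = 0; b < BLOCK; b++) {
        uint8_t x = parity[b];
        for (size_t d = 0; d < NDISKS; d++)
            if (d != lost)
                x ^= data[d][b];
        data[lost][b] = x;
    }
}
```

If disk 2 is lost, recover_disk(data, parity, 2) reconstructs its contents byte for byte from the three surviving disks and the parity.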

ATM Switch:

An ATM switch computes a checksum across all data in the packet and compares it with the checksum stored in the packet. If they match, the packet is sent along to the next node in the virtual circuit. If they do not match, the switch asks the previous node to re-send the packet.


(b) The Itanium processor is classified as an EPIC, explicitly parallel instruction computer. Explain the concept of EPIC. Also explain how Itanium handles branches and how this is different from MIPS processors.

Reference: Computer Organization and Architecture, 5th ed., William Stallings.

Key point: Explicit parallelism.

i) EPIC is a VLIW (very long instruction word) architecture.
   i. In this architecture, multiple instructions can be placed into a single instruction word.
   ii. Each instruction word is loaded, and its instructions are dispatched to separate execution units.
   iii. Dependencies are NOT checked by hardware!!
   The IA-64 can handle up to 3 instructions per instruction word.

ii) Instructions that can be executed in parallel must be bundled together. Bits are set in the “template” portion of the instruction to tell the CPU which instructions are independent. In the IA-64/Itanium, the independent instructions can span instruction words.
   i. The compiler re-arranges instructions so that independent instructions are placed into the same instruction word or contiguous instruction words. So if the compiler can find 8 independent instructions, they will span a total of 2 2/3 instruction words (each holding 3 instructions). The template bits are set to show that these eight instructions are independent.
   ii. The CPU takes these 8 instructions and can execute them in parallel.

iii) Hence parallelism must be explicitly set by the compiler in the instruction -> Explicitly Parallel Instruction Computer.
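A simplified C sketch of the compiler's job in ii), assuming each instruction has one destination and two source registers, and that two instructions may share a parallel group unless the later one reads or overwrites the earlier one's destination (RAW or WAW). It only splits the program, in order, into groups and counts 3-slot bundles (the 3 slots match the IA-64 figure above); it does not reorder instructions or produce the real template/stop-bit encoding. Op, form_groups and bundles_needed are invented names.

```c
#include <assert.h>
#include <stdbool.h>

#define SLOTS_PER_BUNDLE 3   /* instructions per IA-64 bundle */
#define NO_REG (-1)

/* Hypothetical instruction: dst <- src1 op src2 (NO_REG = operand unused). */
typedef struct { int dst, src1, src2; } Op;

/* b may not share a parallel group with the earlier a if b reads a's result
   (RAW) or writes the same register (WAW). */
static bool depends(const Op *a, const Op *b)
{
    if (a->dst == NO_REG)
        return false;
    return b->src1 == a->dst || b->src2 == a->dst || b->dst == a->dst;
}

/* Split ops[0..n), kept in program order, into groups of mutually independent
   instructions; group_of[i] receives the group number of ops[i]. A new group
   (a "stop") starts whenever an instruction depends on one already in the
   current group. Returns the number of groups. */
int form_groups(const Op ops[], int n, int group_of[])
{
    int group = 0, start = 0;
    for (int i = 0; i < n; i++) {
        for (int j = start; j < i; j++) {
            if (depends(&ops[j], &ops[i])) {
                group++;
                start = i;
                break;
            }
        }
        group_of[i] = group;
    }
    return n > 0 ? group + 1 : 0;
}

/* Bundles occupied by k instructions at 3 per bundle: 8 instructions
   fill 2 2/3 bundles, i.e. they occupy 3 bundles. */
int bundles_needed(int k)
{
    return (k + SLOTS_PER_BUNDLE - 1) / SLOTS_PER_BUNDLE;
}
```

Eight instructions with no shared registers form a single group spread over 3 bundles; a sequence where the second instruction reads the first one's result needs a stop between them.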


Branches:

MIPS handles branches by attempting to predict the direction of the branch (taken/not taken). If the branch is predicted taken, instructions are fetched and executed from the target; if predicted not taken, the instructions following the branch are fetched and executed. A misprediction requires the pipeline to be flushed before the correct instructions are fetched and executed.

Itanium handles branches by predication. E.g.:

if (x == 1)
{
    y = y + 1;
    z = y * 2;
}
else
{
    y = y - 2;
    z = y / 3;
}

Let x be register r1, y be r2, z be r3.

Conventional MIPS style:

           cmpi  r0, r1, 1      // r0 is 0 if r1 != 1
           jz    r0, ELSE_PART
           addi  r2, r2, 1
           multi r3, r2, 2
           j     EXIT
ELSE_PART: subi  r2, r2, 2
           divi  r3, r2, 3
EXIT:      …

But in Itanium style, a predicate Px is attached to the instructions in the TRUE portion, and a predicate Py to the instructions in the FALSE portion. Predicates need not be attached to any other instructions.


<P1, P2> = cmp(r1 == 1)   // P1 = true predicate, P2 = false predicate
<P1> addi  r2, r2, 1
<P1> multi r3, r2, 2
<P2> subi  r2, r2, 2
<P2> divi  r3, r2, 3

Predication allows both the TRUE and FALSE portions to be executed simultaneously. When the outcome of the comparison is known, only the results of the correct predicate are committed; the results of the wrong predicate are discarded. This can be achieved by writing the results of both sides (<P1> and <P2>) to the ROB (re-order buffer), attaching to each result a tag indicating which predicate it belongs to. Results with the correct tag are then committed, while those with the wrong tag are purged from the ROB.

This simplifies branch handling and eliminates the cost of pipeline flushing. Moreover, the predicated streams are naturally independent, so they give the compiler more independent instructions with which to fill instruction bundles, and they can be executed in parallel.


Side Issues

The Itanium has speculative loading. A speculative load returns immediately (instead of waiting for the load to complete), and the load proceeds independently. Moreover, the result of the load is not committed to the register until a later time. This allows the compiler to move all loads to the start of the program and schedule other instructions while the load is in progress, until the results of the load are actually needed.

Of course, the load may trigger an exception (e.g. loading from another process's address space, causing a protection violation). It is not useful to interrupt the process there and then, because the load may never actually be used (e.g. it may have been pulled up from a portion of code that would never have been executed). Instead, a check is done just before the result of the load is used, and if an error occurred, the exception is triggered at this point. This check operation also commits the result of the load to the register, since the speculative load took place at the start of the program rather than at the “correct” position.

E.g. original code without speculation and predication:

       cmpi r0, r1, 1
       jz   r0, ELSE
       lw   r4, 0(r7)
       addi r4, r3, 2
       mul  r7, r4, r3
       j    EXIT
ELSE:  lw   r5, 0(r1)
       addi r5, r3, 2
       divi r7, r5, 7
EXIT:  …

With predication:

<p1, p2> = cmpi(r1 == 1)
<p1> lw   r4, 0(r7)
<p1> addi r4, r3, 2
<p1> mul  r7, r4, r3
<p2> lw   r5, 0(r1)
<p2> addi r5, r3, 2
<p2> divi r7, r5, 7
EXIT: …


Pull all lw instructions to the top and replace them with speculative loads (s.lw). Replace each original lw in the predicated instructions with s.check to check for exceptions.

s.lw r4, 0(r7)
s.lw r5, 0(r1)
<p1, p2> = cmpi(r1 == 1)
<p1> s.check r4
<p1> addi r4, r3, 2
<p1> mul  r7, r4, r3
<p2> s.check r5
<p2> addi r5, r3, 2
<p2> divi r7, r5, 7
EXIT: …

The s.check checks for exceptions and commits the result of the load to the register.
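On the real IA-64 the corresponding instructions are ld.s, which sets a NaT ("Not a Thing") bit on the target register instead of faulting, and chk.s, which branches to recovery code when that bit is set. The C sketch below only mimics the s.lw / s.check behavior described above, assuming a 16-word memory in which addresses 8 and up belong to another process; SpecReg, s_lw and s_check are invented names.

```c
#include <assert.h>
#include <stdbool.h>

#define MEM_WORDS 16
#define USER_LIMIT 8     /* assumption: addresses >= 8 belong to another process */

typedef struct {
    int value;
    bool deferred_fault; /* recorded instead of trapping (like IA-64's NaT bit) */
} SpecReg;

static int memory[MEM_WORDS];

/* s.lw: start the load early; a protection fault is recorded, not raised. */
SpecReg s_lw(int addr)
{
    SpecReg r = { 0, false };
    if (addr < 0 || addr >= USER_LIMIT)
        r.deferred_fault = true;     /* would have been a protection violation */
    else
        r.value = memory[addr];
    return r;
}

/* s.check: runs where the original lw was. Returns false if the deferred
   exception must be raised now; otherwise commits the value to *reg. */
bool s_check(SpecReg r, int *reg)
{
    if (r.deferred_fault)
        return false;
    *reg = r.value;
    return true;
}
```

If p2 is false, s.check r5 is never executed, so a faulting speculative load on the untaken path never raises an exception.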
