Chapter 3


The most important questions of life are, for the most part, really only problems of probability. (Marquis de Laplace)

Probability Concepts: Quantifying Uncertainty


The Language of Probability

Probability as Long-Run Frequency

Pr[head] = .50 because .50 is the long-run frequency of getting a head in many tosses.
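A minimal Python sketch of the long-run frequency idea, assuming a fair coin simulated with Python's `random` module. The helper name `head_frequency` and the seed are illustrative choices, not part of the text. The proportion of heads should settle near .50 as the number of tosses grows.

```python
import random


def head_frequency(n_tosses: int, seed: int = 1) -> float:
    """Simulate n_tosses of a fair coin and return the proportion of heads."""
    rng = random.Random(seed)  # seeded generator so each run is reproducible
    heads = sum(1 for _ in range(n_tosses) if rng.random() < 0.5)
    return heads / n_tosses


# The proportion of heads drifts toward .50 as the number of tosses grows.
for n in (10, 1_000, 100_000):
    print(f"{n:>7} tosses: proportion of heads = {head_frequency(n):.3f}")
```

Short runs can stray far from .50; only the long-run proportion is pinned down.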

The Random Experiment and Its Elementary Events

The elementary events of a random experiment are all of its possible outcomes. Examples:

Coin toss: {head, tail}

Number of arrivals: {0,1,2,...}

Playing card: {♥K, ♦K, ♣K, ♠K,..., ♣A, ♠A}


The complete collection of elementary events is the sample space.

The Language of Probability

Certain events:

Pr[certain event] = 1 (occurs every time)

“head or tail”

“success or failure”

“Dow’s next close is some value.”

Impossible events:

Pr[impossible event] = 0 (never occurs)

“Coin toss has no outcome.”

“Both success and failure.”

“Dow hits minus 500.”


Finding a Probability

Count-and-Divide

For a randomly selected playing card, Pr[♥Q] = 1/52, Pr[queen] = 4/52, Pr[♥] = 13/52

Works only when elementary events are equally likely.

Must be able to count possibilities.

May often be logically deduced.
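Count-and-divide can be sketched in Python by listing the 52 equally likely cards and counting those where the event holds. The helper `count_and_divide` and the `DECK` layout are hypothetical names chosen for illustration; `Fraction` keeps the answers exact.

```python
from fractions import Fraction
from itertools import product

RANKS = ["2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K", "A"]
SUITS = ["♥", "♦", "♣", "♠"]

# The sample space: 52 equally likely (rank, suit) cards
DECK = [(rank, suit) for rank, suit in product(RANKS, SUITS)]


def count_and_divide(event) -> Fraction:
    """Pr[event] = (number of cards where event holds) / (number of cards).

    Valid only because every card is equally likely to be selected.
    """
    favorable = sum(1 for card in DECK if event(card))
    return Fraction(favorable, len(DECK))


print(count_and_divide(lambda card: card == ("Q", "♥")))  # Pr[♥Q]
print(count_and_divide(lambda card: card[0] == "Q"))      # Pr[queen]
print(count_and_divide(lambda card: card[1] == "♥"))      # Pr[♥]
```

`Fraction` prints results in lowest terms, so 4/52 appears as 1/13 and 13/52 as 1/4.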

Historical Frequencies

Pr[house burns down] = .003 because in any past year about three out of every thousand such homes did burn down.


Finding a Probability

Application of a Probability Law

Multiplication law

Addition law

Applies to composite events. The separate individual probabilities must be known first.

Pull Out of “Thin Air” Using Judgment


Pr[Dow rises over 100 tomorrow]=.17

Above is a subjective probability. It measures one person’s “strength of conviction” that the event will occur.

Types of Random Experiments and Probabilities

Repeatable Random Experiments

Producing a microcircuit (satisfactory or defective).

Random arrival of a customer in any minute.

Pr[satisfactory] is a long-run frequency and an objective probability.

Non-repeatable Random Experiments


A product is launched and might be a success.

Completing the course (possibly with an “A”).

Pr[A] is a judgmental assessment and is a subjective probability.

Joint Probability Table I

Start with a Cross Tabulation:

                        LEVEL
SEX           Undergrad. (U)   Graduate (G)   Total
Male (M)            24              16          40
Female (F)          18              14          32
Total               42              30          72

Joint Probability Table I

Count and divide: the respective joint probabilities appear in the interior cells. The marginal probabilities appear in the margin for the respective row or column.

                               LEVEL
SEX                   Undergrad. (U)   Graduate (G)   Marginal Probability
Male (M)                  24/72            16/72             40/72
Female (F)                18/72            14/72             32/72
Marginal Probability      42/72            30/72               1
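The table-building steps can be sketched in Python: count and divide for the interior cells, then sum across each row or column for the margins. The names `COUNTS`, `joint`, and `marginal` are hypothetical, chosen for illustration.

```python
from fractions import Fraction

# Cross-tabulated counts from the table above: (sex, level) -> number of students
COUNTS = {("M", "U"): 24, ("M", "G"): 16, ("F", "U"): 18, ("F", "G"): 14}
TOTAL = sum(COUNTS.values())  # 72 students in all

# Interior cells: joint probabilities by count-and-divide
joint = {cell: Fraction(n, TOTAL) for cell, n in COUNTS.items()}


def marginal(label: str) -> Fraction:
    """Sum the joint probabilities in the row or column carrying this label."""
    return sum(p for (sex, level), p in joint.items() if label in (sex, level))


for label in ("M", "F", "U", "G"):
    print(label, marginal(label))
```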

Joint and Marginal Probabilities

The joint probabilities involve “and” for two or more events:

Pr[M and U] = 24/72

Pr[G and F] = 14/72

The marginal probabilities involve single events:

Pr[M] = 40/72

Pr[F] = 32/72

Pr[U] = 42/72

Pr[G] = 30/72

The term “marginal probability” derives from the position of that particular value: it lies in the margin of the joint probability table.


The Multiplication Law for Finding “And” Probabilities

Multiplication Law for Independent Events

Pr[A and B] = Pr[A] × Pr[B]

Example: Two lopsided coins are tossed.

Coin 1 is concave, so that Pr[H1] = .15 (estimated from repeated tosses)

Coin 2 is altered, so that Pr[H2]=.60

Pr[both heads]=Pr[H1 and H2]

=Pr[H1]×Pr[H2]

=.15×.60=.09
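A minimal Python sketch that applies the multiplication law to the two lopsided coins and cross-checks it by simulating many independent double tosses. The seed and number of trials are arbitrary assumptions for illustration.

```python
import random

P_H1 = 0.15  # concave coin 1
P_H2 = 0.60  # altered coin 2

# Multiplication law for independent events
p_both = P_H1 * P_H2
print(f"Pr[H1 and H2] = {p_both:.2f}")

# Simulation check: each coin gets its own fresh random number, so the tosses are independent.
# The second coin is only simulated when the first shows a head, which does not change the result.
rng = random.Random(7)
n = 200_000
both = sum(1 for _ in range(n) if rng.random() < P_H1 and rng.random() < P_H2)
print(f"simulated frequency of two heads = {both / n:.3f}")
```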


Need for Multiplication Law

Use the multiplication law when counting and dividing won’t work, because:

Elementary events are not equally likely.

Sample space is impossible to enumerate.

Only component event probabilities are known.

This multiplication law only works for independent events.


For the lopsided coins, independence holds only if H2 is unaffected by the occurrence of H1. That would not be the case, for example, if the altered coin were tossed only when the first coin landed tails.

The Addition Law for Finding “Or” Probabilities

The following addition law applies to mutually exclusive events:

Pr[A or B or C] = Pr[A] + Pr[B] + Pr[C]

For example, suppose the 72 students on a previous slide included 16 accounting majors, 24 finance majors, and 20 marketing majors, with no double majors. One student is chosen randomly. Then,

Pr[accounting or finance or marketing]

= Pr[accounting]+Pr[finance]+Pr[marketing]

= 16/72 + 24/72 + 20/72 = 60/72
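A short Python sketch of the same arithmetic, assuming (as the example does) that each student holds at most one of these majors. The names `MAJORS` and `pr` are illustrative.

```python
from fractions import Fraction

# Breakdown supposed in the example; the majors are mutually exclusive
MAJORS = {"accounting": 16, "finance": 24, "marketing": 20}
N_STUDENTS = 72


def pr(major: str) -> Fraction:
    """Count-and-divide probability that a random student has this major."""
    return Fraction(MAJORS[major], N_STUDENTS)


# Addition law for mutually exclusive events
p_any = pr("accounting") + pr("finance") + pr("marketing")

# Same answer by counting all 60 students with these majors and dividing
print(p_any, p_any == Fraction(sum(MAJORS.values()), N_STUDENTS))
```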


Why Have the Addition Law?

The addition law is needed when:

Only the component probabilities are known.

It is faster or simpler than counting and dividing.

There is insufficient information for counting and dividing. (It was not essential for the preceding example, since we could have instead used the fact that 60 out of 72 students had the majors in question.)

The preceding addition law requires mutually exclusive events. (Students with double majors would invalidate it.)


Must We Use a Law?

Use a probability law only when it is helpful.

Counting and dividing may be easier to do.

But a law may not work. For example:

Pr[Queen and Face card] ≠ Pr[Queen] × Pr[Face]

(because Queen and Face are not independent)

Pr[Queen or ♥] ≠ Pr[Queen] + Pr[♥]

(because Queen and ♥ overlap at the ♥Q, making them not mutually exclusive)
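Both failures can be confirmed by counting over a standard deck. This Python sketch (helper names `pr`, `queen`, `face_card`, `heart` are hypothetical) compares each law's shortcut with the count-and-divide answer.

```python
from fractions import Fraction
from itertools import product

RANKS = ["2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K", "A"]
DECK = list(product(RANKS, "♥♦♣♠"))  # 52 equally likely (rank, suit) cards


def pr(event) -> Fraction:
    """Count-and-divide over the deck."""
    return Fraction(sum(1 for card in DECK if event(card)), len(DECK))


def queen(card):
    return card[0] == "Q"


def face_card(card):
    return card[0] in {"J", "Q", "K"}


def heart(card):
    return card[1] == "♥"


# "And": every queen is a face card, so the simple product is wrong
p_queen_and_face = pr(lambda c: queen(c) and face_card(c))
print(p_queen_and_face, pr(queen) * pr(face_card))

# "Or": the ♥Q would be counted twice by the simple sum
p_queen_or_heart = pr(lambda c: queen(c) or heart(c))
print(p_queen_or_heart, pr(queen) + pr(heart))
```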


Some Important Properties Resulting from Addition Law

The cells of a joint probability table sum to the respective marginal probabilities. Thus,

Pr[M] = Pr[M and U] + Pr[M and G]

Pr[G] = Pr[M and G] + Pr[F and G]

When events A, B, C are both collectively exhaustive and mutually exclusive:

Pr[A or B or C] = 1 (due to certainty)

Pr[A] + Pr[B] + Pr[C] = 1 (by addition law)

Complementary Events: Pr[A or not A] = 1


Pr[A] + Pr[not A] = 1 and Pr[A] = 1 – Pr[not A]
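These properties can be checked against Joint Probability Table I with a few lines of Python. The variable names are illustrative; the joint probabilities are the ones in the table.

```python
from fractions import Fraction

# Joint probabilities from Joint Probability Table I
joint = {
    ("M", "U"): Fraction(24, 72), ("M", "G"): Fraction(16, 72),
    ("F", "U"): Fraction(18, 72), ("F", "G"): Fraction(14, 72),
}

# Cells sum to the marginal probabilities
pr_M = joint[("M", "U")] + joint[("M", "G")]
pr_F = joint[("F", "U")] + joint[("F", "G")]
pr_G = joint[("M", "G")] + joint[("F", "G")]

# M and F are mutually exclusive and collectively exhaustive, so Pr[M] + Pr[F] = 1
print(pr_M + pr_F)

# Complementary events: "not G" is U here, so Pr[U] = 1 - Pr[G]
pr_U = 1 - pr_G
print(pr_U, joint[("M", "U")] + joint[("F", "U")])
```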

Independence Defined

A and B are independent whenever

Pr[A and B] = Pr[A] × Pr[B]

and are not independent otherwise.

A and B are independent if Pr[A] is always the same regardless of whether:


B occurs.

B does not occur.

Nothing is known about the occurrence or nonoccurrence of B.

Establishing Independence

Yes, if multiplication gives correct result.

2 fair coin tosses: Pr[H1]×Pr[H2] = Pr[H1 & H2]

H1 and H2 are independent if above is true.

No, otherwise. Consider Queen and Face card:

Pr[Face card] = 12/52

Pr[Queen] = 4/52

The product 12/52 × 4/52 = .018 is not equal to Pr[Face card and Queen] = 4/52 = .077
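The “does multiplication give the correct result?” test can be sketched as a small Python function over a sample space of equally likely outcomes. `pr` and `independent` are hypothetical helper names; exact `Fraction` arithmetic avoids false mismatches from floating-point rounding.

```python
from fractions import Fraction
from itertools import product


def pr(event, space) -> Fraction:
    """Count-and-divide over a sample space of equally likely outcomes."""
    return Fraction(sum(1 for outcome in space if event(outcome)), len(space))


def independent(a, b, space) -> bool:
    """True exactly when Pr[A and B] equals Pr[A] × Pr[B]."""
    p_both = pr(lambda outcome: a(outcome) and b(outcome), space)
    return p_both == pr(a, space) * pr(b, space)


# Two fair coin tosses: four equally likely outcomes such as ("H", "T")
TOSSES = list(product("HT", repeat=2))


def head_first(toss):
    return toss[0] == "H"


def head_second(toss):
    return toss[1] == "H"


# One card from a standard deck: 13 ranks × 4 suits
RANKS = ["2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K", "A"]
DECK = list(product(RANKS, "♥♦♣♠"))


def queen(card):
    return card[0] == "Q"


def face_card(card):
    return card[0] in {"J", "Q", "K"}


print(independent(head_first, head_second, TOSSES))  # H1 and H2
print(independent(queen, face_card, DECK))           # Queen and Face card
```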

Can be self-evident (assumed):


Sex of two randomly chosen people.

Person’s height and political affiliation.

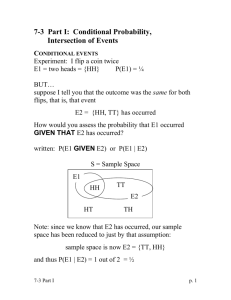

Conditional Probability

The conditional probability of event A given event B is denoted Pr[A | B].


Pr[head | tail] = 0 (single toss)

Pr[cloudy | rain] = 1 (always)

Pr[rain | cloudy] = .15 (could be)

Pr[Queen | Face card] = 4/12 (regular cards)

Pr[get A for course | 100% on final] = .85

Pr[pass screen test | good performance] = .65

Pr[good performance | pass screen test] = .90

Independence and Conditional Probability

Two events A, B are independent if

Pr[A | B] = Pr[A]

and when the above is true, it must also be true that

Pr[B | A] = Pr[B]

Independence can be tested by comparing the conditional and unconditional probabilities. Thus, since

Pr[Queen] = 4/52 ≠ 4/12 = Pr[Queen | Face]

the events Queen and Face are not independent.
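For equally likely outcomes, Pr[A | B] can be found by counting and dividing within the reduced sample space where B occurs. This Python sketch (helper names `pr` and `pr_given` are hypothetical) compares conditional and unconditional probabilities for Queen and Face, in both directions.

```python
from fractions import Fraction
from itertools import product

RANKS = ["2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K", "A"]
DECK = list(product(RANKS, "♥♦♣♠"))  # 52 equally likely (rank, suit) cards


def pr(event, space) -> Fraction:
    """Count-and-divide over a list of equally likely outcomes."""
    return Fraction(sum(1 for outcome in space if event(outcome)), len(space))


def pr_given(a, b, space) -> Fraction:
    """Pr[A | B]: count-and-divide within the outcomes where B occurs."""
    reduced = [outcome for outcome in space if b(outcome)]
    return pr(a, reduced)


def queen(card):
    return card[0] == "Q"


def face_card(card):
    return card[0] in {"J", "Q", "K"}


print(pr(queen, DECK), pr_given(queen, face_card, DECK))      # Pr[Queen] vs Pr[Queen | Face]
print(pr(face_card, DECK), pr_given(face_card, queen, DECK))  # Pr[Face] vs Pr[Face | Queen]
```

Both comparisons come out unequal, which agrees with the rule that Pr[A | B] = Pr[A] and Pr[B | A] = Pr[B] hold or fail together.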


General Multiplication Law

The general multiplication law is:

Pr[A and B] = Pr[A] × Pr[B | A]

Or, equivalently, Pr[A and B] = Pr[B] × Pr[A | B]

This is the most important multiplication law, because it always works, whether or not A and B are independent.


Applying the General Multiplication Law


Two out of 10 recording heads are tested and destroyed. Of the 10, 2 are defective (D) and 8 are satisfactory (S). With subscripts 1 and 2 denoting successive selections,

Pr[D1] = 2/10 and Pr[D2 | D1] = 1/9

Pr[S1] = 8/10 and Pr[D2 | S1] = 2/9

Thus, Pr[D1 and D2] = Pr[D1] × Pr[D2 | D1] = 2/10 × 1/9 = 2/90

Pr[S1 and D2] = Pr[S1] × Pr[D2 | S1] = 8/10 × 2/9 = 16/90

And, Pr[D2] = Pr[D1 and D2] + Pr[S1 and D2] = 2/90 + 16/90 = 18/90 = .2
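As a cross-check, a brute-force Python sketch can enumerate all 10 × 9 = 90 equally likely ordered selections of two distinct heads and count directly. The general multiplication law makes this enumeration unnecessary; it is shown only to confirm the results. Names such as `HEADS`, `pairs`, and `pr` are illustrative.

```python
from fractions import Fraction
from itertools import permutations

# 10 recording heads: 2 defective (D) and 8 satisfactory (S)
HEADS = ["D"] * 2 + ["S"] * 8

# Every ordered pair of distinct heads (first, second selection) is equally likely
pairs = list(permutations(range(len(HEADS)), 2))  # 10 * 9 = 90 pairs


def pr(event) -> Fraction:
    """Count-and-divide over the 90 ordered selections."""
    hits = sum(1 for i, j in pairs if event(HEADS[i], HEADS[j]))
    return Fraction(hits, len(pairs))


p_d1_d2 = pr(lambda first, second: first == "D" and second == "D")
p_s1_d2 = pr(lambda first, second: first == "S" and second == "D")
p_d2 = pr(lambda first, second: second == "D")

# Compare with the general multiplication law and the addition law
print(p_d1_d2, Fraction(2, 10) * Fraction(1, 9))
print(p_s1_d2, Fraction(8, 10) * Fraction(2, 9))
print(p_d2, p_d1_d2 + p_s1_d2)
```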

Conditional Probability Identity

Dividing both sides of the multiplication law Pr[A and B] = Pr[B] × Pr[A | B] by Pr[B] (assumed positive) and rearranging terms establishes the conditional probability identity:

Pr[A | B] = Pr[A and B] / Pr[B]


The identity might not provide the answer. We couldn’t use it to find Pr[D2 | D1], because that value was itself used to compute Pr[D1 and D2]. Instead, Pr[D2 | D1] = 1/9 followed from 1 out of the 9 items available for the final selection being defective.
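When the joint and marginal probabilities are already known, as in Joint Probability Table I, the identity does give the answer. A minimal Python sketch, with the hypothetical helper `conditional`, finds Pr[M | U] from values lifted from that table.

```python
from fractions import Fraction


def conditional(p_a_and_b: Fraction, p_b: Fraction) -> Fraction:
    """Conditional probability identity: Pr[A | B] = Pr[A and B] / Pr[B]."""
    if p_b == 0:
        raise ValueError("Pr[B] must be positive")
    return p_a_and_b / p_b


# Values lifted from Joint Probability Table I
p_m_and_u = Fraction(24, 72)
p_u = Fraction(42, 72)

p_m_given_u = conditional(p_m_and_u, p_u)
print(p_m_given_u)  # same as counting: 24 of the 42 undergraduates are male
```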

Joint Probability Table II

Use Probability Laws to Construct:

Knowing that

5% of Gotham City adults drive drunk (D)

12% are alcoholics (A)

40% of all drunk drivers are alcoholics

For a randomly selected adult, we have

Pr[D] = .05

Pr[A | D] = .40

Pr[A] = .12

The multiplication law provides:

Pr[A and D] = Pr[D]×Pr[A | D] = .05(.40) = .02


The following joint probability table can then be constructed.

Joint Probability Table II

All values other than the given Pr[D] = .05 and Pr[A] = .12 and the computed Pr[A and D] = .02 were added using addition law properties.

                                     ALCOHOLISM
CONDITION              Alcoholic (A)   Nonalcoholic (not A)   Marginal Probability
Drunk (D)                   .02                .03                    .05
Sober (not D)               .10                .85                    .95
Marginal Probability        .12                .88                     1
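The construction of this table can be sketched in Python: one multiplication-law step gives Pr[A and D], and the addition-law properties fill in the rest. Decimal strings passed to `Fraction` keep the arithmetic exact; the variable names are illustrative.

```python
from fractions import Fraction

# Given facts for a randomly selected Gotham City adult
pr_D = Fraction("0.05")          # drives drunk
pr_A = Fraction("0.12")          # alcoholic
pr_A_given_D = Fraction("0.40")  # share of drunk drivers who are alcoholics

# Multiplication law gives the one interior cell computable directly
pr_A_and_D = pr_D * pr_A_given_D

# Addition-law properties: interior cells sum to their row and column margins
pr_notA_and_D = pr_D - pr_A_and_D
pr_A_and_notD = pr_A - pr_A_and_D
pr_notD = 1 - pr_D
pr_notA = 1 - pr_A
pr_notA_and_notD = pr_notD - pr_A_and_notD

rows = [
    ("Drunk (D)", pr_A_and_D, pr_notA_and_D, pr_D),
    ("Sober (not D)", pr_A_and_notD, pr_notA_and_notD, pr_notD),
    ("Marginal", pr_A, pr_notA, pr_D + pr_notD),
]
for label, a, not_a, margin in rows:
    print(f"{label:<15}{float(a):>6.2f}{float(not_a):>6.2f}{float(margin):>6.2f}")
```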

Probability Trees


Prior and Posterior Probabilities

A prior probability pertains to a main event:

It may be found by judgment (subjective).

Or it may be found by logic or history (objective).

Example: Pr[oil]=.10 (subjective)

A posterior probability is a conditional probability for the main event given a particular experimental result. For example,


Pr[oil | favorable seismic] = .4878 (greater)

Pr[oil | unfavorable seismic] = .0456 (smaller)

A favorable result raises the main event’s probability.

Conditional Result Probabilities

Computing posterior probabilities requires a conditional result probability:

Pr[result | event] (like past “batting average”)

Example: 60% of all known oil fields have yielded favorable seismics, so that

Pr[favorable | oil] = .60 (historical & objective)

and 7% of all dry holes have yielded favorable seismics, so that

Pr[favorable | not oil] = .07 (objective)


Bayes’ Theorem: Computing Posterior Probability

Several laws of probability combine to merge the components to compute the posterior probability:

Pr[E | R] = Pr[R | E] Pr[E] / (Pr[R | E] Pr[E] + Pr[R | not E] Pr[not E])

For example,

Pr[O | F] = .10(.60) / (.10(.60) + (1 - .10)(.07)) = .4878

Computing Posterior Probability

Example, continued:

Pr[O | not F] = .10(1 - .60) / (.10(1 - .60) + (1 - .10)(1 - .07)) = .0456
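Bayes’ theorem as written above translates directly into a small Python function. The name `posterior` and its parameter names are hypothetical; for an unfavorable result, the conditional result probabilities are replaced by their complements, just as in the continued example.

```python
def posterior(prior: float, p_result_given_event: float,
              p_result_given_not_event: float) -> float:
    """Bayes' theorem: Pr[E | R] from Pr[E], Pr[R | E], and Pr[R | not E]."""
    numerator = p_result_given_event * prior
    denominator = numerator + p_result_given_not_event * (1 - prior)
    return numerator / denominator


# Oil example: Pr[O] = .10, Pr[F | O] = .60, Pr[F | not O] = .07
pr_O_given_F = posterior(0.10, 0.60, 0.07)

# Unfavorable result: use Pr[not F | O] = 1 - .60 and Pr[not F | not O] = 1 - .07
pr_O_given_notF = posterior(0.10, 1 - 0.60, 1 - 0.07)

print(f"Pr[O | F] = {pr_O_given_F:.4f}, Pr[O | not F] = {pr_O_given_notF:.4f}")
```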

Posterior probabilities can also be computed from the conditional probability identity:

Pr[Event | Result] = Pr[Event and Result] / Pr[Result]


Computing Posterior Probability from Joint Probability Table

Fill in a joint probability table. The prior Pr[O] = .10 and the conditional result probabilities .60 and .07 are given; apply the multiplication and addition laws to find the remaining entries.

                                          SEISMIC
GEOLOGY                Favorable (F)       Unfavorable (not F)        Marginal Probability
Oil (O)                .10×.60 = .06       .10-.06 = .04              .10
Not Oil (not O)        .90-.837 = .063     (1-.10)×(1-.07) = .837     1-.10 = .90
Marginal Probability   .06+.063 = .123     .04+.837 = .877            1

Computing Posterior Probability from Joint Probability Table

Apply the conditional probability identity, lifting the needed values from the table:

Pr[O | F] = .06/.123 = .4878

Pr[O | not F] = .04/.877 = .0456
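The table route can be sketched in Python as well, mirroring the table’s cell-by-cell arithmetic with exact fractions. The dictionary keys and variable names are illustrative; the result matches the Bayes’ theorem computation.

```python
from fractions import Fraction

prior_oil = Fraction("0.10")      # Pr[O], from judgment
fav_given_oil = Fraction("0.60")  # Pr[F | O], from testing the tester
fav_given_dry = Fraction("0.07")  # Pr[F | not O], from testing the tester

# Fill in the interior cells, following the table's arithmetic
table = {("O", "F"): prior_oil * fav_given_oil}
table[("O", "not F")] = prior_oil - table[("O", "F")]
table[("not O", "not F")] = (1 - prior_oil) * (1 - fav_given_dry)
table[("not O", "F")] = (1 - prior_oil) - table[("not O", "not F")]

# Column margins by the addition law
pr_F = table[("O", "F")] + table[("not O", "F")]
pr_notF = table[("O", "not F")] + table[("not O", "not F")]

# Conditional probability identity
pr_O_given_F = table[("O", "F")] / pr_F
pr_O_given_notF = table[("O", "not F")] / pr_notF
print(f"{float(pr_O_given_F):.4f} {float(pr_O_given_notF):.4f}")
```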

The following are known values:

Prior probabilities (from early judgment).

Conditional result probabilities (from testing the tester).
