Poggio

University of Texas at Austin

Department of Art & Art History Website

Usability Report

Carrie Newsom: School of Information

Natacha Poggio: College of Fine Arts

University of Texas at Austin

April 21, 2005

Table of Contents

EXECUTIVE SUMMARY

INTRODUCTION

S TUDY P URPOSE

S TUDY M ETHODS AND C ONTEXT

S TUDY S UMMARY

P ARTICIPANT P ROFILE

A

CKNOWLEDGMENTS

S TUDY IS NOT

METHODOLOGY

E ND USER TEST METHODOLOGY

P

ARTICIPANTS

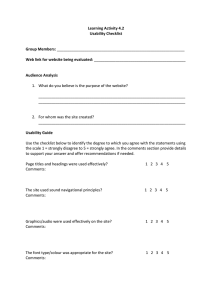

A SSESSMENT T OOLS

T

EST

P

ROCEDURES

P ARTICIPANT O BSERVATIONS

D ATA A NALYSIS

T ESTING F ACILITY

T ASKS

T ASK 1

–

F IRST I MPRESSIONS

T ASK 2 – T ASK S CENARIO : S TUDENT

T

ASK

2 – T

ASK

S

CENARIO

: F

ACULTY

T ASK 3 – F EEDBACK Q UESTIONNAIRE

DATA AND FINDINGS

P ERFORMANCE D ATA

S ATISFACTION D ATA

U

SABILITY

F

INDINGS

G OOD F INDINGS

C

RITICAL

F

INDINGS

M AJOR F INDINGS

M ODERATE F INDINGS

M INOR F INDINGS

NEXT STEPS

CONTACT INFORMATION

3

4

4

4

4

5

5

5

6

10

10

12

13

14

14

14

15

16

16

7

7

8

8

6

6

6

7

8

8

9

9

16

BIBLIOGRAPHIC REFERENCES 16

APPENDICES 18

U SABILITY E VALUATION I NSTRUCTIONS

C ONSENT F ORM

P ARTICIPANT B ACKGROUND Q UESTIONNAIRE

U SABILITY E VALUATION T ASKS

T

ASK

1: F

IRST

I

MPRESSIONS

U SABILITY E VALUATION T ASKS

T

ASK

2: T

ASKS

S

CENARIO

: S

TUDENT

U SABILITY E VALUATION T ASKS

T ASK 2: T ASKS S CENARIO : F ACULTY

U

SABILITY

E

VALUATION

T

ASKS

T ASK 3: S ITE F EEDBACK

Executive Summary

Carrie Newsom and Natacha Poggio conducted a usability test of the University of Texas at Austin Department of Art & Art History web site. Newsom and Poggio designed an end-user usability test in which representative users were tested individually, one at a time. Tests were carried out in Poggio's design studio (which acted as a usability lab) during the week of April 4, 2005. Participants were given representative tasks to perform on the site and were observed as they carried out these tasks. These observations, along with satisfaction data obtained via questionnaires, form the basis of the results.

Four representative users from the College of Fine Arts at the University of Texas at Austin were selected for the study. Three were students and one was a design professor. All participants were very experienced Internet users (between eight and ten years of experience) and indicated that they use the Internet daily. Three of the participants had previously accessed the tested web site, but none had visited it in the last three months.

Overall, participants were pleased with the web site interface and mentioned they would consider applying to the school because of the feeling of belonging and the impression they perceived of the department being part of a larger institution. However, all participants agreed the interface was not as “visually impressive” as they would expect for an art and design department.

Two problems were identified as critical (i.e., the identified issue is so severe that critical data may be lost or the user cannot complete the task). First, the search function of the calendar and news pages does not work. Two participants attempted to use these functions to find the date of a lecture, only to be told there were no results. The only way participants could find the event was by clicking on the exact date of the lecture on the calendar graphic.

Second, the link to further details about the program (labeled “handbook”) is worded in such a way that no users connected the idea of a handbook with that of further details (or a catalog).

See section 5.3 for detailed descriptions of these critical problems and descriptions of the other problems identified.

Who is going to design schools anyway?

The AIGA (American Institute of Graphic Arts) lists 240 four-year college programs in graphic design and 1,300 to 2,500 two-year programs in graphic design.

According to Ed Gold in his book “The Business of Graphic Design,” these schools graduate an average of 50,000 graphic design students each year (Gold, 1995).

Introduction

Study Purpose

The purpose of this study was to test the usability of the UT Art & Art History web site, using representative users.

Study Methods and Context

This study evaluated the UT Art & Art History web site’s usability by employing an “end-user test” method, wherein individual representative users were tested, one at a time. Test participants came to Poggio's Design Studio (which acted as a usability lab), were given some representative tasks to perform, and were observed as they carried out these tasks. Data was collected through the use of a “think aloud” protocol and post-test questionnaires.

Study Summary

Four representative users were tested, one at a time, on the Department of Art & Art History web site. We wished to collect satisfaction data (via a questionnaire) and, most importantly, to identify particular areas of poor usability. Performance data (error rates) was not measured since it was not relevant to the tasks requested.

Three aspects were considered when testing the web site:

Information: Whether the web site content includes all the information that is pertinent to successfully accomplishing a certain task.

Interface: Whether the web site layout affects the (successful) accomplishment of a task.

Interaction: Whether the web site allows efficient navigation for users to accomplish their tasks successfully.

Participants were asked to perform the following tasks to help us understand how effectively the web site was fulfilling each of the three areas above:

1. First Impressions: users were asked to browse the web site freely for four to five minutes and verbalize their first impressions of the site.

2. Task Scenario: participants were given a short situation scenario and asked to find specific information on the web site. They were limited to 20 minutes and were asked to note if any information could not be located by the end of that time. Personalizing the task helped them browse different parts of the web site and get to know the site better.

3. Feedback Questionnaire: participants completed a multiple-choice survey (See Appendix F) after the task scenario. This task allowed the participants to review the site further and gave them an opportunity to voice their opinions regarding their overall perceptions of the site.

Participant Profile

To ensure that the usability test produced valid results, careful consideration was given to recruiting participants who were typical end users of the web site and whose backgrounds and abilities were representative of the site's target audience.

Most typical users of this web site come from a visual and applied arts background and have varying computer expertise. Their education also varies, ranging from undergraduate students to faculty members.

Acknowledgments

We would like to thank the test participants for their help in taking the usability test.

Study Is Not

This study is NOT a baseline test, with crisp, quantitative data like time-on-task. Rather, it is a usability study aimed at finding and fixing major usability problems. It is also NOT a study of asymptotic behavior (that is, performance after users get good at using the web site). Rather, it is a study of how well people perform in their first interactions with the site.

Methodology

End-User Test Methodology

Participants

Four participants from the College of Fine Arts at the University of Texas at Austin were selected for the study. Three participants were students: two were design undergraduates and one was a female studio art graduate. One participant was a male design faculty member. All participants were very experienced Internet users (between eight and ten years of experience) and indicated that they use the Internet daily. The most commonly stated reasons for Internet use among the participants were e-mail, research, and shopping.

This information was obtained from a questionnaire (See Appendix C) that the participants completed after being informed about the test and consenting (See Appendix B) to participate in this study.

Three of the participants had accessed the tested web site previously though none had visited the site in the last three months. All participants had experience using Apple Mac computers, which were used to perform the tests.

Table 4.11: Test Participants Profile

| Participant # | Gender | Age | Education | Internet Experience |
|---|---|---|---|---|
| 1 | M | 36+ | Design faculty | 10 years |
| 2 | F | 25-30 | Studio Art freshman | 8 years |
| 3 | M | 19-24 | Design sophomore | 8 years |
| 4 | F | 19-24 | Design sophomore | 8-9 years |

Assessment Tools

We used the following assessment tools:

1. Introduction to the test process (Appendix A)

2. A consent form (Appendix B)

3. A pre-test [background] questionnaire (Appendix C)

4. An exploratory [first impressions] questionnaire (Appendix D).

5. A task-specific [scenario] questionnaire (Appendix E).

6. A post-test [site feedback] questionnaire (Appendix F).

Test Procedures

Participants were given a general idea of the usability study when invited to participate. They were told they would be looking at the Department of Art & Art History web site and were informed of the test location and the expected length of time to complete the test. All tests were conducted within two days, and no noticeable changes to the site's interface occurred during this period. Moreover, the tests were conducted in one-on-one sessions, because this format provides the best source of feedback (Fleming, 1998).

On the day of the test, participants were welcomed into the design studio and seated at an Apple Mac workstation. Participants were given a user folder containing the test introduction outlining the study (A), the consent form (B), and the background (C), first impressions (D), task scenario (E), and site feedback (F) questionnaires for the web site. All four users tested the same site; the only difference was that the faculty member used a customized task scenario aimed at a similar user experience.

Prior to each individual's test, we cleared Safari's browser cache so that participants could not pick up “phantom clues” about the previous user's actions (Nielsen et al., 2000).

After we explained the test session to each participant, they read and signed the informed consent form, which was modeled after the one used by Nielsen (Nielsen et al., 2000). The consent form contained the user's name and User ID (P#-first initial). All other material used in the test contained the User ID only. In addition, users completed a background questionnaire about their demographic profile and their computer and Internet habits; this questionnaire was also modeled after Jakob Nielsen's (Nielsen et al., 2000).

We answered any questions and then instructed the participants to begin. Each participant read the first task description aloud and then attempted to carry out the task.

At the completion of the last task, the participant completed the post-test questionnaire, providing feedback on their impressions of and experiences with the site.

After the test, we gave each participant a bag of traditional candies from Argentina as a thank-you gift, thanked them, and escorted them out of the studio. The entire session took between 45 and 60 minutes.

Participant Observations

We collected data through direct observation of each participant. One moderator (Poggio) sat beside each participant during the usability testing session and took notes on both the decision path taken to address the task and what the user said and did during the test. Another moderator (Newsom) sat behind the participant and took additional notes. The participants were encouraged to think aloud as they attempted to complete each task. Both moderators asked questions to clarify and better understand the user's experience and thought process while refraining from offering any assistance. However, in cases where the participant was unable to complete a particular task, we tried to point out possible solutions so that we could observe how the participant continued with the actions necessary to accomplish the task.

Data Analysis

Quantitative data was obtained from the background user profile questionnaire and the task-specific scenario survey. Qualitative data was gathered from the open-ended answers of the first impressions survey, the post-test questionnaire, and field notes taken while observing participants. The collected data was synthesized using Excel functions and charts.

Because the surveys used a seven-point scale, a ‘4’ was considered a neutral rating, while a ‘7’ was a strong positive rating and a ‘1’ was a strong negative rating. After all tests, we read through the observation sheets and categorized our notes according to emerging findings, which form the basis of the Findings Section (5.3) of this report.
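The collected ratings were synthesized in Excel. As a rough illustration of the midpoint logic described above, the Python sketch below computes each question's mean rating and labels it relative to the neutral ‘4’. The function names and the input format (a question label mapped to a list of 1-7 ratings) are our own assumptions for illustration; they are not part of the study materials.

```python
from statistics import mean

NEUTRAL = 4                 # midpoint of the 1-7 scale used in the surveys
SCALE_MIN, SCALE_MAX = 1, 7


def classify(rating: float) -> str:
    """Label a single rating or a mean rating relative to the neutral midpoint."""
    if rating > NEUTRAL:
        return "positive"
    if rating < NEUTRAL:
        return "negative"
    return "neutral"


def summarize(responses: dict[str, list[int]]) -> dict[str, tuple[float, str]]:
    """Return (mean rating, label) for each question.

    responses maps a hypothetical question label to the ratings
    given by the participants who answered it.
    """
    summary = {}
    for question, ratings in responses.items():
        if not ratings:
            raise ValueError(f"{question}: no ratings supplied")
        if any(r < SCALE_MIN or r > SCALE_MAX for r in ratings):
            raise ValueError(f"{question}: ratings must be between 1 and 7")
        avg = mean(ratings)
        summary[question] = (avg, classify(avg))
    return summary
```

A negatively worded statement such as Q9 (“Website was annoying to use”) would need its ratings reversed (8 minus the rating) before being labeled this way, since a high score there is unfavorable.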

Testing Facility

We conducted the usability tests in the design studio in the Art building at the University of Texas at Austin (UT) because the room is usually quiet, allowed flexible scheduling of the tests, and is familiar to both the users and Poggio. A powerful Apple Mac G5 computer with a 21” Apple Cinema Display flat-screen monitor (a common computer setup for design students) was provided for the tests. The monitor used a screen resolution of 1600 × 1200.

Participants used Apple's native browser, Safari (v1.2.4), on the Mac OS X Panther (10.3.2) operating system. The computer was connected to the Internet through the university's T1 connection.

Tasks

We gave participants three tasks to perform, which we expected would reflect what they would do if they visited the site on their own (i.e., as prospective applicants). The first task recorded participants' initial reactions to the site. The other two tasks were meant to reveal the site's ease of use. The tasks were as follows:

Task 1 – First Impressions

For the first task participants were told to browse the website freely for four to five minutes and verbalize their impressions of the site. After they were done browsing, participants answered the following questions. (See Appendix D)

1. What was your first impression of this web site?

2. Who is this site for?

3. What does this site offer to the users?

4. Does it appear that this site would have information that you might want or need?

This task was constructed to get a feel for what the site was about and what it had to offer. It also served to capture the participant's immediate first impressions of the site. The participant's comments provided an overall perception of the site's information, interaction, and interface design.

Task 2 – Task Scenario: Student

For the second task participants were given a short situation scenario, and asked to find specific information from the web site. They were limited to 20 minutes and asked to note if any information could not be found in that time limit. Personalizing the task helped them browse different parts of the web site, and get to know the site better. (See Appendix E)

We gave participants the following scenario script:

“Pretend that you are applying for the MFA program in Design. You need to know the details of the application process, deadlines, learn more about the school program and faculty. Locate the following information on the web site then complete the survey below. Please limit yourself to 10 minutes. Be sure to note if you were unable to locate any of the information requested”.

1. Find the admissions deadline for the graduate program in design.

2. Determine if it is possible to request a catalog.

3. Find the graduate-level course descriptions for the design program.

4. Find the name of the contact person for the graduate design program.

5. Find the web galleries and look at three paintings from each of the galleries.

6. Find the link back to the home page.

We observed participants as they navigated the site and encouraged them to think aloud as they proceeded to locate all the information. Even though the site contained the information requested, some users were unsuccessful at performing certain tasks in the time allotted. When the time was up, the participants completed the corresponding survey, rating each task on a Likert-type scale ranging from “Failed” (1 point) to “Very easy” (7 points).

Task 2 – Task Scenario: Faculty

This task was modified for the participant who is a faculty member. (See Appendix E-bis)

We gave the participants the following scenario script:

“Pretend you are a professor of the MFA program in Design. You need to find information about one of your colleagues, review latest artwork posted in the online galleries, check when visiting professor “X” is giving a lecture, find information about the computer facilities and return directly to the home page from the browsed pages. Locate the following information on the web site then complete the survey below. Please limit yourself to 10 minutes. Be sure to note if you were unable to locate any of the information requested”.

1. Find biographical information about professor Dan Olsen.

2. Find the web galleries and look at three artworks from each of the galleries.

3. Find out when John Maeda will give his visiting lecture.

4. Find detailed information about the computer lab facilities.

5. Find the link back to the home page.

Task 3 – Feedback Questionnaire

Participants completed a multiple-choice survey as their last task. (See Appendix F)

This allowed participants to review the site further, letting them evaluate the design and information presented more holistically and giving them an opportunity to voice their opinions regarding their overall perceptions of the site. We designed the survey questions to capture the participants' impressions of the site in terms of information, interface, and interaction design. Furthermore, the questionnaire collected data indicating how much each participant liked the site, how well the site met their need for design information, and their perceptions of the site's main purpose.

Data and Findings

This section summarizes the data collected during the study sessions. In usability studies it is important to address both performance data and satisfaction data, as we are interested not only in whether users can complete their tasks, but also in whether they like the experience of doing so.

Thus, this section consists of three parts:

Performance of the participants while attempting the tasks (task completion percentages),

Satisfaction of the participants as noted in their post-test questionnaire responses, and

Particular usability findings, compiled from both participant comments and researcher observations and notes including: a prioritized list of identified usability problems and recommended improvements.

The problems noted by the researchers are given a criticality rating. The higher the criticality rating, the more significant the problem is to the user's experience or ability to accomplish the task.

Performance Data

After each task in the task scenario, participants rated how easy it was to perform the task (Chart 5.1a & Chart 5.1b). A score of “1” indicates the participant could not complete the task; a score of “7” indicates the participant could very easily complete the task. We did not record time-on-task data because the “find-and-fix” approach of this test allowed for substantial dialog between the test participant and the test moderator. Participants were therefore often talking to the moderator instead of directly completing tasks, making such a measure unreliable at best and meaningless at worst.

Chart 5.1a: Faculty Task Ratings. Faculty task scenario (Participant 1); ease ratings from 1 to 7 for Task 1 (find specific faculty information), Task 2 (find and use the portfolio/web gallery), Task 3 (find details about an event), Task 4 (find details about the computer facilities), and Task 5 (find the homepage link).

Chart 5.1b: Student Task Ratings. Ease ratings from 1 to 7 for participants P2, P3, and P4 on Task 1 (find the admissions deadline), Task 2 (find the catalog request form), Task 3 (find the grad course descriptions), Task 4 (find contact for grad design program), Task 5 (find and use the portfolio/web gallery), and Task 6 (find the homepage link).

Satisfaction Data

Participants answered a post-test questionnaire (Appendix F) at the completion of the last task. The charts below (Chart 5.2a & Chart 5.2b) summarize the participants’ responses to the post-test questionnaire.

Chart 5.2a: Post-Test Feedback (Q1 – Q2). Ratings from 1 to 7 for participants P1 to P4 on Q1 (“How easy was it to get the information?”) and Q2 (“How tired do you feel?”).

Chart 5.2b: Post-Test Feedback (Q3 – Q9). Ratings from 1 to 7 for participants P1 to P4 on Q3 (“Colors are pleasing.”), Q4 (“Pictures are relevant to website's purpose.”), Q5 (“Navigation was enjoyable.”), Q6 (“Easy to figure out what section I was in.”), Q7 (“Website was logically organized.”), Q8 (“Website was informative.”), and Q9 (“Website was annoying to use.”).

Usability Findings

As this study was a “find-and-fix” usability study, these specific findings are the most important findings in the study. For usability studies such as this, it is important to focus on the particular problems identified and to offer recommendations to fix them.

The following findings include a criticality rating as described below.

| Criticality | Definition |
|---|---|
| Critical | The identified issue is so severe that critical data may be lost, the user cannot complete the task, or the user may not want to continue using the application. |
| Major | Users can accomplish the task but only with considerable frustration; non-critical data is lost; the user will have great difficulty circumventing the problem; users can overcome the issue only after they have been shown how to perform the task. |
| Moderate | The user will be able to complete the task in most cases, but will undertake some moderate effort in getting around the problem; the user may need to investigate several links or pathways through the system to determine which option will allow them to accomplish the intended task; users will most likely remember how to perform the task on subsequent encounters with the system. |
| Minor | An irritant, a cosmetic problem, or a typographical error. |

The findings that follow are divided into Critical, Major, Moderate, and Minor problems. Each finding states which aspect of the site it concerns (information, interface, or interaction) and includes a description and an associated recommendation. “The reason for prioritizing problems by criticality is to enable the [potential] development team to structure and prioritize the work that is required to improve the product” (Rubin, 1994). This section has been designed so that readers can get to the facts quickly.
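Rubin's rationale maps directly onto a sorted work queue. The Python sketch below is one hypothetical way a development team might track these findings: the `Criticality` enum mirrors the four levels in the criticality definitions, and the finding titles come from this report, but the data structure and the `work_queue` function are our own assumptions rather than anything the department uses.

```python
from enum import IntEnum


class Criticality(IntEnum):
    """The report's four criticality levels; a lower value means fix sooner."""
    CRITICAL = 1
    MAJOR = 2
    MODERATE = 3
    MINOR = 4


def work_queue(findings: list[tuple[str, Criticality]]) -> list[tuple[str, Criticality]]:
    """Order findings from most to least critical.

    sorted() is stable, so findings with the same criticality keep
    the order in which they were listed.
    """
    return sorted(findings, key=lambda finding: finding[1])


# A few finding titles from this report, deliberately listed out of order.
findings = [
    ("Pictures", Criticality.MINOR),
    ("Calendar and News search", Criticality.CRITICAL),
    ("Home page link", Criticality.MAJOR),
    ("Online portfolio/galleries", Criticality.MODERATE),
    ("Link to catalog", Criticality.CRITICAL),
]

for title, level in work_queue(findings):
    print(f"{level.name:<9} {title}")
```

Relying on a stable sort keeps the report's own numbering (Critical 1 before Critical 2, and so on) intact within each level.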

Good Findings

Finding: In general, users were pleased with the web site interface, and they found the images relevant to the site's purpose.

Finding: Student users mentioned they would consider applying to the school because of the feeling of belonging and the impression they perceived of the department being part of a larger institution. The hierarchy of the department within UT was made evident.

Critical Findings

Finding Critical 1: Calendar and News search

Area: Information

Description: The calendar and news searches do not work. In two cases, participants tried to find a lecture by searching for the name of the person giving the lecture, only to be told there were no results. The only way the participants could find the event was by clicking on the exact date of the lecture on the calendar graphic. However, even when they used this option, participants were confused because the title of the lecture was displayed without any indication of the lecturer's name.

Recommendation: Implement a more robust search function that retrieves events by lecturer name, lecture title, and date. In addition, display the lecturer's name in search results.
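A minimal sketch of the recommended search behavior, in Python, is shown below. The `Event` record, its fields, and the function names are hypothetical; we did not examine how the department's calendar actually stores events. The search matches a case-insensitive substring of the lecturer's name or the lecture title, or an exact date written as YYYY-MM-DD, and every formatted result includes the lecturer's name.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Event:
    """Hypothetical calendar record; the site's actual data model is unknown."""
    title: str
    lecturer: str
    day: date


def search_events(events: list[Event], query: str) -> list[Event]:
    """Return events whose lecturer or title contains the query
    (case-insensitive), or whose date equals the query as YYYY-MM-DD."""
    q = query.strip().lower()
    if not q:
        return []
    return [
        ev for ev in events
        if q in ev.lecturer.lower()
        or q in ev.title.lower()
        or q == ev.day.isoformat()
    ]


def format_result(ev: Event) -> str:
    """Show the lecturer's name next to the title, as recommended above."""
    return f"{ev.day.isoformat()}: {ev.title} ({ev.lecturer})"
```

In practice this matching would more likely be handled by the site's database or content management system; the sketch only shows the intended behavior, namely that the lecturer's name, a word from the title, or the date all find the event, and that each result names the lecturer.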

Finding Critical 2: Link to catalog (or further details about the program)

Area: Information

Description: None of the participants connected the PDF link labeled “handbook” with the division's graduate catalog. One participant did find a way to get more information about the program through the Information Request Form link.

Recommendation: Rename the current “handbook” link to “division catalog” so users can get more information about the department on their own.

Major Findings

Finding Major 1: Information structure and crossover

Area: Interaction

Description: The admissions information and course descriptions are first broken down by department and then by undergraduate/graduate level. Also, much of the information in the admissions section of the site is pertinent to current students.

Recommendation: First divide admissions information and course descriptions by undergraduate/graduate level and then by department. This helps students who wish to see all graduate (or undergraduate) level classes offered in the Art and Art History Department. Also, in the academics section of the site (where most current students look for information), include cross-references to information that is currently housed only in the admissions section but might be useful to current students. These cross-references include: advising office, course schedule, course descriptions, office of the registrar, and financial aid.

Finding Major 2: Text length and headings

Area: Interface

Description: Many participants complained about having to read through long sections of text to find pertinent information. Although some headings are present, they are often not visually distinct from the surrounding text.

Recommendation: Trim paragraphs down to only the most pertinent information and make sure they are separated by white space so it is easy for users to differentiate paragraphs. In addition, include headings (and make them highly visible) so users can easily scan pages to find the information they are searching for.

Finding Major 3: Admission deadline

Area: Information

Description: All student participants scanned the “Design division, admission requirements” page quickly, trying to find the deadline to apply to graduate school. Only one saw it while scrolling down; the other two had to review the page a few times before they found the deadline at the beginning of one of the paragraphs.

Recommendation: Make the admission deadline information more evident by adding color, using a larger font, and/or placing it inside a separate “highlight” box.

Finding Major 4: Home page link

Area: Interaction/Interface

Description: All participants had to guess the location of the Art & Art History home page link (or found it accidentally by mousing over it) because the link is embedded within a picture.

Recommendation: Make the home page link more evident.

Moderate Findings

Finding Moderate 1: Online portfolio/galleries

Area: Information/Interaction

Description: Participants were frustrated by the lack of contextual information presented in the online galleries, by the fact that a new window opened for each work of art viewed, and by the lack of zoom and forward/back features.

Recommendation: Include the artist, medium, and size for each work in the online portfolio. In addition, if pop-up windows must be used, load each new work of art into the window that is already open, include a “close window” link, and include links for navigating forward and backward through the gallery. Also, implement a zoom feature so users can take a close-up look at works.

Finding Moderate 2: Information

Area: Information

Description: Several pages had out-of-date information (e.g., the Design Division Computer Lab page was last updated in 2003).

Recommendation: Create a system so that information is routinely checked for accuracy.
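One lightweight form such a system could take is a periodic report listing pages that have not been updated recently, so that someone can review them. The Python sketch below assumes the department can export each page's last-updated date; the `stale_pages` function, its input format, and the one-year default threshold are hypothetical choices, not recommendations drawn from the study data.

```python
from datetime import date, timedelta


def stale_pages(last_updated: dict[str, date], today: date,
                max_age_days: int = 365) -> list[str]:
    """Return the names of pages not updated within max_age_days, oldest first."""
    cutoff = today - timedelta(days=max_age_days)
    old = [(updated, page) for page, updated in last_updated.items()
           if updated < cutoff]
    # Sorting (date, name) pairs puts the longest-neglected pages first.
    return [page for _, page in sorted(old)]
```

A report like this only flags candidates for review; someone still has to check whether an old page is actually inaccurate or simply unchanged.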

Minor Findings

Finding Minor 1: Pictures

Area: Interface

Description: All participants thought the photographs used on the site were relevant and gave a good feel for the program by showing spaces and people they could identify with. However, participants commented that the photos looked “old”, “could be more eye-catching”, and “didn't look professional”.

Recommendation: Retake the photographs on the site so they provide a more polished feel.

Finding Minor 2: Links to faculty works

Area: Interaction

Description: Some faculty biographical pages do not include links to the faculty members' works.

Recommendation: Include a link to faculty works from each biographical page. If a faculty member has a personal web site, provide a link to it, and also include a few samples of his or her artwork within the department's web site.

Next Steps

The next step is to present these findings to the staff responsible for the Art and Art History web site so they can review them and decide when and how each of the identified problems should be addressed. We hope the department will contact us should they need more information or help in redesigning the web site, and possibly to perform additional usability tests.

Contact Information

If you have any questions about anything contained in this report, please contact:

Carrie Newsom newsomz@mail.utexas.edu

Natacha Poggio natacha@mail.utexas.edu

References

Fleming, J. (1998). Web Navigation: Designing the User Experience. Sebastopol, CA: O’Reilly & Associates, Inc.

Gold, E. (1995). The Business of Graphic Design. Watson-Guptill Publications.

Nielsen, J., Snyder, C., Molich, R., & Farrell, S. (2000). E-commerce User Experience: Methodology. Nielsen Norman Group. http://www.nngroup.com/reports/ecommerce

Rubin, J. (1994). Handbook of Usability Testing. John Wiley & Sons.

Appendices

1- Introduction to the test process (Appendix A)

2- A consent form (Appendix B)

3- A pre-test [background] questionnaire (Appendix C)

4- An exploratory [first impressions] questionnaire (Appendix D).

5- A task-specific [scenario] questionnaire (Appendix E).

6- A post-test [site feedback] questionnaire (Appendix F).

Usability Evaluation Instructions

Appendix A

User ID:

Date:

Introduction

Thank you for taking the time to help us. We are conducting a usability evaluation of the UT Department of Art & Art History web site for a class project. Usability evaluations seek to determine whether people can easily and quickly use a web site to accomplish their own tasks.

Evaluations are designed to solicit feedback from participants, focusing on areas of concern. An evaluation typically involves several participants, each of whom represents a typical user. Once all evaluation sessions are completed, we compile the feedback received from each participant, along with our notes. We then prepare a final analysis report.

This evaluation is intended to measure how easy it is to use the Department of Art & Art History web site. To evaluate this site, you will be asked to perform 3 to 4 tasks following an evaluation scenario. In the beginning, you will have a few minutes to get used to the site; after that, you will be asked to perform various tasks, some more specific than others. Remember that you are evaluating the web site's information, interface, and interaction. You are not being evaluated in any way. Take as much time as you need. It does not matter whether you complete any of the tasks. We expect the test to take about one hour.

During the test, the study administrator will be sitting next to you to observe how you use the site. You will be encouraged to work without guidance; nevertheless, you may ask a question when anything is unclear. Since the purpose is to understand what makes the site easy or difficult to use, we would like to know what you are thinking while you are browsing it. So during the test we will ask you to verbalize your thoughts (“think aloud”) or say whatever comes to your mind, such as something that frustrates you, something that you like, or something that surprises you. Please think aloud as much as possible, because the more you speak, the more data we can collect.

As a token of our appreciation for your voluntary participation in this study, we would like to offer you some candy to enjoy.

In the report of this test, we will not use your real name. All information we collect concerning your participation in the session is confidential. Further, all results will be discussed in terms of the usability of the site rather than your skills.

Consent Form

Appendix B

User ID:

Date:

Study Administrator is:

Participant is:

Understanding Your Participation

You have been asked to participate in a usability study about how websites meet your needs. Our purpose in conducting this study is to understand what makes some websites easier to use than others. The results of this study will NOT be published in professional reports. In the session, we’ll ask you to visit some websites and look for various things.

Please keep in mind that this is a test of websites; we are not testing you. We may audiotape all or part of the session for evaluation purposes, but we will not release the recording to anyone. All information we collect concerning your participation in the session is confidential.

Participation is voluntary. To the best of our knowledge, there are no physical or psychological risks associated with participating in this study.

During the session, the study administrator will assist you and answer any questions. You may take short breaks as needed and may withdraw from this evaluation at any time.

If you have any questions, you may ask now or at any time during the test.

Statement of Informed Consent

I have read the description of the study and I am aware of my rights as a participant. The person conducting this research study has assured me that my identity will remain anonymous and confidential. I agree to participate in the study.

Signature _______________________________

Date ___________________________________

Participant Background Questionnaire

Appendix C

User ID:

Date:

UT Art & Art History website

Thank you very much for participating in this research study. Before you start your usability testing, please answer the following questions. This information will be kept strictly confidential. Please circle the appropriate answer.

Participant Information

1- Your age: 18 or younger 19-24 25-30 31-35 36 or older

2- Gender: Male Female

3- Please state your current occupation: student faculty staff other

4- If you are a student, please check all that apply:

High school   college   graduate school (Master's student / Ph.D. student)

5- Please state your field of study/research:

Computer Experience

1- About how long have you been using the Internet?

2- How would you define your computer proficiency? Low medium high

3- How often do you access the Internet? Daily   Weekly   Less

4- What do you use the Internet for? (Choose all that apply)

Email   Research   Shopping   Other, please specify: ____________________

Usability Evaluation Tasks

Appendix D

User ID:

Date:

UT Art & Art History website

This is an exploratory exercise. Please take 2-3 minutes to just browse this web site.

Please jot down your first impressions of this web site in the space provided below. Please be honest in your responses; your candid opinions best serve the purpose of this study.

Task 1: First Impressions

1. What was your first impression of this web site?

____________________________________________________________________________

____________________________________________________________________________

____________________________________________________________________________

____________________________________________________________________________

____________________________________________________________________________

____________________________________________________________________________

2. Who is this site for?

____________________________________________________________________________

____________________________________________________________________________

____________________________________________________________________________

____________________________________________________________________________

____________________________________________________________________________

____________________________________________________________________________

3. What does this site offer to the users?

____________________________________________________________________________

____________________________________________________________________________

____________________________________________________________________________

____________________________________________________________________________

____________________________________________________________________________

____________________________________________________________________________

4. Does it appear that this site would have information that you might want or need?

____________________________________________________________________________

____________________________________________________________________________

____________________________________________________________________________

____________________________________________________________________________

____________________________________________________________________________

Usability Evaluation Tasks

Appendix E

User ID:

Date:

UT Art & Art History website

Task 2: Tasks Scenario: Student

Pretend that you are applying for the MFA program in Design. You need to learn the details of the application process and deadlines, and to learn more about the program and its faculty. Locate the following information on the web site, then complete the survey below. Please limit yourself to 10 minutes per site. Be sure to note if you were unable to locate any of the information requested.

Find the admissions deadline for the graduate program in design.

Determine if it is possible to request a catalog.

Find the graduate-level course descriptions for the design program.

Find the name of the contact person for the graduate design program.

Please find the web galleries and look at three paintings from each of the galleries.

Find the link back to the home page.

Rating: Not at all (1) – hard (2) – unclear (3) – not so easy (4) – manageable (5) – easy (6) – very easy (7)

1- How easy is it to find the admissions deadline for the graduate design program?

1 2 3 4 5 6 7

Not at all Very easy

2- How easy is it to find the catalog request form?

1 2 3 4 5 6 7

Not at all Very easy

3- How easy is it to find the graduate course descriptions for the design program?

1 2 3 4 5 6 7

Not at all Very easy

4- How easy is it to determine who the contact person for the graduate design program is?

1 2 3 4 5 6 7

Not at all Very easy

5- How easy is it to find and use the portfolio/web gallery?

1 2 3 4 5 6 7

Not at all Very easy

6- How easy was it to find the homepage link on this website?

1 2 3 4 5 6 7

Not at all Very easy

Usability Evaluation Tasks

Appendix F

User ID:

Date:

UT Art & Art History website

Task 2: Tasks Scenario: Faculty

Pretend that you are a professor in the MFA program in Design. You need to find information about one of your colleagues, review the latest artwork posted in the online galleries, check when visiting professor “X” is giving a lecture, find information about the computer facilities, and return directly to the home page from the pages you have browsed. Locate the following information on the web site, then complete the survey below. Please limit yourself to 10 minutes per site. Be sure to note if you were unable to locate any of the information requested.

Find biographical information about Professor Dan Olsen.

Please find the web galleries and look at three paintings from each of the galleries.

Find out when John Maeda will give his visiting lecture.

Find the link back to the home page.

Find detailed information about the computer lab facilities.

Rating: Not at all (1) – hard (2) – unclear (3) – not so easy (4) – manageable (5) – easy (6) – very easy (7)

1- How easy was it to get information about faculty from a specific area of expertise?

1 2 3 4 5 6 7

Not at all Very easy

2- How easy is it to use the portfolio/web gallery?

1 2 3 4 5 6 7

Not at all Very easy

3- How easy is it to use the calendar webpage?

1 2 3 4 5 6 7

Not at all Very easy

4- How easy was it to find detailed information about the computer lab facilities?

1 2 3 4 5 6 7

Not at all Very easy

5- How easy was it to find the homepage link on this website?

1 2 3 4 5 6 7

Not at all Very easy

Usability Evaluation Tasks

Appendix G

User ID:

Date:

UT Art & Art History website

Task 3: Site Feedback

After browsing this website for Tasks 1 & 2, please rate your satisfaction with the following aspects of the site you have just finished working with by circling the appropriate number.

1. How easy was it to get the information you were looking for?

1 2 3 4 5 6 7

Not at all Very easy

2. After working with this site, how tired do you feel?

1 2 3 4 5 6 7

Not at all Very tired

3. The colors on this website are pleasing.

1 2 3 4 5 6 7

Strongly disagree Strongly agree

4. The pictures on this website are relevant to this website’s purpose.

1 2 3 4 5 6 7

Strongly disagree Strongly agree

5. Navigation throughout this site was enjoyable.

1 2 3 4 5 6 7

Strongly disagree Strongly agree

6. It was easy to figure out what section of the site I was located in.

1 2 3 4 5 6 7

Strongly disagree Strongly agree

7. This website was logically organized.

1 2 3 4 5 6 7

Strongly disagree Strongly agree

8. This website was informative.

1 2 3 4 5 6 7

Strongly disagree Strongly agree

9. This website was annoying to use.

1 2 3 4 5 6 7

Strongly disagree Strongly agree