Language Independent Methods of Clustering Similar Contexts (with applications)


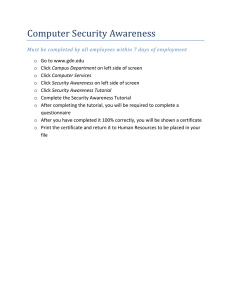

Ted Pedersen
University of Minnesota, Duluth
tpederse@d.umn.edu
http://www.d.umn.edu/~tpederse/SCTutorial.html
January 6, 2007
IJCAI-2007 Tutorial

Language Independent Methods
• Do not utilize syntactic information
  – No parsers, part-of-speech taggers, etc. required
• Do not utilize dictionaries or other manually created lexical resources
• Based on lexical features selected from corpora
  – Assumption: word segmentation can be done by looking for white space between strings
• No manually annotated data; methods are completely unsupervised in the strictest sense

A Note on Tokenization
• Default tokenization is white-space-separated strings
• Can be redefined using regular expressions
  – e.g., character n-grams (4-grams)
  – any other valid regular expression

Clustering Similar Contexts
• A context is a short unit of text
  – often a phrase to a paragraph in length, although it can be longer
• Input: N contexts
• Output: K clusters
  – where each context in a cluster is more similar to the other members of its cluster than to the contexts found in other clusters

Applications
• Headed contexts (focus on a target word)
  – Name Discrimination
  – Word Sense Discrimination
• Headless contexts
  – Email Organization
  – Document Clustering
  – Paraphrase Identification
• Clustering Sets of Related Words

Tutorial Outline
• Identifying Lexical Features
• First Order Context Representation
  – native SC: context as vector of features
• Second Order Context Representation
  – LSA: context as average of vectors of contexts
  – native SC: context as average of vectors of features
• Dimensionality Reduction
• Clustering
• Hands-On Experience

SenseClusters
• A free package for clustering contexts
  – http://senseclusters.sourceforge.net
  – SenseClusters Live! (Knoppix CD)
• Perl components that integrate other tools
  – Ngram Statistics Package
  – CLUTO
  – SVDPACKC
  – PDL

Many thanks…
• Amruta Purandare (M.S., 2004)
  – Now PhD student in Intelligent Systems at the University of Pittsburgh
  – http://www.cs.pitt.edu/~amruta/
• Anagha Kulkarni (M.S., 2006)
  – Now PhD student at the Language Technologies Institute at Carnegie Mellon University
  – http://www.cs.cmu.edu/~anaghak/
• Ted, Amruta, and Anagha were supported by the National Science Foundation (USA) via CAREER award #0092784

Background and Motivations

Headed and Headless Contexts
• A headed context includes a target word
  – Our goal is to cluster the target word based on the surrounding contexts
  – The focus is on the target word and making distinctions among word meanings
• A headless context has no target word
  – Our goal is to cluster the contexts based on their similarity to each other
  – The focus is on the context as a whole and making topic-level distinctions

Headed Contexts (input)
• I can hear the ocean in that shell.
• My operating system shell is bash.
• The shells on the shore are lovely.
• The shell command line is flexible.
• An oyster shell is very hard and black.

Headed Contexts (output)
• Cluster 1:
  – My operating system shell is bash.
  – The shell command line is flexible.
• Cluster 2:
  – The shells on the shore are lovely.
  – An oyster shell is very hard and black.
  – I can hear the ocean in that shell.

Headless Contexts (input)
• The new version of Linux is more stable and better support for cameras.
• My Chevy Malibu has had some front end troubles.
• Osborne made one of the first personal computers.
• The brakes went out, and the car flew into the house.
• With the price of gasoline, I think I’ll be taking the bus more often!

Headless Contexts (output)
• Cluster 1:
  – The new version of Linux is more stable and better support for cameras.
  – Osborne made one of the first personal computers.
• Cluster 2:
  – My Chevy Malibu has had some front-end troubles.
  – The brakes went out, and the car flew into the house.
  – With the price of gasoline, I think I’ll be taking the bus more often!

Web Search as Application
• Snippets returned via Web search are headed contexts, since they include the search term
  – Name ambiguity is a problem with Web search: results mix different entities
  – Group results into clusters, where each cluster is associated with a unique underlying entity
• Pages found by following search results can also be treated as headless contexts

Name Discrimination

[Figures: George Millers!]

Email Foldering as Application
• Email (public or private) is made up of headless contexts
  – Short, usually focused…
• Cluster similar email messages together
  – Automatic email foldering
  – Take all messages from a sent-mail file or inbox and organize them into categories

Clustering News as Application
• News articles are headless contexts
  – Entire article or first paragraph
  – Short, usually focused
• Cluster similar articles together; this can also be applied to blog entries and other shorter units of text

What is it to be “similar”?
• You shall know a word by the company it keeps
  – Firth, 1957 (Studies in Linguistic Analysis)
• Meanings of words are (largely) determined by their distributional patterns (Distributional Hypothesis)
  – Harris, 1968 (Mathematical Structures of Language)
• Words that occur in similar contexts will have similar meanings (Strong Contextual Hypothesis)
  – Miller and Charles, 1991 (Language and Cognitive Processes)
• Various extensions…
  – Similar contexts will have similar meanings, etc.
  – Names that occur in similar contexts will refer to the same underlying person, etc.

General Methodology
• Represent the contexts to be clustered using first or second order feature vectors
  – Lexical features
• Reduce dimensionality to make vectors more tractable and/or understandable (optional)
  – Singular value decomposition
• Cluster the context vectors
  – Find the number of clusters
  – Label the clusters
• Evaluate and/or use the contexts!

Identifying Lexical Features: Measures of Association and Tests of Significance

What are features?
• Features are the salient characteristics of the contexts to be clustered
• Each context is represented as a vector, where the dimensions are associated with features
• Contexts that include many of the same features will be similar to each other

Feature Selection Data
• The contexts to cluster (evaluation/test data)
  – We may need to cluster all available data, and not hold out any for a separate feature identification step
• A separate larger corpus (training data), especially if we cluster a very small number of contexts
  – local training: corpus made up of headed contexts
  – global training: corpus made up of headless contexts
• Feature selection data may be either the evaluation/test data or a separate held-out set of training data

Feature Selection Data
• Test/evaluation data: contexts to be clustered
  – Assume that the feature selection data is the test data, unless otherwise indicated
• Training data: a separate corpus of held-out feature selection data (that will not be clustered)
  – may be needed if you have a small number of contexts to cluster (e.g., web search results)
  – This sense of “training” is due to Schütze (1998)
    • does not mean labeled
    • simply an extra quantity of text

Lexical Features
• Unigram
  – a single word that occurs more than X times in the feature selection data and is not in the stop list
• Stop list
  – words that will not be used in features
  – usually non-content words like the, and, or, it …
  – may be compiled manually
  – may be derived automatically from a corpus of text
    • any word that occurs in a relatively large percentage (>10-20%) of contexts may be considered a stop word

Lexical Features
• Bigram
  – an ordered pair of words that may be consecutive, or have intervening words that are ignored
  – the pair occurs together more than X times and/or more often than expected by chance in the feature selection data
  – neither word in the pair may be in the stop list
• Co-occurrence
  – an unordered bigram
• Target Co-occurrence
  – a co-occurrence where one of the words is the target

Bigrams
• Window Size of 2
  – baseball bat, fine wine, apple orchard, bill clinton
• Window Size of 3
  – house of representatives, bottle of wine
• Window Size of 4
  – president of the republic, whispering in the wind
• Selected using a small window size (2-4 words)
• Objective is to capture a regular or localized pattern between two words (collocation?)

Co-occurrences
• president law
  – the president signed a bill into law today
  – that law is unjust, said the president
  – the president feels that the law was properly applied
• Usually selected using a larger window (7-10 words) of context, hoping to capture pairs of related words rather than collocations

Bigrams and Co-occurrences
• Pairs of words tend to be much less ambiguous than unigrams
  – “bank” versus “river bank” and “bank card”
  – “dot” versus “dot com” and “dot product”
• Trigrams and beyond occur much less frequently (n-gram frequencies are very Zipfian)
• Unigrams occur more frequently, but are noisy

“occur together more often than expected by chance…”
• Observed frequencies for two words occurring together and alone are stored in a 2x2 matrix
• Expected values are calculated from the observed values, based on the model of independence
  – How often would you expect these words to occur together if they only occurred together by chance?
  – If two words occur “significantly” more often than the expected value, then the words do not occur together by chance.
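The test described above can be sketched in a few lines of Python: count ordered word pairs inside a window, build the 2x2 table for one pair, compute each expected value as row total × column total / N, and score the table with G^2 and X^2. This is a minimal sketch, not the Ngram Statistics Package: the function names are hypothetical, lowercasing is an added assumption, and the window convention (window=2 for adjacent words, window=3 allowing one intervening word) is assumed from the bigram examples rather than taken from NSP itself.

```python
import math
import re
from collections import Counter


def tokenize(text, pattern=r"\S+"):
    """Split text into tokens; the default pattern keeps white-space-separated strings.

    Lowercasing is an assumption made for this sketch.
    """
    return re.findall(pattern, text.lower())


def window_bigrams(tokens, window=2):
    """Count ordered pairs (w1, w2) where w2 falls within `window` positions of w1.

    window=2 pairs adjacent words only; window=3 also allows one intervening word.
    """
    pairs = Counter()
    for i, w1 in enumerate(tokens):
        for j in range(i + 1, min(i + window, len(tokens))):
            pairs[(w1, tokens[j])] += 1
    return pairs


def contingency(pairs, w1, w2):
    """Build the 2x2 table [[n11, n12], [n21, n22]] for the bigram (w1, w2)."""
    n11 = pairs[(w1, w2)]
    n1p = sum(c for (first, _), c in pairs.items() if first == w1)    # w1 first
    np1 = sum(c for (_, second), c in pairs.items() if second == w2)  # w2 second
    npp = sum(pairs.values())                                          # all pairs
    return [[n11, n1p - n11], [np1 - n11, npp - n1p - np1 + n11]]


def expected_counts(table):
    """Expected cell values under independence: row total * column total / N."""
    rows = [sum(r) for r in table]
    cols = [sum(c) for c in zip(*table)]
    n = sum(rows)
    return [[rows[i] * cols[j] / n for j in range(2)] for i in range(2)]


def g2_and_x2(table):
    """Return (G^2, X^2) for a 2x2 table of observed counts."""
    expected = expected_counts(table)
    g2 = x2 = 0.0
    for i in range(2):
        for j in range(2):
            o, e = table[i][j], expected[i][j]
            if e == 0:   # empty row or column: the observed count is 0 as well
                continue
            if o > 0:    # treat 0 * log(0) as 0
                g2 += o * math.log(o / e)
            x2 += (o - e) ** 2 / e
    return 2 * g2, x2


if __name__ == "__main__":
    # (house, representatives) has one intervening word, so it needs window=3.
    tokens = tokenize("House of Representatives")
    print(window_bigrams(tokens, window=3)[("house", "representatives")])  # 1

    # Rows: Artificial / not Artificial; columns: Intelligence / not Intelligence.
    table = [[100, 300], [200, 99_400]]
    print(expected_counts(table))  # [[1.2, 398.8], [298.8, 99301.2]]
    g2, x2 = g2_and_x2(table)
    print(round(g2, 2), round(x2, 2))  # 750.88 8191.78
```

The `__main__` block scores the Artificial/Intelligence counts used in the contingency-table example; the natural logarithm and the factor of 2 in G^2 are what make the result land at 750.88.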

2x2 Contingency Table

|                | Intelligence | not Intelligence | Total   |
|----------------|--------------|------------------|---------|
| Artificial     | 100          |                  | 400     |
| not Artificial |              |                  |         |
| Total          | 300          |                  | 100,000 |

2x2 Contingency Table

|                | Intelligence | not Intelligence | Total   |
|----------------|--------------|------------------|---------|
| Artificial     | 100          | 300              | 400     |
| not Artificial | 200          | 99,400           | 99,600  |
| Total          | 300          | 99,700           | 100,000 |

2x2 Contingency Table (observed counts, with expected counts under independence in parentheses)

|                | Intelligence  | not Intelligence    | Total   |
|----------------|---------------|---------------------|---------|
| Artificial     | 100.0 (1.2)   | 300.0 (398.8)       | 400     |
| not Artificial | 200.0 (298.8) | 99,400.0 (99,301.2) | 99,600  |
| Total          | 300           | 99,700              | 100,000 |

Measures of Association

$$G^2 = 2 \sum_{i,j=1}^{2} \mathrm{observed}(w_i, w_j) \log \frac{\mathrm{observed}(w_i, w_j)}{\mathrm{expected}(w_i, w_j)}$$

$$X^2 = \sum_{i,j=1}^{2} \frac{\left[\mathrm{observed}(w_i, w_j) - \mathrm{expected}(w_i, w_j)\right]^2}{\mathrm{expected}(w_i, w_j)}$$

Measures of Association
• For the Artificial/Intelligence table above: G^2 = 750.88 and X^2 = 8191.78

Interpreting the Scores…
• G^2 and X^2 are asymptotically approximated by the chi-squared distribution…
• This means… if you fix the marginal totals of a table, randomly generate internal cell values in the table, calculate the G^2 or X^2 scores for each resulting table, and plot the distribution of the scores, you *should* get …

[Figure: plot of the resulting score distribution]

Interpreting the Scores…
• Values above a certain level of significance can be considered grounds for rejecting the null hypothesis
  – H0: the words in the bigram are independent
  – For a 2x2 table (one degree of freedom), a score above 3.84 means the null hypothesis can be rejected with 95% confidence

Measures of Association
• There are numerous measures of association that can be used to identify bigram and co-occurrence features
• Many of these are supported in the Ngram Statistics Package (NSP)
  – http://www.d.umn.edu/~tpederse/nsp.html
• NSP is integrated into SenseClusters

Measures Supported in NSP
• Log-likelihood Ratio (ll)
• True Mutual Information (tmi)
• Pointwise Mutual Information (pmi)
• Pearson’s Chi-squared Test (x2)
• Phi Coefficient (phi)
• Fisher’s Exact Test (leftFisher)
• T-test (tscore)
• Dice Coefficient (dice)
• Odds Ratio (odds)

Summary
• Identify lexical features based on frequency counts or measures of association
  – either in the data to be clustered or in a separate set of feature selection data
  – Language independent
• Unigrams are usually selected only by frequency
  – Remember, there is no labeled data from which to learn, so unigrams are somewhat less effective as features than in the supervised case
• Bigrams and co-occurrences can also be selected by frequency or, better yet, by measures of association
  – Bigrams and co-occurrences need not be consecutive
  – Stop words should be eliminated
  – Frequency thresholds are helpful (e.g., a unigram/bigram that occurs once may be too rare to be useful)

References
• Moore, 2004 (EMNLP): follow-up to Dunning and Pedersen on log-likelihood and exact tests
  http://acl.ldc.upenn.edu/acl2004/emnlp/pdf/Moore.pdf
• Pedersen, Kayaalp, and Bruce, 1996 (AAAI): explanation of the exact conditional test, a stochastic simulation of exact tests
  http://www.d.umn.edu/~tpederse/Pubs/aaai96-cmpl.pdf
• Pedersen, 1996 (SCSUG): explanation of exact tests for collocation identification, and comparison to log-likelihood
  http://arxiv.org/abs/cmp-lg/9608010
• Dunning, 1993 (Computational Linguistics): introduces the log-likelihood ratio for collocation identification
  http://acl.ldc.upenn.edu/J/J93/J93-1003.pdf

Context Representations: First and Second Order Methods

Once features are selected…
• We will have a set of unigrams, bigrams, co-occurrences, or target co-occurrences that we believe are somehow interesting and useful
  – We also have any frequency counts and measure of association scores that were used in their selection
• Convert the contexts to be clustered into a vector representation based on these features

Possible Representations
• First Order Features
  – Native SenseClusters
    • each context is represented by a vector of features
• Second Order Co-Occurrence Features
  – Native SenseClusters
    • each word in a context is replaced by a vector of co-occurring words, and these vectors are averaged together
  – Latent Semantic Analysis
    • each feature in a context is replaced by a vector of the contexts in which it occurs, and these vectors are averaged together

First Order Representation: Native SenseClusters
• Context by Feature
• Each context is represented by a vector with M dimensions, each of which indicates whether a particular feature occurred in that context
  – value may be binary or a frequency count
  – the bag-of-words representation of documents is first order: each document is represented by a vector showing the words that occur in it

Contexts
• x1: there was an island curse of black magic cast by that voodoo child
• x2: harold a known voodoo child was gifted in the arts of black magic
• x3: despite their military might it was a serious error to attack
• x4: military might is no defense against a voodoo child or an island curse

Unigram Features

| Unigram | Frequency |
|---------|-----------|
| island  | 1000      |
| black   | 700       |
| curse   | 500       |
| magic   | 400       |
| child   | 200       |

• (assume these are frequency counts obtained from feature selection data…)

First Order Vectors of Unigrams

|    | island | black | curse | magic | child |
|----|--------|-------|-------|-------|-------|
| x1 | 1      | 1     | 1     | 1     | 1     |
| x2 | 0      | 1     | 0     | 1     | 1     |
| x3 | 0      | 0     | 0     | 0     | 0     |
| x4 | 1      | 0     | 1     | 0     | 1     |

Bigram Feature Set

| Bigram         | Score |
|----------------|-------|
| island curse   | 189.2 |
| black magic    | 123.5 |
| voodoo child   | 120.0 |
| military might | 100.3 |
| serious error  | 89.2  |
| island child   | 73.2  |
| voodoo might   | 69.4  |
| military error | 54.9  |
| black child    | 43.2  |
| serious curse  | 21.2  |

• (assume these are log-likelihood scores from feature selection data)

First Order Vectors of Bigrams

|    | black magic | island curse | military might | serious error | voodoo child |
|----|-------------|--------------|----------------|---------------|--------------|
| x1 | 1           | 1            | 0              | 0             | 1            |
| x2 | 1           | 0            | 0              | 0             | 1            |
| x3 | 0           | 0            | 1              | 1             | 0            |
| x4 | 0           | 1            | 1              | 0             | 1            |

First Order Vectors
• Values may be binary or frequency counts
• Forms a context by feature matrix
• May optionally be smoothed/reduced with Singular Value Decomposition
  – More on that later…
• The contexts are ready for clustering…
  – More on that later…

Second Order Features
• First order features directly encode the occurrence of a feature in a context
  – Native SenseClusters: each feature is represented by a binary value or frequency count in a vector
• Second order features encode something ‘extra’ about a feature that occurs in a context, something not available in the context itself
  – Native SenseClusters: each feature is represented by a vector of the words with which it occurs
  – Latent Semantic Analysis: each feature is represented by a vector of the contexts in which it occurs

Second Order Representation: Native SenseClusters
• Build a word matrix from the feature selection data
  – Start with bigrams or co-occurrences identified in the feature selection data
  – First word is the row, second word is the column, cell is the score
  – (optionally) reduce dimensionality w/SVD
  – Each row forms a vector of first order co-occurrences
• Replace each word in a context with its row from the word matrix
• Represent the context with the average of all its word vectors
  – Schütze (1998)

Word by Word Matrix

|          | magic | curse | might | error | child |
|----------|-------|-------|-------|-------|-------|
| black    | 123.5 | 0     | 0     | 0     | 43.2  |
| island   | 0     | 189.2 | 0     | 0     | 73.2  |
| military | 0     | 0     | 100.3 | 54.9  | 0     |
| serious  | 0     | 21.2  | 0     | 89.2  | 0     |
| voodoo   | 0     | 0     | 69.4  | 0     | 120.0 |

Word by Word Matrix
• …can also be used to identify sets of related words
• In the case of bigrams, rows represent the first word in a bigram and columns represent the second word
  – Matrix is asymmetric
• In the case of co-occurrences, rows and columns are equivalent
  – Matrix is symmetric
• The vector (row) for each word represents a set of first order features for that word
• Each word in a context to be clustered for which a vector exists (in the word by word matrix) is replaced by that vector in that context

There was an island curse of black magic cast by that voodoo child.

|        | magic | curse | might | error | child |
|--------|-------|-------|-------|-------|-------|
| black  | 123.5 | 0     | 0     | 0     | 43.2  |
| island | 0     | 189.2 | 0     | 0     | 73.2  |
| voodoo | 0     | 0     | 69.4  | 0     | 120.0 |

Second Order Co-Occurrences
• Word vectors for “black” and “island” show similarity, as both occur with “child”
• “black” and “island” are second order co-occurrences of each other, since both occur with “child” but not with each other (i.e., “black island” is not observed)

Second Order Representation
• x1: there was an island curse of black magic cast by that voodoo child
• x1: there was an [curse,child] curse of [magic,child] magic cast by that [might,child] child
• x1: [curse,child] + [magic,child] + [might,child]

There was an island curse of black magic cast by that voodoo child.

|    | magic | curse | might | error | child |
|----|-------|-------|-------|-------|-------|
| x1 | 41.2  | 63.1  | 23.1  | 0     | 78.8  |

Second Order Representation: Native SenseClusters
• Context by Feature/Word
• Cell values do not indicate whether a feature occurred in the context. Rather, they show how strongly that feature (column word) is associated, on average, with the words in the context.

Second Order Representation: Latent Semantic Analysis
• Build a first order representation of the contexts
  – Use any type of features selected from the feature selection data
  – result is a context by feature matrix
• Transpose the resulting first order matrix
  – result is a feature by context matrix
  – (optionally) reduce dimensionality w/SVD
  – Replace each feature in a context with its row from the transposed matrix
• Represent the context with the average of all its context vectors
  – Landauer and Dumais (1997)

First Order Vectors of Unigrams

|    | island | black | curse | magic | child |
|----|--------|-------|-------|-------|-------|
| x1 | 1      | 1     | 1     | 1     | 1     |
| x2 | 0      | 1     | 0     | 1     | 1     |
| x3 | 0      | 0     | 0     | 0     | 0     |
| x4 | 1      | 0     | 1     | 0     | 1     |

Transposed

|        | x1 | x2 | x3 | x4 |
|--------|----|----|----|----|
| island | 1  | 0  | 0  | 1  |
| black  | 1  | 1  | 0  | 0  |
| curse  | 1  | 0  | 0  | 1  |
| magic  | 1  | 1  | 0  | 0  |
| child  | 1  | 1  | 0  | 1  |

harold a known voodoo child was gifted in the arts of black magic

|       | x1 | x2 | x3 | x4 |
|-------|----|----|----|----|
| black | 1  | 1  | 0  | 0  |
| child | 1  | 1  | 0  | 1  |
| magic | 1  | 1  | 0  | 0  |

Second Order Representation
• x2: harold a known voodoo child was gifted in the arts of black magic
• x2: harold a known voodoo [x1,x2,x4] was gifted in the arts of [x1,x2] [x1,x2]
• x2: [x1,x2,x4] + [x1,x2] + [x1,x2]

x2: harold a known voodoo child was gifted in the arts of black magic

|    | x1 | x2 | x3 | x4 |
|----|----|----|----|----|
| x2 | 1  | 1  | 0  | .3 |

Second Order Representation: Latent Semantic Analysis
• Context by Context
• The features in the context are represented by the contexts in which those features occur
• Cell values indicate the similarity between the contexts
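Both second order constructions reduce to the same two steps: look up a vector for each word in the context, then average those vectors. The Python sketch below reproduces the x1 (native SenseClusters) and x2 (Latent Semantic Analysis) examples using plain lists. It is a hypothetical illustration rather than SenseClusters code: the names `WORD_MATRIX`, `native_second_order`, `first_order`, and `lsa_second_order` are invented here, only whole-word matches are used, and the optional SVD step is skipped.

```python
# Word by word matrix built from the bigram feature set: rows are first words,
# columns are second words, cells are the (assumed log-likelihood) scores.
WORD_COLUMNS = ["magic", "curse", "might", "error", "child"]
WORD_MATRIX = {
    "black":    [123.5, 0.0, 0.0, 0.0, 43.2],
    "island":   [0.0, 189.2, 0.0, 0.0, 73.2],
    "military": [0.0, 0.0, 100.3, 54.9, 0.0],
    "serious":  [0.0, 21.2, 0.0, 89.2, 0.0],
    "voodoo":   [0.0, 0.0, 69.4, 0.0, 120.0],
}

# The four example contexts and the unigram features used for the LSA example.
CONTEXTS = {
    "x1": "there was an island curse of black magic cast by that voodoo child",
    "x2": "harold a known voodoo child was gifted in the arts of black magic",
    "x3": "despite their military might it was a serious error to attack",
    "x4": "military might is no defense against a voodoo child or an island curse",
}
UNIGRAMS = ["island", "black", "curse", "magic", "child"]


def average(vectors):
    """Component-wise mean of a non-empty list of equal-length vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def native_second_order(context, word_matrix):
    """Replace each context word that has a row in the word matrix by that row,
    then average the rows; words without a row are ignored."""
    rows = [word_matrix[w] for w in context.split() if w in word_matrix]
    return average(rows) if rows else None


def first_order(contexts, features):
    """Binary context-by-feature matrix: 1 if the feature occurs in the context."""
    return {name: [1 if f in text.split() else 0 for f in features]
            for name, text in contexts.items()}


def lsa_second_order(context, contexts, features):
    """Replace each feature in the context by its row of the transposed
    (feature-by-context) matrix, then average those rows."""
    matrix = first_order(contexts, features)
    names = list(contexts)
    transposed = {f: [matrix[n][k] for n in names] for k, f in enumerate(features)}
    rows = [transposed[w] for w in context.split() if w in transposed]
    return average(rows) if rows else None


if __name__ == "__main__":
    x1 = native_second_order(CONTEXTS["x1"], WORD_MATRIX)
    print(dict(zip(WORD_COLUMNS, [round(v, 2) for v in x1])))
    # {'magic': 41.17, 'curse': 63.07, 'might': 23.13, 'error': 0.0, 'child': 78.8}
    x2 = lsa_second_order(CONTEXTS["x2"], CONTEXTS, UNIGRAMS)
    print(dict(zip(CONTEXTS, [round(v, 2) for v in x2])))
    # {'x1': 1.0, 'x2': 1.0, 'x3': 0.0, 'x4': 0.33}
```

For x1 the native vector is the mean of the island, black, and voodoo rows; for x2 the LSA vector is the mean of the child, black, and magic rows of the transposed matrix. Skipping SVD keeps the arithmetic visible; with SVD, the same averaging would be applied to the reduced rows instead.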

Summary
• First order representations are intuitive, but…
  – Can suffer from sparsity
  – Contexts are represented based on the features that occur in those contexts
• Second order representations are harder to visualize, but…
  – Allow a word to be represented by the words it co-occurs with (i.e., the company it keeps)
  – Allow a context to be represented by the words that occur with the words in the context
  – Allow a feature to be represented by the contexts in which it occurs
  – Allow a context to be represented by the contexts where the words in the context occur
  – Help combat sparsity…

References
• Pedersen and Bruce, 1997 (EMNLP): first order method of discrimination
  http://acl.ldc.upenn.edu/W/W97/W97-0322.pdf
• Landauer and Dumais, 1997 (Psychological Review): overview of LSA
  http://lsa.colorado.edu/papers/plato/plato.annote.html
• Schütze, 1998 (Computational Linguistics): introduced the second order method
  http://acl.ldc.upenn.edu/J/J98/J98-1004.pdf
• Purandare and Pedersen, 2004 (CoNLL): compared first and second order methods
  http://acl.ldc.upenn.edu/hlt-naacl2004/conll04/pdf/purandare.pdf
  – First order is better if you have lots of data
  – Second order is better with smaller amounts of data

Dimensionality Reduction: Singular Value Decomposition

Motivation
• First order matrices are very sparse
  – Context by feature
  – Word by word
• NLP data is noisy
  – No stemming performed
  – Synonyms

Many Methods
• Singular Value Decomposition (SVD)
  – SVDPACKC http://www.netlib.org/svdpack/
• Multi-Dimensional Scaling (MDS)
• Principal Components Analysis (PCA)
• Independent Components Analysis (ICA)
• Linear Discriminant Analysis (LDA)
• etc…

Effect of SVD
• SVD reduces a matrix to a given number of dimensions. This may convert a word-level space into a semantic or conceptual space
  – If “dog” and “collie” and “wolf” are dimensions/columns in a word co-occurrence matrix, after SVD they may be a single dimension that represents “canines”

Effect of SVD
• The dimensions of the matrix after SVD are principal components that represent the meaning of concepts
  – Similar columns are grouped together
• SVD is a way of smoothing a very sparse matrix, so that there are very few zero-valued cells after SVD

How can SVD be used?
• SVD on first order contexts will reduce a context by feature representation down to a smaller number of features
  – Latent Semantic Analysis performs SVD on a feature by context representation, where the contexts are reduced
• SVD is used in creating second order context representations for native SenseClusters
  – Reduce the word by word matrix

Word by Word Matrix (native SenseClusters, 2nd order)

[Table: rows pc, body, disk, petri, lab, sales, linux, debt; columns apple, blood, cells, ibm, data, box, tissue, graphics, memory, organ, plasma; cells hold small integer scores (0 to 4)]

Singular Value Decomposition

$$A = U D V^{\top}$$

U

[Matrix: 8 rows × 11 columns of values between -1 and 1]

D

9.19 6.36 3.99 3.25 2.52 2.30 1.26 0.66 0.00 0.00 0.00

V

[Matrix: 11 rows × 11 columns of values between -1 and 1]

Word by Word Matrix After SVD

|       | apple | blood | cells | ibm | data | tissue | graphics | memory | organ | plasma |
|-------|-------|-------|-------|-----|------|--------|----------|--------|-------|--------|
| pc    | .73   | .00   | .11   | 1.3 | 2.0  | .01    | .86      | .77    | .00   | .09    |
| body  | .00   | 1.2   | 1.3   | .00 | .33  | 1.6    | .00      | .85    | .84   | 1.5    |
| disk  | .76   | .00   | .01   | 1.3 | 2.1  | .00    | .91      | .72    | .00   | .00    |
| germ  | .00   | 1.1   | 1.2   | .00 | .49  | 1.5    | .00      | .86    | .77   | 1.4    |
| lab   | .21   | 1.7   | 2.0   | .35 | 1.7  | 2.5    | .18      | 1.7    | 1.2   | 2.3    |
| sales | .73   | .15   | .39   | 1.3 | 2.2  | .35    | .85      | .98    | .17   | .41    |
| linux | .96   | .00   | .16   | 1.7 | 2.7  | .03    | 1.1      | 1.0    | .00   | .13    |
| debt  | 1.2   | .00   | .00   | 2.1 | 3.2  | .00    | 1.5      | 1.1    | .00   | .00    |

Second Order Co-Occurrences
• I got a new disk today!
• What do you think of linux?

|       | apple | blood | cells | ibm | data | tissue | graphics | memory | organ | plasma |
|-------|-------|-------|-------|-----|------|--------|----------|--------|-------|--------|
| disk  | .76   | .00   | .01   | 1.3 | 2.1  | .00    | .91      | .72    | .00   | .00    |
| linux | .96   | .00   | .16   | 1.7 | 2.7  | .03    | 1.1      | 1.0    | .00   | .13    |

• These two contexts share no words in common, yet they are similar! disk and linux both occur with “Apple”, “IBM”, “data”, “graphics”, and “memory”
• The two contexts are similar because they share many second order co-occurrences

References
• Deerwester, S., Dumais, S.T., Furnas, G.W., Landauer, T.K., and Harshman, R., Indexing by Latent Semantic Analysis, Journal of the American Society for Information Science, vol. 41, 1990
• Landauer, T. and Dumais, S., A Solution to Plato's Problem: The Latent Semantic Analysis Theory of Acquisition, Induction and Representation of Knowledge, Psychological Review, vol. 104, 1997
• Schütze, H., Automatic Word Sense Discrimination, Computational Linguistics, vol. 24, 1998
• Berry, M.W., Drmac, Z., and Jessup, E.R., Matrices, Vector Spaces, and Information Retrieval, SIAM Review, vol. 41, 1999

Clustering: Partitional Methods, Cluster Stopping, Cluster Labeling

Many, many methods…
• CLUTO supports a wide range of different clustering methods
  – Agglomerative
    • Average, single, complete link…
  – Partitional
    • K-means (Direct)
  – Hybrid
    • Repeated bisections
• SenseClusters integrates with CLUTO
  – http://www-users.cs.umn.edu/~karypis/cluto/

General Methodology
• Represent the contexts to be clustered as first or second order vectors
• Cluster the context vectors directly
  – vcluster
• … or convert them to a similarity matrix and then cluster
  – scluster

Agglomerative Clustering
• Create a similarity matrix of the contexts to be clustered
  – Results in a symmetric “instance by instance” matrix, where each cell contains the similarity score between a pair of instances
  – Typically a first order representation, where similarity is based on the features observed in the pair of instances

Measuring Similarity
• Integer Values
  – Matching Coefficient: $|X \cap Y|$
  – Jaccard Coefficient: $\frac{|X \cap Y|}{|X \cup Y|}$
  – Dice Coefficient: $\frac{2\,|X \cap Y|}{|X| + |Y|}$
• Real Values
  – Cosine: $\frac{X \cdot Y}{\lVert X \rVert \, \lVert Y \rVert}$

Agglomerative Clustering
• Apply an agglomerative clustering algorithm to the similarity matrix
  – To start, each context is its own cluster
  – Form a cluster from the most similar pair of contexts
  – Repeat until the desired number of clusters is obtained
• Advantages: high quality clustering
• Disadvantages
  – computationally expensive; must carry out exhaustive pairwise comparisons

Average Link Clustering

[Worked example: four contexts S1 to S4 with pairwise similarities S1–S2 = 3, S1–S3 = 4, S1–S4 = 2, S2–S3 = 2, S2–S4 = 0. The most similar pair, S1 and S3, is merged first. Under average link, the similarity of the new cluster S1S3 to each remaining context is the average of its members' similarities: (3 + 2)/2 = 2.5 to S2 and 1.5 to S4. S2 therefore joins next, forming S1S3S2, with S4 remaining separate.]

Partitional Methods
• Randomly create centroids equal to the number of clusters you wish to find
• Assign each context to the nearest centroid
• After all contexts are assigned, re-compute the centroids
  – “best” location decided by criterion function
• Repeat until stable clusters are found
  – Centroids don’t shift from iteration to iteration

Partitional Methods
• Advantages: fast
• Disadvantages
  – Results can depend on the initial placement of centroids
  – Must specify the number of clusters ahead of time
    • maybe not…

[Figures: partitional clustering walk-through (k=2): vectors to be clustered; random initial centroids; assignment of clusters; recalculation of centroids; reassignment of clusters; recalculation of centroids; reassignment of clusters]

Partitional Criterion Functions
• Intra-Cluster (Internal) similarity/distance
  – How close together are members of a cluster?
  – Closer together is better
• Inter-Cluster (External) similarity/distance
  – How far apart are the different clusters?
  – Further apart is better

Intra-Cluster Similarity
• Ball of String (I1)
  – How far is each member from every other member?
• Flower (I2)
  – How far is each member of a cluster from its centroid?

[Figures: Contexts to be Clustered; Ball of String (I1 Internal Criterion Function); Flower (I2 Internal Criterion Function)]

Inter-Cluster Similarity
• The Fan (E1)
  – How far is each cluster centroid from the centroid of the entire collection of contexts?
  – Maximize that distance

[Figure: The Fan (E1 External Criterion Function)]

Hybrid Criterion Functions
• Balance internal and external similarity
  – H1 = I1/E1
  – H2 = I2/E1
• Want internal similarity to increase while external similarity decreases
• Want internal distances to decrease while external distances increase

Cluster Stopping
• Many clustering algorithms require the user to specify the number of clusters before clustering
• But the user often doesn't know the number of clusters, and in fact finding it out might be the goal of clustering

Criterion Functions Can Help
• Run the partitional algorithm for k = 1 to deltaK
  – deltaK is a user-estimated or automatically determined upper bound on the number of clusters
• Find the value of k at which the criterion function does not increase significantly at k+1
• Clustering can stop at this value, since adding more clusters (increasing k) brings no apparent further improvement

[Figure: H2 versus k (T. Blair – V. Putin – S. Hussein)]

PK2
• Based on Hartigan, 1975
• When the ratio approaches 1, clustering is at a plateau
• Select the value of k that is closest to, but outside of, the standard deviation interval
$$\mathrm{PK2}(k) = \frac{\mathrm{H2}(k)}{\mathrm{H2}(k-1)}$$

[Figure: PK2 predicts 3 senses (T. Blair – V. Putin – S. Hussein)]

PK3
• Related to Salvador and Chan, 2004
• Inspired by the Dice coefficient
• Values close to 1 mean clustering is improving …
• Select the value of k that is closest to, but outside of, the standard deviation interval
$$\mathrm{PK3}(k) = \frac{2 \cdot \mathrm{H2}(k)}{\mathrm{H2}(k-1) + \mathrm{H2}(k+1)}$$

[Figure: PK3 predicts 3 senses (T. Blair – V. Putin – S. Hussein)]

Adapted Gap Statistic
• Gap Statistic by Tibshirani et al. (2001)
• Cluster stopping by comparing the observed data to randomly generated data
  – Fix the marginal totals of the observed data and generate random matrices
  – Random matrices should have 1 cluster, since there is no structure in the data
  – Compare the criterion function of the observed data to that of the random data
  – The point where the difference between the criterion functions is greatest is where the observed data is least like noise (and is where we should stop)

[Figures: Adapted Gap Statistic; Gap predicts 3 senses (T. Blair – V. Putin – S. Hussein)]

References
• Hartigan, J. Clustering Algorithms. Wiley, 1975.
  – Basis for SenseClusters stopping method PK2
• Mojena, R. Hierarchical Grouping Methods and Stopping Rules: An Evaluation. The Computer Journal, vol. 20, 1977.
  – Basis for SenseClusters stopping method PK1
• Milligan, G. and Cooper, M. An Examination of Procedures for Determining the Number of Clusters in a Data Set. Psychometrika, vol. 50, 1985.
  – Very extensive comparison of cluster stopping methods
• Tibshirani, R., Walther, G. and Hastie, T. Estimating the Number of Clusters in a Dataset via the Gap Statistic. Journal of the Royal Statistical Society (Series B), 2001.
• Pedersen, T. and Kulkarni, A. Selecting the "Right" Number of Senses Based on Clustering Criterion Functions. Proceedings of the Posters and Demo Program of the Eleventh Conference of the European Chapter of the Association for Computational Linguistics, 2006.
  – Describes SenseClusters stopping methods

Cluster Labeling
• Once a cluster is discovered, how can a description of its contexts be generated automatically?
• In the case of contexts, you might be able to identify significant lexical features in the contents of the clusters and use those as a preliminary label

Results of Clustering
• Each cluster consists of some number of contexts
• Each context is a short unit of text
• Apply measures of association to the contents of each cluster to determine the N most significant bigrams
• Use those bigrams as a label for the cluster

Label Types
• The N most significant bigrams for each cluster act as a descriptive label
• The M most significant bigrams that are unique to each cluster act as a discriminating label

George Miller Labels
• Cluster 0: george miller, delay resignation, tom delay, 202 2252095, 2205 rayburn,, constituent services, bethel high, congressman george, biography constituent
• Cluster 1: george miller, happy feet, pig in, lorenzos oil, 1998 babe, byron kennedy, babe pig, mad max
• Cluster 2: george a, october 26, a miller, essays in, mind essays, human mind

Evaluation Techniques
Comparison to gold standard data

Evaluation
• If sense-tagged text is available, it can be used for evaluation
  – But don't use the sense tags for clustering or feature selection!
• Assume that the sense tags represent the "true" clusters, and compare these to the discovered clusters
  – Find the mapping of clusters to senses that attains maximum accuracy

Evaluation
• Pseudo words are especially useful, since it is hard to find data that has already been sense-tagged (discriminated)
  – Pick two or more words or names from a corpus and conflate them into one name, then see how well you can discriminate them
  – http://www.d.umn.edu/~tpederse/tools.html

Baseline Algorithm
• Group all instances into one cluster; this reaches an "accuracy" equal to that of the majority classifier
• What if the clustering said everything should be in the same cluster?

Baseline Performance

If C3 is labeled S3:

| | S1 | S2 | S3 | Totals |
|---|---|---|---|---|
| C1 | 0 | 0 | 0 | 0 |
| C2 | 0 | 0 | 0 | 0 |
| C3 | 80 | 35 | 55 | 170 |
| Totals | 80 | 35 | 55 | 170 |

Accuracy = (0 + 0 + 55) / 170 = .32

If C3 is labeled S1:

| | S3 | S2 | S1 | Totals |
|---|---|---|---|---|
| C1 | 0 | 0 | 0 | 0 |
| C2 | 0 | 0 | 0 | 0 |
| C3 | 55 | 35 | 80 | 170 |
| Totals | 55 | 35 | 80 | 170 |

Accuracy = (0 + 0 + 80) / 170 = .47

Evaluation
• Suppose that C1 is labeled S1, C2 as S2, and C3 as S3
• Accuracy = (10 + 0 + 10) / 170 = 12%
• The diagonal shows how many members of each cluster actually belong to the sense given in the corresponding column
• Can the "columns" be rearranged to improve the overall accuracy?
  – Optimally assign clusters to senses

| | S1 | S2 | S3 | Totals |
|---|---|---|---|---|
| C1 | 10 | 30 | 5 | 45 |
| C2 | 20 | 0 | 40 | 60 |
| C3 | 50 | 5 | 10 | 65 |
| Totals | 80 | 35 | 55 | 170 |

Evaluation
• Assigning C1 to S2, C2 to S3, and C3 to S1 results in 120/170 = 71%
• Find the ordering of the columns in the matrix that maximizes the sum of the diagonal
• This is an instance of the Assignment Problem from Operations Research, or equivalently of finding a maximum-weight matching in a bipartite graph in Graph Theory

| | S2 | S3 | S1 | Totals |
|---|---|---|---|---|
| C1 | 30 | 5 | 10 | 45 |
| C2 | 0 | 40 | 20 | 60 |
| C3 | 5 | 10 | 50 | 65 |
| Totals | 35 | 55 | 80 | 170 |

Analysis
• Unsupervised methods may not discover clusters equivalent to the classes learned in supervised learning
• Evaluation that assumes the sense tags represent the "true" clusters is likely a bit harsh. Alternatives?
  – Humans could look at the members of each cluster and determine the nature of the relationship or meaning that they all share
  – Use the contents of the cluster to generate a descriptive label that could be inspected by a human

Hands-On Experience
Experiments with SenseClusters

Things to Try
• Feature Identification
  – Type of feature
  – Measures of association
• Context Representation
  – native SenseClusters (1st and 2nd order)
  – Latent Semantic Analysis (2nd order)
• Automatic Stopping (or not)
• SVD (or not)
• Evaluation
• Labeling

Experimental Data
• Available on the Web site
  – http://senseclusters.sourceforge.net
• Available on the LIVE CD
• Mostly "Name Conflate" data

Creating Experimental Data
• NameConflate program
  – Creates name-conflated data from the English GigaWord corpus
• Text2Headless program
  – Converts plain text into headless contexts
• http://www.d.umn.edu/~tpederse/tools.html
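As a starting point for hands-on experiments, the partitional procedure described earlier (random initial centroids, assign each context to the nearest centroid, recompute, repeat until the centroids stop moving) can be sketched as plain k-means. This is a minimal illustration, not the SenseClusters implementation: it assumes Euclidean distance, initial centroids chosen as k random input vectors, and hypothetical function names (`kmeans`, `euclidean`, `mean_vector`).

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = Tuple[float, ...]


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length vectors (an assumed metric)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def mean_vector(vectors: List[Vector]) -> Vector:
    """Component-wise mean, used as the centroid of a cluster."""
    return tuple(sum(col) / len(vectors) for col in zip(*vectors))


def kmeans(vectors: List[Vector], k: int, seed: int = 0, max_iter: int = 100):
    """Return (cluster label for each vector, final centroids)."""
    rng = random.Random(seed)
    # Random initial centroids: k input vectors chosen at random.
    centroids: List[Vector] = rng.sample(vectors, k)
    labels: List[int] = []
    for _ in range(max_iter):
        # Assign every context to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: euclidean(v, centroids[c])) for v in vectors
        ]
        # If no assignment changed, the centroids cannot shift either: stop.
        if new_labels == labels:
            break
        labels = new_labels
        # Recompute each centroid from its current members.
        for c in range(k):
            members = [v for v, lab in zip(vectors, labels) if lab == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = mean_vector(members)
    return labels, centroids
```

Because results can depend on the initial centroids, one option is to run the sketch with several `seed` values and keep the solution that scores best under a criterion function.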
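The Ball of String (I1), Flower (I2), Fan (E1) and hybrid (H1, H2) criterion functions introduced earlier can be made concrete with cosine similarity. The formulas below follow the cosine-based definitions used in the CLUTO clustering toolkit, which we assume here: I1 sums, per cluster, all pairwise member similarities divided by cluster size; I2 sums each member's similarity to its own centroid; E1 sums each centroid's similarity to the overall centroid weighted by cluster size. Because these are similarities rather than distances, I1 and I2 are maximized and E1 is minimized, so higher H1 and H2 are better. Function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def centroid(vectors: List[Vector]) -> List[float]:
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def clusters_of(vectors: List[Vector], labels: List[int]) -> Dict[int, List[Vector]]:
    """Group vectors by their cluster label."""
    groups: Dict[int, List[Vector]] = {}
    for v, lab in zip(vectors, labels):
        groups.setdefault(lab, []).append(v)
    return groups


def i1(vectors: List[Vector], labels: List[int]) -> float:
    """Ball of string: similarity of every member to every other member."""
    total = 0.0
    for members in clusters_of(vectors, labels).values():
        pair_sum = sum(cosine(a, b) for a in members for b in members)
        total += pair_sum / len(members)
    return total


def i2(vectors: List[Vector], labels: List[int]) -> float:
    """Flower: similarity of each member to its own cluster centroid."""
    total = 0.0
    for members in clusters_of(vectors, labels).values():
        c = centroid(members)
        total += sum(cosine(v, c) for v in members)
    return total


def e1(vectors: List[Vector], labels: List[int]) -> float:
    """Fan: size-weighted similarity of each cluster centroid to the centroid
    of the whole collection (smaller means the clusters fan out further)."""
    whole = centroid(vectors)
    groups = clusters_of(vectors, labels).values()
    return sum(len(m) * cosine(centroid(m), whole) for m in groups)


def h1(vectors: List[Vector], labels: List[int]) -> float:
    return i1(vectors, labels) / e1(vectors, labels)


def h2(vectors: List[Vector], labels: List[int]) -> float:
    return i2(vectors, labels) / e1(vectors, labels)
```

For four contexts `[(1, 0), (1, 0), (0, 1), (0, 1)]`, the natural split `[0, 0, 1, 1]` scores higher on H2 than the mixed split `[0, 1, 0, 1]`, which is the behaviour the hybrid functions are meant to reward.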
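Given H2 values for k = 1 to deltaK, the PK2 and PK3 ratios from the cluster stopping slides are straightforward to compute. The selection step ("closest to but outside of the standard deviation interval") is implemented here under one assumed reading: take the mean and population standard deviation of the ratios, keep only values more than one standard deviation from the mean, and return the k whose value lies closest to that interval. The exact direction and tie-breaking used in SenseClusters may differ, and `select_k` is a hypothetical name.

```python
import statistics
from typing import Dict, Optional


def pk2(h2: Dict[int, float]) -> Dict[int, float]:
    """PK2(k) = H2(k) / H2(k-1), for every k whose predecessor is known."""
    return {k: h2[k] / h2[k - 1] for k in sorted(h2) if k - 1 in h2}


def pk3(h2: Dict[int, float]) -> Dict[int, float]:
    """PK3(k) = 2 * H2(k) / (H2(k-1) + H2(k+1)), a Dice-like ratio."""
    return {
        k: 2 * h2[k] / (h2[k - 1] + h2[k + 1])
        for k in sorted(h2)
        if k - 1 in h2 and k + 1 in h2
    }


def select_k(scores: Dict[int, float]) -> Optional[int]:
    """Assumed reading of the rule: among values lying outside
    mean +/- one standard deviation, pick the k closest to that interval."""
    values = list(scores.values())
    mu = statistics.mean(values)
    sd = statistics.pstdev(values)
    # Distance beyond the interval boundary, for values outside it only.
    outside = {k: abs(v - mu) - sd for k, v in scores.items() if abs(v - mu) > sd}
    if not outside:
        return None
    return min(outside, key=outside.get)
```

For H2 values `{1: 2.0, 2: 4.0, 3: 6.0, 4: 6.0}`, PK2 gives `{2: 2.0, 3: 1.5, 4: 1.0}`; the ratio reaching 1 at k=4 is the plateau the PK2 slide describes.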
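The cluster labeling step (rank the bigrams in each cluster with a measure of association, then report descriptive and discriminating labels) might look like the sketch below. It assumes pointwise mutual information (PMI) as the measure, the tutorial's default white-space tokenization, and a hypothetical frequency cutoff `min_count`, since PMI tends to overrate very rare bigrams. "Unique to each cluster" is read as "not scored in any other cluster"; SenseClusters itself may use other association measures and rules.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple

Bigram = Tuple[str, str]


def bigram_pmi(contexts: List[str], min_count: int = 2) -> Dict[Bigram, float]:
    """PMI of each adjacent word pair occurring at least min_count times."""
    pairs: Counter = Counter()
    for text in contexts:
        tokens = text.lower().split()  # white-space tokenization
        pairs.update(zip(tokens, tokens[1:]))
    total = sum(pairs.values())
    first: Counter = Counter()
    second: Counter = Counter()
    for (w1, w2), n in pairs.items():
        first[w1] += n
        second[w2] += n
    return {
        bg: math.log2(n * total / (first[bg[0]] * second[bg[1]]))
        for bg, n in pairs.items()
        if n >= min_count
    }


def top(scores: Dict[Bigram, float], n: int) -> List[Bigram]:
    """Highest-scoring bigrams first; ties broken alphabetically."""
    return [bg for bg, _ in sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))[:n]]


def label_clusters(clusters: Dict[int, List[str]], n: int = 5, m: int = 3, min_count: int = 2):
    """Return (descriptive labels, discriminating labels) per cluster."""
    scores = {c: bigram_pmi(texts, min_count) for c, texts in clusters.items()}
    descriptive = {c: top(s, n) for c, s in scores.items()}
    discriminating = {}
    for c, s in scores.items():
        elsewhere = set().union(*(scores[d] for d in scores if d != c))
        unique = {bg: v for bg, v in s.items() if bg not in elsewhere}
        discriminating[c] = top(unique, m)
    return descriptive, discriminating
```

On a toy George Miller corpus, "george miller" shows up in the descriptive labels of both clusters but cannot be a discriminating label, matching the pattern in the George Miller Labels slide.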
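The evaluation procedure from the slides (try every assignment of clusters to senses and keep the one that maximizes the diagonal) can be checked against the worked examples with a brute-force sketch. Rows are clusters, columns are gold senses; `accuracy` and `best_mapping` are hypothetical names, and the matrix is assumed to have at least as many sense columns as cluster rows.

```python
from itertools import permutations
from typing import Sequence, Tuple

Matrix = Sequence[Sequence[int]]


def accuracy(matrix: Matrix, mapping: Sequence[int]) -> float:
    """mapping[c] is the sense column assigned to cluster row c."""
    total = sum(sum(row) for row in matrix)
    hits = sum(matrix[c][s] for c, s in enumerate(mapping))
    return hits / total


def best_mapping(matrix: Matrix) -> Tuple[Tuple[int, ...], float]:
    """Try every one-to-one assignment of clusters to senses and keep the
    one that maximizes the sum of the resulting diagonal."""
    n_clusters, n_senses = len(matrix), len(matrix[0])
    if n_clusters > n_senses:
        raise ValueError("pad the matrix with empty sense columns first")
    best = max(
        permutations(range(n_senses), n_clusters),
        key=lambda m: accuracy(matrix, m),
    )
    return best, accuracy(matrix, best)


# The 3x3 example from the slides: rows C1..C3, columns S1..S3.
example = [[10, 30, 5], [20, 0, 40], [50, 5, 10]]
mapping, acc = best_mapping(example)
print(mapping, round(acc, 2))  # C1->S2, C2->S3, C3->S1
```

Brute force grows as k!, so for more than a handful of clusters an assignment-problem solver such as the Hungarian algorithm (for example SciPy's `linear_sum_assignment` with `maximize=True`) is the more practical choice.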
Thank you!
• Questions or comments on the tutorial or SenseClusters are welcome at any time: tpederse@d.umn.edu
• SenseClusters is freely available via LIVE CD, the Web, and in source code form: http://senseclusters.sourceforge.net
• SenseClusters papers are available at: http://www.d.umn.edu/~tpederse/senseclusters-pubs.html

Target Word Clustering: SenseClusters Native Mode
• line data
  – 6 manually determined senses
  – approx. 4,000 contexts
• second order bigram features
  – selected with pmi
  – use SVD
  – word by word co-occurrence matrix
• cluster stopping
  – all methods

Target Word Clustering: Latent Semantic Analysis
• line data
  – 6 manually determined senses
  – approx. 4,000 contexts
• second order bigram features
  – selected with pmi
  – use SVD
  – bigram by context matrix
• cluster stopping
  – all methods

Feature Clustering: Latent Semantic Analysis
• line data
  – 6 manually determined senses
  – approx. 4,000 contexts
• first order bigram features
  – selected with pmi
  – use SVD
  – bigram by context matrix
• cluster stopping
  – all methods
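The native-mode experiment above builds a word by word co-occurrence matrix from the selected bigrams and, following the tutorial outline, represents each context as the average of the vectors of its features. A minimal sketch of that second-order step, with SVD omitted, is shown below. It assumes rows are the first words and columns the second words of the selected bigrams, with each cell holding that bigram's association score; the actual cell values and matrix layout in SenseClusters may differ, and both function names are hypothetical.

```python
from typing import Dict, List, Tuple

Bigram = Tuple[str, str]


def word_by_word(bigrams: Dict[Bigram, float]):
    """Build a dense word-by-word matrix: one row per first word,
    one column per second word, cell = the bigram's score."""
    rows = sorted({w1 for w1, _ in bigrams})
    cols = sorted({w2 for _, w2 in bigrams})
    col_index = {w: j for j, w in enumerate(cols)}
    matrix = {w: [0.0] * len(cols) for w in rows}
    for (w1, w2), score in bigrams.items():
        matrix[w1][col_index[w2]] = score
    return matrix, cols


def second_order_vector(context: str, matrix: Dict[str, List[float]], n_cols: int) -> List[float]:
    """Average the row vectors of every context word that has a row;
    words without a row contribute nothing."""
    vectors = [matrix[t] for t in context.lower().split() if t in matrix]
    if not vectors:
        return [0.0] * n_cols
    return [sum(col) / len(vectors) for col in zip(*vectors)]
```

Two contexts can end up close together even when they share no words, as long as their words co-occur with similar features; this is the usual motivation for second-order representations.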