L3_Ch_9_MCP-2014.doc


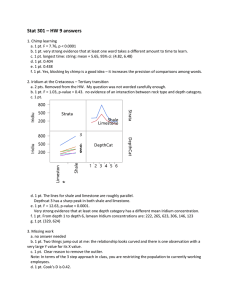

Lecture 3: Multiple Comparisons (C-9)

An immediate follow-up to ANOVA, once the null hypothesis has been rejected and we conclude that there IS a difference among the means tested, is to see where the differences are. Is it between groups 1 and 2, 1 and 3, or 2 and 3 in our example from Data Set 1? As we said earlier, we don't want to run multiple t-tests on the differences, because doing so inflates the Type I error. So let us address what Type I error is and how we can hope to control it in a multiple testing scenario like ANOVA.

What is Type I error in testing?

In any hypothesis test we can make errors. For a single hypothesis:

| Decision \ Reality | $H_0$ is true | $H_0$ is false |
|---|---|---|
| Reject $H_0$ | Type I error | Correct |
| Fail to reject $H_0$ | Correct | Type II error |

In any testing scenario we try to fix the Type I error at some level and then minimize the Type II error (maximize power). So we impose the condition that

$$\text{Type I error rate} = \text{Prob}(\text{Reject } H_0 \mid H_0 \text{ is true}) \le \alpha \quad (\text{typically } \alpha = 0.05).$$

So for a single hypothesis test, Type I error and its control are well defined.

Multiple Hypotheses (like ANOVA)

Often we conduct experiments and studies to try to answer several questions at once. So the question is whether each study should answer just ONE question. Sir R.A. Fisher made the point that “Nature will respond to a carefully thought out questionnaire; indeed if we ask her a single question, she will often refuse to answer till some other topic has been discussed”. So in real life we are often dealing with studies and experiments designed to answer multiple questions, which leads to multiple inferences. Let's consider some examples:

1. As a follow-up to the data in Table 1, we could be interested in seeing whether the mean wages for ethnicity 1 differ from the mean wages for ethnicity 2, and similarly whether the mean wages differ between ethnicities 1 and 3 and between ethnicities 2 and 3. Interested in: $\mu_1 - \mu_2$, $\mu_1 - \mu_3$, $\mu_2 - \mu_3$.
2. We could be interested in comparing the mean wages for ethnicities 2 and 3 to that of ethnicity 1.
Here ethnicity 1 is treated as the standard to which we want to compare the others. Interested in: $\mu_2 - \mu_1$, $\mu_3 - \mu_1$.
3. We could be interested in comparing the mean wages for ethnicity 1 to the mean of ethnicities 2 and 3. Interested in: $\mu_1 - (\mu_2 + \mu_3)/2$.

What are we interested in after ANOVA?

The above three are examples of:

1. Pairwise comparisons: comparing all pairs, as in example 1.
2. Comparison to control: comparing all treatments to a standard treatment.
3. Linear contrasts: a linear combination of the means whose coefficients sum to 0, $L = \sum_i c_i \mu_i$ where $\sum_i c_i = 0$.

So as a follow-up to ANOVA we are generally interested in multiple inferences. This brings us to the concept of a “family of inferences”. According to Hochberg and Tamhane (1987):

Family: Any collection of inferences for which it is meaningful to take into account some combined measure of errors is called a family.

A family can be finite or infinite.

Examples of a finite family:

1. All pairwise comparisons arising in ANOVA.
2. Comparison to control.

Example of an infinite family:

1. All possible contrasts arising from ANOVA.

So here we may have multiple hypotheses that need answers. As an example, consider a finite family: the pairwise differences arising in ANOVA. Here our hypotheses are

$$H_0: \mu_i = \mu_{i'} \quad \text{for each pair } i \ne i'.$$

Here we have a total of $\binom{k}{2}$ hypotheses. Let's call this total number $m$. What does the decision table above look like now?

| Reality \ Decision | # Reject $H_0$ | # Fail to reject $H_0$ | Totals |
|---|---|---|---|
| # $H_0$ is true | $V$ = # Type I errors | $U$ | $m_0$ |
| # $H_0$ is false | $S$ | $T$ = # Type II errors | $m - m_0$ |
| Totals | $R$ | $m - R$ | $m$ |

Looking at this decision table, what can we actually KNOW in any given situation? We can know $R$ (the number of hypotheses we reject), and we always know $m$, the total number of hypotheses tested. So what we decide to control depends on what we care about. This brings us to the question of error rates.

Per Comparison Error Rate (PCE): the expected proportion of Type I errors (incorrect rejections of a true null hypothesis), $PCE = E(V)/m$. For our example, using PCE would mean doing each pairwise comparison at level $\alpha$.
So here we do not control the error rate of the entire family; we control the error only for each specific comparison. This is also called the comparison-wise error rate.

Family-wise Error Rate (FWE): the probability of making any Type I error in the family of inferences, $FWE = P(V \ge 1)$. This controls the error rate for the entire family of inferences. Tukey called this the experiment-wise error rate. In our example of pairwise comparisons, a procedure that controls FWE must control the error rate for the entire family, so each test has to be done at a level lower than $\alpha$.

Per-Family Error Rate (PFE): the expected number of wrong rejections in any finite family, $PFE = E(V)$. PFE is well defined for finite families. For an infinite family PFE can be infinite, so it isn't used as commonly as the others.

It is easy to show the relationship

$$PCE \le FWE \le PFE.$$

To think about this, consider a finite family in which the $m$ inferences are independent of each other (not true for pairwise differences) and all $m$ null hypotheses are true. If each test is done at level $\alpha$, then $PCE = \alpha$, $PFE = m\alpha$, and $FWE = 1 - (1 - \alpha)^m$.

False Discovery Rate (FDR): FDR was introduced in the literature relatively recently and is making a big splash in most disciplines. It is the expected proportion of incorrect rejections among the rejected null hypotheses,

$$FDR = E(V/R),$$

with $V/R$ taken to be 0 when $R = 0$. So it uses only the $R$ rejected hypotheses out of $m$ for multiplicity control. It is therefore a lot more liberal than FWE, and more powerful.

Which error rate to control?

Most statisticians and practitioners see the drawback of PCE, so the choice in the past was between FWE and PFE. While both have supporters and detractors, there is general support for FWE because it is defined for both finite and infinite families. Hochberg and Tamhane say that if “high statistical validity must be attached to exploratory inferences”, FWE is the method of choice. Recently, however, the argument is no longer between FWE and PFE but between FDR and FWE, and it appears that FDR is making headway.
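The relationship among PCE, FWE and PFE for independent tests can be checked numerically. The following is a minimal sketch, assuming independent tests with every null hypothesis true, as in the illustration above; the function name `error_rates` is hypothetical and chosen only for this example.

```python
def error_rates(m, alpha=0.05):
    """Return (PCE, FWE, PFE) for m independent tests, each run at level alpha,
    assuming all m null hypotheses are true."""
    if m < 1:
        raise ValueError("m must be a positive integer")
    if not 0 < alpha < 1:
        raise ValueError("alpha must lie strictly between 0 and 1")
    pce = alpha                   # per-comparison error rate
    fwe = 1 - (1 - alpha) ** m    # probability of at least one Type I error
    pfe = m * alpha               # expected number of Type I errors
    return pce, fwe, pfe


# Print the three rates for a few family sizes.
for m in (1, 3, 10, 45):
    pce, fwe, pfe = error_rates(m)
    print(f"m={m:2d}  PCE={pce:.3f}  FWE={fwe:.3f}  PFE={pfe:.3f}")
```

For m = 3 this gives an FWE of about 0.143 and a PFE of 0.15, both well above the per-comparison level of 0.05. Pairwise comparisons from one ANOVA are not independent, so these numbers are only an illustration of how quickly the family-wise rate grows.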
Before we discuss different methods of multiplicity control, let's talk about the types of control one can impose:

1. No control
2. Weak control
3. Strong control

For obvious reasons we won't discuss the first.

Weak control: This controls Type I error ONLY under the overall null hypothesis. An example of weak control is Fisher's protected LSD, where you first test the overall null and then follow up with $\binom{k}{2}$ t tests, each at level $\alpha$. This ONLY gives you protection if the overall null is true, i.e. if $\mu_1 = \mu_2 = \dots = \mu_k$. When does this fail? Suppose you have $k$ means such that $(k-1)$ are all about the same and the $k$th one is a lot larger than the others. Then you would almost always reject the overall null hypothesis. But if you then apply $(k-1)$ tests at level $\alpha$, the FWE would exceed $\alpha$. Thus we say you can only control the family-wise Type I error weakly with Fisher's LSD. Since in most problems we believe that at least one of the means is different, Fisher's LSD is not very useful for error control.

Strong control: Here we want to ensure that we control the family-wise Type I error under all configurations of the true means. This means that each comparison must be tested at a level less than $\alpha$ (examples: Bonferroni, Tukey, Scheffe). Strong control assures us that for finite families PFE . Generally most researchers want strong control.

The different methods for pairwise comparisons

We are interested in testing whether there is any difference among the pairs of means and, if so, which pairs are different. Consider:

One-way ANOVA model: $y_{ij} = \mu_i + \varepsilon_{ij}$

Interested in: $D = \mu_i - \mu_{i'}$

Estimate: $\hat{D} = \bar{y}_i - \bar{y}_{i'}$

Variance: $\sigma^2(\hat{D}) = \sigma^2\left(\frac{1}{n_i} + \frac{1}{n_{i'}}\right)$, estimated by $s^2(\hat{D}) = MSE\left(\frac{1}{n_i} + \frac{1}{n_{i'}}\right)$

Fisher's LSD: First do the F test for overall significance. Then perform a t-test for each pairwise comparison, using

$$t = \frac{\hat{D} - D}{\sqrt{MSE\left(\frac{1}{n_i} + \frac{1}{n_{i'}}\right)}},$$

doing each test at level $\alpha$ and using the t critical points.

Tukey's HSD: Uses the fact that

$$\frac{\max_i(\bar{y}_i - \mu_i) - \min_i(\bar{y}_i - \mu_i)}{\sqrt{MSE/n}} \overset{d}{=} q(k, n-k),$$

where $q$ represents the studentized range distribution. Here the $n$ in $MSE/n$ is the common per-group sample size, while $n - k$ denotes the error degrees of freedom.
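Tukey used the inequality

$$|\hat{D} - D| \le \max_i(\bar{y}_i - \mu_i) - \min_i(\bar{y}_i - \mu_i),$$

since any pairwise difference is at most the range. So under equal sample sizes Tukey's method boils down to

$$q^* = \frac{\sqrt{2}\,(\hat{D} - D)}{s(\hat{D})} = \frac{\hat{D} - D}{\sqrt{MSE/n}},$$

which is referred to the $q(k, n-k)$ distribution.

Scheffe's Method: Based on the Scheffe projection principle. Meant for all possible contrasts of the form $L = \sum_i c_i \mu_i$, where $\sum_i c_i = 0$. The test statistic is

$$s^* = \frac{\hat{L} - L}{s(\hat{L})},$$

and for the critical point use $S$, where $S^2 = (k-1)\,F(k-1, n-k)$ and $F$ is the level-$\alpha$ critical point of the F distribution.

Bonferroni Method: Based on the Bonferroni inequality. The test statistic is

$$t = \frac{\hat{D} - D}{\sqrt{MSE\left(\frac{1}{n_i} + \frac{1}{n_{i'}}\right)}},$$

and the critical point is $t\left(\alpha / \left(2\binom{k}{2}\right),\ n-k\right)$.

For unequal sample sizes, the three methods other than Tukey's work the same way. For Tukey's, people use the Tukey-Kramer method.

For our example in Data 1:

The SAS System

The GLM Procedure: Least Squares Means

| factor | wage LSMEAN | LSMEAN Number |
|---|---|---|
| A | 5.90000000 | 1 |
| B | 5.50000000 | 2 |
| C | 5.00000000 | 3 |

Least Squares Means for effect factor: Pr > |t| for H0: LSMean(i)=LSMean(j). Dependent Variable: wage

| i/j | 1 | 2 | 3 |
|---|---|---|---|
| 1 | | <.0001 | <.0001 |
| 2 | <.0001 | | <.0001 |
| 3 | <.0001 | <.0001 | |

Note: To ensure overall protection level, only probabilities associated with pre-planned comparisons should be used.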
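The point estimates behind these comparisons follow directly from the LS means reported in the SAS output. As a small sketch (the helper names `pairwise_differences` and `contrast_estimate` are hypothetical, and only the three LS means from the output are used), the code below computes each estimated pairwise difference and the estimate of the contrast that compares group 1 with the average of groups 2 and 3.

```python
from itertools import combinations

# LS means for wage, copied from the SAS output (factor levels A, B, C).
ls_means = {"A": 5.9, "B": 5.5, "C": 5.0}


def pairwise_differences(means):
    """Estimated difference D-hat = mean(i) - mean(i') for every pair of groups."""
    return {(a, b): means[a] - means[b] for a, b in combinations(means, 2)}


def contrast_estimate(means, coefs):
    """Estimate L-hat = sum of c_i * mean_i; the coefficients must sum to 0."""
    if abs(sum(coefs.values())) > 1e-12:
        raise ValueError("contrast coefficients must sum to 0")
    return sum(coefs[g] * means[g] for g in means)


diffs = pairwise_differences(ls_means)
for pair, d in diffs.items():
    print(pair, round(d, 4))

# Contrast from example 3: group A versus the average of groups B and C.
l_hat = contrast_estimate(ls_means, {"A": 1.0, "B": -0.5, "C": -0.5})
print("contrast estimate:", round(l_hat, 4))
```

The sketch covers only the numerators of the test statistics. The standard errors need the MSE and the group sample sizes, which are not shown in this output.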
The GLM Procedure: Least Squares Means

Adjustment for Multiple Comparisons: Bonferroni

| factor | wage LSMEAN | LSMEAN Number |
|---|---|---|
| A | 5.90000000 | 1 |
| B | 5.50000000 | 2 |
| C | 5.00000000 | 3 |

Least Squares Means for effect factor: Pr > |t| for H0: LSMean(i)=LSMean(j). Dependent Variable: wage

| i/j | 1 | 2 | 3 |
|---|---|---|---|
| 1 | | <.0001 | <.0001 |
| 2 | <.0001 | | <.0001 |
| 3 | <.0001 | <.0001 | |

The GLM Procedure: Least Squares Means

Adjustment for Multiple Comparisons: Scheffe

| factor | wage LSMEAN | LSMEAN Number |
|---|---|---|
| A | 5.90000000 | 1 |
| B | 5.50000000 | 2 |
| C | 5.00000000 | 3 |

Least Squares Means for effect factor: Pr > |t| for H0: LSMean(i)=LSMean(j). Dependent Variable: wage

| i/j | 1 | 2 | 3 |
|---|---|---|---|
| 1 | | <.0001 | <.0001 |
| 2 | <.0001 | | <.0001 |
| 3 | <.0001 | <.0001 | |

The GLM Procedure: Least Squares Means

Adjustment for Multiple Comparisons: Tukey

| factor | wage LSMEAN | LSMEAN Number |
|---|---|---|
| A | 5.90000000 | 1 |
| B | 5.50000000 | 2 |
| C | 5.00000000 | 3 |

Least Squares Means for effect factor: Pr > |t| for H0: LSMean(i)=LSMean(j). Dependent Variable: wage

| i/j | 1 | 2 | 3 |
|---|---|---|---|
| 1 | | <.0001 | <.0001 |
| 2 | <.0001 | | <.0001 |
| 3 | <.0001 | <.0001 | |
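For the Bonferroni method described earlier, the critical point depends on the number of pairwise comparisons among the k groups. The following is a minimal sketch of that bookkeeping; the helper name `bonferroni_levels` is hypothetical and is not part of SAS. It lists the pairs and returns the per-test level and the per-tail level used for a two-sided t critical point.

```python
from itertools import combinations


def bonferroni_levels(k, alpha=0.05):
    """List all pairwise comparisons among k groups and the Bonferroni levels.

    Returns (pairs, per_test, per_tail), where per_test = alpha / m and
    per_tail = alpha / (2 * m) for m = k choose 2 comparisons.
    """
    if k < 2:
        raise ValueError("need at least two groups")
    pairs = list(combinations(range(1, k + 1), 2))
    m = len(pairs)                 # m = k choose 2
    per_test = alpha / m           # level for each two-sided test
    per_tail = alpha / (2 * m)     # tail area for the t critical point
    return pairs, per_test, per_tail


pairs, per_test, per_tail = bonferroni_levels(3)
print(pairs)
print(f"per-test level = {per_test:.4f}, per-tail area = {per_tail:.4f}")
```

With k = 3 groups there are three comparisons, so each test is run at about 0.0167 instead of 0.05. Looking up the t critical point itself requires a t distribution table or a statistics library, which this sketch deliberately leaves out.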