Thesis E Mogulkoc

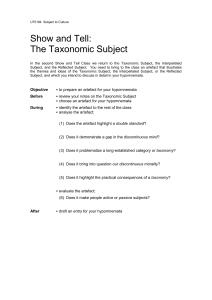
