
The Road to Quantum Theory

Brian Williams

Around the turn of the twentieth century, our view of the universe was changing dramatically. With Einstein’s theories of relativity, people were starting to realize that the universe may not work in the ways that had once been thought. The intuitive, common-sense view of the universe, built on simple logic and centuries-old equations, was falling apart at the seams. Some seemingly simple problems could no longer be solved by classical methods. Take, for example, the problem of calculating the total energy inside an oven. A perfectly insulated oven at a set temperature should contain a finite amount of energy in the form of electromagnetic radiation. However, when physicists used classical methods to calculate that total energy, they came up with a ridiculous answer: infinity!

Clearly a new theory was required, one that would explain how the oven worked. We shall return to this problem shortly. But first, we must understand where the laws of physics at the time had come from.

The ancient Greeks are often the first civilization credited with developing a scientific method by which to examine the world. It was Eratosthenes who first made a somewhat accurate measurement of the circumference of the earth, and Aristotle who first tried to determine what things were made of. He determined that everything on earth was composed of one of four elements, earth, fire, air, and water. He looked at these four elements and grouped them into two categories, those that moved up (air and fire) and those that moved down (water and earth). Aristotle even went so far as to

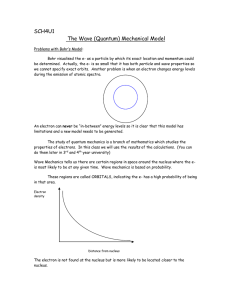

“discover” a fifth element. He looked at the stars in the sky and noticed that they didn’t move up or down, so far as he could tell, so he assumed that the stars must be made out of something else, something he called ether. This crude classification of the elements, while it lasted a long time, didn’t last forever. About 200 years ago, John Dalton formulated his atomic theory, which stated that matter is made up of individual units called atoms, from the Greek word meaning “indivisible.” Furthermore, he proposed that atoms of one element are different from those of another element. At the time, it was thought that atoms were the smallest particles possible, the true “building blocks” of matter. It was later discovered that these atoms are indeed made of smaller parts, with a heavy nucleus at the center, surrounded by very light electrons in orbit. At first it was thought that the atom was similar to a mini solar system, with the electrons in definite orbits around the nucleus. The problem was, it was known in classical mechanics that a moving charged particle emits radiation, and the electrons would soon spiral down into the nucleus, and in the span of much less than a second, you wouldn’t have a stable atom.

Clearly something was wrong with this model.

It is also necessary in physics to know the laws governing the behavior of systems, not merely what they are composed of. Prior to Isaac Newton, a scientist examining an apple falling from a tree might have looked at it, said, “it is in the nature of apples to fall towards the earth,” and left it at that. They certainly would have realized that there was some sort of attractive force between the earth and the apple, but no one had ever been able to describe it quantitatively. Isaac Newton changed all that.

He not only realized that there was an attractive force between the apple and the earth, he determined that it was the same attractive force that held the moon in orbit around the earth, the earth in orbit around the sun, and so on. He was able to write a mathematical expression quantifying this force, called gravity: the attractive force between two objects is proportional to the product of their masses divided by the square of the distance between them. Newton also developed three laws of motion, laws which are still taught in introductory physics courses today. His first law states that a body will continue in its state of motion (or rest) unless a net force acts on it. His second law, relating the acceleration an object experiences to the total force applied to it, is more familiarly known as F=ma. His third law states that for every action, there is an equal and opposite reaction. It is this law that later led to principles such as conservation of momentum. These laws formed the basis of what we now know as classical mechanics.

Using mathematical techniques such as algebra, calculus, and differential equations, we can, in principle, solve the equations of motion for everything from the smallest particle to the largest galaxy. Newton’s laws held firm for over 200 years before Einstein’s discoveries caused us to take a new look at the way the universe works on large scales. But what about small scales? Could Newton’s laws be applied to atoms and to newly discovered sub-atomic particles, such as the electron? Clearly they could not. The example of the electron spiraling into the nucleus shows us that Newton’s laws, while they may work for a real solar system, do not apply to little things.
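Newton’s laws do work at the scale of the earth and moon, and the claim that one force pulls both the apple and the moon can be checked numerically. Combining the law of gravitation with F=ma gives an acceleration a = GM/r^2 toward the earth. The minimal Python sketch below compares that prediction at the moon’s distance with the centripetal acceleration 4π^2 r/T^2 the moon actually needs to stay in its orbit. The constants (G, the earth’s mass and radius, the earth-moon distance, and the moon’s orbital period) are rounded textbook values assumed here; none of them appear in this paper.

```python
import math

# Rounded textbook values (assumptions, not taken from the paper).
G = 6.674e-11           # gravitational constant, N*m^2/kg^2
M_EARTH = 5.972e24      # mass of the earth, kg
R_EARTH = 6.371e6       # mean radius of the earth, m
R_MOON_ORBIT = 3.844e8  # mean earth-moon distance, m
T_MOON = 27.32 * 86400  # moon's orbital period, s


def gravitational_acceleration(mass_kg, distance_m):
    """a = F/m = G*M/r^2: the law of gravitation combined with F = ma."""
    return G * mass_kg / distance_m**2


def centripetal_acceleration(radius_m, period_s):
    """Acceleration needed to keep a body on a circular orbit: 4*pi^2*r/T^2."""
    return 4 * math.pi**2 * radius_m / period_s**2


apple = gravitational_acceleration(M_EARTH, R_EARTH)
moon_predicted = gravitational_acceleration(M_EARTH, R_MOON_ORBIT)
moon_observed = centripetal_acceleration(R_MOON_ORBIT, T_MOON)

print(f"falling apple:        {apple:.2f} m/s^2")
print(f"moon, from gravity:   {moon_predicted:.3e} m/s^2")
print(f"moon, from its orbit: {moon_observed:.3e} m/s^2")
```

The two values for the moon agree to about one percent. That is roughly the agreement one should expect from rounded inputs and from a simple model that treats the orbit as a circle around a fixed earth.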

We shall now return to the oven, which we conveniently left on at a steady temperature. Classical physicists had tried to calculate the energy in the oven and came up with infinities which they could neither explain nor get rid of. Along came Max Planck, who thought of a new way to look at the oven. At the time, it was thought that all electromagnetic waves, regardless of their wavelength, carried on average the same amount of energy. The amplitude of the wave, it was thought, was what determined how much energy it had. Make a bigger wave, i.e. one with a bigger amplitude, and you will have a more energetic wave, in much the same way that a guitar string plucked hard is louder than one plucked softly. What Planck suggested was that the energy a wave could have, or more specifically, the minimum energy a wave could have, was proportional to its frequency, and therefore inversely proportional to its wavelength. Increase the frequency of a wave, Planck argued, and the minimum amount of energy it can have must go up. So how does this solve the infinite energy problem of the oven? Planck suggested that the energies of the oscillators in the oven walls were quantized.

In other words, the energy that could be put into a wave could only come in discrete steps; it could not vary continuously.

Planck argued that if the minimum energy carried by a wave was much larger than the energy available to it at that particular oven temperature, that wave could contribute almost nothing to the total energy. Since only a limited range of waves could carry any appreciable energy, the total energy could only be finite. Planck went on to say that there was a constant factor relating the energy of a wave to its frequency (E=hf), a factor which he called h, now known as Planck’s constant, equal to 6.63 × 10^-34 J·s.
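The difference between the classical calculation and Planck’s can be made concrete. The Python sketch below assumes two standard formulas that this paper does not write out. The first is the classical Rayleigh-Jeans energy density per unit frequency, 8πf^2 kT/c^3. The second is Planck’s formula, (8πhf^3/c^3)/(e^(hf/kT) - 1). The sketch also assumes an oven temperature of 500 K and a rounded Boltzmann constant k. It integrates each formula up to a frequency cutoff and then raises the cutoff. The classical total keeps growing without limit. Planck’s total levels off, because waves whose minimum energy hf is far above kT are suppressed.

```python
import math

H = 6.63e-34     # Planck's constant, J*s (value quoted in the text)
K_B = 1.381e-23  # Boltzmann constant, J/K (assumed rounded value)
C = 2.998e8      # speed of light, m/s


def rayleigh_jeans(f, T):
    """Classical energy density per unit frequency, J*s/m^3."""
    return 8 * math.pi * f**2 * K_B * T / C**3


def planck(f, T):
    """Planck's energy density per unit frequency, J*s/m^3."""
    if f == 0:
        return 0.0  # the formula tends to 0 as f -> 0
    return (8 * math.pi * H * f**3 / C**3) / math.expm1(H * f / (K_B * T))


def total_energy_density(spectrum, T, f_max, n=20_000):
    """Trapezoid-rule integral of spectrum(f, T) from 0 to f_max, in J/m^3."""
    df = f_max / n
    total = 0.5 * (spectrum(0.0, T) + spectrum(f_max, T))
    for i in range(1, n):
        total += spectrum(i * df, T)
    return total * df


T = 500.0  # oven temperature in kelvin (assumed example)
for cutoff in (1e13, 1e14, 1e15):
    classical = total_energy_density(rayleigh_jeans, T, cutoff)
    quantum = total_energy_density(planck, T, cutoff)
    print(f"cutoff {cutoff:.0e} Hz: classical {classical:.3e} J/m^3, "
          f"Planck {quantum:.3e} J/m^3")
```

Each tenfold increase in the cutoff multiplies the classical total by a thousand. Once the cutoff is well above kT/h (about 10^13 Hz at 500 K), Planck’s total barely changes. It settles at a finite value proportional to T^4.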

There was another problem that had been troubling physicists for hundreds of years. What was the nature of light, particle or wave? Newton postulated that light was made up of tiny particles that streamed out from the light source in every direction at the speed of light. Other physicists, like Christiaan Huygens, said that light must be a wave. In the early 1800’s, Thomas Young set out to determine whether light was a particle or a wave. He devised a remarkably simple experiment, which today is known as Young’s double-slit experiment. He took a narrow beam of sunlight coming in from a window, sent it through two closely spaced slits, and set up a screen to see what came out the other side. If light were made of particles, he reasoned, they would go straight through the slits and form two bright bands with no interference. However, if there was an interference pattern, then light must be a wave.

Sure enough, an interference pattern was seen on the screen, and light was a wave. We shall return to this double-slit experiment later; it is quite important. Later in the nineteenth century, Maxwell’s electromagnetic theory reinforced this view by describing light as an electromagnetic wave.

Fortunately, the particle idea of light was not dead forever. About a hundred years after Young’s experiment, Einstein decided to re-open the case of the nature of light.

Physicists had discovered that light, when shone on a metal surface, could eject electrons from it. This was not the puzzling part; everyone knew that waves carried energy, and with energy you should be able to eject electrons. The puzzling part was that the speed of the ejected electrons did not depend on the brightness of the light. If light was a wave and its intensity was turned up, then the total amount of energy reaching the surface of the metal should increase, and therefore the kinetic energy each electron had when it was ejected should increase also.

This was not the case. If the experimenter increased the intensity of the light, more electrons would be ejected, but they would have the same amount of kinetic energy.

Moreover, it was discovered that varying the frequency of the incident light did have a direct effect on the kinetic energy of the electrons. Einstein looked at this and said that it could be reconciled if light was again thought of as a particle, with each particle of light, now called a photon, imparting its energy to a single electron. Turn up the intensity of the light, and you have more photons coming in, but since each still has the same individual energy, the amount of energy each one transfers to an electron will not change. On the other hand, increase the frequency of the light, and you will increase the energy of the individual photons (E=hf), and thus the amount of energy they can transfer to the electrons, kicking them off with greater speed. Einstein would later be awarded the Nobel Prize for this work.
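Einstein’s argument reduces to a simple energy budget. Each photon carries E = hf. The fastest ejected electron leaves with kinetic energy hf - φ, where φ (the work function) is the minimum energy needed to free an electron from the metal. Neither the symbol φ nor any particular metal appears in this paper. The sketch below therefore assumes an illustrative work function of 2.3 eV and, as a simplification, that each photon can free at most one electron. It shows that the electron energy depends only on the frequency of the light. The power of the light changes only how many photons, and so how many electrons, arrive each second.

```python
H = 6.63e-34    # Planck's constant, J*s
EV = 1.602e-19  # joules per electron-volt

WORK_FUNCTION_EV = 2.3  # assumed illustrative work function, eV


def photon_energy_ev(frequency_hz):
    """E = h*f, converted to electron-volts."""
    return H * frequency_hz / EV


def max_kinetic_energy_ev(frequency_hz, work_function_ev=WORK_FUNCTION_EV):
    """K_max = h*f - phi; 0.0 means the photon cannot eject an electron."""
    return max(0.0, photon_energy_ev(frequency_hz) - work_function_ev)


def photons_per_second(power_w, frequency_hz):
    """Brighter light at the same frequency means more photons, not bigger ones."""
    return power_w / (H * frequency_hz)


for power in (1e-3, 2e-3):       # doubling the intensity...
    for freq in (5e14, 1e15):    # ...versus raising the frequency
        print(f"P={power:.0e} W, f={freq:.0e} Hz: "
              f"{photons_per_second(power, freq):.2e} photons/s, "
              f"K_max={max_kinetic_energy_ev(freq):.2f} eV")
```

Doubling the power doubles the photon count but leaves K_max unchanged. Raising the frequency from 5 × 10^14 Hz (below this assumed threshold) to 10^15 Hz is what produces fast electrons.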

Unfortunately, this leaves us right back where we started, wondering if light is a particle or a wave.

Let us now return to the double-slit experiment discussed earlier. This experiment has far-reaching consequences. Richard Feynman, one of quantum mechanics’ greatest practitioners, has been quoted as saying that the double-slit experiment contains the heart of quantum mechanics. We set up a light source that we can adjust to our specifications, and a detector screen some distance away from the light source, which will leave a dot wherever light from the source hits it. In between we put a barrier with two slits cut into it, which we can open and close as we please. We start out by turning on the light source and opening the left slit only. As one would expect, light shines through the slit and onto the detector screen directly behind the slit, but nowhere else, since the barrier blocks the light everywhere else. The same thing happens with the right slit. Now we open both slits at the same time. Now we see an interference pattern, leading us to believe that light is a wave that interferes with itself, much the same way waves of water in a tub can interfere with each other, causing constructive interference in some places (bright spots) and destructive interference in others (dark spots). But Einstein has told us that, because of the photoelectric effect, light can also be thought of as a particle. So how can this interference pattern be reconciled with the idea that light is a particle? This is easy if we think of light as a stream of particles that together act like a wave, with some photons canceling each other out and some photons building on each other.

Unfortunately, this doesn’t work. Let’s say we slow down the source to emit only one photon at a time, say one every ten seconds. With only the left slit open, we observe the same pattern as before, with the light detected only directly behind the slit. The same is true for the right slit. Now we open both slits, with only one photon in the system at a time. Amazingly, as the dots accumulate, we observe the same interference pattern we saw before, with bright spots in some places and dark spots in others. It would seem as though each photon somehow knows that both slits are open, even though the photons cannot communicate with each other, since only one is in the system at any given time. In addition, some individual photons are detected at points on the screen directly behind the solid part of the barrier, places no photon reached when only one slit was open. It is as if the mere fact that the other slit is open can affect the path taken by a photon to get to the screen. Obviously the photons that make up light are very different from any particles of matter that we can observe, or are they?
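One way to see why opening the second slit matters, even for a single photon, is the rule quantum mechanics uses for combining possibilities: add the amplitudes for each path first, then square. The Python sketch below uses a simple idealized model that is not in the paper. Each slit contributes a unit-magnitude complex amplitude whose phase is set by the path length to a point on the screen. The width of each slit is ignored. The wavelength (500 nm), slit separation (50 µm), and screen distance (1 m) are assumed values. The sketch compares |a1 + a2|^2 (both slits open, amplitudes added) with |a1|^2 + |a2|^2 (the one-slit results simply added together).

```python
import cmath
import math

WAVELENGTH = 500e-9      # m, assumed
SLIT_SEPARATION = 50e-6  # m, assumed
SCREEN_DISTANCE = 1.0    # m, assumed


def amplitude(slit_y, screen_y):
    """Idealized amplitude for the path from one slit to a point on the screen:
    unit magnitude, with phase 2*pi*(path length)/wavelength."""
    path = math.hypot(SCREEN_DISTANCE, screen_y - slit_y)
    return cmath.exp(2j * math.pi * path / WAVELENGTH)


def detection_weights(screen_y):
    """Return (both slits open, amplitudes added) and (one-slit results added)."""
    a_left = amplitude(-SLIT_SEPARATION / 2, screen_y)
    a_right = amplitude(+SLIT_SEPARATION / 2, screen_y)
    both_open = abs(a_left + a_right) ** 2
    no_interference = abs(a_left) ** 2 + abs(a_right) ** 2
    return both_open, no_interference


for y_mm in (0.0, 2.5, 5.0, 7.5, 10.0):
    both, summed = detection_weights(y_mm / 1000)
    print(f"y = {y_mm:4.1f} mm: both slits {both:.2f}, "
          f"slits added separately {summed:.2f}")
```

The both-slits column swings between about 4 at the bright fringes (0 and 10 mm) and about 0 at the dark fringe (5 mm). The fringe spacing is wavelength × distance / separation = 10 mm. The separately-added column stays at 2 everywhere. Read as relative probabilities for where a single photon lands, the first column is the pattern that builds up dot by dot.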

In 1923, a Frenchman named Louis de Broglie made a prediction about the way matter should behave. In short, he reasoned that if Einstein’s famous E=mc^2 equation relates energy and mass, and Planck’s formula relates the energy of a wave to its frequency (and therefore its wavelength), then matter should have a wave-like property as well. He developed a simple equation which stated that the wavelength of matter was equal to Planck’s constant divided by the momentum of the body (wavelength = h/p). For large objects, this is inconsequential: a person, for instance, would have a “de Broglie wavelength” on the order of 10^-36 m, far too small to measure. A sub-atomic particle, on the other hand, with its extremely small momentum, could have a noticeable wavelength.

If electrons, for instance, have a wavelength, then they too should form an interference pattern when subjected to a double-slit experiment. In the mid-1920’s, Clinton Davisson and Lester Germer at Bell Laboratories were studying how a stream of electrons interacted with a piece of nickel. It turns out that the regularly spaced atoms in the nickel crystal act very much like the slits in the previous experiment, except that now we are dealing with electrons, which we know are particles. The results of the experiment are the same: an interference pattern is detected from the electrons as well. In fact, when the wavelength of the electrons is calculated from this interference pattern, it agrees with the wavelength from de Broglie’s hypothesis. This confirmation helped earn de Broglie the Nobel Prize in 1929.
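De Broglie’s relation, wavelength = h/p, is easy to evaluate. The Python sketch below makes two illustrative assumptions. The first case is a 70 kg person running at 10 m/s. The second is a beam of 54 eV electrons, the energy usually quoted for the Bell Laboratories experiment (treat that figure as an assumption here). For the electron it uses the non-relativistic momentum p = √(2mK).

```python
import math

H = 6.63e-34            # Planck's constant, J*s
M_ELECTRON = 9.109e-31  # electron mass, kg
EV = 1.602e-19          # joules per electron-volt


def de_broglie_wavelength(mass_kg, speed_m_s):
    """wavelength = h / p, with momentum p = m * v (non-relativistic)."""
    return H / (mass_kg * speed_m_s)


def electron_wavelength(kinetic_energy_ev):
    """wavelength = h / sqrt(2 m K) for a slow (non-relativistic) electron."""
    momentum = math.sqrt(2 * M_ELECTRON * kinetic_energy_ev * EV)
    return H / momentum


person = de_broglie_wavelength(70.0, 10.0)  # assumed 70 kg person at 10 m/s
electron = electron_wavelength(54.0)        # assumed 54 eV electron beam
print(f"person:   {person:.1e} m")
print(f"electron: {electron:.2e} m")
```

The person’s wavelength comes out near 10^-36 m, as the text says. The electron’s is about 1.7 × 10^-10 m, comparable to the spacing between atoms in a crystal. That is why a nickel crystal can act like a set of slits for electrons.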

Apparently, both matter and light can be thought of as being both waves and particles at the same time. Depending on the experimental setup, you can see them behaving as waves or as particles. This is not due to a flaw in the experiments; it is simply the way nature works. It is something we call wave-particle duality, and it’s something physicists have come to accept about the universe.

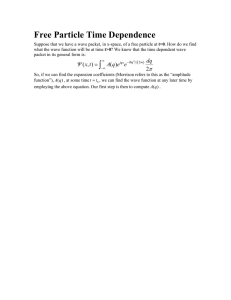

Now that we have all these wonderful ideas, energy is emitted only in packets, matter can be thought of as being a wave, etc., we wish to have a mathematical interpretation for all of this. After all, no matter how good they may sound, ideas are fairly useless without mathematical support. In the 1920’s, two physicists, working independently, came up with two very different mathematical methods to describe sub-atomic systems. Werner Heisenberg developed matrix mechanics, and Erwin

Schrodinger developed wave mechanics. In Schrodinger’s method, he developed what is now known as the Schrodinger equation. It took several ideas which were known at the

time, including both de Broglie’s and Planck’s hypotheses, and formulated them into an equation that also included the wave function,

. The Schrodinger equation, when applied to the hydrogen atom, yielded the same results that physicists such as Bohr had obtained in other ways. However, there was still the question of what the wave function,

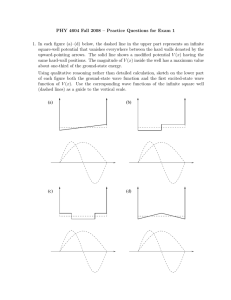

, actually meant. Schrodinger referred to it as a “smeared-out” electron, but this holds very little physical meaning. What exactly does a smeared-out electron look like? A pancake? It was Max Born who provided us with a more accurate description of what the wave function describes. Born said that the wave function describes the particle from a probability standpoint. Rather than tell you where the particle is, it only tells you where the particle is most likely to be. The square of the magnitude of the wave function gives the probability density for a particle over a particular region. If the wave function is normalized, then the square of the magnitude over a particular interval will give you the percent chance of finding the particle in that region. This presented a problem to many physicists of the time, who believed that an equation should be able to give you an exact answer for where the particle is at a given time, assuming you know the initial conditions and the outside forces. In fact, this is the biggest break between classical mechanics and quantum mechanics. In classical mechanics, if one knows the initial conditions of a particle, and all of the forces acting on the particle, then in principle you can determine the location of the particle at any time in the future. In quantum mechanics, however, you can never know exactly where the particle will be in the future, in fact, you can never know exactly where it is now, a problem that we shall revisit shortly. Apparently, the quantum world is nothing more than a game a chance, a roll of the dice, the particle might be here, it might be there, it might be anywhere. This greatly bothered many physicists of that era. As Einstein said, “God does not play dice with the universe.” It would seem though, that not only does God play dice, they are loaded dice, with the probabilities for finding the particle being higher in some places than in others.
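Born’s rule can be tried out numerically. For a wave function Ψ(x), the probability of finding the particle between a and b is the integral of |Ψ(x)|^2 from a to b. Ψ must first be normalized so that the integral over all x equals 1. The Python sketch below uses a hypothetical Gaussian wave packet of width 1. It includes a complex phase factor to show that only the magnitude of Ψ matters. The sketch normalizes the packet with a simple trapezoid rule and then computes the chance of finding the particle within one width of the center.

```python
import cmath
import math

SIGMA = 1.0         # width of the hypothetical wave packet (arbitrary units)
K0 = 5.0            # hypothetical wave number; it only changes the phase of psi
LIMIT = 10 * SIGMA  # the packet is negligible beyond ten widths from the center


def psi_unnormalized(x):
    """Hypothetical Gaussian wave packet: a real envelope times a complex phase."""
    return math.exp(-x**2 / (4 * SIGMA**2)) * cmath.exp(1j * K0 * x)


def integrate(f, a, b, n=10_000):
    """Trapezoid-rule integral of a real-valued function f from a to b."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * h)
    return total * h


# Normalize: choose NORM so that the integral of |NORM*psi|^2 over
# [-LIMIT, LIMIT] (standing in for all x) is 1.
NORM = 1 / math.sqrt(integrate(lambda x: abs(psi_unnormalized(x)) ** 2, -LIMIT, LIMIT))


def probability(a, b):
    """Born rule: P(a < x < b) = integral of |psi(x)|^2 from a to b."""
    return integrate(lambda x: abs(NORM * psi_unnormalized(x)) ** 2, a, b)


print(f"P(-1 < x < 1)   = {probability(-1, 1):.4f}")
print(f"P(-10 < x < 10) = {probability(-10, 10):.4f}")
```

The first probability comes out near 0.683, the fraction of a Gaussian lying within one standard deviation of its center. The second is 1, as normalization requires. Changing K0 alters the phase of Ψ but not these probabilities.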

We are still left with the problem of having two different mathematical constructs, matrix mechanics and wave mechanics, which both give correct results. This problem was solved in 1926 by Paul Dirac, who showed that the two were, in fact, equivalent. In doing so, he also developed a general formalism for quantum mechanics based on linear algebra, a formalism that we still use today. In 1928, Dirac developed a relativistic quantum theory of the electron, which incorporated Schrodinger’s ideas but also explained something that Schrodinger’s equation did not account for: electron spin, which had been discovered only a few years earlier. Among Dirac’s other contributions was the prediction of antimatter, in particular of the anti-electron, or positron, which was discovered experimentally in 1932.

I said before that in quantum mechanics it is impossible to know the initial conditions of a system exactly. This is due to the Heisenberg Uncertainty Principle, which states that you cannot simultaneously know both the position and the momentum of a particle with arbitrary precision. The usual intuitive explanation is that a measurement of one variable disturbs the other. For example, let’s say you wanted to know the position of an electron. The way we determine the position of things is to look at them, which means that we must shine light on them. But because an electron is so small, even a single photon striking it will give it a kick. Once you kick it, it isn’t in the same place anymore, and it certainly isn’t moving with the same velocity it was before. This crude example serves our purpose well, because if you hit the electron with a shorter wavelength photon to get a better determination of its position, you will give it a harder kick, since shorter wavelength photons carry more momentum. The more precisely you know one variable, the less precisely you can know the other. In fact, this doesn’t just apply to position and momentum. It applies to any pair of non-commuting quantities, and an analogous relation holds for energy and time.
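In symbols, the position-momentum form of the principle is usually written Δx Δp ≥ ħ/2, where ħ = h/2π. The Python sketch below computes the smallest velocity uncertainty this allows in two illustrative scenarios, both assumptions chosen for this example. One is an electron confined to a region about the size of an atom, 10^-10 m. The other is a 0.15 kg ball located to within a micrometre.

```python
import math

H = 6.63e-34            # Planck's constant, J*s
HBAR = H / (2 * math.pi)
M_ELECTRON = 9.109e-31  # electron mass, kg


def min_velocity_uncertainty(mass_kg, position_uncertainty_m):
    """Smallest Delta v allowed by Delta x * Delta p >= hbar / 2, with p = m * v."""
    min_momentum_uncertainty = HBAR / (2 * position_uncertainty_m)
    return min_momentum_uncertainty / mass_kg


electron = min_velocity_uncertainty(M_ELECTRON, 1e-10)  # assumed: atom-sized region
ball = min_velocity_uncertainty(0.15, 1e-6)             # assumed: 0.15 kg ball, 1 micrometre
print(f"electron: at least {electron:.1e} m/s")
print(f"ball:     at least {ball:.1e} m/s")
```

The electron’s minimum velocity spread is hundreds of kilometres per second. The ball’s is around 10^-28 m/s, far too small ever to notice, which is why the principle only matters for very small things.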

Now that we have a mathematical description of quantum mechanics, let’s look at a simple problem, the particle in a box: a particle trapped between two impenetrable walls. Given the wave function of the particle, we can determine the places where it is most likely to be found. If we wish to check our results, we can simply look inside the box to see where the particle is. If we do this enough times and graph our results, we should get a curve that looks like the probability density from the wave function. But let’s say we wanted to know where the particle was the instant before we opened the box and looked. We already know that there is no way to solve for this mathematically, since the wave function only gives us statistical probabilities, but there are several interpretations as to where the particle might have been just before we looked.
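The procedure of looking in the box many times and graphing the results can be simulated. The Python sketch below assumes the standard ground state of a particle in a box of width L, Ψ1(x) = √(2/L) sin(πx/L) for 0 < x < L. That formula is not given in this paper. The sketch draws each simulated measurement from |Ψ1|^2 by rejection sampling. It then compares the fraction of results landing in each tenth of the box with the fraction the wave function predicts. The random seed and sample count are arbitrary choices.

```python
import math
import random

L = 1.0    # width of the box (arbitrary units)
BINS = 10  # divide the box into tenths for the histogram


def density(x):
    """|psi_1(x)|^2 for the ground state of a particle in a box of width L."""
    return (2 / L) * math.sin(math.pi * x / L) ** 2


def open_box(rng):
    """One simulated measurement: rejection-sample a position from |psi_1|^2."""
    peak = 2 / L  # largest value the density takes
    while True:
        x = rng.uniform(0, L)
        if rng.uniform(0, peak) < density(x):
            return x


def predicted_fraction(a, b):
    """Exact integral of |psi_1|^2 from a to b (the Born-rule probability)."""
    return (b - a) / L - (math.sin(2 * math.pi * b / L)
                          - math.sin(2 * math.pi * a / L)) / (2 * math.pi)


rng = random.Random(0)
samples = [open_box(rng) for _ in range(50_000)]
counts = [0] * BINS
for x in samples:
    counts[min(int(x / L * BINS), BINS - 1)] += 1

for i, count in enumerate(counts):
    a, b = i * L / BINS, (i + 1) * L / BINS
    print(f"{a:.1f}-{b:.1f}: observed {count / len(samples):.3f}, "
          f"predicted {predicted_fraction(a, b):.3f}")
```

The observed fractions cluster around the predicted ones. The particle turns up most often near the middle of the box and rarely near the walls. The simulation says nothing about where the particle was before each look.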

1. Realist- The particle was there all along. If we opened the box and found it at position X, then it is logical to assume that it was at X before we looked. This was the position that Einstein, among others, held firmly to. However, if the realist position was correct, then quantum mechanics was incomplete, since it could not tell us that the particle had been at X. For this reason, hidden variable theories were proposed, with a hidden variable being something besides the wave function that would completely describe the particle.

2. Orthodox- The particle wasn’t really anywhere. This position was held by Niels Bohr, among others. It says that before you opened the box, the particle was not in a well-defined position. It was everywhere and it was nowhere at the same time. Perhaps this is what Schrodinger meant when he talked about a smeared-out electron. This position, as strange as it may sound, has been the most widely held position among physicists. Why the particle “chose” position X when you opened the box is a question that I will not even attempt to discuss here; physicists are still working on it today. Apparently there is something about the act of making a measurement that causes the wave function to “collapse” into a certain position.

3. Agnostic- Who cares? In a nutshell, this was the position taken by many physicists when the question arose. We can never know, because the question is metaphysical: you can never answer a question about “before the measurement,” because to check yourself you would have to perform a measurement, and then you would be back in the same situation you started in. Wolfgang Pauli advocated this position. He and other agnostics considered it silly to rack one’s brain over a question such as this.

So which is correct? Theoretical work by John Bell in the 1960’s, and the experiments it inspired, showed that local hidden variable theories of the kind the realists hoped for cannot reproduce the predictions of quantum mechanics, and that, apparently, the orthodox position is the correct one. The particle was not anywhere until you decided to look at it; the act of measurement collapsed the wave function at a certain place. How and why the wave function collapsed at X, and not at some other position, is a question that I am in no position to answer; indeed, it may very well be a question to which we will never know the answer.

Let’s examine one more paradox that was posed in the early years of quantum theory, that of Schrodinger’s Cat. The following is taken from an essay Schrodinger wrote in 1935. “A cat is placed in a steel chamber, together with the following hellish contraption...In a Geiger counter there is a tiny amount of radioactive substance, so tiny that maybe within an hour one of the atoms decays, but equally probably none of them decays. If one decays then the counter triggers and via a relay activates a little hammer which breaks a container of cyanide. If one has left this entire system for over an hour, then one would say the cat is living if no atom is decayed. The first decay would have poisoned it. The wave function of the entire system would express this by containing equal parts of the living and dead cat.”

In other words, until you looked in the box, the cat would not be in a definite state of either living or dead; it would be in a “smeared-out” state of both dead and alive, and only by opening the box would the cat be forced to take a stand on which state it was in. If this seems like nonsense, it is. Schrodinger knew this; in fact, he posed the question as a deliberately ridiculous example to show how silly he thought the orthodox position was. Obviously the cat is either alive or dead; there is no such thing as a quasi-alive state for cats (or any other organism, for that matter). It appears as though the Geiger counter in the box acts as the measuring device, causing the wave function of the system to collapse. The cat example does serve one important purpose, however: it shows us that quantum mechanical principles, as we have described them, do not apply on large scales.

This presents something of a problem. In the last 100 years, we have developed two wonderful theories that explain their respective areas very well. Quantum mechanics works quite well on small scales but does not apply to large scales, whereas General Relativity works wonderfully on large scales, such as the universe itself, but cannot explain the strange world of sub-atomic particles. The search is on for a grand theory that will unite these two theories into one mathematical and theoretical description that will work on both scales. This unified theory, if it exists, is the holy grail of modern physics.

Bibliography

Greene, Brian. The Elegant Universe. New York: Vintage Books, 1999.

Griffiths, David. Introduction to Quantum Mechanics. Upper Saddle River, NJ: Prentice Hall, 1995.

Ferris, Timothy. Coming of Age in the Milky Way. New York: Anchor Books, 1988.

Oxford Dictionary of Scientists. Ed. John Daintith and Derek Gjertsen. Oxford University Press, 1999.

Schrodinger, Erwin. Naturwiss. 48, 52 (1935).