FaitCrowd: Fine Grained Truth Discovery for Crowdsourced Data Aggregation Fenglong Ma


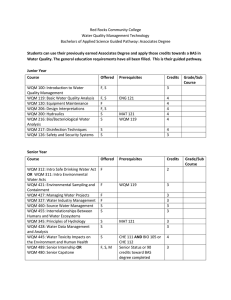

Fenglong Ma¹, Yaliang Li¹, Qi Li¹, Minghui Qiu², Jing Gao¹, Shi Zhi³, Lu Su¹, Bo Zhao⁴, Heng Ji⁵, Jiawei Han³
Presenter: Jing Gao
¹SUNY Buffalo; ²Singapore Management University; ³University of Illinois Urbana-Champaign; ⁴LinkedIn; ⁵Rensselaer Polytechnic Institute

Which of these square numbers also happens to be the sum of two smaller numbers?
A: 16 (50%)   B: 25 (30%)   C: 36 (19%)   D: 49 (1%)
https://www.youtube.com/watch?v=BbX44YSsQ2I

A Straightforward Aggregation Method
• Voting/Averaging
  – Take the value that is claimed by the majority of the sources (users)
  – Or compute the mean of all the claims
• Limitation
  – Ignores source reliability (user expertise)
• Source reliability
  – Is crucial for finding the true fact, but is unknown

[Figure: claims about an object from Source 1 through Source 5 are combined by aggregation]

Truth Discovery
• Principle
  – To learn users’ reliability degree and discover trustworthy information (i.e., the truths) from conflicting data provided by various users on the same object.
    • A user is reliable if they provide many pieces of true information
    • A piece of information is likely to be true if it is provided by many reliable users

Existing Work on Truth Discovery
• Existing methods
  – Assign a single expertise (reliability degree) to each user (source).
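The voting baseline and the truth discovery principle above can be sketched as follows. This is a minimal illustration under stated assumptions, not any specific published algorithm: the function names, the log-odds weight update, the smoothing constant, and the small answer table are all choices made for the example.

```python
import math
from collections import defaultdict

# Toy answer table (assumption for illustration): answers[question][user] = claimed value.
answers = {
    "q1": {"u1": 1, "u2": 2, "u3": 1},
    "q2": {"u1": 2, "u2": 1, "u3": 2},
    "q3": {"u1": 1, "u2": 2, "u3": 2},
    "q4": {"u1": 1, "u2": 2, "u3": 2},
}


def weighted_vote(answers, weights):
    """Pick, per question, the value with the largest total user weight."""
    truths = {}
    for question, claims in answers.items():
        scores = defaultdict(float)
        for user, value in claims.items():
            scores[value] += weights[user]
        # Ties are broken by the smaller value so the result is deterministic.
        truths[question] = min(scores, key=lambda v: (-scores[v], v))
    return truths


def majority_vote(answers):
    """Plain voting: every user counts equally."""
    users = {u for claims in answers.values() for u in claims}
    return weighted_vote(answers, {u: 1.0 for u in users})


def simple_truth_discovery(answers, iterations=10, smoothing=0.5):
    """Alternate between estimating truths and one reliability weight per user.

    The weight is the log-odds of the user's smoothed agreement with the
    current truths, clamped at zero (an assumed update rule for this sketch).
    """
    users = sorted({u for claims in answers.values() for u in claims})
    weights = {u: 1.0 for u in users}
    truths = weighted_vote(answers, weights)
    for _ in range(iterations):
        for user in users:
            judged = [(q, c[user]) for q, c in answers.items() if user in c]
            correct = sum(1 for q, v in judged if truths[q] == v)
            accuracy = (correct + smoothing) / (len(judged) + 2 * smoothing)
            weights[user] = max(math.log(accuracy / (1 - accuracy)), 0.0)
        new_truths = weighted_vote(answers, weights)
        if new_truths == truths:
            break  # truths stopped changing
        truths = new_truths
    return truths, weights


print(majority_vote(answers))
print(simple_truth_discovery(answers))
```

On this toy table both functions return the same answers, because one user agrees with the majority on every question and ends up with the only non-zero weight. A single weight per user cannot express that a user may be reliable on some questions and unreliable on others.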
[Figure: example users Barack Obama, Albert Einstein, and Michael Jackson]

Example: Existing Truth Discovery Methods
• Input
  – User Set
  – Answer Set
  – Question Set

| Question | Answers (columns u1, u2, u3) |
|---|---|
| q1 | 1, 2, 1 |
| q2 | 2, 1, 2 |
| q3 | 1, 2, 2 |
| q4 | 1, 2, 2 |
| q5 | 2, 1 (one cell empty; column positions not recoverable) |
| q6 | 1, 2 (one cell empty; column positions not recoverable) |

• Output
  – Users’ Expertise

| User | u1 | u2 | u3 |
|---|---|---|---|
| Expertise | 5.00E-11 | 0.961 | 3.989 |

  – Truths

| Question | q1 | q2 | q3 | q4 | q5 | q6 |
|---|---|---|---|---|---|---|
| Truth | 1 | 2 | 2 | 2 | 1 | 2 |
| Ground Truth | 1 | 2 | 1 | 2 | 1 | 2 |

Overview of Our Work
• Goal
  – To learn fine-grained (topical-level) user expertise and the truths from conflicting crowd-contributed answers.

[Figure: example topics Politics, Physics, and Music]

Example: Our Model
• Input
  – User Set
  – Answer Set
  – Question Content
  – Question Set

| Question | Answers (columns u1, u2, u3) | Words |
|---|---|---|
| q1 | 1, 2, 1 | a, b |
| q2 | 2, 1, 2 | b, c |
| q3 | 1, 2, 2 | a, c |
| q4 | 1, 2, 2 | d, e |
| q5 | 2, 1 (one cell empty; column positions not recoverable) | e, f |
| q6 | 1, 2 (one cell empty; column positions not recoverable) | d, f |

• Output
  – Questions’ Topic

| Topic | Questions |
|---|---|
| K1 | q1, q2, q3 |
| K2 | q4, q5, q6 |

  – Topical-Level Users’ Expertise

| Topic | u1 | u2 | u3 |
|---|---|---|---|
| K1 | 2.34 | 2.70E-4 | 1.00 |
| K2 | 1.30E-4 | 2.34 | 2.35 |

  – Truths

| Question | q1 | q2 | q3 | q4 | q5 | q6 |
|---|---|---|---|---|---|---|
| Truth | 1 | 2 | 1 | 2 | 1 | 2 |
| Ground Truth | 1 | 2 | 1 | 2 | 1 | 2 |

FaitCrowd Model
• Overview

[Figure: plate diagram of the FaitCrowd model with two parts, Modeling Content and Modeling Answers; variables include y_qm, w_qm, z_q, a_qu, b_q, t_q, and e; legend: Input, Output, Hyperparameter, Intermediate Variable]

  – Jointly modeling question content and users’ answers by introducing latent topics.
  – Modeling question content can help estimate reasonable user reliability, and in turn, modeling answers leads to the discovery of meaningful topics.
  – Learning topic-level user expertise, truths and topics simultaneously.

Modeling Question Content
• Word Generation
  – Assume that each question is about a single topic (the length of each question is short).
    • Draw a topic indicator
  – Assume that a word can be drawn from topical word distribution or background word distribution.
    • Draw a word category
    • Draw a word

Modeling Answers
• Answer Generation
  – The correctness of a user’s answer may be affected by the question’s topic, user’s expertise on the topic and the question’s bias.
    • Draw user’s expertise
    • Draw the truth
    • Draw the bias
    • Draw a user’s answer

Inference Method
• Gibbs-EM
  – Gibbs sampling to learn the hidden variables
  – Gradient descent to learn hidden factors

Datasets & Measure
• Datasets
  – The Game Dataset
    • Collected from a crowdsourcing platform via an Android App based on a TV game show “Who Wants to Be a Millionaire”.
    • 2,103 questions, 37,029 sources, 214,849 answers and 12,995 words
  – The SFV Dataset
    • Extracted from the Slot Filling Validation (SFV) task of the NIST Text Analysis Conference Knowledge Base Population (TAC-KBP) track.
    • 328 questions, 18 sources, 2,538 answers and 5,587 words
• Measure
  – Error Rate
    • The lower the better

Baseline Methods
• Basic Method
  – MV
• Truth Discovery
  – Truth Finder
  – AccuPr
  – Investment
  – 3-Estimates
  – CRH
  – CATD
• Crowdsourcing
  – D&S
  – ZenCrowd
• Variations of FaitCrowd
  – FaitCrowd-b
  – FaitCrowd-b-g

Performance Validation
Table 1: Performance on the Game Dataset.
• Analysis
  – For easy questions (from Level 1 to Level 7), all the methods can estimate most answers correctly.
  – For difficult questions (from Level 8 to Level 10), the performance of FaitCrowd is much better than that of the baseline methods.
  – FaitCrowd performs well on both the Game and SFV datasets.
Table 2: Performance on the SFV Dataset.

Model Validation
• Goal
  – Illustrate the importance of jointly modeling question content and answers by comparing with the method that conducts topic modeling and true answer inference separately.
• Explanation
  – Dividing the whole dataset into sub-topical datasets will reduce the number of responses per topic, which leads to insufficient data for baseline approaches.
Table 3: Results of Model Validation.

Topical Expertise Validation
• Goal
  – Validate the correctness of topical expertise learned by FaitCrowd.
  – Ideally, the expertise estimated by the proposed method is consistent with the ground truth accuracy.
Figure 1: Topic 2 on the Game Dataset.
Figure 2: Topic 4 on the SFV Dataset.

Expertise Diversity Analysis
• Goal
  – Demonstrate that the topical expertise for each source varies on different topics.
  – Ideally, the topical expertise should correspond to the ground truth accuracy, i.e., the higher the expertise, the higher the ground truth accuracy.
Figure 3: Source 7 on the Game Dataset.
Figure 4: Source 16 on the SFV Dataset.
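The error-rate measure and the per-topic ground truth accuracy used in the validation slides above can be computed as in the sketch below. The function names are hypothetical. The truth rows and topic assignment come from the deck's six-question example. The answer table is restricted to q1 through q4, an assumption made because the q5 and q6 rows are incomplete in this transcript.

```python
def error_rate(estimated, ground_truth):
    """Fraction of questions whose estimated truth differs from the ground truth."""
    if not ground_truth:
        raise ValueError("ground_truth must not be empty")
    wrong = sum(1 for q, truth in ground_truth.items() if estimated.get(q) != truth)
    return wrong / len(ground_truth)


def accuracy_by_topic(answers, ground_truth, topic_of):
    """Per-source, per-topic share of answers that match the ground truth."""
    counts = {}  # (source, topic) -> [correct, total]
    for question, claims in answers.items():
        for source, value in claims.items():
            cell = counts.setdefault((source, topic_of[question]), [0, 0])
            cell[0] += int(value == ground_truth[question])
            cell[1] += 1
    return {key: correct / total for key, (correct, total) in counts.items()}


# Truth rows from the deck's example.
ground_truth = {"q1": 1, "q2": 2, "q3": 1, "q4": 2, "q5": 1, "q6": 2}
single_expertise_truths = {"q1": 1, "q2": 2, "q3": 2, "q4": 2, "q5": 1, "q6": 2}
topical_truths = {"q1": 1, "q2": 2, "q3": 1, "q4": 2, "q5": 1, "q6": 2}

# Answers for the fully recoverable rows only (assumption: q5 and q6 omitted).
answers = {
    "q1": {"u1": 1, "u2": 2, "u3": 1},
    "q2": {"u1": 2, "u2": 1, "u3": 2},
    "q3": {"u1": 1, "u2": 2, "u3": 2},
    "q4": {"u1": 1, "u2": 2, "u3": 2},
}
topic_of = {"q1": "K1", "q2": "K1", "q3": "K1", "q4": "K2"}

print(error_rate(single_expertise_truths, ground_truth))  # one of six questions differs
print(error_rate(topical_truths, ground_truth))           # no question differs
print(accuracy_by_topic(answers, ground_truth, topic_of))
```

In this small example u1 is accurate on topic K1 and inaccurate on K2, while u2 shows the opposite pattern. This is the kind of variation across topics that the expertise diversity analysis looks for.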
Summary
• Problem
  – Recognize the difference in source reliability among topics on the truth discovery task and propose to incorporate the estimation of fine grained reliability into truth discovery.
• Solution
  – Propose a probabilistic model that simultaneously learns the topic-specific expertise for each source, aggregates true answers, and assigns topic labels to questions.
• Results
  – Empirically show that the proposed model outperforms existing methods in multi-source aggregation with two real world datasets.
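To see why topic-specific expertise matters, the sketch below reuses the expertise values from the deck's small example and aggregates answers by expertise-weighted voting. Weighted voting is a simplification assumed here for illustration; it is not the model's actual inference, which the deck describes as Gibbs-EM. The function name `aggregate` is hypothetical, and only q1 through q4 are used because the q5 and q6 rows are incomplete in this transcript.

```python
from collections import defaultdict

# Answers for the fully recoverable rows of the example table.
answers = {
    "q1": {"u1": 1, "u2": 2, "u3": 1},
    "q2": {"u1": 2, "u2": 1, "u3": 2},
    "q3": {"u1": 1, "u2": 2, "u3": 2},
    "q4": {"u1": 1, "u2": 2, "u3": 2},
}
topic_of = {"q1": "K1", "q2": "K1", "q3": "K1", "q4": "K2"}

# Expertise values as reported in the deck's example output tables.
single_expertise = {"u1": 5.00e-11, "u2": 0.961, "u3": 3.989}
topical_expertise = {
    "K1": {"u1": 2.34, "u2": 2.70e-4, "u3": 1.00},
    "K2": {"u1": 1.30e-4, "u2": 2.34, "u3": 2.35},
}


def aggregate(answers, weight_for):
    """Expertise-weighted vote; weight_for(question, user) returns a weight."""
    truths = {}
    for question, claims in answers.items():
        scores = defaultdict(float)
        for user, value in claims.items():
            scores[value] += weight_for(question, user)
        truths[question] = max(scores, key=scores.get)
    return truths


# One weight per user, regardless of the question's topic.
single = aggregate(answers, lambda q, u: single_expertise[u])
# One weight per (topic, user) pair, looked up through the question's topic.
topical = aggregate(answers, lambda q, u: topical_expertise[topic_of[q]][u])

print(single)
print(topical)
```

With a single weight per user, q3 follows u3 and comes out as 2. With topical weights, u1's high expertise on K1 outweighs the other two users and q3 comes out as 1, which matches the ground truth in the example.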