12659528_Copyright Collectives and Contracts.pptx (206.6Kb)

advertisement

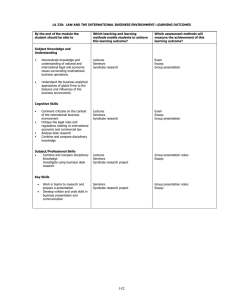

Copyright Collectives and Contracts: An Economic Theory Perspective
Richard Watt (University of Canterbury and SERCI)

Efficiency of collective management of copyright

The standard theory of why collective management of copyright is efficient is based on transaction costs. Collectives are also normally assumed to undertake three roles:
1. Licensing access to users
2. Distributing royalty income to members
3. Enforcing against infringement

I will add a further rationale for the efficiency of collective management to the transaction-costs one. Concentrating on the second and first roles, I will add a new role: risk management and insurance. This will be done by looking at the sorts of contracts that copyright collectives undertake, first among the members themselves, and second between the collective and users.

Copyright collectives as syndicates

An optimal risk-sharing problem in economics is the following: given a risky payoff \(X\) and \(m\) agents, divide \(X\) into (risky) shares \(x_i\), \(i = 1, \dots, m\), such that \(\sum_{i=1}^{m} x_i = X\), with each \(x_i\) acceptable to agent \(i\). A group of such risk sharers is normally called a "syndicate".

This is quite a good description of what a copyright collective is and does: a group of agents (copyright holders) together generate a risky payoff (royalty income from licensing the repertory), which they must share among themselves (distribution of royalty income) in a way that is acceptable to all. The sharing rule is a contract between the syndicate members.

Main issues to contemplate

- How should the sharing rule be constructed?
- To what extent are the decisions taken by the syndicate (for example, on repertory bundling and repertory pricing) in fact optimal for the syndicate members individually?

The first question is about efficient risk sharing. It has been extensively tackled in the economic theory literature, and there are several important results that should serve as a guide for copyright collectives.

The second question asks when the syndicate can be thought of as a representative agent. Again, this has been answered in the economic theory literature. The two issues are very closely related.

Efficient risk sharing

In any Pareto efficient solution to a risk-sharing problem, the contracted sharing rule \(x = (x_1, \dots, x_m)\) must be such that it is impossible to alter \(x\) so that at least one member is made better off without making any other member worse off. Although this is a rather simple requirement, it leads to very concrete and important restrictions on how sharing should take place.

The first implication of an efficient sharing rule is the "mutuality principle": in each state of nature, each syndicate member should receive a payoff that depends only on the level of aggregate surplus to be shared in that state. So a member's income cannot depend on that particular member's own realisation, or on the aggregate income in any other state of nature.

Concretely, then, monitoring individual use in order to pay each member according to how much use was made of his/her copyrights is not Pareto efficient. Doing so only shifts risk back onto the individual members, when that risk can be better shared across the whole group.

So how should income be shared? Essentially, we start by adding a second restriction to the sharing rule: that it be individually rational, i.e. that it satisfy a participation constraint for each member. The final sharing rule would then presumably be found by a bargaining process.

A focal contract: linear sharing

Many "real world" sharing rules are linear functions of the aggregate surplus, \(x_{ij} = c_i + a_i X_j\), where \(x_{ij}\) is member \(i\)'s payoff in state \(j\) and the coefficients \(c_i\) and \(a_i\) do not depend on the state-\(j\) aggregate wealth \(X_j\). Linearity is a feature of an efficient sharing rule only when all of the syndicate members have "equi-cautious" utility functions of the same HARA (hyperbolic absolute risk aversion) class, that is, risk tolerance that is linear in wealth with the same slope (the "cautiousness") for every member.
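
The linearity condition can be checked numerically. The sketch below is a minimal illustration under assumptions that are not in the slides: two hypothetical members with equal Pareto weights \(\lambda_i = 1\), and an efficient split found, for each value of the aggregate surplus, by equating weighted marginal utilities \(\lambda_i u_i'(x_i)\) across members (the standard first-order condition for a Pareto optimum). With two CARA members (constant risk tolerance, so both are equi-cautious) each share should move linearly with the aggregate; replacing one of them with a logarithmic-utility member breaks equi-cautiousness, and the split should become non-linear. All function names and parameter values are hypothetical.

```python
import math

def cara_inverse_marginal(r):
    """Inverse marginal utility for CARA u(x) = -exp(-r*x)/r, where u'(x) = exp(-r*x)."""
    return lambda m: -math.log(m) / r

def log_inverse_marginal(m):
    """Inverse marginal utility for logarithmic u(x) = ln(x), where u'(x) = 1/x."""
    return 1.0 / m

def efficient_split(X, weights, inverse_marginals):
    """Pareto-efficient split of aggregate surplus X (hypothetical helper).

    Finds the common multiplier m with weights[i] * u_i'(x_i) = m for every member,
    by bisection on log(m), subject to sum(x_i) = X. The result depends only on X
    (the mutuality principle).
    """
    def shares(log_m):
        m = math.exp(log_m)
        return [inv(m / w) for w, inv in zip(weights, inverse_marginals)]

    lo, hi = -30.0, 30.0  # bracket for log(m); total shares fall as m rises
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if sum(shares(mid)) > X:
            lo = mid
        else:
            hi = mid
    return shares(0.5 * (lo + hi))

def slopes(weights, members, X0=0.0, X1=10.0, X2=20.0):
    """Slope of each member's share over [X0, X1] and over [X1, X2]."""
    s0, s1, s2 = (efficient_split(X, weights, members) for X in (X0, X1, X2))
    low = [(b - a) / (X1 - X0) for a, b in zip(s0, s1)]
    high = [(b - a) / (X2 - X1) for a, b in zip(s1, s2)]
    return low, high

# Two CARA members with risk aversion 1 and 2 (risk tolerance 1 and 0.5).
cara_low, cara_high = slopes([1.0, 1.0], [cara_inverse_marginal(1.0), cara_inverse_marginal(2.0)])
print("CARA + CARA:", cara_low, cara_high)  # both close to [2/3, 1/3]

# One CARA member and one log-utility member: not equi-cautious.
mixed_low, mixed_high = slopes([1.0, 1.0], [cara_inverse_marginal(1.0), log_inverse_marginal])
print("CARA + log:", mixed_low, mixed_high)  # slopes differ between the two intervals
```

In the CARA case the slopes, 2/3 and 1/3, equal each member's risk tolerance divided by total risk tolerance (1/1.5 and 0.5/1.5). Bisection is used only because it is simple and robust; any one-dimensional root finder would do.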

Outside the equi-cautious HARA case, efficient sharing is likely to be non-linear. The HARA class contains most standard utility functions (power, or CRRA, utility, including logarithmic utility; negative exponential, or CARA, utility; and others). So if, for example, all members were equally risk averse with CRRA utility functions (the same coefficient of relative risk aversion), the sharing rule should be linear.

Sharing aggregate risk

Pareto efficiency also gives us a rule for sharing aggregate risk, that is, for sharing any differences in the aggregate surplus across its different realisations. Wilson (1968) showed that aggregate risk should be shared, at the margin, in inverse proportion to each individual's Arrow-Pratt level of risk aversion (or, equivalently, in proportion to his/her risk tolerance). Note again that this sharing rule is independent of the actual outcomes of individual copyright lotteries (as per the mutuality principle); it depends only on risk-bearing preferences.

The effect of the Law of Large Numbers (LoLN)

Risk sharing within a syndicate also gives us a neat way of determining the optimal size of the syndicate. This may be important for the current debate on whether digitisation means that collective management is no longer as efficient as it may once have been.

A syndicate that engages in efficient risk sharing does two things: it pools risks, and it shares (or spreads) them. From the LoLN, pooling means that the average of the aggregate outcome becomes less and less risky the more risks are added, so long as the risks are independent. Hence the more members there are, assuming that risk sharing does indeed take place, the more favourable the risk-bearing situation of each member can be.

Not only is the addition of new members valuable for each existing member individually; it also benefits the entire syndicate. This is most easily seen under the assumption that the payoffs of the members' works are iid, with expected value \(\mu\) and variance \(\sigma^2\).
Assume the syndicate has \(n > 1\) members. The average aggregate surplus of the syndicate then has expected value \(\mu\) and variance \(\sigma^2/n\), which shrinks towards zero as \(n\) grows (the LoLN). So the syndicate can pay each member the average surplus, and each member is then better off than acting alone, having the same expected value at a lower variance. Each risk-averse member would therefore be willing to pay a strictly positive amount of money, \(p(n)\), to join the syndicate, and the syndicate as a whole can earn a positive payoff of \(P(n) = n\,p(n)\). Note that \(P(n)\) is increasing in \(n\), since both \(n\) and \(p(n)\) increase with \(n\).

Perfect risk sharing

That the syndicate should have as many members as possible can be seen in another way. If each (identical) member is paid the average aggregate surplus, each still carries some risk. But what if the syndicate paid each member the expected value of his/her work, \(\mu\), for sure? That would be the very best we could possibly do for the members: a risk-free payoff with no loss in expected value, i.e. "perfect" risk sharing.

But then the syndicate as a whole bears a risk: the aggregate surplus will sometimes be greater than the total payout, \(n\mu\), and at other times less. That risk needs to be financed. The larger is \(n\), the lower the LoLN implies this risk is per member (the shortfall per member has variance \(\sigma^2/n\)), so the lower is the need for costly financing of it.

In short, adding members serves to eliminate a costly element, risk itself, at both the individual and the aggregate level, and this advantage is greater the greater is \(n\). In that way, the LoLN implies that the optimal syndicate size is as large as possible. Note that this has nothing at all to do with transaction costs, only with risk pooling and risk sharing. It holds whenever there is risk, and it is all the stronger the more risk there is. However, the more the assumption of iid risks is violated (for example, when the payoffs of different works are positively correlated), the more the potential aggregate risk savings may be diluted.
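
A minimal sketch of the iid argument, assuming (this is not in the slides) CARA utility with risk aversion \(r\) and normally distributed payoffs, under which a payoff with mean \(\mu\) and variance \(v\) has certainty equivalent \(\mu - rv/2\). Under these assumptions \(p(n) = (r\sigma^2/2)(1 - 1/n)\) and \(P(n) = (r\sigma^2/2)(n - 1)\), both increasing in \(n\). The function `perfect_sharing_risk` covers the case where members are paid \(\mu\) for sure: the standard deviation of the syndicate's total shortfall, \(\sigma\sqrt{n}\), still grows with \(n\), but per member it falls as \(\sigma/\sqrt{n}\). The last function checks the \(\sigma^2/n\) variance by simulation. All names and parameter values are hypothetical.

```python
import math
import random
import statistics

def wtp_to_join(n, r, sigma2):
    """p(n): what one member would pay to swap his/her own work's payoff for the
    syndicate average of n iid works.

    Assumes CARA utility with risk aversion r and normally distributed payoffs, so a
    payoff with mean mu and variance v has certainty equivalent mu - r*v/2.
    The mean mu is the same in both cases and cancels out of the difference.
    """
    ce_alone = -r * sigma2 / 2
    ce_in_syndicate = -r * (sigma2 / n) / 2
    return ce_in_syndicate - ce_alone  # equals (r*sigma2/2) * (1 - 1/n)

def syndicate_payoff(n, r, sigma2):
    """P(n) = n * p(n), the total the syndicate could collect from its members."""
    return n * wtp_to_join(n, r, sigma2)

def perfect_sharing_risk(n, sigma):
    """If each member is paid mu for sure, the syndicate bears S = sum(X_k) - n*mu.
    Returns (sd of S, sd of S per member) = (sigma*sqrt(n), sigma/sqrt(n))."""
    total_sd = sigma * math.sqrt(n)
    return total_sd, total_sd / n

def simulated_variance_of_average(n, mu, sigma, trials=10_000, seed=1):
    """Monte Carlo estimate of Var(average of n iid normal works); near sigma**2 / n."""
    rng = random.Random(seed)
    averages = [statistics.fmean([rng.gauss(mu, sigma) for _ in range(n)])
                for _ in range(trials)]
    return statistics.pvariance(averages)

# r = 0.5 and sigma = 2 (sigma**2 = 4) are illustrative values only.
for n in (1, 2, 10, 100):
    total_sd, per_member_sd = perfect_sharing_risk(n, sigma=2.0)
    print(n, wtp_to_join(n, 0.5, 4.0), syndicate_payoff(n, 0.5, 4.0),
          round(total_sd, 3), round(per_member_sd, 3))
```

The closed forms rely on the CARA-normal assumption; with other risk-averse preferences \(p(n)\) is still positive and rises with \(n\), but it would have to be computed from the utility function directly.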

Optimal syndicate size and digitisation

If we accept that the digital environment increases the variance of the payoffs to an individual work (it makes very high outcomes possible, perhaps due to the increased size of the market, and very low ones possible as well, perhaps due to piracy), then the LoLN predicts that the optimal syndicate should be all the larger, since greater risk creates a greater need for risk management. This contrasts with some literature which argues that digitisation reduces transaction costs and thus reduces the optimal size of copyright collectives.

Adding a decision rule

Things become a little more complicated when the syndicate must make a decision that affects the (random) aggregate variable, total royalty income: for example, the decision to market only a blanket license, and the price at which it will be licensed. In this case it becomes important that the syndicate's preferences also represent those of its members. As it happens, the sufficient condition for this is exactly the same as the condition for the sharing rule to be linear: all syndicate members must have equi-cautious utility functions in the same HARA class.

Contracts between a CMO syndicate and repertory users

The other area in which contracts are an issue for a copyright collective is its relationship with users. There, the contracts essentially boil down to exactly what is licensed, and at what price. I will not deal with pricing, but rather with the more interesting issue of aggregating the repertory into a single unit that is licensed under a blanket license.

Is it always efficient for a CMO to restrict its offer to a blanket license for the entire repertory, rather than licensing subsets of the repertory, perhaps under more restrictive licensing contracts? The answer is maybe.
If it is efficient, then it is for similar reasons to why it is efficient to aggregate members into a single CMO in the first place.

The economics of bundling

As a general conclusion, the literature on the economics of bundling tells us that bundling information goods is both economically efficient and profit-enhancing for the owners of the bundled goods (copyright holders), because it reduces the heterogeneity in user willingness to pay: a bundle can be sold at the average, rather than the minimum, willingness to pay.

For example, take two users and two works. User A values work 1 at $10 and work 2 at $20; user B values work 1 at $20 and work 2 at $10. If the works are not bundled, they can be licensed at $10 each, which gives revenue of $40 (both users license both works), or at $20 each, which also gives revenue of $40 (each user licenses only one work). But if they are bundled and licensed at $30, both users license the bundle, and revenue is $60.

So, in principle, it is indeed worthwhile for a CMO to offer only a license to the entire repertory, rather than disaggregated options. This benefit can be shared with users through price regulation or bilateral negotiation.

Nevertheless, just as the gains from aggregating members weaken when risks are not independent, the benefits from bundling the repertory may be weakened if there are external effects across works. The benefits are also weakened by any observable differences between users (of the sort that would normally lead to price discrimination), which can provide scope for specialist bundles (subsets of the repertory) to be offered.

The gains from aggregating copyright holders into a single CMO, and the gains from aggregating the repertory into a single product, are both based on the Law of Large Numbers (LoLN).
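
The two-user example can be checked directly. The sketch below reproduces its revenue figures and then, as a hypothetical illustration of the LoLN argument for bundling, draws iid Uniform(0, 1) valuations (an assumption, not from the slides) for \(k\) works and 1,000 users, and reports the best bundle revenue per user per work. Under this distribution, licensing a work on its own at a single price earns at most 0.25 per user in expectation (the maximum of \(p(1-p)\)), while the bundle's per-work revenue should move towards the mean valuation of 0.5 as \(k\) grows, because each user's average valuation concentrates around it. Function names and the distribution are hypothetical.

```python
import random

# Valuations from the two-user, two-work example: VALUATIONS[user][work], in dollars.
VALUATIONS = {"A": {1: 10, 2: 20}, "B": {1: 20, 2: 10}}

def revenue_separate(price):
    """Revenue if every work is licensed separately at the same price."""
    return sum(price for works in VALUATIONS.values() for v in works.values() if v >= price)

def revenue_bundle(price):
    """Revenue if only a license for the whole two-work bundle is offered."""
    return sum(price for works in VALUATIONS.values() if sum(works.values()) >= price)

def per_work_bundle_revenue(k, users=1000, seed=0):
    """Best bundle revenue per user per work, for k works with iid Uniform(0, 1)
    valuations (a hypothetical distribution chosen for illustration)."""
    rng = random.Random(seed)
    # Each user's average valuation per work in the bundle, sorted ascending.
    avg = sorted(sum(rng.random() for _ in range(k)) / k for _ in range(users))
    # Charging avg[i] per work sells the bundle to users i, i+1, ..., users-1.
    return max(p * (users - i) for i, p in enumerate(avg)) / users

print(revenue_separate(10), revenue_separate(20), revenue_bundle(30))  # 40 40 60
for k in (1, 10, 100):
    print(k, round(per_work_bundle_revenue(k), 3))
```

With \(k = 1\) the "bundle" is a single work, so that row is the separate-licensing benchmark; the rise in the later rows is the concentration effect that the LoLN predicts.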
For CMO membership, the LoLN implies that adding members lowers the variance of the average aggregate surplus at the same expected value, so adding members gives clear risk-saving advantages. For blanket licensing, the LoLN implies that each user's average valuation of the works in the bundle becomes increasingly concentrated near the mean valuation as more works are added, so adding works allows pricing at the average rather than the minimum willingness to pay.

Notwithstanding that, bundling is more efficient the lower are the marginal costs of production, and less efficient the lower are the marginal costs of delivery. Since digitisation lowers both kinds of cost, its effect on the optimal bundle size is ambiguous.

Concluding comments

Copyright collectives may form not only for reasons related to transaction costs. They are also an optimal response to a demand for risk management and insurance by copyright holders, and this, in turn, is what drives the configuration of the contracts between the individual members and the collective. We have discussed the following general results on the contractual environment of copyright collectives:
- Efficient risk-sharing contracts imply that each member's payoff should not depend on his/her own contribution to the aggregate surplus.
- The more members the collective can attract, in principle the greater are the benefits that each realises, and this can also be translated into greater benefits for the collective as a whole.
- If the digital revolution increases the risk of each individual work, then the rationale for forming a collective is all the stronger.
- When the collective must take a decision that affects the aggregate surplus, that decision is optimal for the members provided that each of them has equi-cautious utility of the same HARA class.
  In this case (and assuming efficient risk sharing), the collective is validly a representative individual for the members, and the contracts signed by the collective are optimal for the members as well.
- Bundling the repertory into a single licensing unit is, in principle, optimal for the collective (i.e. it allows greater profits). These profits can, of course, be shared with users by regulating the price of access.