Lobster Survival in Tether Experiment (PPT)

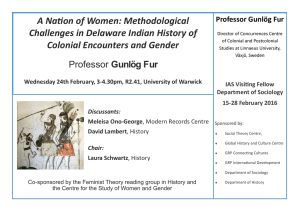

advertisement

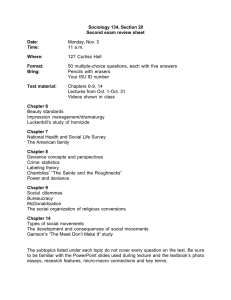

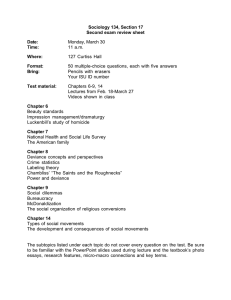

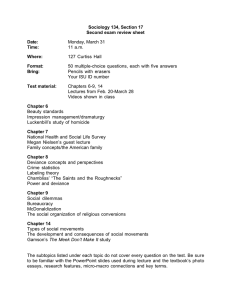

Logistic Regression with “Grouped” Data Lobster Survival by Size in a Tethering Experiment Source: E.B. Wilkinson, J.H. Grabowski, G.D. Sherwood, P.O. Yund (2015). "Influence of Predator Identity on the Strength of Predator Avoidance Response in Lobsters," Journal of Experimental Biology and Ecology, Vol. 465, pp. 107-112. Data Description • Experiment involved 159 juvenile lobsters in a Saco Bay, Maine • Outcome: Whether or not lobster survived predator attack in Tethering Experiment • Predictor Variable: Length of Carapace (mm). Lobsters grouped in m = 11 groups of width of 3mm (27 to 57 by 3) size.grp 27 30 33 36 39 42 45 48 51 54 57 Y.grp 0 1 3 7 12 17 13 12 7 6 1 n.grp 5 10 22 21 22 29 18 17 8 6 1 m Overall: Yi 79 i 1 m ^ ni 159 i 1 79 0.4969 159 Models • Data: (Yi,ni) i=1,…,m • Distribution: Binomial at each Size Level (Xi) • Link Function: Logit: log(i/(1-i)) is a linear function of Predictor (Size Level) • 3 Possible Linear Predictors: log(i/(1-i)) = a (Mean is same for all Sizes, No association) log(i/(1-i)) = a + bXi (Linearly related to size) log(i/(1-i)) = b1Z1 +…+ bm-1Zm-1 + bmZm Zi = 1 if Size Level i, 0 o.w. This allows for m distinct logits, without a linear trend in size (aka Saturated model) Note for a linear predictor for the logit link: log 1 f ef 1 1 + e f 1 + e f Probability Distribution & Likelihood Function - I n ni ! n y n y f yi | ni , i i iyi 1 i i i iyi 1 i i i 0 yi ni yi ! ni yi ! yi m ni ! n y Assuming independence among the yi : f y1 ,..., ym iyi 1 i i i i 1 yi ! ni yi ! Yi ~ Bin ni , i Consider 3 Models for i : Model 1: log i 1 i Model 2: log i 1 i ea a i 1 + ea ea + b X i a + b X i i 1 + ea + b X i i e b1Z1 +...+ bm1Zm1 + bm Zm Model 3: log b1Z1+...+ b m1Z m1+ b m Z m b1Z1 + ... + b m 1Z m 1 + b m Z m i 1 1+ e i 1 if Size Group i where Z i otherwise 0 We can consider the distribution function as a Likelihood function for the regression coefficients given the data y1 ,..., ym : m L i 1 ni ! n y iyi 1 i i i yi ! ni yi ! For Model 1: a For Model 2: a , b For Model 3: b1 ,..., b m 1 , b m Maximum Likelihood Estimation – Model 1 Model 1: log i a 1 i ea i 1 + ea 1 i 1 1 + ea yi m m i ni ! ni ! ni ! ni yi ni yi a yi a ni L i 1 i 1 e 1 + e i i 1 yi ! ni yi ! i 1 yi ! ni yi ! 1 i i 1 yi ! ni yi ! The log-Likelihood typically is easier to maximize than the Likelihood m m l log L log ni ! log yi ! log ni yi ! + yi log i log 1 i + ni log 1 i i 1 We maximize Likelihood by differentiating log likelihood, setting it to zero, and solving for unknown parameters l a log L a log ni ! log yi ! log ni yi ! + yi log ea ni log 1 + ea i 1 m log n ! log y ! log n y ! + y a n log 1 + ea m i i 1 i log L a a m 1 ^ ea i i ea set yi ni 0 a 1 + e i 1 m i m i 1 yi i 1 i m n y i 1 i i m y ^ a e i 1 i m m i 1 i 1 ni yi a e yi ^ a i 1 1+ e ^ m m n i i 1 m ^ m yi ^ i 1 a log m m n y i i i 1 i 1 1 + ea ^ ea 1 ^ +1 ea n i 1 m y i 1 ^ i i i m y i 1 m i n i 1 i 79 0.4969 159 Maximum Likelihood Estimation – Model 3 Model 3: log k b1Z1 + ... + b m Z m b k Z k b k 1 k e bk k 1 + e bk m i ni ! ni ! n y iyi 1 i i i yi ! ni yi ! i 1 yi ! ni yi ! 1 i m L i 1 yi 1 i 1 k ni m log n ! log y ! log n y ! + y b i 1 i l b k e bk yk nk bk b k 1+ e ni ! e bi yi ! ni yi ! i 1 l b k log L b k log ni ! log yi ! log ni yi ! + yi log e bi i 1 i 1 + e m bi ni yi n log 1 + e m i 1 1 + e bk i i i bi i ni log 1 + e bi b^k e yk ^ bk 1 + e n k ^ ^ yk yk b k log k nk yk nk yk ^ ^ Note that b k can be undefined if yk 0 or yk nk but in those cases , we have k 0 or 1, respectively size.grp Y.grp n.grp phat3.grp 27 0 5 0.0000 30 1 10 0.1000 33 3 22 0.1364 36 7 21 0.3333 39 12 22 0.5455 42 17 29 0.5862 45 13 18 0.7222 48 12 17 0.7059 51 7 8 0.8750 54 6 6 1.0000 57 1 1 1.0000 Model 2 – R Output glm(formula = lob.y ~ size, family = binomial("logit")) Coefficients: Estimate Std. Error z value Pr(>|z|) (Intercept) -7.89597 1.38501 -5.701 1.19e-08 *** size 0.19586 0.03415 5.735 9.77e-09 *** 7.89597 + 0.19586 X i e i 7.89597 + 0.19586 X i 1+ e ^ size.grp (X_i) Y.grp n.grp phat2.grp 27 0 5 0.0686 30 1 10 0.1171 33 3 22 0.1927 36 7 21 0.3005 39 12 22 0.4360 42 17 29 0.5818 45 13 18 0.7146 48 12 17 0.8184 51 7 8 0.8902 54 6 6 0.9359 57 1 1 0.9633 Evaluating the log-likelihood for Different Models m ^ ^ ^ ^ l ln L 1 ,..., m log ni ! log yi ! log ni yi ! + yi log i + ni yi log 1 i i 1 Note: When comparing model fits, we only need to include components that involve estimated parameters. Some software packages print l , others print l * : m ^ ^ l * yi log i + ni yi log 1 i i 1 m c log ni ! log yi ! log ni yi ! 72.3158 l c + l* i 1 m Model 1 (Null Model): log i 1 i a ^ i y i 1 m i n i 1 79 0.4969 i 1,..., m 11 159 i m l yi log 0.4969 + ni yi log 1 0.4969 79 0.6993 + 159 79 0.6870 110.2073 * 1 i 1 ^ i yi Model 3 (Saturated Model): log i 1,..., m 11 bi i ni 1 i m y n yi * l3 yi log i + ni yi log i Here we use: 0log(0) = 0 84.1545 n n i 1 i i ^ i e 7.89597 + 0.19586 X i Model 2 (Linear Model): log i 1,..., m 11 a + b Xi i 7.89597 + 0.19586 X i 1 1 + e i ^ ^ l yi log i + ni yi log 1 i 86.4357 i1 m * 2 Deviance and Likelihood Ratio Tests • Deviance: -2*(log Likelihood of Model – log Likelihood of Saturated Model) Degrees of Freedom = # of Parameters in Saturated Model - # in model • When comparing a Complete and Reduced Model, take difference between the Deviances (Reduced – Full) • Under the null hypothesis, the statistic will be chi-square with degrees of freedom = difference in degrees of freedoms for the 2 Deviances (number of restrictions under null hypothesis) • Deviance can be used to test goodness-of-fit of model. Deviance and Likelihood Ratio Tests ^ ^ l yi log i + ni yi log 1 i i 1 m * l c+l m c log ni ! log yi ! log ni yi ! 72.3158 * i 1 m Model 1 (Null Model): log i a 1 i ^ i y i 1 m i n i 1 79 0.4969 i 1,..., m 11 159 i l1* 110.2073 l1 110.2073 + 72.3158 37.8915 ^ i e 7.89597 + 0.19586 X i Model 2 (Linear Model): log a + b Xi i 1 1 + e 7.89597 + 0.19586 X i i l2* 86.4357 l2 86.4357 + 72.3158 14.1199 ^ y Model 3 (Saturated Model): log i bi i i ni 1 i l3* 84.155 l3 84.1545 + 72.3158 11.8387 i 1,..., m 11 i 1,..., m 11 Deviance for Model 1: DEV1 2 37.8915 11.8388 52.1054 df1 11 1 10 2 0.05,10 18.307 Deviance for Model 2: DEV2 2 14.1199 11.8388 4.5622 df 2 11 2 9 2 0.05,9 16.919 Testing for a Linear Relation (in log(odds)): H 0 : b 0 H A : b 0 2 TS : X obs DEV1 DEV2 47.5432 df 10 9 1 2 0.05,1 3.841 Model 2 is clearly the Best Model and Provides a Good Fit to the Data (Small Deviance) Pearson Chi-Square Test for Goodness-of-Fit For each of the "cells" in the m 2 table, we have an Observed and Expected Count: ni ni Oi 0 1 Yij ni Oi1 i 1,..., m Observed: Oi1 Yij j 1 j 1 ^ Ei 0 ni 1 i ni Ei1 i 1,..., m ^ Expected: Ei1 ni i Pearson Chi-Square Statistic: m X 2 obs 1 i 1 j 0 O ij Eij Eij 2 df m # of Parameters in model Note: This test is based on the assumption that group sample sizes are large. In this case, some of the "edge" groups are small, but the test gives clear result that Model 2 is best. Pearson Chi-Square Test for Goodness-of-Fit Pearson Goodness-of-Fit Test size.grp Y.grp n.grp 27 0 5 30 1 10 33 3 22 36 7 21 39 12 22 42 17 29 45 13 18 48 12 17 51 7 8 54 6 6 57 1 1 Model1 pihat.grp1 0.4968553 0.4968553 0.4968553 0.4968553 0.4968553 0.4968553 0.4968553 0.4968553 0.4968553 0.4968553 0.4968553 O_i1 O_i0 0 1 3 7 12 17 13 12 7 6 1 5 9 19 14 10 12 5 5 1 0 0 E1_i1 2.4843 4.9686 10.9308 10.4340 10.9308 14.4088 8.9434 8.4465 3.9748 2.9811 0.4969 E2_i0 2.5157 5.0314 11.0692 10.5660 11.0692 14.5912 9.0566 8.5535 4.0252 3.0189 0.5031 X2_1 2.4843 3.1698 5.7542 1.1302 0.1046 0.4660 1.8400 1.4949 2.3024 3.0571 0.5095 X^2 #Groups #Parms df X2(.05,df) P-value Model2 X2_0 pihat.grp E2_i1 E2_i0 X2_1 X2_0 2.4532 0.0686 0.3432 4.6568 0.3432 0.0253 3.1302 0.1171 1.1710 8.8290 0.0250 0.0033 5.6823 0.1927 4.2393 17.7607 0.3623 0.0865 1.1160 0.3005 6.3101 14.6899 0.0754 0.0324 0.1033 0.4360 9.5919 12.4081 0.6046 0.4674 0.4602 0.5818 16.8721 12.1279 0.0010 0.0013 1.8170 0.7146 12.8624 5.1376 0.0015 0.0037 1.4763 0.8184 13.9122 3.0878 0.2628 1.1842 2.2736 0.8902 7.1217 0.8783 0.0021 0.0169 3.0189 0.9359 5.6152 0.3848 0.0264 0.3848 0.5031 0.9633 0.9633 0.0367 0.0014 0.0367 44.3470 11 1 10 18.3070 0.0000 X^2 #Groups #Parms df X2(.05,df) P-value Even though some of the group sample sizes are small, and some Expected cell Counts are below 5, it is clear that Model 2 provides a Good Fit to the data 3.9480 11 2 9 16.9190 0.9148 Residuals Yi Wij ^ Pearson Residuals: ri P ^ Yi ni i ^ V Yi ni i 1 i Pearson Chi-Square Statisic is related to residuals: ^ ^ 2 m 1 2 X obs Oij Eij Eij i 1 j 0 2 ^ Y n i i i m m P ^ r i ^ i 1 ni i 1 i i 1 Deviance Residuals: ^ Y ^ ^ ri D sign Yi ni i 2 Yi log i + ni Yi log 1 i Yi log i ni DEV G ri D m 2 i 1 2 ni Yi + ni Yi log ni Residuals Residuals size.grp 27 30 33 36 39 42 45 48 51 54 57 Y.grp 0 1 3 7 12 17 13 12 7 6 1 n.grp 5 10 22 21 22 29 18 17 8 6 1 Model1 Pearson Deviance Model2 Pearson Deviance pihat.grp1 Residual Residual pihat.grp2 Residual Residual 0.4969 -2.2220 -2.6208 0.0686 -0.6070 -0.8433 0.4969 -2.5100 -2.6946 0.1171 -0.1682 -0.1720 0.4969 -3.3818 -3.5739 0.1927 -0.6699 -0.6989 0.4969 -1.4987 -1.5137 0.3005 0.3284 0.3252 0.4969 0.4559 0.4562 0.4360 1.0353 1.0298 0.4969 0.9624 0.9646 0.5818 0.0482 0.0482 0.4969 1.9123 1.9453 0.7146 0.0718 0.0720 0.4969 1.7237 1.7489 0.8184 -1.2029 -1.1275 0.4969 2.1392 2.2667 0.8902 -0.1376 -0.1350 0.4969 2.4649 2.8971 0.9359 0.6412 0.8919 0.4969 1.0063 1.1828 0.9633 0.1951 0.2734 SumSq 44.3470 52.1054 3.9480 4.5623 Computational Approach for ML Estimator log i 1 i b0 b 1 X ip β i 1,..., m b p ' ' b 0 + b1 X i1 + ... + b p X ip x i β x i 1 X i1 b + b X +...+ b X ' p ip e 0 1 i1 e xi β i ' b + b X +...+ b p X ip 1 + e 0 1 i1 1 + e xi β m e xi β n ni ! n y Likelihood: L β i iyi 1 i i i x'i β i 1 yi i 1 yi ! ni yi ! 1 + e ' m yi m 1 ni yi ni ! x'i β e ' xi β i 1 yi ! ni yi ! 1+ e m ' log-Likelihood: l β ln L β log ni ! log yi ! log ni yi ! + yi x'i β ni log 1 + e xiβ i 1 l β m ni ni e xiβ m x'i β g β x i yi e x i x i yi ' ' β 1 + e xi β 1 + e xi β i 1 i 1 ' m ni e xiβ 2l β G β x i 1 + e x'iβ ββ' i 1 ' ni x i x'i exp(x'i β) Wii ni i (1 i ) m x i yi ni i X' Y μ i 1 1 + exp(x'i β) x'i exp(x'i β) exp(x'i β)x'i exp(x'i β) ni x i 2 1 + exp(x'iβ) 1 1 + exp(x'iβ) 2 X'WX G (β) Wij 0 i j ^ NEW Newton-Raphson Algorithm: β ^ OLD β 1 ^ OLD ^ OLD G β g β ^ OLD start with: β ^ 0.4969 0 0 0 0 yi 1 + e xi β ' ni Estimated Variance-Covariance for ML Estimator m ni e xiβ 2l β G β x i 1 + e x'iβ ββ' i 1 ' 1 + exp(x'i β) x'i exp(x'i β) exp(x'i β)x'i exp(x'i β) ni x i 2 ' 1 + exp( x β ) i 1 ^ ^ ' ^ ' V β G β ni x i x i exp x i β X'WX 2 ^ ' 1 + exp xi β Wii ni i (1 i ) Wij 0 i j ^ 1 1 1 1 1 X 1 1 1 1 1 1 27 30 33 36 39 42 45 48 51 54 57 ^ ^ 0 n1 1 1 1 ^ ^ 0 n 2 1 2 2 ^ W 0 0 0 0 0 0 ^ ^ n10 10 1 10 0 ^ ^ 0 n11 11 1 11 0 0 0 1 3 7 12 Y 17 13 12 7 6 1 ^ n1 1 ^ n2 2 ^ μ ^ n 10 10 ^ n11 11 ML Estimate, Variance, Standard Errors Beta0 Beta1 Beta2 Beta3 Beta4 Beta5 0.4969 -6.5160 -7.7637 -7.8947 -7.8960 -7.8960 0.0000 0.1608 0.1925 0.1958 0.1959 0.1959 delta 49.2057 g_beta G_beta 1.07E-14 -29.0314 -1166.67 4.4E-13 -1166.67 -47741.8 1.5577 0.0172 0.0000 -G(beta) 29.03144 1166.673 1166.673 47741.84 0.0000 V(beta) 1.918261 -0.04688 -0.04688 0.001166 beta SE(beta) z p-value -7.8960 1.385013 -5.70101 1.19101E-08 0.1959 0.034154 5.734593 9.7747E-09 > mod2 <- glm(lob.y ~ size, family=binomial("logit")) > summary(mod2) Call: glm(formula = lob.y ~ size, family = binomial("logit")) Deviance Residuals: Min 1Q Median 3Q Max -1.12729 -0.43534 0.04841 0.29938 1.02995 Coefficients: Estimate Std. Error z value Pr(>|z|) (Intercept) -7.89597 1.38501 -5.701 1.19e-08 *** size 0.19586 0.03415 5.735 9.77e-09 *** Null deviance: 52.1054 on 10 degrees of freedom Residual deviance: 4.5623 on 9 degrees of freedom AIC: 32.24 logLik(mod2) 'log Lik.' -14.11992 (df=2)