Parsimony, Likelihood, Common Causes, and Phylogenetic Inference.ppt

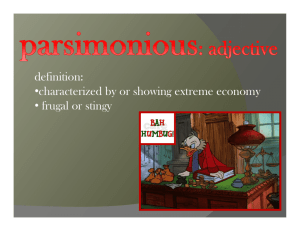

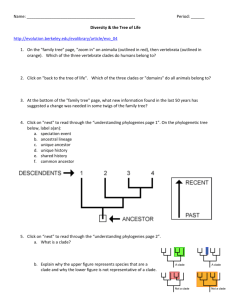

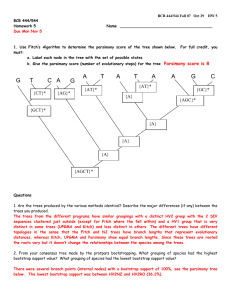

Parsimony, Likelihood, Common Causes, and Phylogenetic Inference
Elliott Sober
Philosophy Department, University of Wisconsin, Madison

Two suggested uses of O’s razor
• [Pre-test] O’s razor should be used to constrain the order in which hypotheses are to be tested.
• [Post-test] O’s razor should be used to interpret the acceptability/support of hypotheses that have already been tested.

Pluralism about Ockham’s razor?
These can be compatible, but…
• If pre-test O’s razor is “rejectionist,” then post-test O’s razor won’t have a point.
• If the pre-test idea involves testing hypotheses one at a time, then it views testing as noncontrastive.

Within the post-test category of support/plausibility ...
• Bayesianism – compute posterior probs.
• Likelihoodism – compare likelihoods.
• Frequentist model selection criteria like AIC – estimate predictive accuracy.

I am a pluralist about these broad philosophies …
Not that each is okay as a global thesis about all scientific inference, but I do think that each has its place.

Ockham’s Razors*
Different uses of O’s razor have different justifications, and some have none at all.
* “Let’s Razor Ockham’s Razor,” in D. Knowles (ed.), Explanation and Its Limits, Cambridge University Press, 1990, 73-94.

Parsimony and Likelihood
• In model selection criteria like AIC and BIC, likelihood and parsimony are conflicting desiderata.
  AIC(M) = log[Pr(Data | L(M))] - k,
  where L(M) is the likeliest (best-fitting) member of model M and k is the number of adjustable parameters in M.
• In other settings, parsimony has a likelihood justification.

The Law of Likelihood
Observation O favors H1 over H2 iff Pr(O | H1) > Pr(O | H2).

A Reichenbachian idea
Salmon’s example of plagiarism.
[Figure: Common Cause model, in which a single cause C produces effects E1 and E2 (labeled “more parsimonious”); Separate Causes model, in which C1 produces E1 and C2 produces E2.]

Parameters and homogeneity
[Figure: the same two models, with the branch to E1 labeled p1 and the branch to E2 labeled p2 in both the Common Cause and the Separate Causes diagram.]

Reichenbach’s argument
IF
(i) a cause screens off its effects from each other,
(ii) all probabilities are non-extreme (≠ 0, 1),
(iii) a particular parameterization of the CC and SC models is used, and
(iv) cause/effect relationships are “homogeneous” across branches,
THEN Pr[Data | Common Cause] > Pr[Data | Separate Causes].
The more parsimonious hypothesis has the higher likelihood.
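As a rough illustration of the inequality Pr[Data | Common Cause] > Pr[Data | Separate Causes], here is a minimal Python sketch. It assumes one simple parameterization that is not spelled out in the slides: each cause is present with probability c, effect Ei has probability pi when its cause is present and qi when it is absent, and the same c, pi, qi are used in both models (one way of reading “homogeneous”). The function names and numbers are hypothetical. Neither hypothesis fixes the state of the cause, so the cause is averaged out.

```python
# Sketch only: the parameterization (c, p1, q1, p2, q2) is an illustrative
# assumption, not necessarily the one intended in the slides.

def lik_common_cause(c, p1, q1, p2, q2):
    """Pr(E1 and E2 | CC): one cause C, averaged over whether C occurred.
    Screening-off means E1 and E2 are independent given the state of C."""
    return c * p1 * p2 + (1 - c) * q1 * q2


def lik_separate_causes(c, p1, q1, p2, q2):
    """Pr(E1 and E2 | SC): independent causes C1 and C2, each present with
    probability c, so the two effects are unconditionally independent."""
    return (c * p1 + (1 - c) * q1) * (c * p2 + (1 - c) * q2)


# Hypothetical values: the cause raises the probability of both effects.
c, p1, q1, p2, q2 = 0.3, 0.9, 0.1, 0.8, 0.2

cc = lik_common_cause(c, p1, q1, p2, q2)
sc = lik_separate_causes(c, p1, q1, p2, q2)

# Under this parameterization the gap has a closed form.
gap = c * (1 - c) * (p1 - q1) * (p2 - q2)

print(f"Pr(data | CC) = {cc:.4f}")
print(f"Pr(data | SC) = {sc:.4f}")
print(f"difference    = {cc - sc:.4f} (closed form: {gap:.4f})")
```

Under these assumptions the difference equals c(1 - c)(p1 - q1)(p2 - q2), so the common-cause hypothesis is likelier whenever c is non-extreme and the cause shifts both effects in the same direction. If the cause raises one effect’s probability and lowers the other’s, the sign flips.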
Parsimony and likelihood are ordinally equivalent.

Some differences with Reichenbach
• I am comparing two hypotheses.
• I’m not using Reichenbach’s Principle of the Common Cause.
• I take the evidence to be the matching of the students’ papers, not their “correlation.”

Empirical foundations for likelihood ≈ parsimony
(i) A cause screens off its effects from each other.
(ii) All probabilities are non-extreme.
(iii) A particular parameterization of the CC and SC models is used.
(iv) Cause/effect relationships are “homogeneous” across branches.
• By adopting different assumptions, you can arrange for CC to be less likely than SC. Now likelihood and parsimony conflict!
• Note: Reichenbach’s argument shows that these are sufficient for likelihood ≈ parsimony, not that they are necessary.

Parsimony in Phylogenetic Inference
Two sources:
• Willi Hennig
• Luigi Cavalli-Sforza and Anthony Edwards
Two types of inference problem:
• find the best tree “topology”
• estimate character states of ancestors

1. Which tree topology is better?
[Figure: two candidate trees for H, C, and G: (HC)G and H(CG).]
MP: (HC)G is better supported than H(CG) by data D if and only if (HC)G is a more parsimonious explanation of D than H(CG) is.

An Example of a Parsimony Calculation
[Figure: tip states H=1, C=1, G=0, with state 0 at the root, shown on both (HC)G and H(CG).]

2. What is the best estimate of the character states of ancestors in an assumed tree?
[Figure: tips H=1, C=1, G=1; ancestor A=?]
MP says that the best estimate is that A=1.

Maximum Likelihood
[Figure: the two candidate trees, (HC)G and H(CG).]
ML: (HC)G is better supported than H(CG) by data D if and only if Pr_M[D | (HC)G] > Pr_M[D | H(CG)].
ML is “model dependent”: the likelihoods are computed relative to a model M of the evolutionary process.

The present situation in evolutionary biology
• MP and ML sometimes disagree.
• The standard criticism of MP is that it assumes that evolution proceeds parsimoniously.
• The standard criticism of ML is that you need to choose a model of the evolutionary process.

When do parsimony and likelihood agree?
• (Ordinal Equivalence) For any data set D and any pair of phylogenetic hypotheses H1 and H2, Pr_M(D | H1) > Pr_M(D | H2) iff H1 is a more parsimonious explanation of D than H2 is.
• Whether likelihood agrees with parsimony depends on the probabilistic model of evolution used.
• Felsenstein (1973) showed that the postulate of very low rates of evolution suffices for ordinal equivalence.

Does this mean that parsimony assumes that rates are low?
• NO: the assumptions of a method are the propositions that must be true if the method correctly judges support.
• Felsenstein showed that the postulate of low rates suffices for ordinal equivalence, not that it is necessary for ordinal equivalence.

Tuffley and Steel (1997)
• T&S showed that the postulate of “no-common-mechanism” also suffices for ordinal equivalence.
• “No-common-mechanism” means that each character on each branch is subject to its own drift process.

The two probability models of evolution
Felsenstein:
• Rates of change are low, but not necessarily equal.
• Drift not assumed: Pr(i → j) and Pr(j → i) may differ.
Tuffley and Steel:
• Rates of change can be high.
• Drift is assumed: Pr(i → j) = Pr(j → i).

How to use likelihood to define what it means for parsimony to assume something
• The assumptions of parsimony = the propositions that must be true if parsimony correctly judges support.
• For a likelihoodist, parsimony correctly judges support if and only if parsimony is ordinally equivalent with likelihood.
• Hence, for a likelihoodist, parsimony assumes any proposition that follows from ordinal equivalence.

A test for what parsimony does not assume
Model M ⇒ ordinal equivalence ⇒ A, where A = what parsimony assumes.
• If model M entails ordinal equivalence, and M entails proposition X, X may or may not be an assumption of parsimony.
• If model M entails ordinal equivalence, and M does not entail proposition X, then X is not an assumption of parsimony.

Applications of the negative test
• T&S’s model does not entail that rates of change are low; hence parsimony does not assume that rates are low.
• F’s model does not assume neutral evolution; hence parsimony does not assume neutrality.

How to figure out what parsimony does assume?
• Find a model that forces parsimony and likelihood to disagree about some example.
• Then, if parsimony is right in what it says about the example, the model must be false.

Example #1
Task: Infer the character state of the most recent common ancestor (MRCA) of species that all exhibit the same state of a quantitative character.
[Figure: tips 10, 10, …, 10, 10; ancestor A=?]
The MP estimate is A=10. When is A=10 the ML estimate? And when is it not?

Answer
• ML says that A=10 is the best estimate (and thus agrees with MP) if there is neutral evolution or selection is pushing each lineage towards a trait value of 10.
• ML says that A=10 is not the best estimate (and thus disagrees with MP) if (*) selection is pushing all lineages towards a single trait value different from 10.
• So: Parsimony assumes, in this problem, that (*) is false.

Example #2
Task: Infer the character state of the MRCA of two species that exhibit different states of a dichotomous character.
[Figure: tips 1 and 0; ancestor A=?]
A=0 and A=1 are equally parsimonious. When are they equally likely? And when are they unequally likely?

Answer
• ML agrees with MP that A=0 and A=1 are equally good estimates if the same neutral process occurs in the two lineages.
• ML disagrees with MP if (*) the same selection process occurs in both lineages.
• So: Parsimony assumes, in this problem, that (*) is false.

Conclusions about phylogenetic parsimony ≈ likelihood
• The assumptions of parsimony are the propositions that must be true if parsimony correctly judges support.
• To find out what parsimony does not assume, use the test described [M ⇒ ordinal equivalence ⇒ A].
• To find out what parsimony does assume, look for examples in which parsimony and likelihood disagree, not for models that ensure that they agree.
• Maybe parsimony’s assumptions vary from problem to problem.

Broader conclusions
• underdetermination: O’s razor often comes up when the data don’t settle truth/falsehood or acceptance/rejection.
• reductionism: when O’s razor has authority, it does so because it reflects some other, more fundamental, desideratum.
[But there isn’t a single global justification.]
• two questions: When parsimony has a precise meaning, we can investigate: What are its presuppositions? What suffices to justify it?

A curiosity: in Reichenbach’s argument, to get a difference in likelihood, the hypotheses should not specify the states of the causes.
[Figure: Common Cause model (C with branches p1 to E1 and p2 to E2) and Separate Causes model (C1 with branch p1 to E1, C2 with branch p2 to E2).]

Example #0
Task: Infer the character state of the MRCA of species that all exhibit the same state of a dichotomous character.
[Figure: tips 1, 1, …, 1, 1; ancestor A=?]
The MP inference is that A=1. When is A=1 the ML inference?
Answer: when lineages have finite duration and the process is Markovian. It doesn’t matter whether selection or drift is the process at work.
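The answer to Example #0 can be checked numerically with a small Python sketch. It rests on assumptions that go beyond the slides: a two-state continuous-time Markov model with rate u for 0 → 1 and rate v for 1 → 0, lineages that evolve independently from the ancestor A, “drift” represented by u = v and “selection” by u ≠ v, and arbitrary branch durations. All names and values are hypothetical.

```python
import math

# Sketch only: a two-state continuous-time Markov model is assumed here;
# u is the 0 -> 1 rate, v is the 1 -> 0 rate, t is the branch duration.

def p_to_1(start, u, v, t):
    """Pr(tip = 1 | ancestor = start) after a branch of duration t."""
    stationary = u / (u + v)           # long-run probability of state 1
    decay = math.exp(-(u + v) * t)     # how much the start state still matters
    if start == 1:
        return stationary + (1 - stationary) * decay
    return stationary * (1 - decay)


def likelihood_all_ones(start, u, v, branch_times):
    """Pr(every tip = 1 | ancestor = start), lineages assumed independent."""
    return math.prod(p_to_1(start, u, v, t) for t in branch_times)


branch_times = [1.0, 2.5, 4.0]  # hypothetical finite durations

scenarios = {
    "drift (u = v)": (0.5, 0.5),
    "selection toward 1": (2.0, 0.2),
    "selection toward 0": (0.2, 2.0),
}

for label, (u, v) in scenarios.items():
    lik_a1 = likelihood_all_ones(1, u, v, branch_times)
    lik_a0 = likelihood_all_ones(0, u, v, branch_times)
    verdict = "A=1" if lik_a1 > lik_a0 else "A=0"
    print(f"{label}: L(A=1) = {lik_a1:.4g}, L(A=0) = {lik_a0:.4g}, ML picks {verdict}")
```

In this model the per-branch advantage of A=1 over A=0 is exp(-(u + v)t), which is positive for every finite t whatever the rates are. It shrinks toward zero as t grows, which suggests why finite duration matters.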