Lecture 7 Two RVs.pptx

PROBABILITY AND STATISTICS FOR ENGINEERING

Two Random Variables

Hossein Sameti

Department of Computer Engineering

Sharif University of Technology

Introduction

Expressing observations using more than one quantity

- height and weight of each person

- number of people and the total income in a family

Joint Probability Distribution Function

Let X and Y denote two RVs based on a probability model $(\Omega, F, P)$.

Then:

$$P(x_1 < X(\xi) \le x_2) = F_X(x_2) - F_X(x_1) = \int_{x_1}^{x_2} f_X(x)\,dx,$$

$$P(y_1 < Y(\xi) \le y_2) = F_Y(y_2) - F_Y(y_1) = \int_{y_1}^{y_2} f_Y(y)\,dy.$$

What about $P\big((x_1 < X(\xi) \le x_2) \cap (y_1 < Y(\xi) \le y_2)\big)$?

So, the Joint Probability Distribution Function of X and Y is defined to be

$$F_{XY}(x, y) = P\big((X(\xi) \le x) \cap (Y(\xi) \le y)\big) = P(X \le x, Y \le y) \ge 0.$$

Properties(1)

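$$F_{XY}(-\infty, y) = F_{XY}(x, -\infty) = 0$$

$$F_{XY}(+\infty, +\infty) = 1$$

Proof

Since $(X(\xi) \le -\infty, Y(\xi) \le y) \subset (X(\xi) \le -\infty)$,

$$F_{XY}(-\infty, y) \le P(X(\xi) \le -\infty) = 0.$$

Since $(X(\xi) \le +\infty, Y(\xi) \le +\infty) = \Omega$,

$$F_{XY}(+\infty, +\infty) = P(\Omega) = 1.$$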

Properties(2)

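$$P(x_1 < X(\xi) \le x_2, Y(\xi) \le y) = F_{XY}(x_2, y) - F_{XY}(x_1, y).$$

$$P(X(\xi) \le x, y_1 < Y(\xi) \le y_2) = F_{XY}(x, y_2) - F_{XY}(x, y_1).$$

Proof

For $x_2 > x_1$,

$$(X(\xi) \le x_2, Y(\xi) \le y) = (X(\xi) \le x_1, Y(\xi) \le y) \cup (x_1 < X(\xi) \le x_2, Y(\xi) \le y).$$

Since this is a union of mutually exclusive (ME) events,

$$P(X(\xi) \le x_2, Y(\xi) \le y) = P(X(\xi) \le x_1, Y(\xi) \le y) + P(x_1 < X(\xi) \le x_2, Y(\xi) \le y).$$

Proof of the second part is similar.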

Properties(3)

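$$P(x_1 < X(\xi) \le x_2, y_1 < Y(\xi) \le y_2) = F_{XY}(x_2, y_2) - F_{XY}(x_2, y_1) - F_{XY}(x_1, y_2) + F_{XY}(x_1, y_1).$$

Proof

$$(x_1 < X(\xi) \le x_2, Y(\xi) \le y_2) = (x_1 < X(\xi) \le x_2, Y(\xi) \le y_1) \cup (x_1 < X(\xi) \le x_2, y_1 < Y(\xi) \le y_2).$$

These two events are mutually exclusive, so

$$P(x_1 < X(\xi) \le x_2, Y(\xi) \le y_2) = P(x_1 < X(\xi) \le x_2, Y(\xi) \le y_1) + P(x_1 < X(\xi) \le x_2, y_1 < Y(\xi) \le y_2).$$

So, using property 2, we come to the desired result.

[Figure: the rectangle R0 with x1 < x <= x2 and y1 < y <= y2 in the X-Y plane.]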
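The rectangle formula of property 3 can be tried out numerically. The sketch below is only an illustration: the joint distribution function used (that of a density equal to 2 on the triangle 0 < x < y < 1) and the names `joint_cdf` and `rectangle_probability` are assumptions chosen for the demonstration.

```python
def joint_cdf(x, y):
    """Joint distribution function of an illustrative density: 2 on 0 < x < y < 1."""
    x = min(max(x, 0.0), 1.0)  # clip both arguments to the unit square
    y = min(max(y, 0.0), 1.0)
    if x <= y:
        return 2.0 * x * y - x * x
    return y * y  # for x > y the event {X <= x, Y <= y} reduces to {Y <= y}


def rectangle_probability(cdf, x1, x2, y1, y2):
    """P(x1 < X <= x2, y1 < Y <= y2) computed from the joint distribution function."""
    return cdf(x2, y2) - cdf(x2, y1) - cdf(x1, y2) + cdf(x1, y1)


# This rectangle lies entirely inside the triangle, so the probability
# should equal density * area = 2 * 0.3 * 0.3 = 0.18.
p_rect = rectangle_probability(joint_cdf, 0.2, 0.5, 0.6, 0.9)
print(p_rect)
```

The four-term combination adds back the corner term that the two subtractions remove twice.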

Joint Probability Density Function (Joint p.d.f)

By definition, the joint p.d.f of X and Y is given by

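$$f_{XY}(x, y) = \frac{\partial^2 F_{XY}(x, y)}{\partial x\,\partial y}.$$

Hence,

$$F_{XY}(x, y) = \int_{-\infty}^{x} \int_{-\infty}^{y} f_{XY}(u, v)\,dv\,du.$$

So, using the first property,

$$\int_{-\infty}^{+\infty} \int_{-\infty}^{+\infty} f_{XY}(x, y)\,dx\,dy = 1.$$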
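As a quick numerical illustration of the two relations above, the sketch below differentiates a distribution function to estimate the density and integrates a density to check that it has total mass 1. The distribution function F(x, y) = x^2 y^2 on the unit square (with density 4xy) is a hypothetical choice, and all function names are illustrative.

```python
def joint_cdf(x, y):
    """Hypothetical joint distribution function F(x, y) = x^2 * y^2 on the unit square."""
    x = min(max(x, 0.0), 1.0)
    y = min(max(y, 0.0), 1.0)
    return (x * y) ** 2


def joint_pdf_numeric(cdf, x, y, h=1e-3):
    """Central-difference estimate of the mixed partial derivative d^2 F / (dx dy)."""
    return (cdf(x + h, y + h) - cdf(x + h, y - h)
            - cdf(x - h, y + h) + cdf(x - h, y - h)) / (4.0 * h * h)


def integrate_over_unit_square(pdf, n=200):
    """Midpoint-rule double integral of pdf over [0, 1] x [0, 1]."""
    h = 1.0 / n
    return sum(pdf((i + 0.5) * h, (j + 0.5) * h)
               for i in range(n) for j in range(n)) * h * h


# Analytic density at (0.3, 0.7) is 4 * 0.3 * 0.7 = 0.84.
density_estimate = joint_pdf_numeric(joint_cdf, 0.3, 0.7)
total_mass = integrate_over_unit_square(lambda x, y: 4.0 * x * y)
print(density_estimate, total_mass)
```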

Calculating Probability

How to find the probability that (X,Y) belongs to an arbitrary region D?

Using the third property,

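$$P(x < X(\xi) \le x + \Delta x, y < Y(\xi) \le y + \Delta y) = F_{XY}(x + \Delta x, y + \Delta y) - F_{XY}(x, y + \Delta y) - F_{XY}(x + \Delta x, y) + F_{XY}(x, y)$$

$$= \int_{x}^{x + \Delta x} \int_{y}^{y + \Delta y} f_{XY}(u, v)\,dv\,du \approx f_{XY}(x, y)\,\Delta x\,\Delta y.$$

Covering D with non-overlapping differential rectangles and adding up their probabilities gives

$$P((X, Y) \in D) = \iint_{(x, y) \in D} f_{XY}(x, y)\,dx\,dy.$$

[Figure: the region D in the X-Y plane, with a differential rectangle of sides Δx and Δy at (x, y).]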
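The summation over small rectangles can be mimicked directly with a midpoint grid. The following is a rough sketch, not a production integrator; the density (2 on the triangle 0 < x < y < 1), the region D = {x + y <= 1}, and the helper name `probability_in_region` are all assumptions made for illustration.

```python
def joint_pdf(x, y):
    """Illustrative density: 2 on the triangle 0 < x < y < 1, and 0 elsewhere."""
    return 2.0 if 0.0 < x < y < 1.0 else 0.0


def probability_in_region(pdf, in_region, n=400):
    """Sum pdf * (cell area) over the midpoints of an n x n grid on the unit square."""
    h = 1.0 / n
    total = 0.0
    for i in range(n):
        x = (i + 0.5) * h
        for j in range(n):
            y = (j + 0.5) * h
            if in_region(x, y):
                total += pdf(x, y) * h * h
    return total


# D = {(x, y): x + y <= 1}. The part of D inside the triangle has area 1/4,
# so the exact probability is 2 * 1/4 = 1/2; the grid sum is only approximate.
p_region = probability_in_region(joint_pdf, lambda x, y: x + y <= 1.0)
print(p_region)
```

Because the density and the region both have sharp edges, the grid estimate converges only at a rate of about 1/n.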

Marginal Statistics

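The statistics of each individual random variable are called marginal statistics.

$F_X(x)$ is the marginal PDF (probability distribution function) of X,

$f_X(x)$ is the marginal p.d.f (probability density function) of X.

Both can be obtained from the joint p.d.f:

$$f_X(x) = \int_{-\infty}^{+\infty} f_{XY}(x, y)\,dy, \qquad f_Y(y) = \int_{-\infty}^{+\infty} f_{XY}(x, y)\,dx.$$

Proof

$$(X \le x) = (X \le x) \cap (Y \le +\infty)$$

$$F_X(x) = P(X \le x) = P(X \le x, Y \le +\infty) = F_{XY}(x, +\infty).$$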

Marginal Statistics

Proof (continued)

$$F_X(x) = F_{XY}(x, +\infty) = \int_{-\infty}^{x} \left( \int_{-\infty}^{+\infty} f_{XY}(u, y)\,dy \right) du$$

Using the formula for differentiation under integrals, taking the derivative with respect to x we get:

$$f_X(x) = \int_{-\infty}^{+\infty} f_{XY}(x, y)\,dy.$$

Differentiation Under Integrals

Let

$$H(x) = \int_{a(x)}^{b(x)} h(x, y)\,dy.$$

Then:

$$\frac{dH(x)}{dx} = \frac{db(x)}{dx}\,h(x, b) - \frac{da(x)}{dx}\,h(x, a) + \int_{a(x)}^{b(x)} \frac{\partial h(x, y)}{\partial x}\,dy.$$
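The rule can be sanity-checked numerically. In the sketch below the integrand h(x, y) = x y^2 and the limits a(x) = x, b(x) = x^2 are hypothetical choices, not taken from the lecture; the left side is a finite-difference derivative of H and the right side evaluates the three terms of the formula.

```python
def integrate(func, lower, upper, n=2000):
    """Midpoint-rule approximation of the integral of func over [lower, upper]."""
    h = (upper - lower) / n
    return sum(func(lower + (k + 0.5) * h) for k in range(n)) * h


# Hypothetical integrand and limits, chosen only for illustration.
def h_func(x, y):
    return x * y * y


def a(x):
    return x


def b(x):
    return x * x


def H(x):
    return integrate(lambda y: h_func(x, y), a(x), b(x))


def leibniz_rhs(x):
    db_dx = 2.0 * x                     # derivative of b(x) = x^2
    da_dx = 1.0                         # derivative of a(x) = x
    partial_h = lambda y: y * y         # partial derivative of h with respect to x
    return (db_dx * h_func(x, b(x)) - da_dx * h_func(x, a(x))
            + integrate(partial_h, a(x), b(x)))


x0, dx = 1.5, 1e-4
lhs = (H(x0 + dx) - H(x0 - dx)) / (2.0 * dx)   # numerical dH/dx
rhs = leibniz_rhs(x0)
print(lhs, rhs)  # closed form: (7 x^6 - 4 x^3) / 3 = 22.078125 at x = 1.5
```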

Marginal p.d.fs for Discrete r.vs

X and Y are discrete r.vs

Joint p.d.f:

$$p_{ij} = P(X = x_i, Y = y_j)$$

Marginal p.d.fs:

$$P(X = x_i) = \sum_j P(X = x_i, Y = y_j) = \sum_j p_{ij}$$

$$P(Y = y_j) = \sum_i P(X = x_i, Y = y_j) = \sum_i p_{ij}$$

When written in a tabular fashion, to obtain $P(X = x_i)$, one needs to add up all entries in the i-th row.

This suggests the name marginal densities.

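$$
\begin{array}{cccccc|c}
p_{11} & p_{12} & \cdots & p_{1j} & \cdots & p_{1n} & \\
p_{21} & p_{22} & \cdots & p_{2j} & \cdots & p_{2n} & \\
\vdots & \vdots & & \vdots & & \vdots & \\
p_{i1} & p_{i2} & \cdots & p_{ij} & \cdots & p_{in} & \sum_j p_{ij} \\
\vdots & \vdots & & \vdots & & \vdots & \\
p_{m1} & p_{m2} & \cdots & p_{mj} & \cdots & p_{mn} &
\end{array}
$$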

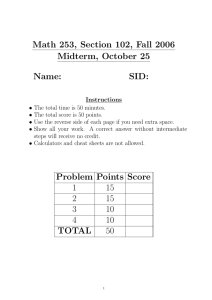
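The row and column sums described above take only a couple of lines of code. The table values below are made up for illustration and the variable names are not from the lecture.

```python
# Hypothetical joint table: joint[i][j] = P(X = x_i, Y = y_j); the values are made up.
joint = [
    [0.10, 0.20, 0.10],
    [0.05, 0.25, 0.30],
]

# P(X = x_i): add up all entries in the i-th row.
p_x = [sum(row) for row in joint]

# P(Y = y_j): add up all entries in the j-th column.
p_y = [sum(column) for column in zip(*joint)]

print(p_x)
print(p_y)
```

Writing these sums in the right and bottom margins of the table reproduces the layout the name refers to.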

Example

Given marginals, it may not be possible to compute the joint p.d.f.
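A small discrete case makes this concrete. The sketch below uses two made-up 2 x 2 joint tables (assumptions for illustration only) that differ from each other yet produce identical marginals, so the marginals cannot tell them apart.

```python
def marginals(joint):
    """Return (row sums, column sums) of a joint probability table."""
    return [sum(row) for row in joint], [sum(col) for col in zip(*joint)]


# Two hypothetical joint tables; the values are chosen only for illustration.
joint_a = [[0.25, 0.25],
           [0.25, 0.25]]
joint_b = [[0.40, 0.10],
           [0.10, 0.40]]

marginals_a = marginals(joint_a)
marginals_b = marginals(joint_b)

# Both tables give P(X = x_i) = 0.5 and P(Y = y_j) = 0.5 for every i, j.
print(marginals_a)
print(marginals_b)
```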

Example

Obtain the marginal p.d.fs $f_X(x)$ and $f_Y(y)$ for:

$$
f_{XY}(x, y) =
\begin{cases}
\text{constant}, & 0 < x < y < 1, \\
0, & \text{otherwise}.
\end{cases}
$$

Example

Solution

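$f_{XY}(x, y)$ is constant (say, equal to c) in the shaded region.

[Figure: the shaded triangle 0 < x < y < 1 in the X-Y plane.]

We have:

$$\int_{-\infty}^{+\infty} \int_{-\infty}^{+\infty} f_{XY}(x, y)\,dx\,dy = 1$$

So:

$$\int_{-\infty}^{+\infty} \int_{-\infty}^{+\infty} f_{XY}(x, y)\,dx\,dy = \int_{y=0}^{1} \left( \int_{x=0}^{y} c\,dx \right) dy = \int_{y=0}^{1} c\,y\,dy = \left. \frac{c\,y^2}{2} \right|_{0}^{1} = \frac{c}{2} = 1.$$

Thus c = 2. Moreover,

$$f_X(x) = \int_{-\infty}^{+\infty} f_{XY}(x, y)\,dy = \int_{y=x}^{1} 2\,dy = 2(1 - x), \quad 0 < x < 1,$$

Similarly,

$$f_Y(y) = \int_{-\infty}^{+\infty} f_{XY}(x, y)\,dx = \int_{x=0}^{y} 2\,dx = 2y, \quad 0 < y < 1.$$

Clearly, in this case, given $f_X(x)$ and $f_Y(y)$, it will not be possible to obtain the original joint p.d.f of this example.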
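The solution above can be cross-checked with a crude numerical integration. This is a sketch under simple assumptions (a midpoint rule on a fixed grid, with illustrative helper names); because the density jumps at the edge of the triangle, the results agree with the closed forms only approximately.

```python
def integrate(func, lower, upper, n=1000):
    """Midpoint-rule approximation of the integral of func over [lower, upper]."""
    h = (upper - lower) / n
    return sum(func(lower + (k + 0.5) * h) for k in range(n)) * h


C = 2.0  # the constant found in the solution


def joint_pdf(x, y):
    return C if 0.0 < x < y < 1.0 else 0.0


def f_x(x):
    """Marginal of X: integrate the joint p.d.f over y."""
    return integrate(lambda y: joint_pdf(x, y), 0.0, 1.0)


def f_y(y):
    """Marginal of Y: integrate the joint p.d.f over x."""
    return integrate(lambda x: joint_pdf(x, y), 0.0, 1.0)


total_mass = integrate(f_y, 0.0, 1.0, n=200)  # should be close to 1
fx_at_03 = f_x(0.3)                           # closed form: 2 * (1 - 0.3) = 1.4
fy_at_06 = f_y(0.6)                           # closed form: 2 * 0.6 = 1.2
print(total_mass, fx_at_03, fy_at_06)
```

One way to see why the marginals do not determine the joint p.d.f here: the product 4(1 - x)y on the unit square has exactly the same two marginals, yet it is a different density from the constant 2 on the triangle.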

Example

X and Y are said to be jointly normal (Gaussian) distributed, if their joint p.d.f

has the following form:

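$$f_{XY}(x, y) = \frac{1}{2\pi\sigma_X\sigma_Y\sqrt{1 - \rho^2}} \exp\left\{ -\frac{1}{2(1 - \rho^2)} \left[ \frac{(x - \mu_X)^2}{\sigma_X^2} - \frac{2\rho(x - \mu_X)(y - \mu_Y)}{\sigma_X\sigma_Y} + \frac{(y - \mu_Y)^2}{\sigma_Y^2} \right] \right\},$$

$$-\infty < x < +\infty, \quad -\infty < y < +\infty, \quad |\rho| < 1.$$

By direct integration,

$$f_X(x) = \int_{-\infty}^{+\infty} f_{XY}(x, y)\,dy = \frac{1}{\sqrt{2\pi\sigma_X^2}}\,e^{-(x - \mu_X)^2 / 2\sigma_X^2} \sim N(\mu_X, \sigma_X^2),$$

$$f_Y(y) = \int_{-\infty}^{+\infty} f_{XY}(x, y)\,dx = \frac{1}{\sqrt{2\pi\sigma_Y^2}}\,e^{-(y - \mu_Y)^2 / 2\sigma_Y^2} \sim N(\mu_Y, \sigma_Y^2),$$

So the above distribution is denoted by $N(\mu_X, \mu_Y, \sigma_X^2, \sigma_Y^2, \rho)$.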
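The direct integration can be imitated numerically. The sketch below integrates the jointly normal p.d.f over y and compares the result with the one-dimensional normal density; the parameter values, the truncation of the infinite range to ten standard deviations, and the function names are assumptions made for illustration.

```python
import math


def bivariate_normal_pdf(x, y, mu_x, mu_y, sigma_x, sigma_y, rho):
    """Jointly normal p.d.f with parameters (mu_x, mu_y, sigma_x^2, sigma_y^2, rho)."""
    zx = (x - mu_x) / sigma_x
    zy = (y - mu_y) / sigma_y
    quad = (zx * zx - 2.0 * rho * zx * zy + zy * zy) / (1.0 - rho * rho)
    norm = 2.0 * math.pi * sigma_x * sigma_y * math.sqrt(1.0 - rho * rho)
    return math.exp(-0.5 * quad) / norm


def normal_pdf(x, mu, sigma):
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / math.sqrt(2.0 * math.pi * sigma * sigma)


def marginal_x(x, mu_x, mu_y, sigma_x, sigma_y, rho, n=4000, width=10.0):
    """Midpoint-rule integral over y, truncated to mu_y +/- width * sigma_y."""
    lower = mu_y - width * sigma_y
    h = 2.0 * width * sigma_y / n
    return sum(bivariate_normal_pdf(x, lower + (k + 0.5) * h,
                                    mu_x, mu_y, sigma_x, sigma_y, rho)
               for k in range(n)) * h


# Hypothetical parameter values.
mu_x, mu_y, sigma_x, sigma_y = 1.0, -2.0, 2.0, 0.5
target = normal_pdf(2.5, mu_x, sigma_x)
marginal_rho_0 = marginal_x(2.5, mu_x, mu_y, sigma_x, sigma_y, rho=0.0)
marginal_rho_08 = marginal_x(2.5, mu_x, mu_y, sigma_x, sigma_y, rho=0.8)
print(target, marginal_rho_0, marginal_rho_08)
```

Both values of rho give the same marginal, which matches the fact that rho does not appear in $N(\mu_X, \sigma_X^2)$.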

Example - continued

So, once again, knowing the marginals alone doesn't tell us everything about the joint p.d.f: the marginals above do not depend on $\rho$.

We will show that the only situation where the marginal p.d.fs can be used to recover the joint p.d.f is when the random variables are statistically independent.

Independence of r.vs

Definition

The random variables X and Y are said to be statistically independent if the events $\{X(\xi) \in A\}$ and $\{Y(\xi) \in B\}$ are independent events for any two Borel sets A and B in the x and y axes respectively.

For the events $\{X(\xi) \le x\}$ and $\{Y(\xi) \le y\}$, if the r.vs X and Y are independent, then

$$P\big((X(\xi) \le x) \cap (Y(\xi) \le y)\big) = P(X(\xi) \le x)\,P(Y(\xi) \le y)$$

i.e.,

$$F_{XY}(x, y) = F_X(x)\,F_Y(y)$$

or equivalently: $f_{XY}(x, y) = f_X(x)\,f_Y(y)$.

If X and Y are discrete-type r.vs then their independence implies

$$P(X = x_i, Y = y_j) = P(X = x_i)\,P(Y = y_j) \quad \text{for all } i, j.$$
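For discrete r.vs the product condition can be checked entry by entry. The sketch below is a minimal illustration: the helper name `is_independent`, the tolerance, and both tables are assumptions made for the example.

```python
def is_independent(joint, tol=1e-12):
    """Check P(X = x_i, Y = y_j) == P(X = x_i) * P(Y = y_j) for every entry."""
    p_x = [sum(row) for row in joint]
    p_y = [sum(col) for col in zip(*joint)]
    return all(abs(joint[i][j] - p_x[i] * p_y[j]) <= tol
               for i in range(len(p_x)) for j in range(len(p_y)))


# Hypothetical tables. The first is the outer product of (0.2, 0.8) and (0.4, 0.6).
independent_table = [[0.08, 0.12],
                     [0.32, 0.48]]
dependent_table = [[0.40, 0.10],
                   [0.10, 0.40]]

print(is_independent(independent_table))
print(is_independent(dependent_table))
```

A tolerance is used instead of exact equality because the row and column sums are floating-point values.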

Independence of r.vs

Procedure to test for independence

Given $f_{XY}(x, y)$, obtain the marginal p.d.fs $f_X(x)$ and $f_Y(y)$, and examine whether the independence condition for discrete/continuous type r.vs is satisfied.

Example: Two jointly Gaussian r.vs as in (7-23) are independent if and only if the fifth parameter $\rho = 0$.

Example

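Given

$$
f_{XY}(x, y) =
\begin{cases}
x\,y^2 e^{-y}, & 0 < y < \infty,\ 0 < x < 1, \\
0, & \text{otherwise}.
\end{cases}
$$

Determine whether X and Y are independent.

Solution

Integrating by parts,

$$f_X(x) = \int_{0}^{\infty} f_{XY}(x, y)\,dy = x \int_{0}^{\infty} y^2 e^{-y}\,dy = x \left( \left. -y^2 e^{-y} \right|_{0}^{\infty} + 2 \int_{0}^{\infty} y\,e^{-y}\,dy \right) = 2x, \quad 0 < x < 1.$$

Similarly

$$f_Y(y) = \int_{0}^{1} f_{XY}(x, y)\,dx = \frac{y^2}{2}\,e^{-y}, \quad 0 < y < \infty.$$

In this case

$$f_{XY}(x, y) = f_X(x)\,f_Y(y),$$

and hence X and Y are independent random variables.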
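The same test procedure can be run numerically on this example. The sketch below computes both marginals with a midpoint rule and compares their product with the joint p.d.f at a few points; truncating the y range at 60 and the choice of test points are assumptions made for illustration, and agreement at sample points is a check rather than a proof.

```python
import math


def joint_pdf(x, y):
    """The joint p.d.f of the example: x * y^2 * exp(-y) on 0 < x < 1, y > 0."""
    return x * y * y * math.exp(-y) if (0.0 < x < 1.0 and y > 0.0) else 0.0


def integrate(func, lower, upper, n=4000):
    """Midpoint-rule approximation of the integral of func over [lower, upper]."""
    h = (upper - lower) / n
    return sum(func(lower + (k + 0.5) * h) for k in range(n)) * h


Y_MAX = 60.0  # assumed cutoff for the infinite range; the tail beyond it is negligible


def f_x(x):
    return integrate(lambda y: joint_pdf(x, y), 0.0, Y_MAX)


def f_y(y):
    return integrate(lambda x: joint_pdf(x, y), 0.0, 1.0)


# Largest difference between the joint p.d.f and the product of the marginals.
max_gap = max(abs(joint_pdf(x, y) - f_x(x) * f_y(y))
              for x in (0.1, 0.5, 0.9) for y in (0.5, 2.0, 6.0))
fx_at_half = f_x(0.5)  # closed form: 2 * 0.5 = 1
print(max_gap, fx_at_half)
```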