Sociology Dept Assessment Report 2013 2014


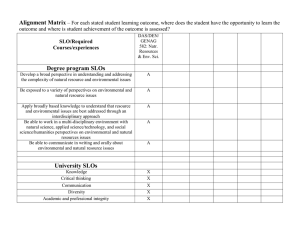

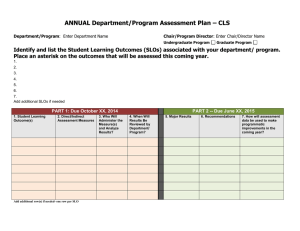

2013-2014 Annual Program Assessment Report

Please submit report to your department chair or program coordinator, the Associate Dean of your College, and to james.solomon@csun.edu, director of assessment and program review, by Tuesday, September 30, 2014. You may submit a separate report for each program which conducted assessment activities.

College: Social and Behavioral Sciences
Department: Sociology
Program: Sociology
Assessment liaison: Lori Campbell

1. Overview of Annual Assessment Project(s). Provide a brief overview of this year’s assessment plan and process.

This was my first year as assessment liaison. I spent the fall semester familiarizing myself with our 5-year plan and previous assessment reports, as well as reading those of other departments in our college. During the spring semester, we made two major changes to our assessment plan. In spring 2014, I proposed to the faculty that we (1) change our assessment strategy, (2) revise our primary assessment instrument and reduce the number of questions, and (3) revise our SLOs to make them more measurable and specific.

Our previous assessment strategy was to choose one lower division course (our “incoming” cohort) and one upper division course (our “outgoing” cohort) to assess with a 50-item objective instrument and then compare learning across those cohorts. The method of choosing which students and courses to assess produced results in which faculty did not have confidence.

To rectify this, we first proposed a revised strategy whereby we assess all incoming and all outgoing (i.e., graduating) students every year. This overcomes the obstacle of trying to find the perfect courses to assess and also produces longitudinal data on student learning. I consulted with faculty and sought their feedback about this revised strategy; no one objected.
Second, we revised the 50-item objective instrument that we use to assess student learning (see attached). Revising the instrument, which covers 4 SLOs (theory, research methods, statistics, and introductory knowledge of sociology), was time-consuming and required much feedback from faculty, but we hope the revised instrument will produce a better understanding of student learning in these core subjects. Approximately 8 faculty members assisted with the revision of the instrument.

Third, I proposed to the faculty that we revise our SLOs to make them more measurable and specific. This process is ongoing and requires more feedback and discussion from the faculty. I hope to complete the revision of the SLOs this academic year (2014-2015).

Finally, we assessed learning in one section of SOC 364 Social Statistics with an objective instrument that directly measures knowledge of statistics.

2. Assessment Buy-In. Describe how your chair and faculty were involved in assessment related activities. Did department meetings include discussion of student learning assessment in a manner that included the department faculty as a whole?

The Sociology Department has an assessment committee of 4 people who are responsible for carrying out assessment. Assessment is a regular item on the agenda for faculty meetings; assessment activities and plans are also discussed at faculty retreats and on the departmental listserv. I received feedback from approximately 50% of the faculty on the decision to change the assessment strategy and revise the assessment instrument. Discussions about student learning occur constantly, both in formal faculty meetings and informally among the faculty. In order for faculty to trust assessment results and use them to make program changes in the future, we needed to change our assessment strategy and revise our assessment instrument.
We sought faculty feedback throughout this process to secure “buy-in.” Approximately 50% of faculty provided feedback on the changes to our assessment strategy and instrument.

3. Student Learning Outcome Assessment Project. Answer items a-f for each SLO assessed this year. If you assessed an additional SLO, copy and paste items a-f below, BEFORE you answer them here, to provide additional reporting space.

3a. Which Student Learning Outcome was measured this year?

SLO2: Students will be able to recall and interpret common statistics used in Sociology utilizing computer printout.
SLO4: Students will demonstrate the ability to collect, process, and interpret research data.

3b. Does this learning outcome align with one or more of the university’s Big 5 Competencies? (Delete any which do not apply)

Quantitative Literacy

3c. What direct and/or indirect instrument(s) were used to measure this SLO?

We used a direct instrument that consisted of an 8-item objective test of students’ knowledge of basic statistical concepts.

3d. Describe the assessment design methodology: For example, was this SLO assessed longitudinally (same students at different points) or was a cross-sectional comparison used (Comparing freshmen with seniors)? If so, describe the assessment points used.

We assessed 42 students in one section of SOC 364 (Social Statistics) at one time point (the end of the semester).

3e. Assessment Results & Analysis of this SLO: Provide a summary of how the results were analyzed and highlight findings from the collected evidence.

There were 8 multiple-choice items on the instrument that assessed basic statistical knowledge. We approached this assessment as a pilot test of the revised assessment instrument. Forty-two students in one section of SOC 364 were assessed using this instrument. The assessment took place online using Moodle; thus, students had access to their books, notes, and other class materials.
Students were given extra credit for completing the assessment to increase their motivation. The average score was 6.28 out of 8 (standard deviation = 1.55). The median, or typical, score was 6 out of 8, which indicates that at least half of the students scored a 6 or better and at least half of the students scored a 6 or lower.

A frequency distribution of the scores is below. This shows that 20 out of 42 students (48%) scored an A or B (7 or 8 out of 8) on the assessment, 11 students (26%) scored a C (6 out of 8), 2 students (5%) scored a D (5 out of 8), and 9 students (21%) scored an F (3 or 4 out of 8). The bar graph below shows the results visually.

The results indicate that a large percentage of students (the 48% who scored an A or B) have a good grasp of basic statistical knowledge, but a sizeable percentage of students lack basic knowledge of statistics even after just completing a statistics course.

3f. Use of Assessment Results of this SLO: Describe how assessment results were used to improve student learning. Were assessment results from previous years or from this year used to make program changes in this reporting year? (Possible changes include: changes to course content/topics covered, changes to course sequence, additions/deletions of courses in program, changes in pedagogy, changes to student advisement, changes to student support services, revisions to program SLOs, new or revised assessment instruments, other academic programmatic changes, and changes to the assessment plan.)

In order for faculty to trust assessment results and use them to make program changes in the future, we needed to change our assessment strategy and revise our assessment instrument. We are beginning data collection for a longitudinal assessment strategy using our revised instrument this semester (fall 2014). We are revising our SLOs this academic year (2014-2015).
Once we have assessment results that faculty trust, I am hopeful that these data will help faculty make other program changes.

4. Assessment of Previous Changes: Present documentation that demonstrates how the previous changes in the program resulted in improved student learning.

N/A

5. Changes to SLOs? Please attach an updated course alignment matrix if any changes were made. (Refer to the Curriculum Alignment Matrix Template, http://www.csun.edu/assessment/forms_guides.html.)

We are in the process of revising our SLOs. We are currently analyzing the SLOs of other departments and discussing what types of changes we should make to our own. This requires extensive discussion with faculty, so the process is ongoing. I anticipate that we will finish revising the SLOs this academic year (2014-2015).

6. Assessment Plan: Evaluate the effectiveness of your 5 year assessment plan. How well did it inform and guide your assessment work this academic year? What process is used to develop/update the 5 year assessment plan? Please attach an updated 5 year assessment plan for 2013-2018. (Refer to Five Year Planning Template, plan B or C, http://www.csun.edu/assessment/forms_guides.html.)

Our 5-year assessment plan is undergoing substantial revision. We have made several changes already, and I anticipate that more changes will be made after the department revises its SLOs. When our SLOs are revised, I will update the 5-year plan.

7. Has someone in your program completed, submitted or published a manuscript which uses or describes assessment activities in your program? Please provide citation or discuss.

No.

8. Other information, assessment or reflective activities or processes not captured above.
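
As a supplementary check on the figures reported under item 3e, the grade-band percentages and the median can be recomputed from the reported counts. The short Python sketch below is illustrative only. The band labels, variable names, and helper functions are assumptions introduced here, not part of the department's instrument or analysis, and it uses only the counts stated in the report (20, 11, 2, and 9 of 42 students).

```python
# Grade-band counts as reported in item 3e, ordered from lowest to highest score.
# The band labels are illustrative names, not taken from the instrument itself.
BAND_COUNTS = [
    ("F (3-4 of 8)", 9),
    ("D (5 of 8)", 2),
    ("C (6 of 8)", 11),
    ("A or B (7-8 of 8)", 20),
]


def band_percentages(band_counts):
    """Return each band's share of all students, rounded to a whole percent."""
    total = sum(n for _, n in band_counts)
    return {band: round(100 * n / total) for band, n in band_counts}


def band_of_rank(band_counts, rank):
    """Return the band holding the student at a 1-based rank (lowest score first)."""
    cumulative = 0
    for band, n in band_counts:
        cumulative += n
        if rank <= cumulative:
            return band
    raise ValueError("rank exceeds the number of students")


total_students = sum(n for _, n in BAND_COUNTS)
percentages = band_percentages(BAND_COUNTS)

for band, n in BAND_COUNTS:
    print(f"{band}: {n} of {total_students} students ({percentages[band]}%)")

# With an even number of students, the median lies between the two middle ranks.
middle_ranks = (total_students // 2, total_students // 2 + 1)
median_bands = {band_of_rank(BAND_COUNTS, r) for r in middle_ranks}
print("Bands containing the two middle scores:", median_bands)
```

Both middle ranks (21 and 22 of 42) fall in the band of students who scored 6 out of 8, which agrees with the reported median of 6, and the rounded percentages match the reported 48%, 26%, 5%, and 21%.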