Data Streams


Data Streams

• Definition: Data arriving continuously, usually just by insertions of new elements. The size of the stream is not known a priori, and may be unbounded.

• Hot research area


Data Streams

• Applications:

– Phone call records in AT&T

– Network Monitoring

– Financial Applications (Stock Quotes)

– Web Applications (Data Clicks)

– Sensor Networks


Continuous Queries

• Mainly used in data stream environments

• Defined once, and run until the user terminates them

• Example: Give me the names of the stocks that increased their value by at least 5% over the last hour
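The stock example can be sketched as a generator that never terminates on its own and emits a result whenever a new quote satisfies the condition. This is a minimal illustration, not a method from the slides: the tuple layout `(timestamp, symbol, price)`, the function name `rising_stocks`, and the choice to compare against the oldest quote still inside the window are all assumptions.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 3600  # "the last hour"
THRESHOLD = 0.05       # "at least 5%"

def rising_stocks(quotes):
    """Yield (timestamp, symbol) whenever a quote is at least 5% above
    the oldest quote of the same symbol still inside the one-hour window.

    `quotes` is an iterable of (timestamp_seconds, symbol, price) tuples
    in arrival order (hypothetical layout)."""
    history = defaultdict(deque)  # symbol -> deque of (timestamp, price)
    for ts, symbol, price in quotes:
        window = history[symbol]
        # Expire quotes that have fallen out of the window.
        while window and window[0][0] < ts - WINDOW_SECONDS:
            window.popleft()
        if window and price >= window[0][1] * (1 + THRESHOLD):
            yield ts, symbol
        window.append((ts, price))
```

The state kept per symbol is bounded by the number of quotes in one hour, which is what makes this particular query feasible over an unbounded stream.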


What is the Problem (1)?

• Q is a selection: then size(A) may be unbounded. Thus, we cannot guarantee we can store it.


What is the Problem (2)?

• Q is a self-join: If we want to provide only NEW results, then we need unlimited storage to guarantee that no duplicates exist in the result


What is the Problem (3)?

• Q contains aggregation: then tuples in A might be deleted by newly observed tuples.

Ex: Select A, sum(B)
From Stream X
Group by A
Having sum(B) > 100

What if B < 0 ?
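The effect of negative B values can be illustrated with a small sketch that recomputes the answer after every arriving tuple. The function name `having_sum_over` and the `(A, B)` tuple layout are assumptions made for this illustration.

```python
from collections import defaultdict

def having_sum_over(stream, threshold=100):
    """After each (a, b) tuple, yield the set of groups a whose
    running sum(b) currently exceeds `threshold`."""
    sums = defaultdict(int)
    for a, b in stream:
        sums[a] += b
        yield {group for group, total in sums.items() if total > threshold}
```

For the stream `("x", 60), ("x", 50), ("y", 200), ("x", -20)` the group `x` enters the answer after the second tuple and leaves it again after the fourth, so a tuple that was already reported has to be retracted.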


What is the Problem (4)?

• What if we can delete tuples in the Stream?

• What if Q contains a blocking operator near the top (example: aggregation)?

• Online Aggregation techniques are useful


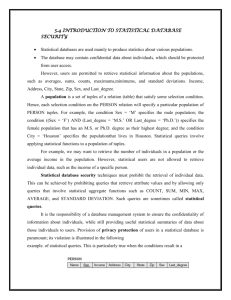

Global Architecture


Related Areas

• Data Approximation: limits the size of Scratch and Store

• Grouping Continuous Queries submitted over the same sources

• Adaptive Query Processing (data sources may be providing elements at varying rates)

• Partial Results: Give partial results to the user (the query may run forever)

• Data Mining: Can the algorithms be modified to use one scan of the data and still provide good results?


Initial Approaches (1)

• Typical Approach: Limit expressiveness of query language to limit size of Store, Scratch

• Alert (1991):

– Triggers on Append-Only (Active) Tables

– Event-condition-action triggers

• Event: Cursor on Active Table

• Condition: From and Where Clause of Rule

• Action: Select Clause of Rule (typically called a function)

– Triggers were expressed as continuous queries

– User was responsible for monitoring the size of tables


Initial Approaches (2)

• Tapestry (1992):

– Introduced Notion of Continuous Queries

– Used subset of SQL (TQL)

– Query Q was converted to the Minimum Monotone Bounding Query $Q_M(t) = \bigcup_{\tau \le t} Q(\tau)$

– Then $Q_M$ was converted to an Incremental query $Q_I$.

– Problems:

• Duplicate tuples were returned

• Aggregation Queries were not supported

• No Outer-Joins allowed
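The semantics of the monotone bounding query and its incremental form can be shown with a deliberately naive sketch: it keeps every result ever returned, which is exactly the storage cost a query rewriting is meant to avoid. The names `run_incremental` and `unanswered`, and the `(message_id, reply_to)` layout, are assumptions for this illustration and are not taken from Tapestry.

```python
def run_incremental(snapshots, query):
    """For each database snapshot, yield only the results that were
    not returned for any earlier snapshot."""
    returned = set()  # union of Q(tau) over all snapshots seen so far
    for db in snapshots:
        current = query(db)      # Q(t), possibly non-monotone
        yield current - returned # incremental part
        returned |= current

def unanswered(db):
    """Non-monotone example query: messages that nobody has replied to.
    `db` is a list of (message_id, reply_to) pairs."""
    ids = {msg_id for msg_id, _ in db}
    replied = {reply_to for _, reply_to in db if reply_to is not None}
    return ids - replied
```

Message 1 is returned while it is unanswered and stays in the bounding result even after message 2 replies to it, which is why the bounding query is monotone although the original query is not.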


Initial Approaches (3)

• Chronicle Data Model (1995):

– Data Streams are referred to as Chronicles (append-only)

– Assumptions:

• A new tuple is not joined with previously seen tuples.

• At most a constant number of tuples from a relation R can join with Chronicle C.

– Achievement: Incremental maintenance of views in time independent of the Chronicle size


Materialized Views

• Work on Self-Maintenance: important to limit size of Scratch. If a view is self-maintainable, any auxiliary storage must occupy bounded space

• Work on Data Expiration: important for knowing when to move elements from Scratch to Throw.


Data Approximation

• Area where most work is being done nowadays

• Problem: We cannot afford O(N) space/time cost per element to solve a problem, but want solutions close to O(poly(log N)).

• Sampling

• Histograms

• Wavelets

• Sketching Techniques


Sampling

• Easiest to implement and use

• Reservoir Sampling: dominant algorithm

• Used for any problem (but with serious limitations, especially in cases of joins)

• Stratified Sampling (sampling data at different rates)

– Reduce variance in data

– Reduce error in Group-By Queries.
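Reservoir sampling keeps a uniform random sample of fixed size k without knowing the stream length in advance. The following is a minimal sketch of the basic one-pass variant; the function name and the optional `rng` parameter are choices made for this illustration.

```python
import random

def reservoir_sample(stream, k, rng=random):
    """Return a uniform random sample of up to k items from `stream`,
    using O(k) memory and a single pass."""
    reservoir = []
    for n, item in enumerate(stream, start=1):
        if n <= k:
            # Fill the reservoir with the first k items.
            reservoir.append(item)
        else:
            # Keep the n-th item with probability k/n, replacing
            # a uniformly chosen slot.
            j = rng.randrange(n)
            if j < k:
                reservoir[j] = item
    return reservoir
```

A call such as `reservoir_sample(range(1000), 10, random.Random(0))` returns 10 distinct elements; a stream shorter than k is returned unchanged.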


Histograms

• V-Optimal:

– Gilbert et al. removed the sorted-input restriction: time/space using sketches in O(poly(B, log N, 1/ε))

• Equi-Width:

– Compute quantiles in O((1/ε) log(εN)) space with precision εN.

• Correlated Aggregates:

– AGG-D{Y : Predicate(X, AGG-I(X)) }

– AGG-D : Count or Sum

– AGG-I : Min, Max or Average

– Reallocate histogram based on arriving tuples and the AGG-I (if we want min, are storing [min, min + ε] in the histogram, and receive a new min, throw away the previous histogram).


Wavelets

• Used for Signal Decomposition (good if measured aggregate follows a signal)

• Matias, Vitter: Incremental Maintenance of top Wavelet coefficients

• Gilbert et al: Point, Range Queries with wavelets
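As a concrete picture of signal decomposition, the sketch below computes an unnormalized Haar wavelet transform. The slides do not name a wavelet basis, so the choice of Haar, the function name, and the power-of-two length requirement are assumptions.

```python
def haar_decompose(signal):
    """Unnormalized Haar decomposition of a sequence whose length is a
    power of two. Returns [overall average] followed by the detail
    coefficients, coarsest level first."""
    coeffs = []
    current = list(signal)
    while len(current) > 1:
        pairs = range(0, len(current), 2)
        averages = [(current[i] + current[i + 1]) / 2 for i in pairs]
        details = [(current[i] - current[i + 1]) / 2 for i in pairs]
        coeffs = details + coeffs  # finer details go to the right
        current = averages
    return current + coeffs
```

Many of the coefficients are zero or small for smooth signals, so keeping only the largest ones gives a compact approximation of the data.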


Sketching Techniques (1)

• Main idea: If getting the exact value of a variable V requires O(n) time, then use approximation:

• Define a random variable R with expected value equal to that of V, and small variance.

• Example (self-join):

– Select 4-wise independent variables $\xi_i$ taking values in {-1, +1} (i = 1, …, dom(A))

– Define $Z = X^2$, $X = \sum_i f(i)\,\xi_i$, f(i): frequency of i-th value

– Result is the median of $s_2$ variables $Y_j$, where each $Y_j$ is the average of $s_1$ independent copies of Z (boosting accuracy, confidence interval)
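The self-join estimator can be sketched as follows. This is an illustration under stated assumptions: values are non-negative integers smaller than the prime `P`, the signs come from a random degree-3 polynomial over that prime field (taking the low bit gives signs that are only approximately unbiased), and the class and method names are hypothetical.

```python
import random
from statistics import mean, median

P = 2_147_483_647  # prime 2^31 - 1; value ids are assumed to be below it

class AmsSketch:
    """Median-of-averages estimator for the self-join size (F2)."""

    def __init__(self, s1, s2, seed=0):
        rng = random.Random(seed)
        self.s1, self.s2 = s1, s2
        # One random cubic polynomial per atomic estimator X.
        self.coeffs = [[rng.randrange(P) for _ in range(4)]
                       for _ in range(s1 * s2)]
        self.x = [0] * (s1 * s2)

    @staticmethod
    def _sign(coeffs, i):
        a, b, c, d = coeffs
        h = (((a * i + b) * i + c) * i + d) % P
        return 1 if h & 1 else -1

    def add(self, i, count=1):
        """Process `count` occurrences of value i."""
        for idx, coeffs in enumerate(self.coeffs):
            self.x[idx] += count * self._sign(coeffs, i)

    def estimate(self):
        z = [x * x for x in self.x]
        groups = [z[j * self.s1:(j + 1) * self.s1] for j in range(self.s2)]
        return median(mean(g) for g in groups)
```

Each update touches all s1·s2 counters, and the memory used is independent of the number of distinct values. Averaging reduces the variance, and the median raises the confidence.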


Sketching Techniques (2)

• Answer Complex Aggregate Queries

• Frequency moments $F_k$, where $F_k = \sum_{i=1}^{d} m_i^k$, capture statistics of data

– $m_i$: the frequency of occurrence for value i

– $F_0$: number of distinct values

– $F_1$: number of total elements

– $F_2$: Gini index (useful for self-joins)

• L1, L2 norms of a vector are computed similarly to F2

• Quantiles (combination of histograms, sketches)
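For reference, the frequency moments can be computed exactly when the whole frequency table fits in memory. The sketch below does that; it needs space proportional to the number of distinct values, which is the cost the sketching techniques are meant to avoid. The function name is an assumption.

```python
from collections import Counter

def frequency_moment(stream, k):
    """Exact F_k: the sum over distinct values of (frequency ** k)."""
    counts = Counter(stream)
    return sum(m ** k for m in counts.values())
```

For the stream `a, b, a, c, a, b` the frequencies are 3, 2 and 1, so F0 = 3, F1 = 6 and F2 = 9 + 4 + 1 = 14.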


Grouping Continuous Queries

• Goal: Group similar queries over data sources, to eliminate common processing and minimize response time and storage needed.

• Niagara (joint work of Wisconsin, Oregon)

• Tukwila (Washington)

• Telegraph (Berkeley)


Niagara (1)

• Supports thousands of queries over XML sources.

• Features:

– Incremental Grouping of Queries

– Supports queries evaluated when data sources change (change-based)

– Supports queries evaluated at specific intervals (timer-based)

• Timer-based queries are harder to group because of overlapping time intervals

• Change-based queries have better response times but waste more resources.


Niagara (2)

• Why Group Queries:

– Share Computation

– Test multiple “Fire” actions together

– Group plans can be kept in memory more easily


Niagara - Key ideas

• Query Expression Signature

• Query Plan (generated by Niagara parser)


Group

• Group signature (common signature of all queries in a plan)

• Group Constant Table (signature constants of all the queries in the group, and destination buffers)

• Group plan: the query plan shared by all queries in the group.


Incremental Grouping

• Create signature of new query. Place in lower parts of signature the most selective predicates.

• Insert new query in the Group which best matches its signature bottom-up

• If no match is found, create new group for this query

• Store any timer information, and the data sources needed for this query
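The grouping steps above can be sketched for the simplest case, a single selection predicate per query. This is a heavily simplified illustration and not Niagara's implementation: here a signature is just `(source, attribute, operator)` with the constant removed, only equality is evaluated, and every name in the code is hypothetical.

```python
from collections import defaultdict

class QueryGroups:
    """Groups selection queries that differ only in their constant."""

    def __init__(self):
        # signature -> constant table {constant: [destination buffers]}
        self.groups = defaultdict(lambda: defaultdict(list))

    def add_query(self, source, attribute, op, constant, destination):
        """Insert a query; return True if a new group had to be created."""
        signature = (source, attribute, op)
        is_new_group = signature not in self.groups
        self.groups[signature][constant].append(destination)
        return is_new_group

    def route(self, source, record):
        """Evaluate all equality groups on `record` with one lookup per
        group and return the matching destination buffers."""
        out = []
        for (src, attribute, op), table in self.groups.items():
            if src == source and op == "=":
                out.extend(table.get(record.get(attribute), []))
        return out
```

With this layout, two queries `symbol = "INTC"` and `symbol = "MSFT"` over the same source share one group, and an arriving record is matched against both with a single constant-table lookup.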


Other Issues (1)

• Why write output to file, and not use pipelining?

– Pipelining would fire all actions, even if none needed to be fired

– Pipelining does not work in timer-based queries where results need to be buffered.

– Split operator may become a bottleneck if output is consumed at widely different rates

– Query plan too complex for the optimizer


Other Issues (2)

• Selection Operator above, or below Joins?

– Below only if selections are very selective

– Else, better to have one join

• Range queries?

– Like equality queries. Save lower, upper bound

– Output in one common sorted file to eliminate duplicates.


Tukwila

• Adaptive Query Processing over autonomous data sources

• Periodically change query plan if output of operators is not satisfactory.

• At the end perform cleanup. Some calculated results may have to be thrown away.


Telegraph

• Adaptive Query Engine based on Eddy Concept

• Queries are over autonomous sources over the internet

• Environment is unpredictable and data rates may differ significantly during query execution. Therefore the query processing SHOULD be adaptive.

• Also can help produce partial results


Eddy

• Eddy: Routes tuples to operators for processing, gets them back and routes them again…


Eddy – Knowing State of Tuples

• Passes Tuples by Reference to Operators (avoids copying)

• When Eddy does not have any more input tuples, it polls the sources for more input.

• Tuples need to be augmented with additional information:

– Ready Bits: Which operators need to be applied

– Done Bits: Which operators have been applied

– Queries Completed: Signals if tuple has been output or rejected by the query

– Completion Mask (per query): To know when a tuple can be output for a query (completion mask & done bits = mask)
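The completion test is a plain bitmask comparison. The sketch below assigns one bit per operator; the operator names and helper functions are made up for the illustration.

```python
# One bit per operator (hypothetical operators).
OP_FILTER_PRICE = 0b001
OP_FILTER_VOLUME = 0b010
OP_JOIN_NEWS = 0b100

def can_output(done_bits, completion_mask):
    """A tuple can be output for a query once every operator in the
    query's completion mask has been applied to it."""
    return (completion_mask & done_bits) == completion_mask

def pending_operators(ready_bits, done_bits):
    """Operators that are ready for the tuple but not yet applied."""
    return ready_bits & ~done_bits
```

A query that needs both filters has mask `0b011`; a tuple with done bits `0b001` is not yet complete for it, while a tuple with done bits `0b111` is complete for any query over these three operators.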


Eddy – Other Details

• Queries with no joins are partitioned per data source (to save space in the bits required)

• Queries with Disjunctions (ORs) are transformed into conjunctive normal form (AND of ORs).

• Range/exact predicates are found in Grouped filter


Joins - SteMs

• SteMs: Multiway-Pipelined Joins

• Double-Pipelined Joins maintain a hash index on each relation.

• When n relations are joined, at least n-2 in-flight indices are needed for intermediate results, even for left-deep trees.

• Previous approach cannot change the query plan without recomputing intermediate indices.


SteMs - Functionality

• Keeps a hash table (or other index) on one data source

• Can have tuples inserted into it (passed from eddy)

• Can be probed. Intermediate tuples (results of join) are returned to eddy, with the appropriate bits set

• Tuples have sequence numbers. A tuple X can join with tuples in SteM M only if the indexed tuples have lower sequence numbers than X (arrived earlier).
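The insert, probe, and sequence-number rules can be sketched for a two-source join. This is an assumption-laden illustration: tuples are dictionaries, both sources join on the same key name, and the loop in `symmetric_join` stands in for the eddy's routing.

```python
from collections import defaultdict

class SteM:
    """Hash index over the tuples of one data source."""

    def __init__(self, key):
        self.key = key
        self.index = defaultdict(list)  # join value -> [(seq, tuple)]

    def insert(self, seq, tup):
        self.index[tup[self.key]].append((seq, tup))

    def probe(self, seq, tup, key):
        """Return matches that arrived strictly before `tup`."""
        return [(tup, other)
                for other_seq, other in self.index.get(tup[key], [])
                if other_seq < seq]

def symmetric_join(arrivals, key="k"):
    """`arrivals` is a list of (source, tuple) with source "R" or "S"."""
    stems = {"R": SteM(key), "S": SteM(key)}
    results = []
    for seq, (source, tup) in enumerate(arrivals):
        stems[source].insert(seq, tup)           # build into own SteM
        other = "S" if source == "R" else "R"
        results.extend(stems[other].probe(seq, tup, key))  # probe the other
    return results
```

Because only the later tuple of a matching pair finds the earlier one, each join result is produced exactly once, even if inserts and probes were interleaved in a different order by the router.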


Telegraph – Routing

• How to route between operators?

– Route to operator with smaller queue

– Route to more selective operators (ticket scheme)


Partial Results - Telegraph

• Idea: When tuple returns to eddy, the tuple may contribute to final result (fields may be missing because of joins not performed yet).

• Present tuple anyway. The missing fields will be filled later.

• Tuple is guaranteed to be in result if referential constraints exist (foreign keys). Not usual in web sources.

• Might be useful to the user to present tuples that do not have matching fields (like in outer join).


Partial Results - Telegraph

• Results presented in tabular representation

• User can:

– Re-arrange columns

– Drill down (add columns) or roll-up (remove columns)

• Assume current area of focus is where user needs more tuples.

• Weight tuples based on:

– Selected columns and their order

– Selected Values for some dimension

• Eddy sorts tuples according to their benefit to the result and schedules them accordingly


Partial Results – Other methods

• Online Aggregation: Present current aggregate with error bounds, and continuously refine results

• Previous approaches involved changing some blocking operators to be able to get partial results

– Join (use symmetric hash-join)

– Nest

– Average

– Except


Data Mining (1)

• General problem: Data mining techniques usually require:

– Entire dataset to be present (in memory or on disk)

– Multiple passes over the data

– Too much time per data element


Data Mining (2)

• New algorithms should require:

– Small constant time per record

– Use of a fixed amount of memory

– Use one scan of data

– Provide a useful model at all times

– Produce a model that would be close to the one produced by multiple passes over the same data if the dataset was available offline.

– Alter the model when generating phenomenon changes over time


Decision Trees

• Input: A set of examples (x, v) where x is a vector of D attributes and v is a discrete class label

• Find at each node the best attribute to split.

• Hoeffding bounds are useful here:

– Consider a variable r with range R

– n independent observations

– Computed average r’ differs from the true average of r by at most ε with probability 1-δ, where:

$$\epsilon = \sqrt{\frac{R^2 \ln(1/\delta)}{2n}}$$
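To get a feel for how quickly the bound tightens, it can be evaluated directly. The function name and argument names below are choices made for this sketch.

```python
import math

def hoeffding_epsilon(value_range, delta, n):
    """Hoeffding bound: with probability 1 - delta, the average of n
    independent observations of a variable with range `value_range`
    is within epsilon of its true mean."""
    return math.sqrt(value_range ** 2 * math.log(1.0 / delta) / (2.0 * n))
```

The bound shrinks with the square root of n: quadrupling the number of observations halves ε, regardless of the distribution of r.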


Hoeffding Tree

• At each node maintain counts for each attribute X, each value Xi of X, and each class

• Let G(Xi) be the heuristic measure used to choose test attributes (for example, Gini index)

• Let A and B be the two attributes with the highest values of G

• If G(A) – G(B) > ε, then with probability 1-δ, A is the correct attribute to split on

• Memory needed = O(dvc) (dimensions, values, classes)

• Can prove that the produced tree is very close to the optimal tree.
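The split test at a leaf can be sketched as a single comparison against the Hoeffding bound. The function below is an illustration only: the name `should_split` and its parameters are assumptions, and the heuristic values G are taken as already computed from the counts at the node.

```python
import math

def should_split(g_best, g_second, value_range, delta, n):
    """Return True if the best attribute beats the runner-up by more
    than the Hoeffding bound after n examples at this leaf."""
    epsilon = math.sqrt(value_range ** 2 * math.log(1.0 / delta) / (2.0 * n))
    return (g_best - g_second) > epsilon
```

With G values 0.30 and 0.25, range 1 and δ = 10⁻⁶, the gap of 0.05 is not significant after 100 examples (ε ≈ 0.26) but is after 10,000 examples (ε ≈ 0.026), so the leaf simply waits for more data before splitting.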


VFDT Tree

• Extension of Hoeffding tree

• Breaks ties more aggressively (if they delay splitting)

• Computes G only after every nmin new tuples arrive (splits are not that frequent anyway)

• Removes the least promising leaf nodes if a memory problem exists (they may be reactivated later)

• Drops attributes from consideration if at the beginning their G value is very small


CVFDT System

• Source producing examples may significantly change behavior.

• In some nodes of the tree, the current splitting attribute may not be the best anymore

• Expand alternate trees. Keep previous one, since at the beginning the alternate tree is small and will probably give worse results

• Periodically use a bunch of samples to evaluate qualities of trees.

• When alternate tree becomes better than the old one, remove the old one.

• CVFDT also has smaller memory requirements than VFDT over sliding window samples.


OLAP

• On-Line Analytic Processing

• Requires processing very large quantities of data to produce result

• Usually updates are done in batch, and sometimes when system is offline

• Organization of data extremely important (query response times, and mainly update times can vary by several orders of magnitude)


Terminology

• Dimension

• Measure

• Aggregate Function

• Hierarchy

• What is the CUBE operator:

– All $2^D$ possible views, if no hierarchies exist

– $\prod_{i=1}^{D} \text{levels}(Dim_i)$ views, if hierarchies exist
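The view counts can be checked by enumeration. In the sketch below the dimension names are made up, and `hierarchy_view_count` assumes that the number of levels of each dimension includes the fully aggregated "ALL" level, so that a dimension without a hierarchy has 2 levels.

```python
from itertools import combinations
from math import prod

def cube_views(dimensions):
    """All group-by combinations of the CUBE operator when no
    hierarchies exist: every subset of the dimensions."""
    return [combo
            for r in range(len(dimensions) + 1)
            for combo in combinations(dimensions, r)]

def hierarchy_view_count(levels_per_dimension):
    """Number of views when each dimension can be grouped at any of
    its levels (level counts assumed to include ALL)."""
    return prod(levels_per_dimension)
```

Three flat dimensions give 8 views, from the empty grouping (the grand total) up to the grouping on all three dimensions; the same number results from `hierarchy_view_count([2, 2, 2])`.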


Cube Representations - MOLAP

• MOLAP: Multi-dimensional array

• Good for dense cubes, as it does not store the attributes of each tuple

• Bad in sparse cubes (high-dimensional cubes)

• Needs no indexing if stored as is

• Typical methods save dense set of dimensions in MOLAP mode, and index remaining dimensions with other methods

• How to store? Chunk in blocks to speed up range queries


Cube Representations - ROLAP

• Store views in relations

• Needs to index produced relations, otherwise queries will be slow.

• Indexes slow down updates

• Issues: If limited space is available, which views to store?

– Store fact table, and smaller views (those that have performed the most aggregation)

– Queries usually specify few dimensions

– These views are more expensive to compute on-the-fly


Research Issues - ROLAP

• How to compute the CUBE

– Compute views from smaller parent

– Share sort orders

– Exhibit locality

• Can the size of the cube be limited?

– Prefix redundancy (Cube Forests)

– Suffix Redundancy (Dwarf)

– Approximation Techniques (Wavelets)


Research Issues - ROLAP

• How to speed up selected classes of Queries: Range-Sum, Count… Different structures for each case (Partial Sum, Dynamic Data Cube)

• How to best represent hierarchical data. Almost no research here.
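The partial-sum idea for range-sum queries can be shown in one dimension. This is a minimal sketch with hypothetical function names; the structures named above generalize it to multiple dimensions.

```python
from itertools import accumulate

def build_prefix_sums(values):
    """prefix[i] holds the sum of values[0..i-1]; prefix[0] is 0."""
    return [0] + list(accumulate(values))

def range_sum(prefix, lo, hi):
    """Sum of values[lo..hi] (inclusive) with two lookups."""
    return prefix[hi + 1] - prefix[lo]
```

Any range sum is answered in constant time, but changing one cell forces every later prefix to be recomputed, which is the update cost that more dynamic structures try to reduce.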
