Gholamreza Safi Using Social Network Analysis Methods for the Prediction of Faulty Components

Using Social Network Analysis Methods for the Prediction of Faulty Components

Gholamreza Safi

List of Contents

• Motivations

• Our Vision

• Goal Models

• Software Architectural Slices(SAS)

• Predicting Fault prone components

• Comparison with related works

• Conclusions

2

Motivations

• Finding errors as early as possible in software lifecycle is important

• Using dependency data, socio-technical analysis

•

•

Considering dependency between software elements

Considering interactions between developers during the lifecycle

[Bird et al 2009]

3

Our Vision

• Provide a facility for considering concerns of roles other than developers who participating in development process

• Not directly like socio-technical based approaches

•

•

Complexity

Some basis is needed to model concerns

• Goal Models and software architectures

4

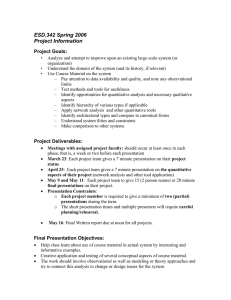

Goal Models

5

Goal models and software architectures

• Software Architecture(SA): Set of principal design decisions

• goal models represent the different way of satisfaction of a high-level goal

•

•

•

They could have impacts 0n SA

Components and connectors is a common representation of SA

So we should show the impact of Goal models on this representation of SA

6

Software Architectural Slices(SAS)

• Software Architectural Slices(SAS): is part of a software architecture (a subset of interacting components and related connectors) that provides the functionality of a leaf level goal of a goal model graph

• An Algorithm is designed to extract SAS of a system, given goal model and the entry point of leaf level goals in the SA

7

Example of SAS

Interface

Layer

Business

Logic

User Interface

User Manager

Timetable

Manager

Event Manager

Agent Manager

Interface

Data

Layer

User Data

Interface

Event Data

Interface

Leaf-level Goal in goal Model

Send request for topic

Decrypt received message

Send Requests for Interests

Send Request for time table

Choose schedule Automatically

Select Participants Explicitly

Collect Timetables by system from

Agents

Slice

User Interface, User Manager, User Data

Interface

User Manager

User Interface, User Manager, User Data

Interface

User Interface, User Manager, User Data

Interface, Time Table Manager

User Interface, User Manager, User Data

Interface, Event Manager, Event Data

Interface

User Interface, User Manager, User Data

Interface

User Interface, Agent Manager Interface

8

Predicting Fault prone components

• Social Networks analysis methods

• Metrics

• Connectivity metrics:

•

• individual nodes and their immediate neighbors

Degree

• Centrality Metrics

• relation between non-immediate neighbor nodes in network

• Closeness

• Betweeness

9

Interface

Layer

Business

Logic

User Interface

User Manager

Timetable

Manager

Event Manager

Agent Manager

Interface

Data

Layer

User Data

Interface

Event Data

Interface

Components

User Interface

User Manager

Timetable Manager 3

4

2

Event Manager

Agent Manager

Interface

User

Interface

Event

Interface

Data

Data

2

2

2

3

Degree Closeness

8/6=1.33

11/6=1.83

9/6=1.5

11/6=1.83

11/6=1.83

14/6=2.33

12/6=2

Betweeness

6+1+1+1=9

½+1/2+1/2=1.5

½+1/3+1+1/3+1/2+

1/2=2.88

1/3+1/3=2/3

1/3+1/3=2/3

0

0

10

Aggregated Metrics based on SAS

• metrics for individual components could not be very useful for test related analysis, since it only provide information for unit level testing

• In a real computation many components collaborate with each other to provide a service or satisfy a goal of the system

• A bug in one of them could have bad impact on all of the other collaborators

Send request for topic 8

Decrypt received message 2

Send

Leaf Level Goals

Interests

Requests for 8

Aggregated

Degree

11 Send Request for time table

Choose

Automatically

Select

Explicitly schedule

Participants

Collect Timetables by system from Agents

13

8

6

33/6

11/6

33/6

Aggregated

Closeness

42/6=7

58/6

33/6

19/6

10.5

1.5

10.5

Aggregated

Betweeness

10.5+2.88=13..33

10.5+2/3=11.16

10.5

9+2/3=9.66

11

Logistic Regression f ( z )

1

1 e

z

( equation 1 )

12

Logistic regression and Architectural slices

• we want to select the beta values for three aggregated metrics z

0

1 x

1

2 x

2

3 x

3

• After this by using f(z) we could find the probability of the event that the corresponding architectural slice encounter at least one error

• The process for making a logistic regression ready for prediction contains two stages:

• Training

• Validation

13

How to train and validate?

• Consider a test suite and based on the number of failed test cases, compute the probability of a slice to being faulty (number of failed test case for that slice/total number of test cases)

• then using metrics, try to find beta values to make f(z) close to computed probability.

• Evaluate the model by actual data.

• Validation measures could help us to determine the quality of our initial model.

• The process of training and validation should be repeated until we reach to a certain level of confidence about our model.

14

Measures for Validation

• Precision: Ratio of (true positives) to (true positives + false positives)

•

•

True positives: number of error prone slices which also determine to be error-prone in the model

False positives: Those which have not error but shown to have errors using approach

• Recall: Ratio of (true positives) to (true positives + false negatives)

• False Negatives: Those which are considered to be error free by mistake using approach

• F Score:

15

Related works

• Zimmerman and Nagappan

•

•

•

Uses dependency data between different part of code

These kind of techniques are accurate

Central components could have more errors

• Bird et al. using a socio-technical network

•

•

• consider the work of developers and the updates that they make to files

Similar to Meneely et al.

The main idea:

• A developer who participated in developing different files could have the same impact on those files

– Make the same sort of faults

16

Comparison with related works

• Our approach has the benefits of dependency data approaches

•

•

•

Dependency between SA components

Dependency between goals and SA

Goal models introduce some privileges

• Compare to Social Network based approaches:

•

•

They only consider simple contribution of developers such as updating a file

Goals and their relations shows the concerns of stakeholders

•

• consider impacts of different stakeholders implicitly other aspects of a developer

– lack of knowledge in using a specific technology

– Strong experience of a developer in using a method or technology

• Augmenting our approach to consider developer interaction is also possible

17

Conclusion

• Introduce metrics based on dependency between components of software architecture

• Introduce aggregate metrics to show impact of goal selection on error prediction using architectural slices

• Prediction using logistic regression

• Training

• Validation

• Compare to existing works

•

•

We could consider other roles than developers

Different aspect of contribution of developers

• Evaluations

18

Reference

• T. Zimmermann and N. Nagappan, “Predicting Subsystem

Failures using Dependency Graph Complexities,”

Trollhattan, Sweden: 2007, pp. 227-236.

The 18th IEEE

International Symposium on Software Reliability (ISSRE '07) ,

• A. Meneely, L. Williams, W. Snipes, and J. Osborne, “Predicting failures with developer networks and social network analysis,”

Proceedings of the 16th ACM SIGSOFT International

Symposium on Foundations of software engineering - SIGSOFT

'08/FSE-16 , Atlanta, Georgia: 2008, p. 13.

• C. Bird, N. Nagappan, H. Gall, B. Murphy, and P. Devanbu,

“Putting It All Together: Using Socio-technical Networks to

Predict Failures,” 2009 20th International Symposium on

Software Reliability Engineering , Mysuru, Karnataka, India:

2009, pp. 109-119.

19

20