Systems/Software ICM Workshop Acquisition and Process Issues Working Group



Rick Selby and Rich Turner

Systems/Software ICM Workshop

July 14-17, 2008

Washington DC

Process & Acquisition Participants

● Rick Selby, Northrop Grumman (co-chair)
● Rich Turner, Stevens Institute of Technology (co-chair)
● Steven Wong, Northrop Grumman
● Ernie Gonzalez, SAF
● Ray Madachy, USC
● Matt Rainey, Redstone Huntsville
● Dan Ingold, USC
● Dave Beshore, Aerospace
● Lindsay MacDonald, BAE Systems
● Blake Ireland, Raytheon
● Bill Bail, Mitre
● Barry Boehm, USC
● John Forbes, OSD/SSA
● Carlos Galdamez, Boeing
● Gail Haddock, Aerospace

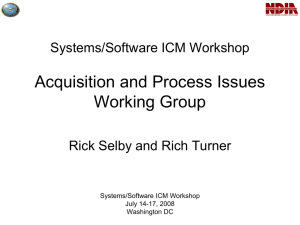

Software/Systems Process and

Acquisition Initiatives

Software/Systems Process & Acquisition

Start-up

Initiatives

teams

Prioritized SW requirements list with cut-points at PDR

Methods for driving behavior for SW risk reduction

100%

Ideal forms of SW evidence

Architecturally significant SW requirements

Engage right SW decision makers

New SW acquisition approaches

1st SW Build at PDR for key components for SW architecture evidence

SW leadership meeting with Chief SW Engineers

SW start-up teams

SW design reviews are risk- not function-centric

Independent SW risk team (non-contractor)

67%

TRL framework for SW

Payoff

Enable parallel, open, competitive environment for SW acquisition

SW invariants that you must have in order to adopt ICM

33%

Learn from CMMI appraisals for evidence-based reviews

Develop approaches to minimize/prevent protests

0%

0%

33%

67%

Ease

100%

Software/Systems Process and Acquisition Initiatives

Initiative

Review how CMMI appraisals do evidence-based reviews

Define the ideal forms of evidence

Develop/enhance TRL framework for SW

Define new acquisition approaches

Define the “SW invariants” that you must have in order to adopt ICM

Enable parallel, open, competitive environment

Develop approaches to minimize/prevent protests

Implement “Start-Up Teams”

Define methods of driving behavior

Convene SW Leadership meeting, including Chief SW Engineers, across all ACAT 1 programs

Ensure “right decision-makers” attend and are involved prior to the PDR

Design review activity around risks rather than functions

Employ independent groups (non-contractor) to identify and investigate the risks

Require SW Builds for key (high-risk) software components to demonstrate functionality, integration, and that the SW people have explored the preliminary design space

Define architecturally significant requirements (which need to be resolved in the first SW release) and map these to risks

At PDR, require a prioritized list of requirements/capabilities/features so that the customer can select the “cut point” based on the degree of value, risk, budget, and other new information

Voting scale: Payoff Low = 1, Med = 3, High = 9; Ease Difficult = 1, Med = 3, Easy = 9. 15 voters; maximum weighted score 135.

[Table: per-initiative Payoff and Ease vote counts, weighted scores, and percentages]

Ideal Program PDR (pre-Milestone B)

● Attendees
– “Right decision-makers” attend and are involved prior to the PDR [P 12, 3, 0] [E 0, 1, 2]
– All success-critical stakeholders engaged, including technical warrant holders who will authorize ultimate deployment

● Focus of meeting and method of evaluation
– Focus on risks (vs. functionality) of achieving the desired functionality within the proposed architecture
– Evidence-based review
– The decision making actually occurs before the milestone; how to empower the

● Technical knowledge
– Level the playing field in terms of technical knowledge, such as embedding engineers in the contractor organization and making the acquisition personnel more knowledgeable about SW
– Need government/FFRDC/UARC SW tiger teams that go into and help acquisitions and programs, such as those from the Tri-Service Acquisition Initiative, including Start-Up Teams to help launch new programs

● Risks
– Rather than listing the system “functions”, we list the “risks” at the review [P 10, 4, 0] [E 0, 3, 0]
– Independent groups (non-contractor) identify and investigate the risks [P 9, 5, 0] [E 0, 3, 0]

● Architecture
– At least one SW Build for each key (such as high-risk) software component (maybe CSCI) to demonstrate its functionality and integration, which demonstrates that the SW people have explored the preliminary design space [P 10, 2, 0] [E 3, 0, 0]
– Need to define architecturally significant requirements (by definition, these architecturally significant risks are addressed in the first release) and map these to risks [P 10, 2, 0] [E 0, 0, 3]
• Scalability
• Performance
– If architecturally significant risks are not addressed, the system “will fail”
– Somehow put the architectural baseline in place earlier

● Requirements
– Prioritized list of requirements/capabilities/features from which the customer can select the “cut point” based on the degree of value, risk, budget, and other new information [P 13, 0, 0] [E 1, 1, 1]
• Incorporate some notion of how to change the requirements to reduce risk
– Need to be able to assess whether requirements allocated to configuration items make sense
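The “cut point” selection in the Requirements item can be sketched as follows. The slides do not say how the cut point is computed. This sketch assumes each capability in the prioritized list carries a cost estimate and that the customer funds a contiguous prefix of the list within a budget. The `Capability` class, the `cut_point` function, and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Capability:
    name: str
    cost: float  # hypothetical cost estimate, arbitrary units


def cut_point(prioritized: list[Capability], budget: float) -> int:
    """Return k such that capabilities [0, k) are funded within the budget.

    Assumes the list is already ordered by priority (value vs. risk) and
    that the customer funds a contiguous prefix of it.
    """
    spent = 0.0
    for k, cap in enumerate(prioritized):
        if spent + cap.cost > budget:
            return k  # first capability that no longer fits
        spent += cap.cost
    return len(prioritized)  # everything fits


# Hypothetical prioritized list, highest priority first.
backlog = [
    Capability("Capability 1", 40),
    Capability("Capability 2", 30),
    Capability("Capability 3", 20),
    Capability("Capability 4", 25),
]

k = cut_point(backlog, budget=95)
print("Funded:  ", [c.name for c in backlog[:k]])
print("Deferred:", [c.name for c in backlog[k:]])
```

Re-running `cut_point` with a revised budget, or after reordering the list as new risk information arrives, moves the cut point. In this sketch, that re-selection is the decision left to the customer at PDR.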

Comments on Draft 5000.2 Language [SA 8, 4, SD 3]

● 3.5.10. A [System] Preliminary Design Review[(s)] (PDR[(s)]) shall be conducted for the candidate design(s) to establish the allocated baseline (hardware, software, human/support systems) and underlying architectures and to define a high-confidence design. All system elements (hardware and software) shall be at a level of maturity commensurate with the PDR entry and exit criteria [as defined in the Systems Engineering Plan]. A successful PDR will [provide {independently validated?} evidence that supports] inform requirements trades [decisions]; [substantiates design decisions;] improve[s] cost [, schedule, and performance] estimation; and identify[ies] remaining design, integration, and manufacturing risks. The PDR shall be conducted at the system level and include user representatives [, technical authority,] and associated certification authorities. The PDR Report shall be provided to the MDA at Milestone B {and include recommended requirements trades based upon an assessment of cost, schedule, and performance risk[s]?}.

● Synergy with ICM
– Greater emphasis on risk-driven decisions, evidence, and high-confidence designs

Comments on Draft 5000.2 Language [SA 7, 6, SD 1]

● 3.5.10. A Preliminary Design Review (PDR) shall be conducted for the candidate design(s) to establish the allocated baseline (hardware, software, human/support systems) and underlying architectures and to define a high-confidence design. [At PDR, evidence shall be provided that {independently?} validates that a]All system elements (hardware and software) [are] shall be at a level of maturity commensurate with the PDR entry and exit criteria. A successful PDR will [support] inform requirements trades [decisions]; [substantiate design decisions;] improve cost [, schedule, and performance] estimation[es]; and identify remaining design, integration, and manufacturing risks. The PDR shall be conducted at the system level and include user representatives [, technical authority,] and associated certification authorities. The PDR Report shall be provided to the MDA at Milestone B {and include recommended requirements trades based upon an assessment of cost, schedule, and performance risk[s]?}.

● Synergy with ICM
– Greater emphasis on risk-driven decisions, evidence, and high-confidence designs

Some Quotes for Context Setting

● "The only way we will have large acquisition programs on schedule, within budget, and performing as expected, is for everyone - from Congress down to the suppliers - to all stop lying to each other at the same time."

● "Software's just another specialty discipline and doesn't deserve special attention. Integrating software engineering into the development is the job of the chief system engineer."

● "It takes so long for a program to reach deployment that we are essentially acquiring legacy systems."

● "Spiral process is nothing more than the vee chart rolled up."

● "There is no such thing as an emergent requirement."

● "Evolutionary acquisition is just a ploy to excuse the software guys’ incompetence and let programs spiral forever without having to deliver something."

Some Topics for Discussion: Acquisition and Process

● Quality Factor Tradeoffs
– Integrating hardware and software quality factor evidence planning and preparation guidelines
– Coordinating single-quality-factor IPTs

● Cost and Risk
– Budgeting for systems and software risk mitigation
– Risk-driven earned value management
– Translating shortfalls in feasibility evidence into next-increment risk management plans

● Requirements
– Concurrently engineering vs. allocating system, hardware, software, and human factors requirements
– Methods for dealing with requirements emergence and rapid change

● Competitive Prototyping
– Supporting value-adding continuity of prototype development and evaluation teams

● Topic Specifics
– Synchronizing different-length hardware and software increments
– Early hardware-software integration: hardware surrogates
– Contracting for 3-team developer/V&Ver/next-increment rebaseliner incremental development

Incremental Commitment Life Cycle Process

[Figure: Incremental Commitment Life Cycle Process, showing Stage I: Definition and Stage II: Development and Operations. ©USC-CSSE]

Understanding ICM Model for Software

● Reconciling the milestones
– Where are LCO/LCA/IOC and SRR/PDR/CDR?
– When are the downselects: 3 to 2, 2 to 1?

● How to drive behavior
– RFP language
– Award fee
– Large carrot (sole winner of major program)

● How long does the competitive phase last (ends at Milestone B, ends later, etc.)?

● Create a “whole new contractor role” that gets awarded to the 2-to-1 downselect non-winner
– External evaluators come into reviews (“air dropped”) and have a high entry barrier and limited context to achieve success
– Loss of valuable expertise in the non-winner
– Non-winner becomes the “evaluator” of evidence throughout the program

● What kinds of evidence/prototypes are needed for what kinds of risks?

● Funding
– Who pays for pre- vs. post-2-to-1 downselect (what color of money)?

● How do you use CP to do:
– New approaches for model definition and validation
– Quality attribute trades (non-functional)

Ranked Summary of Initiatives (High to Low)

At PDR, require a prioritized list of requirements/capabilities/features so that the customer can select the “cut point” based on the degree of value, risk, budget, and other new information (score: 117)

Define methods of driving behavior (score: 117)

Ensure “right decision-makers” attend and are involved prior to the PDR (score: 117)

Define the ideal forms of evidence (score: 109)

Convene SW Leadership meeting, including Chief SW Engineers, across all ACAT 1 programs (score: 108)

Define new acquisition approaches (score: 106)

Design review activity around risks rather than functions (score: 102)

Implement “Start-Up Teams” (score: 99)

Employ independent groups (non-contractor) to identify and investigate the risks (score: 96)

Require SW Builds for key (high-risk) software components to demonstrate functionality, integration, and that the SW people have explored the preliminary design space (score: 96)

Define architecturally significant requirements (which need to be resolved in the first SW release) and map these to risks (score: 96)

Develop/enhance TRL framework for SW (score: 81)

Enable parallel, open, competitive environment (score: 69)

Review how CMMI appraisals do evidence-based reviews (score: 30)

Develop approaches to minimize/prevent protests (score: 23)
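The scores in the ranked summary match a simple weighting of each initiative's payoff votes on the Low = 1, Med = 3, High = 9 scale, summed. For example, [P 12, 3, 0] gives 12 × 9 + 3 × 3 = 117. The sketch below assumes that each [P h, m, l] triple on the Issues slides lists the High, Med, and Low vote counts in that order. That ordering is inferred from the totals, not stated on the slides. The function name and the shortened initiative labels are illustrative.

```python
# Payoff weights from the voting scale: High = 9, Med = 3, Low = 1.
# Assumption: each [P h, m, l] triple lists High, Med, Low vote counts.
WEIGHTS = (9, 3, 1)


def payoff_score(votes: tuple[int, int, int]) -> int:
    """Weighted sum of (high, med, low) payoff vote counts."""
    return sum(w * n for w, n in zip(WEIGHTS, votes))


# A subset of the P triples recorded on the Issues slides.
initiatives = {
    "Prioritized requirements list with cut point at PDR": (13, 0, 0),
    "Ensure right decision-makers attend before PDR": (12, 3, 0),
    "Define the ideal forms of evidence": (12, 0, 1),
    "Develop/enhance TRL framework for SW": (7, 6, 0),
    "Enable parallel, open, competitive environment": (6, 4, 3),
    "Develop approaches to minimize/prevent protests": (0, 5, 8),
}

# Sort high to low, as in the ranked summary; sorted() keeps ties in input order.
ranked = sorted(initiatives.items(), key=lambda kv: payoff_score(kv[1]), reverse=True)
for name, votes in ranked:
    print(f"{payoff_score(votes):4d}  {name}")
```

Applied to every P triple on the Issues slides, this weighting reproduces all fifteen listed scores. With 15 voters all rating an initiative High, the maximum is 15 × 9 = 135.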

Issues - 1

● John Young was seeing CP as a way to “get the HW right”
– He did not expect CP to cause all this discussion about SE/SW

● What is the order of buying down risk?

● We already do evidence-based reviews for CMMI appraisals; can we learn from them? [P 1, 4, 9] [E 2, 0, 1]

● How do we change the behavior of both the vendor and acquirer?
– Reviewers now “tune out” when the SW architecture presentation is given because it is hard to “bring it to life”

● ICM ties together goals of reviewers

● Navy currently has a six-gate review system
– Has an emphasis similar to ICM, including both system and software

● ICM has “sufficient levels of vagueness”; it provides opportunity for tailoring, which is positive because it adds flexibility

● How can we figure out the HW-SW touchpoints?

● SW has the inherent value of changeability

Issues - 2

● What are the ideal forms of evidence? [P 12, 0, 1] [E 0, 1, 2]
– Demonstrating is not a complete answer?
– Needs to be a validated demo that addresses

● There are already lots of gates and reviews in place now, but the Army had seven Nunn-McCurdy breaches last year

● The decision makers are not attending the early reviews
– These people are needed, not just surrogates “who just take notes”
– When do you start addressing these issues, and when do you push these issues up the chain?
– The review attendees are “going for the show,” not “to do the review”
– For example, on the early FCS reviews there were many dog-and-pony shows
– No/little talk about risks

● The contractor overwhelms the reviewers in terms of technical knowledge
– Somehow we need to level the playing field in terms of technical knowledge
– Need some form of parallel teams

● Risk: PDRs are currently oriented around functions
– Rather than listing the system “functions”, we list the “risks” at the review
– This gives the reviewers something concrete to focus on
– The “ranked risk list” becomes a first-class document that is at least as important as other design documents

● At the PEO/IWS (Navy), there is an emerging requirement that prior to System PDR, there will have been at least one SW Build for each CSCI to demonstrate its functionality and integration

● We should define the “invariants” that you must have in order to adopt ICM

● We need to make sure that the risks currently being presented are honest/accurate
– The government reviewers somehow identify the risks, and can empower/contract some teams to address these risks
– Independent groups (non-contractor) identify and investigate the risks

● Requirements organization and presentation
– Demonstrate performance-critical functionality
– Need to define architecturally significant requirements and map these to risks
– The first release (“indivisible build 1”) needs to address all architecturally significant risks

● Take a fraction of the predictable overruns (50-100%) and spend it up front to reduce risks
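The slides do not say how the “ranked risk list” is ordered. One common convention, assumed here rather than taken from the workshop, is risk exposure: the probability of an unsatisfactory outcome multiplied by the size of the loss if it occurs. The sketch below uses that convention. The `Risk` class, the `ranked_risk_list` function, the risk descriptions, and all estimates are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    description: str
    probability: float  # estimated probability of the unsatisfactory outcome (0 to 1)
    loss: float         # estimated loss if it occurs, arbitrary units

    @property
    def exposure(self) -> float:
        # Risk exposure = probability x loss
        return self.probability * self.loss


def ranked_risk_list(risks: list[Risk]) -> list[Risk]:
    """Return the risks ordered from highest to lowest exposure."""
    return sorted(risks, key=lambda r: r.exposure, reverse=True)


# Hypothetical risks and estimates, for illustration only.
risks = [
    Risk("Architecture misses performance requirement", 0.4, 8.0),
    Risk("HW-SW integration slips", 0.6, 3.0),
    Risk("Architecture misses scalability requirement", 0.3, 10.0),
]

for r in ranked_risk_list(risks):
    print(f"{r.exposure:5.2f}  {r.description}")
```

Under this convention, reviewers would walk the list from the top at PDR and ask for evidence on each item, rather than walking through system functions.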

Issues - 3

● Need TRL framework for SW? [P 7, 6, 0] [E 0, 2, 1]
– Maybe do not call this framework “TRL” because of confusion with existing HW-centric TRLs
– MDA has SWRLs (software readiness levels) now and it works pretty well
– Navy has ratings for process and functionality, analogous to TRLs
– Interface level readiness too (from IDA workshop April 2008)

● SMC uses Key Decision Points (KDPs) as the major decision milestones, and their KDP-B now occurs after the SW reviews

● Need to change the attitude of the senior acquisition and policy decision makers (above chief engineer level)
– SW illiteracy exists at the highest levels, such as arguments about whether to do SDPs
– Need to re-instate the original language that was proposed for the DoD 5000 revision

● Need to think broadly about new acquisition approaches, such as moving away from fee-on-labor cost-plus contracting vehicles to “new incentive models” [P 11, 2, 1] [E 0, 0, 3]
– Navy sonar systems have a periodic re-bidding approach/process where contractors continually re-bid on new capabilities (“technology refresh cycle”)
– How about moving toward an open source model?
• Boeing made middleware for UAV open source, shared across military contractors
• ELV Atlas-V Ground Control System uses Linux libraries
• Naval open architecture for open systems (including shared code) initiative, including contract terms and licensing
• Domain-based “members-only” open source models
• One inhibitor: how to protect IP that is a discriminator for contractor corporations, including the underlying methods for producing the products

● Have common test beds
– Such as the original Ada validation suite
– Define common test beds that also provide a meeting place and communities to interact
– DDR&E has several development and test bed environments now that enable SBIR teams to develop products
– Development tools as well as systems

● How to address assurance?
– Government has “open access” to the disk farm where all development artifacts (requirements, design, source code, test code, etc.) are stored/developed and therefore can inspect/analyze them

● How to address unknown unknowns
– Making unknown unknowns known

Issues - 4

● Enable parallel, open, competitive environment [P 6, 4, 3] [E 0, 0, 3]

● When acquiring a new system, adopt a members-only open source model:
– Standard middleware (think RT Linux for ELV Atlas-V ground system and now NASA Ares ground)
– Apple-like App Store for developers to develop and sell applications; Google has gadgets (free), Microsoft has gadgets (free), Yahoo has widgets (free)
• Must have some sequence of gates to ensure that SW was “reasonable” / do-no-harm
• Members-only contributors
• SW is “low cost” but need to pay for support
• Multi-tier pricing scheme for execute only, source for customers, source for all
– Acquirers can select/purchase the applications that have the most value
– What are the incentives for contractors to invest in developing these applications?
– Example: ACE/TAO is open source middleware that is being used on Navy SSDS large-deck combat systems
• Enables new potential competitors because of externally known interfaces

● Architecture would need to be able to accommodate this “new thinking”

Issues - 5

● What is the earliest point at which to end Competitive Prototyping?
– Sometime between Milestone A and B

● What is the latest point at which to end Competitive Prototyping?
– You can build 2 or more complete systems by keeping competition going throughout the lifecycle

● You continue the competition until the decision makers (and success-critical stakeholders) have sufficient evidence that the risks are “acceptable enough” to go forward with one contractor
– You can possibly re-open the competition later for some aspects of the program

● The current working assumption is that you can downselect to one contractor at Milestone B

● Can we gain knowledge over time?

● Once a winner is selected, you want to hit the ground running and not lose any time and talent

● How do we minimize/prevent protests? [P 0, 5, 8] [E 0, 0, 3]

● Will the early rounds of prototyping show you enough evidence to justify going with a sole-source award (and therefore avoid protests)?

● Milestone B brings on new requirements and formal briefings to Congress
– Right now, the government declares the budget before Milestone B
– It is very difficult to re-certify programs when you exceed 25% of the original budget
– Most programs that have one Nunn-McCurdy breach have another, because of staff loss, etc.
– Government wants cost and schedule realism

Issues - 6

● Do we need to have multiple Milestone B’s and/or multiple PDRs?

● Start-Up Teams: Recommend that SW Tiger Teams be engaged in the SEP development (which occurs prior to Milestone B because the SEP defines the entry and exit criteria for the PDR that occurs prior to Milestone B). [P 10, 3, 0] [E 3, 0, 0]
– Update the current SEP preparation guide
– Many parts are HW-centric or HW-exclusive
– SWAT Team = external team of experts that “help” PEO prior to Milestone B

● Driving behavior [P 13, 0, 0] [E 0, 3, 0]
– What to define in the RFP, award fee language
– Emphasize usability to government and contractor

● SW Leadership meeting across all ACAT 1 programs, including Chief SW Engineers [P 11, 3, 0] [E 3, 0, 0]
– Include Chief System Engineers, both government and contractor
– Maybe hold this at the NDIA Sys Engr meeting