ICSM Principles III and IV

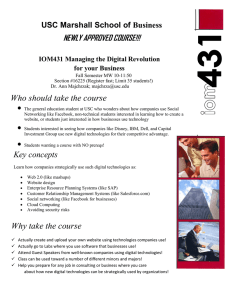


USC CSE: University of Southern California Center for Software Engineering

ICSM Principles 3 and 4
3. Concurrent multidiscipline engineering
4. Evidence- and risk-based decisions
• Barry Boehm, USC CS 510 Lecture, Fall 2015
8/31/2015 © USC-CSSE

Principle 3: Concurrent multidiscipline engineering
• Problems with sequential engineering
• What concurrent engineering is and isn’t
• Failure story: Total Commitment RPV control
• Success story: Incremental Commitment RPV control
• Concurrent hardware-software-human engineering

Problems with Sequential Engineering
• Functionality first (build it now; tune it later)
  – Often fails to satisfy needed performance
• Hardware first
  – Inadequate capacity for software growth
  – Physical component hierarchy vs. software layered services
• Software first
  – Layered services vs. component hierarchy
  – Non-portable COTS products
• Human interface first
  – Unscalable prototypes
• Requirements first
  – Solutions often infeasible

What Concurrent Engineering Is and Isn’t
• Is: Enabling activities to be performed in parallel via synchronization and stabilization
  – ICSM concurrency view and evidence-based synchronization
  – Agile sprints with timeboxing, refactoring, prioritized backlog
  – Kanban pull-based systems evolution
  – Architected agile development and evolution
• Isn’t: Catching up on schedule by starting activities before their prerequisites are completed and validated
  – “The requirements and design aren’t ready, but we need to hurry up and start coding, because there will be a lot of debugging to do”
  – A self-fulfilling prophecy

ICSM Principle 3. Concurrent Multidiscipline Engr.
[Figure: ICSM spiral. Radial dimension: cumulative level of understanding, product and process detail (risk-driven). Angular dimension: concurrent engineering of products and processes. Spiral labels, outermost to innermost: Operation2, Development3, Foundations4; Operation1, Development2, Foundations3; Development1, Foundations2; Foundations; Valuation; Exploration.]
• Risk-based stakeholder commitment review points: opportunities to proceed, skip phases, backtrack, or terminate
  1. Exploration Commitment Review
  2. Valuation Commitment Review
  3. Foundations Commitment Review
  4. Development Commitment Review
  5. Operations1 and Development2 Commitment Review
  6. Operations2 and Development3 Commitment Review
• Evidence-based review content
  – A first-class deliverable
  – Independent expert review
  – Shortfalls are uncertainties and risks
• Risk-based decisions, by risk level: Negligible; Acceptable; High, but Addressable; Too High, Unaddressable

Scalable Remotely Controlled Operations
• Agent-based 4:1 Remotely Piloted Vehicle demo

Total vs. Incremental Commitment – 4:1 RPV
• Total Commitment
  – Agent technology demo and PR: Can do 4:1 for $1B
  – Winning bidder: $800M; PDR in 120 days; 4:1 capability in 40 months
  – PDR: many outstanding risks, undefined interfaces
  – $800M, 40 months: “halfway” through integration and test
  – 1:1 IOC after $3B, 80 months
• Incremental Commitment [with a number of competing teams]
  – $25M, 6 mo. to VCR [4]: may beat 1:2 with agent technology, but not 4:1
  – $75M, 8 mo. to FCR [3]: agent technology may do 1:1; some risks
  – $225M, 10 mo. to DCR [2]: validated architecture, high-risk elements
  – $675M, 18 mo. to IOC [1]: viable 1:1 capability
  – 1:1 IOC after $1B, 42 months

ICSM Activity Levels for Complex Systems
• Concurrency needs to be synchronized and stabilized; see Principle 4

Principle 4. Evidence- and risk-based decisions
• Evidence provided by developer and validated by independent experts that: If the system is built to the specified architecture, it will
  – Satisfy the requirements: capability, interfaces, level of service, and evolution
  – Support the operational concept
  – Be buildable within the budgets and schedules in the plan
  – Generate a viable return on investment
  – Generate satisfactory outcomes for all of the success-critical stakeholders
• All major risks resolved or covered by risk management plans (shortfalls in evidence are uncertainties and risks)
• Serves as basis for stakeholders’ commitment to proceed
Can be used to strengthen current schedule- or event-based reviews

Feasibility Evidence a First-Class ICSM Deliverable
Not just an optional appendix
• General Data Item Description in ICSM book
  – Based on one used on a large program
• Includes plans, resources, monitoring
  – Identification of models, simulations, prototypes, benchmarks, usage scenarios, experience data to be developed or used
  – Earned Value tracking of progress vs. plans
• Serves as way of synchronizing and stabilizing concurrent activities in hump diagram

Failure Story: 1 Second Response Rqt.
[Figure: cost vs. response time (sec), horizontal axis 1 to 5. Labels: $100M, Required Architecture: Custom; many cache processors. $50M, Original Architecture: Modified Client-Server. Original Cost. Original Spec. After Prototyping.]

Problems Avoidable with FED (Feasibility Evidence Description)
• Attempt to validate 1-second response time
  – Commercial system benchmarking and architecture analysis: needs expensive custom solution
  – Prototype: 4-second response time OK 90% of the time
• Negotiate response time ranges
  – 2 seconds desirable
  – 4 seconds acceptable with some 2-second special cases
• Benchmark commercial system add-ons to validate their feasibility
• Present solution and feasibility evidence at anchor point milestone review
  – Result: Acceptable solution with minimal delay

Steps for Developing FED

| Step | Description | Examples/Detail |
|------|-------------|-----------------|
| A | Develop phase work-products/artifacts | For a Development Commitment Review, this would include the system’s operational concept, prototypes, requirements, architecture, life cycle plans, and associated assumptions |
| B | Determine most critical feasibility assurance issues | Issues for which lack of feasibility evidence is program-critical |
| C | Evaluate feasibility assessment options | Cost-effectiveness; necessary tool, data, scenario availability |
| D | Select options, develop feasibility assessment plans | What, who, when, where, how… |
| E | Prepare FED assessment plans and earned value milestones | Example to follow… |
| F | Begin monitoring progress with respect to plans | Also monitor changes to the project, technology, and objectives, and adapt plans |
| G | Prepare evidence-generation enablers | Assessment criteria; parametric models, parameter values, bases of estimate; COTS assessment criteria and plans; benchmarking candidates, test cases; prototypes/simulations, evaluation plans, subjects, and scenarios; instrumentation, data analysis capabilities |
| H | Perform pilot assessments; evaluate and iterate plans and enablers | Short bottom-line summaries and pointers to evidence files are generally sufficient |
| I | Assess readiness for Commitment Review | Shortfalls identified as risks and covered by risk mitigation plans; proceed to Commitment Review if ready |
| J | Hold Commitment Review when ready; adjust plans based on review outcomes | Review of evidence and independent experts’ assessments |

NOTE: “Steps” are denoted by letters rather than numbers to indicate that many are done concurrently.

Success Story: CCPDS-R

| Characteristic | CCPDS-R |
|----------------|---------|
| Domain | Ground based C3 development |
| Size/language | 1.15M SLOC Ada |
| Average number of people | 75 |
| Schedule | 75 months |
| Process/standards | DOD-STD-2167A; iterative development |
| Environment | Rational host; DEC host; DEC VMS targets |
| Contractor | TRW |
| Customer | USAF |
| Performance | Delivered On-budget, On-schedule |

CCPDS-R Evidence-Based Commitment
[Figure: development life cycle timeline, months 0 to 25. Phases: Inception, Elaboration, Construction. Architecture iterations followed by release iterations. Milestones: SSR, IPDR, PDR, CDR. Annotations: Contract award (LCO); Architecture baseline under change control (LCA); Early delivery of “alpha” capability to user.]
Competitive design phase:
• Architectural prototypes
• Planning
• Requirements analysis

Reducing Software Cost-to-Fix: CCPDS-R (Royce, 1998)
• Architecture first
  – Integration during the design phase
  – Demonstration-based evaluation
• Risk Management
• Configuration baseline change metrics:
[Figure: hours per change (axis 10 to 40) vs. project development schedule (axis 15 to 40). Curves: Design Changes; Implementation Changes; Maintenance Changes and ECP’s.]

CCPDS-R and 4 Principles
• Stakeholder Value-Based Guidance
  – Reinterpreted DOD-STD-2167A; users involved
  – Extensive user, maintainer, management interviews, prototypes
  – Award fee flowdown to performers
• Incremental Commitment and Accountability
  – Stage I: Incremental tech. validation, prototyping, architecting
  – Stage II: 3 major-user-organization increments
• Concurrent multidiscipline engineering
  – Small, expert, concurrent-SysE team during Stage I
  – Stage II: 75 parallel programmers to validated interface specs; integration preceded programming
• Evidence and Risk-Driven Decisions
  – High-risk prototyping and distributed OS developed before PDR
  – Performance validated via executing architectural skeleton

Failure Story and 4 Principles
1. Stakeholder value-based guidance
  – No prototyping with representative users
  – No early affordability analysis
2. Incremental commitment and accountability
  – Total commitment to infeasible 1-second response time
3. Concurrent multidiscipline engineering
  – Fixed 1-second response time before evaluating architecture
  – No early prototyping of operational scenarios and usage
4. Evidence and risk-driven decisions
  – No early evidence of ability to satisfy 1-second response time

Meta-Principle 4+: Risk Balancing
• How much (system scoping, planning, prototyping, COTS evaluation, requirements detail, spare capacity, fault tolerance, safety, security, environmental protection, documenting, configuration management, quality assurance, peer reviewing, testing, use of formal methods, and feasibility evidence) is enough?
• Answer: Balancing the risk of doing too little and the risk of doing too much will generally find a middle-course sweet spot that is about the best you can do.
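
The incremental-commitment figures in the Total vs. Incremental Commitment slide can be tallied to show how much has been committed at each review. The sketch below is illustrative only: the stage costs and durations are copied from that slide, while the names `INCREMENTS` and `cumulative_commitment` are made up for this example.

```python
from itertools import accumulate

# (milestone, cost in $M, duration in months), copied from the
# "Incremental Commitment" bullets of the 4:1 RPV slide.
INCREMENTS = [
    ("VCR", 25, 6),
    ("FCR", 75, 8),
    ("DCR", 225, 10),
    ("IOC", 675, 18),
]


def cumulative_commitment(increments):
    """Return (milestone, total $M so far, total months so far) per stage."""
    costs = accumulate(cost for _, cost, _ in increments)
    months = accumulate(duration for _, _, duration in increments)
    return [
        (name, cost, month)
        for (name, _, _), cost, month in zip(increments, costs, months)
    ]


if __name__ == "__main__":
    for name, cost, month in cumulative_commitment(INCREMENTS):
        print(f"{name}: ${cost}M committed after {month} months")
```

The last row reproduces the slide's "1:1 IOC after $1B, 42 months", against $3B and 80 months for the same 1:1 capability under total commitment. The earlier rows show that stakeholders had committed only $25M, $100M, and $325M at the points where the evidence could still have led them to backtrack or terminate.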

Using Risk to Determine “How Much Is Enough”
– testing, planning, specifying, prototyping…
• Risk Exposure: RE = Prob (Loss) * Size (Loss)
  – “Loss”: financial; reputation; future prospects, …
• For multiple sources of loss: $RE = \sum_{\text{sources}} [\text{Prob}(\text{Loss}) \cdot \text{Size}(\text{Loss})]_{\text{source}}$

Example RE Profile: Time to Ship
– Loss due to unacceptable dependability
[Figure: RE = P(L) * S(L) vs. time to ship (amount of testing). Curve labels: Many defects: high P(L); Critical defects: high S(L); Few defects: low P(L); Minor defects: low S(L).]

Example RE Profile: Time to Ship
– Loss due to unacceptable dependability
– Loss due to market share erosion
[Figure: RE = P(L) * S(L) vs. time to ship (amount of testing). Dependability curve labels: Many defects: high P(L); Critical defects: high S(L); Few defects: low P(L); Minor defects: low S(L). Market share curve labels: Few rivals: low P(L); Weak rivals: low S(L); Many rivals: high P(L); Strong rivals: high S(L).]

Example RE Profile: Time to Ship
– Sum of Risk Exposures
[Figure: the same two curves plus their sum, RE = P(L) * S(L) vs. time to ship (amount of testing). The Sweet Spot is marked on the summed curve.]

Comparative RE Profile: Safety-Critical System
[Figure: RE = P(L) * S(L) vs. time to ship (amount of testing). Labels: Higher S(L): defects; High-Q Sweet Spot; Mainstream Sweet Spot.]

Comparative RE Profile: Internet Startup
[Figure: RE = P(L) * S(L) vs. time to ship (amount of testing). Labels: Higher S(L): delays; Low-TTM Sweet Spot; Mainstream Sweet Spot. TTM: Time to Market.]

How Much Testing is Enough? (LiGuo Huang, 2006)
– Early Startup: Risk due to low dependability
– Commercial: Risk due to low dependability
– High Finance: Risk due to low dependability
– Risk due to market share erosion
[Figure: Combined Risk Exposure, RE = P(L) * S(L), on an axis from 0 to 1, vs. RELY level (VL to VH). Curves: Market Share Erosion; Early Startup; Commercial; High Finance. Sweet Spot marked.]

| RELY level | VL | L | N | H | VH |
|------------|----|---|---|---|----|
| COCOMO II: added % test time | 0 | 12 | 22 | 34 | 54 |
| COQUALMO: P(L) | 1.0 | .475 | .24 | .125 | 0.06 |
| Early Startup: S(L) | .33 | .19 | .11 | .06 | .03 |
| Commercial: S(L) | 1.0 | .56 | .32 | .18 | .10 |
| High Finance: S(L) | 3.0 | 1.68 | .96 | .54 | .30 |
| Market Risk: REm | .008 | .027 | .09 | .30 | 1.0 |

How Much Architecting Is Enough? A COCOMO II Analysis
[Figure: percent of time added to overall schedule (axis 0 to 100) vs. percent of time added for architecture and risk resolution (axis 0 to 60), for 10 KSLOC, 100 KSLOC, and 10000 KSLOC projects. Curves: percent of project schedule devoted to initial architecture and risk resolution; added schedule devoted to rework (COCOMO II RESL factor); total % added schedule. A Sweet Spot is marked on the total curves.]
Sweet Spot Drivers:
• Rapid Change: leftward
• High Assurance: rightward
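
The "How Much Testing is Enough?" numbers can be combined in a few lines to see how a sweet spot falls out of summed risk exposures. This is a rough sketch under a stated assumption: the combined exposure at each RELY level is taken to be the dependability term P(L) * S(L) plus the market-risk term REm, following the "Sum of Risk Exposures" slide. The slide does not spell out this combination, and the function and variable names are invented for the example.

```python
# Values copied from the table on the "How Much Testing is Enough?" slide.
RELY_LEVELS = ["VL", "L", "N", "H", "VH"]
P_LOSS = [1.0, 0.475, 0.24, 0.125, 0.06]        # COQUALMO: P(L)
SIZE_LOSS = {                                    # S(L) per business profile
    "Early Startup": [0.33, 0.19, 0.11, 0.06, 0.03],
    "Commercial": [1.0, 0.56, 0.32, 0.18, 0.10],
    "High Finance": [3.0, 1.68, 0.96, 0.54, 0.30],
}
MARKET_RE = [0.008, 0.027, 0.09, 0.30, 1.0]      # Market Risk: REm


def combined_risk_exposure(profile):
    """Assumed combination: RE = P(L) * S(L) + REm at each RELY level."""
    return [
        p * s + m
        for p, s, m in zip(P_LOSS, SIZE_LOSS[profile], MARKET_RE)
    ]


def sweet_spot(profile):
    """Return (RELY level, RE) with the lowest combined risk exposure."""
    exposures = combined_risk_exposure(profile)
    best = min(range(len(exposures)), key=exposures.__getitem__)
    return RELY_LEVELS[best], exposures[best]


if __name__ == "__main__":
    for profile in SIZE_LOSS:
        level, exposure = sweet_spot(profile)
        print(f"{profile}: lowest combined RE {exposure:.4f} at RELY = {level}")
```

With only these five tabulated points and the additive assumption, the minimum lands at Nominal for all three profiles. The profiles differ in how the neighbours compare: Early Startup is nearly tied between Low and Nominal, while High Finance is close between Nominal and High. The continuous curves in the original chart may therefore place the sweet spots between levels.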