Slides (RC)

Predictive Modeling & The Bayes Classifier

Rosa Cowan

April 29, 2008

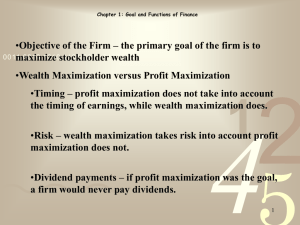

Goal of Predictive Modeling

• To identify class membership of a variable (entity, event, or phenomenon) through known values of other variables (characteristics, features, attributes).

• This means finding a function f such that

$$y = f(x; \theta), \qquad x = \{x_1, x_2, \ldots, x_p\}$$

where $\theta$ is a set of estimated parameters for the model, and

– $y = c \in \{c_1, c_2, \ldots, c_m\}$ for the discrete case

– $y$ is a real number for the continuous case

Example Applications of Predictive Models

• Forecasting peak bloom period for Washington’s cherry blossoms

• Numerous applications in Natural Language Processing, including semantic parsing, named entity extraction, coreference resolution, and machine translation

• Medical diagnosis (MYCIN – identification of bacterial infections)

• Sensor threat identification

• Predicting stock market behavior

• Image processing

• Predicting consumer purchasing behaviors

• Predicting successful movie and record productions

Predictive Modeling Ingredients

1. A model structure

2. A score function

3. An optimization strategy for finding the best model parameters $\theta$

4. Data or expert knowledge for training and testing

2 Types of Predictive Models

• Classifiers or Supervised Classification*: for the case when the class variable C is categorical

• Regression: for the case when C is real-valued

*The remainder of this presentation focuses on Classifiers

Classifier Variants & Example Types

1. Discriminative: work by defining decision boundaries or decision surfaces

   – Nearest Neighbor Methods; K-means

   – Linear & Quadratic Discriminant Methods

   – Perceptrons

   – Support Vector Machines

   – Tree Models (C4.5)

2. Probabilistic Models: work by identifying the most likely class for a given observation by modeling the underlying distributions of the features across classes*

   – Bayes Modeling

   – Naïve Bayes Classifiers

*The remainder of the presentation focuses on Probabilistic Models, with particular attention paid to the Naïve Bayes Classifier

General Bayes Modeling

• Uses Bayes Rule:

$$P(A \mid B) = \frac{P(A \cap B)}{P(B)}; \qquad P(A \cap B) = P(A \mid B)\,P(B)$$

therefore

$$P(B \mid A) = \frac{P(A \mid B)\,P(B)}{P(A)}$$

• For general conditional probability classification modeling, we're interested in

$$P(c_k \mid x_1, x_2, \ldots, x_p) = \frac{P(x_1, x_2, \ldots, x_p \mid c_k)\,P(c_k)}{P(x_1, x_2, \ldots, x_p)} \quad \text{for } 1 \le k \le m$$

$P(c_k \mid x_1, x_2, \ldots, x_p)$ is referred to as the posterior probability, $P(c_k)$ as the prior probability, $P(x_1, x_2, \ldots, x_p \mid c_k)$ as the likelihood, and $P(x_1, x_2, \ldots, x_p)$ as the evidence.
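The posterior formula can be sketched in a few lines of Python. The function name `posterior` and the numbers in the usage line are illustrative assumptions, not values from the slides; the only thing taken from the slide is the rule posterior = likelihood × prior / evidence.

```python
def posterior(priors, likelihoods):
    """Return P(c_k | x) for every class k.

    priors[k]      is P(c_k)
    likelihoods[k] is P(x | c_k) for the observed feature vector x
    """
    # Numerator of Bayes rule for each class: likelihood times prior.
    joint = {c: likelihoods[c] * priors[c] for c in priors}
    # Evidence P(x): the numerators summed over all classes.
    evidence = sum(joint.values())
    return {c: joint[c] / evidence for c in joint}


# Hypothetical two-class problem, chosen only to show the arithmetic.
post = posterior({"c1": 0.3, "c2": 0.7}, {"c1": 0.4, "c2": 0.1})
most_likely = max(post, key=post.get)
```

Because the evidence is the same for every class, a classifier that only needs the most likely class can skip the division and compare the numerators directly.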

Bayes Example

• Let's say we're interested in predicting if a particular student will pass CMSC498K.

• We have data on past student performance. For each student we know:

  – If student's GPA > 3.0 (G)

  – If student had a strong math background (M)

  – If student is a hard worker (H)

  – If student passed or failed course

• A new student comes along with values G = g, M = m, and H = h and wants to know if they will likely pass or fail the course.

$$f(g, m, h) = \frac{P(g, m, h, \text{pass})}{P(g, m, h, \text{fail})}$$

If $f(g, m, h) \ge 1$, then the classifier predicts pass; otherwise fail.

General Bayes Example (Cont.)

| GPA>3 (G) | Math? (M) | Hardworker (H) | Prob given Pass | Prob given Fail |
|---|---|---|---|---|
| 0 | 0 | 0 | 0.01 | 0.28 |
| 0 | 0 | 1 | 0.03 | 0.15 |
| 0 | 1 | 0 | 0.05 | 0.20 |
| 0 | 1 | 1 | 0.08 | 0.14 |
| 1 | 0 | 0 | 0.10 | 0.07 |
| 1 | 0 | 1 | 0.28 | 0.05 |
| 1 | 1 | 0 | 0.15 | 0.08 |
| 1 | 1 | 1 | 0.30 | 0.03 |

Assume P(pass) = 0.5 and P(fail) = 0.5.

Let x = {0, 1, 0}, i.e. {G = 0, M = 1, H = 0}:

$$f(x) = \frac{P(\text{pass})\,P(x \mid \text{pass})}{P(\text{fail})\,P(x \mid \text{fail})} = \frac{0.5 \times 0.05}{0.5 \times 0.20} = 0.25$$

Since $f(x) < 1$, the classifier predicts fail.

Joint probability distributions grow exponentially with the number of features! For binary-valued features, we need $O(2^p)$ joint probabilities for each class.
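The lookup-and-divide step of this example can be sketched as follows. The dictionaries copy the Pass and Fail columns of the slide's table, keyed by (G, M, H); the names `f` and `classify` are illustrative choices, and the threshold of 1 follows the decision rule stated on the previous slide.

```python
# P(G, M, H | class), keyed by (G, M, H), copied from the slide's table.
P_X_GIVEN_PASS = {
    (0, 0, 0): 0.01, (0, 0, 1): 0.03, (0, 1, 0): 0.05, (0, 1, 1): 0.08,
    (1, 0, 0): 0.10, (1, 0, 1): 0.28, (1, 1, 0): 0.15, (1, 1, 1): 0.30,
}
P_X_GIVEN_FAIL = {
    (0, 0, 0): 0.28, (0, 0, 1): 0.15, (0, 1, 0): 0.20, (0, 1, 1): 0.14,
    (1, 0, 0): 0.07, (1, 0, 1): 0.05, (1, 1, 0): 0.08, (1, 1, 1): 0.03,
}
PRIOR = {"pass": 0.5, "fail": 0.5}


def f(x):
    """Ratio P(x, pass) / P(x, fail) for a feature tuple x = (G, M, H)."""
    numerator = PRIOR["pass"] * P_X_GIVEN_PASS[x]
    denominator = PRIOR["fail"] * P_X_GIVEN_FAIL[x]
    return numerator / denominator


def classify(x):
    """Predict 'pass' when the ratio is at least 1, otherwise 'fail'."""
    return "pass" if f(x) >= 1 else "fail"


ratio = f((0, 1, 0))          # the slide's example, about 0.25
label = classify((0, 1, 0))   # 'fail'
```

Each table already needs 2^3 = 8 entries for three binary features, which is the exponential growth the slide warns about.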

Augmented Naïve Bayes Net (Directed Acyclic Graph)

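G and H are conditionally independent of M given pass.

Graph: the class node pass is a parent of G, H, and M; H is also a parent of G.

pass: $P(\text{pass}) = 0.5$

H: $P(H \mid \text{pass}) = 0.5$, $P(H \mid \neg\text{pass}) = 0.2$

G: $P(G \mid H \wedge \text{pass}) = 0.9$, $P(G \mid \neg H \wedge \text{pass}) = 0.85$, $P(G \mid H \wedge \neg\text{pass}) = 0.10$, $P(G \mid \neg H \wedge \neg\text{pass}) = 0.05$

M: $P(M \mid \text{pass}) = 0.6$, $P(M \mid \neg\text{pass}) = 0.10$

$$
\begin{aligned}
P(M, \neg G, \neg H, \text{pass}) &= P(M \mid \neg G \wedge \neg H \wedge \text{pass}) \cdot P(\neg G \wedge \neg H \wedge \text{pass}) \\
&= P(M \mid \text{pass}) \cdot P(\neg G \wedge \neg H \wedge \text{pass}) \\
&= P(M \mid \text{pass}) \cdot P(\neg G \mid \neg H \wedge \text{pass}) \cdot P(\neg H \wedge \text{pass}) \\
&= P(M \mid \text{pass}) \cdot P(\neg G \mid \neg H \wedge \text{pass}) \cdot P(\neg H \mid \text{pass}) \cdot P(\text{pass}) \\
&= 0.6 \times 0.15 \times 0.5 \times 0.5 \\
&= 0.0225
\end{aligned}
$$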
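The chain-rule product on this slide can be reproduced with a small sketch. The probabilities are the slide's; which of the two pass-side entries for G belongs to H and which to not-H is an assumption here, inferred from the factor 0.15 = 1 − 0.85 in the worked product. The helper names `bern` and `joint_pass` are illustrative.

```python
P_PASS = 0.5
P_H_GIVEN_PASS = 0.5                          # P(H | pass)
P_M_GIVEN_PASS = 0.6                          # P(M | pass)
# P(G | H = h, pass), keyed by h. Assumed reading: 0.9 for H, 0.85 for not H.
P_G_GIVEN_H_PASS = {True: 0.9, False: 0.85}


def bern(p_true, value):
    """P(X = value) for a binary X with P(X = True) = p_true."""
    return p_true if value else 1.0 - p_true


def joint_pass(g, m, h):
    """P(M=m, G=g, H=h, pass) under the augmented naive Bayes factorisation."""
    return (bern(P_M_GIVEN_PASS, m)          # M depends only on the class
            * bern(P_G_GIVEN_H_PASS[h], g)   # G depends on H and the class
            * bern(P_H_GIVEN_PASS, h)        # H depends only on the class
            * P_PASS)


# The slide's case: M true, G false, H false. Result is about 0.0225.
value = joint_pass(g=False, m=True, h=False)
```

Summing `joint_pass` over all eight feature combinations gives back P(pass) = 0.5, which is a quick sanity check on the factorisation.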

Naïve Bayes

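• Strong assumption of the conditional independence of all feature variables.

• Feature variables only dependent on class variable.

Graph: the class node pass is the only parent of G, H, and M.

pass: $P(\text{pass}) = 0.5$

G: $P(G \mid \text{pass}) = 0.8$, $P(G \mid \neg\text{pass}) = 0.1$

H: $P(H \mid \text{pass}) = 0.7$, $P(H \mid \neg\text{pass}) = 0.4$

M: $P(M \mid \text{pass}) = 0.6$, $P(M \mid \neg\text{pass}) = 0.7$

$$
\begin{aligned}
P(\text{pass}, \neg G, M, \neg H) &= P(M \mid \neg G \wedge \neg H \wedge \text{pass}) \cdot P(\neg G \wedge \neg H \wedge \text{pass}) \\
&= P(M \mid \text{pass}) \cdot P(\neg G \mid \neg H \wedge \text{pass}) \cdot P(\neg H \wedge \text{pass}) \\
&= P(M \mid \text{pass}) \cdot P(\neg G \mid \text{pass}) \cdot P(\neg H \mid \text{pass}) \cdot P(\text{pass}) \\
&= P(\text{pass}) \prod_{i=1}^{p} P(x_i \mid \text{pass}) \\
&= 0.5 \times 0.6 \times 0.2 \times 0.3 \\
&= 0.018
\end{aligned}
$$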
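A minimal sketch of the naïve Bayes product, using the per-feature probabilities from this slide. It assumes that the slide's "not pass" entries describe the fail class and that P(fail) = 1 − P(pass) = 0.5; the names `naive_joint` and `f_nb` are illustrative.

```python
from math import prod

P_CLASS = {"pass": 0.5, "fail": 0.5}
# P(feature = True | class), read off the slide's graph.
P_TRUE = {
    "pass": {"G": 0.8, "M": 0.6, "H": 0.7},
    "fail": {"G": 0.1, "M": 0.7, "H": 0.4},
}


def naive_joint(c, x):
    """P(c, x) = P(c) * prod_i P(x_i | c) for a dict x of booleans."""
    return P_CLASS[c] * prod(
        p if x[name] else 1.0 - p
        for name, p in P_TRUE[c].items()
    )


def f_nb(x):
    """Naive Bayes ratio P(pass, x) / P(fail, x)."""
    return naive_joint("pass", x) / naive_joint("fail", x)


x = {"G": False, "M": True, "H": False}
p_pass = naive_joint("pass", x)   # about 0.018, as on the slide
ratio = f_nb(x)                   # below 1, so the prediction is fail
```

Only one number per feature per class is stored, so adding a feature adds one entry per class instead of doubling a table.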

Characteristics of Naïve Bayes

• Only requires the estimation of the prior probabilities $P(c_k)$ and p conditional probabilities for each class to be able to answer the full set of queries across classes and features.

• Empirical evidence shows that Naïve Bayes classifiers work remarkably well. The use of a full Bayes (belief) network provides only limited improvements in classification performance.
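To make the saving concrete, the sketch below counts free parameters for binary features under the two models. The counting conventions (one free probability per binary feature per class, and m − 1 free priors) are assumptions of this sketch rather than something stated on the slides.

```python
def full_joint_params(p, m):
    """Free parameters for a full joint table: p binary features, m classes."""
    # Each class needs 2**p probabilities that sum to 1, so 2**p - 1 are free,
    # plus m - 1 free class priors.
    return m * (2 ** p - 1) + (m - 1)


def naive_bayes_params(p, m):
    """Free parameters for naive Bayes: p binary features, m classes."""
    # One P(x_i = 1 | c_k) per feature per class, plus m - 1 free priors.
    return m * p + (m - 1)


for p in (3, 10, 20):
    print(p, full_joint_params(p, 2), naive_bayes_params(p, 2))
```

For the three-feature student example this is 15 versus 7 parameters; at 20 features it is 2,097,151 versus 41.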

Why do Naïve Bayes Classifiers work so well?

• Performance is measured using a 0-1 loss function, which counts the number of incorrect classifications, rather than by how accurately the classifier estimates the posterior probabilities.

• An additional explanation by Harry Zhang claims that the distribution of dependencies among features over the classes affects the accuracy of Naïve Bayes.
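The first point can be illustrated with a small sketch: two sets of posterior estimates that differ a lot numerically can still produce identical 0-1 loss, because only the side of the decision threshold matters. All numbers below are hypothetical, and the 0.5 threshold assumes a two-class problem.

```python
def zero_one_loss(y_true, y_pred):
    """Number of misclassified examples."""
    return sum(t != p for t, p in zip(y_true, y_pred))


def decide(p_pass):
    """Predict 'pass' when the estimated P(pass | x) is at least 0.5."""
    return "pass" if p_pass >= 0.5 else "fail"


# Hypothetical posteriors for three students.
true_labels = ["pass", "fail", "pass"]
accurate = [0.70, 0.30, 0.60]     # close to the true posteriors
distorted = [0.99, 0.01, 0.51]    # poor estimates, same side of 0.5

loss_accurate = zero_one_loss(true_labels, [decide(p) for p in accurate])
loss_distorted = zero_one_loss(true_labels, [decide(p) for p in distorted])
```

Both losses are zero here even though the second set of probability estimates is far from the first.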

Zhang’s Explanation

• Define Local Dependencies: a measure of the dependency between a node and its parents. It is the ratio of the conditional probability of the node given its parents over that of the node without parents.

For a node X in the augmented naive Bayes graph, the dependence derivative is defined as:

$$\mathrm{dd}_{\text{pass}}(X \mid pa(X)) = \frac{P(X \mid pa(X), \text{pass})}{P(X \mid \text{pass})}$$

$$\mathrm{dd}_{\text{fail}}(X \mid pa(X)) = \frac{P(X \mid pa(X), \text{fail})}{P(X \mid \text{fail})}$$

where $pa(X)$ denotes the parents of X.

Define a local dependence derivative ratio for node X as

$$\mathrm{ddr}(X) = \frac{\mathrm{dd}_{\text{pass}}(X \mid pa(X))}{\mathrm{dd}_{\text{fail}}(X \mid pa(X))}$$
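As a rough illustration, the sketch below evaluates these quantities for node G with parent H, using the numbers from the augmented naïve Bayes slide. It assumes the four conditional entries for G are ordered (H, pass), (not H, pass), (H, fail), (not H, fail), and that "not pass" means fail; the function names are illustrative.

```python
def marginal_g(p_g_given_h, p_h):
    """P(G=1 | class) = sum over h of P(G=1 | h, class) * P(h | class)."""
    return p_g_given_h[1] * p_h + p_g_given_h[0] * (1.0 - p_h)


def dd_g(g, h, p_g_given_h, p_h):
    """dd_class(G=g | H=h) = P(g | h, class) / P(g | class)."""
    cond = p_g_given_h[h] if g else 1.0 - p_g_given_h[h]
    marg = marginal_g(p_g_given_h, p_h)
    marg = marg if g else 1.0 - marg
    return cond / marg


# Slide values (assumed ordering, see lead-in), keyed by the value of H.
G_GIVEN_H_PASS, P_H_PASS = {1: 0.9, 0: 0.85}, 0.5
G_GIVEN_H_FAIL, P_H_FAIL = {1: 0.10, 0: 0.05}, 0.2

# Local dependence of G on H for the case G = 0, H = 0.
dd_pass = dd_g(0, 0, G_GIVEN_H_PASS, P_H_PASS)   # 0.15 / 0.125
dd_fail = dd_g(0, 0, G_GIVEN_H_FAIL, P_H_FAIL)   # 0.95 / 0.94
ddr = dd_pass / dd_fail
```

When G does not actually depend on H within a class, the conditional and marginal coincide and the dependence derivative is 1.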

Zhang’s Theorem #1

• Given an augmented naïve Bayes graph and its correspondent naïve Bayes graph on features $X_1, X_2, \ldots, X_p$, assume that $f_b$ and $f_{nb}$ are the Bayes and Naïve Bayes classifiers respectively; then the equation below is true.

$$f_b(x_1, x_2, \ldots, x_p) = f_{nb}(x_1, x_2, \ldots, x_p) \prod_{i=1}^{p} \mathrm{ddr}(x_i)$$

$\prod_{i=1}^{p} \mathrm{ddr}(x_i)$ is called the dependence distribution factor, $DF(x)$.
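The identity can be checked numerically on the three-feature student example. This sketch builds the augmented net from the earlier slide (assuming its "not pass" entries describe the fail class and the ordering H, not H for the entries of G), derives the corresponding naïve Bayes net by marginalising H out of G's table, and compares both sides for every feature combination. All names are illustrative.

```python
from itertools import product

PRIOR = {"pass": 0.5, "fail": 0.5}
P_H = {"pass": 0.5, "fail": 0.2}
P_M = {"pass": 0.6, "fail": 0.1}
P_G_GIVEN_H = {"pass": {1: 0.9, 0: 0.85}, "fail": {1: 0.10, 0: 0.05}}


def bern(p, v):
    """P(X = v) for binary X with P(X = 1) = p."""
    return p if v else 1.0 - p


def p_g(c, g):
    """Marginal P(G = g | c), obtained by summing out H."""
    p1 = sum(P_G_GIVEN_H[c][h] * bern(P_H[c], h) for h in (0, 1))
    return bern(p1, g)


def joint_b(c, g, m, h):
    """Augmented naive Bayes joint: G depends on H and the class."""
    return PRIOR[c] * bern(P_M[c], m) * bern(P_H[c], h) * bern(P_G_GIVEN_H[c][h], g)


def joint_nb(c, g, m, h):
    """Corresponding naive Bayes joint: G depends on the class only."""
    return PRIOR[c] * bern(P_M[c], m) * bern(P_H[c], h) * p_g(c, g)


def df(g, m, h):
    """DF(x): only G has a feature parent, so ddr(M) = ddr(H) = 1."""
    dd = {c: bern(P_G_GIVEN_H[c][h], g) / p_g(c, g) for c in PRIOR}
    return dd["pass"] / dd["fail"]


gaps = []
for g, m, h in product((0, 1), repeat=3):
    f_b = joint_b("pass", g, m, h) / joint_b("fail", g, m, h)
    f_nb = joint_nb("pass", g, m, h) / joint_nb("fail", g, m, h)
    gaps.append(abs(f_b - f_nb * df(g, m, h)) / f_b)   # relative gap
```

Every entry of `gaps` is zero up to floating-point rounding, as the theorem predicts.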

Zhang’s Theorem #2

Given $x = \{x_1, x_2, \ldots, x_p\}$, an $f_b$ classifier is equivalent to an $f_{nb}$ classifier under 0-1 loss (i.e. both result in the same classification), iff when $f_b(x) \ge 1$, $DF(x) \le f_b(x)$; or when $f_b(x) < 1$, $DF(x) > f_b(x)$.

Analysis

• Determine when $f_{nb}$ results in the same classification as $f_b$.

$$f_{nb} = \frac{f_b}{DF(x)}$$

• Clearly when $DF(x) = 1$. There are 3 cases for $DF(x) = 1$:

  1. All the features are independent.

  2. Local dependencies for each node distribute evenly in both classes.

  3. Local dependencies supporting classification in one class are canceled by others supporting the opposite class.

• If $f_b \ge 1$, then $DF(x) \le f_b$ ensures $f_{nb} \ge 1$.

• If $f_b < 1$, then $DF(x) > f_b$ ensures $f_{nb} < 1$.
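The two conditions can be checked against a direct comparison of the decisions. In this sketch `same_classification` applies the threshold of 1 to both ratios, while `theorem2_condition` encodes the condition from Zhang's Theorem #2; both function names and the test grid are illustrative, and DF(x) is assumed to be positive.

```python
def same_classification(f_b, df):
    """True when f_b and f_nb = f_b / DF fall on the same side of 1."""
    f_nb = f_b / df
    return (f_b >= 1) == (f_nb >= 1)


def theorem2_condition(f_b, df):
    """DF <= f_b when f_b >= 1; DF > f_b when f_b < 1."""
    return df <= f_b if f_b >= 1 else df > f_b


# Compare the two predicates on a small grid of hypothetical values.
grid = [(f_b, df)
        for f_b in (0.1, 0.25, 0.5, 1.0, 2.0, 4.0, 10.0)
        for df in (0.05, 0.25, 0.5, 1.0, 2.0, 8.0)]
agree = all(same_classification(f_b, df) == theorem2_condition(f_b, df)
            for f_b, df in grid)
```

With DF(x) = 1 the two ratios are equal, so the decisions always match; larger deviations of DF(x) from 1 are tolerated only when f_b is far from the threshold.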

The End Except For

•Questions

•List of Sources

List of Sources

• Hand, D., Mannila, H., & Smyth, P. (2001). Principles of Data Mining; Chapter 10. Massachusetts: The MIT Press.

• Zhang, H. (2004). The Optimality of Naïve Bayes. Retrieved April 17, 2008, Web site: http://www.cs.unb.ca/profs/hzhang/publications/FLAIRS04ZhangH.pdf

• Moore, A. (2001). Bayes Nets for Representing and Reasoning about Uncertainty. Retrieved April 22, 2008, Web site: http://www.coral-lab.org/~oates/classes/2006/Machine%20Learning/web/bayesnet.pdf

• Naïve Bayes classifier. Retrieved April 10, 2008, Web site: http://en.wikipedia.org/wiki/Naive_Bayes_classifier

• Ruane, Michael (March 30, 2008). Cherry Blossom Forecast gets a Digital Aid. Retrieved April 10, 2008, Web site: http://www.boston.com/news/nation/washington/articles/2008/03/30/cherry_blossom_forecast_gets_a_digital_aid/