
Making Visualization Work

Benjamin B. Bederson

Computer Science Department/UMIACS

Human-Computer Interaction Lab

University of Maryland

Information Visualization

Interactive visual display of abstract information to help users:

Find patterns, outliers and trends

Explore data to build intuition

Develop specific questions to be asked of more traditional mechanisms

Visuals help us think

Provide a frame of reference, a temporary storage area

What’s the Big Deal?

Presentation is everything!

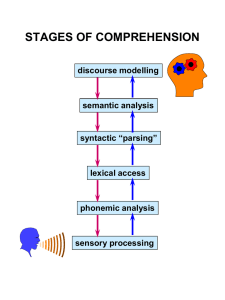

External Cognition

Recognize human limitations

External Cognition

Role of the external world in thinking and reasoning

Excellent Pattern Recognition

How many 3’s?

1281736875613897654698450698560498286762

9809858453822450985645894509845098096585

9091030209905959595772564675050678904567

8845789809821677654872664908560912949686


Pitfalls

Complex navigation and occlusion

Meaningless spatial dimensions (e.g., Self-Organizing Maps)

Unhelpful animation

Inappropriate use of 3D (e.g., PowerPoint, Visual Thesaurus)

Inefficient and confusing use of screen space

Chart Junk

“Inventive, Imaginative, Ingenious, Fanciful!”

=> But is it useful?

Strategy

Show more than fits on the screen:

Scrolling

3D

Dense information design

Overview+detail

2.5D (ZUIs)

Distortion (fisheye)

Careful animation

Goal: Help users stay “in the flow”.

Techniques:

Zoomable User Interfaces (ZUIs)

Fisheye Distortion

Transitional animations

Work closely with users

[Bederson & Shneiderman 2003 - Craft]

My Focus

Themes:

Adults

Children

Mobile Devices

Toolkits

Approaches:

Systems

Applications

Studies

This talk is not about my other work on:

• Voting Systems

• Interaction & Input devices

• Methodology

[Chipman, Bederson, Golbeck Behaviour & Information Technology (submitted)]

[Hutchinson, Bederson et al. - CHI 2003]

[Baudisch, Bederson et al. - Interact 2003]

[Bederson - CHI 2003]

[Gandhi, Kumar, Bederson, Shneiderman - WebVis 2000]

[Stewart, Bederson & Druin - CHI 1999]

[Hightower, Bederson, et al. - Hypertext 1998]

CounterPoint - [Good & Bederson - J. Information Visualization 2002]

PhotoMesa - Zoomable Image Browser

Browse large numbers of images

See relationships among images

Fast preview / detail

Stand-alone, or integrated w/ DB

Local or web-deployed

ZUIs have excellent bandwidth characteristics: a constant amount of information per view

Demo

Also working on:

Automatic thumbnail cropping

Semi-autonomous annotation

[Suh, Ling, Bederson & Jacobs - UIST 2003]

[Bederson - UIST 2001]

[Combs & Bederson - DL 1999]

Commercialized at www.photomesa.com

www.cs.umd.edu/hcil/photomesa

Applying PhotoMesa - Visual Search Results

Shows search results

Integrated with UMD Art History Dept. DB

9,000 images

Installed in slide library

Quantum Treemaps

Variation on treemaps:

space-filling subdivision of a rectangle

Guarantees that each rectangle’s dimensions are an integral multiple of a constant

Lays out images so that all images are the same size and are aligned on a single grid across rectangles

[Bederson, Shneiderman & Wattenberg - TOG 2002]
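To make the single-grid property concrete, here is a minimal Java sketch, assuming square images of side `q` (the quantum) placed row by row inside each rectangle. The class `QuantumGrid` and its parameters are hypothetical, not code from PhotoMesa. If a rectangle's origin is a multiple of `q`, every image corner is too, so images in different rectangles line up on one grid.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch: row-major placement of equal-size images in a quantum rectangle. */
final class QuantumGrid {
    private QuantumGrid() {}

    /**
     * Returns the top-left corner {x, y} of each of n images of size q x q,
     * placed row by row in a rectangle at (x0, y0) that is `cols` cells wide.
     * When x0 and y0 are multiples of q, every corner is too, so images in
     * different rectangles share one global grid.
     */
    static List<int[]> place(int x0, int y0, int cols, int n, int q) {
        if (q <= 0 || cols <= 0 || n < 0) {
            throw new IllegalArgumentException("q and cols must be positive, n non-negative");
        }
        List<int[]> corners = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            int col = i % cols;
            int row = i / cols;
            corners.add(new int[] {x0 + col * q, y0 + row * q});
        }
        return corners;
    }

    public static void main(String[] args) {
        // Two neighboring rectangles with quantum-aligned origins (q = 10).
        for (int[] c : place(0, 0, 3, 7, 10)) System.out.println("A " + c[0] + "," + c[1]);
        for (int[] c : place(30, 20, 2, 3, 10)) System.out.println("B " + c[0] + "," + c[1]);
    }
}
```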

Quantum Treemaps II

Applicable to any treemap algorithm. After rectangles are generated:

1. Expand each rectangle to the next quantum size

2. Expand to match width/height of neighbors

3. Translate to avoid overlap

4. Accommodate larger size within parent
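Step 1 can be sketched in Java under a simple assumption: each side is rounded up to the next multiple of the element size `q`, and rows are added if the rectangle still cannot hold its `n` elements. The class and method names are hypothetical, the published algorithm may choose grid dimensions differently, and steps 2 to 4 are not shown.

```java
/** Hypothetical sketch of step 1: grow a treemap rectangle to whole element cells. */
final class QuantumExpand {
    private QuantumExpand() {}

    /**
     * Rounds a w x h rectangle up to the next multiple of the element size q
     * on each side and, if needed, adds rows until n elements fit.
     * Returns {cols, rows}; the new size is cols * q by rows * q.
     */
    static int[] expand(double w, double h, int n, int q) {
        if (w <= 0 || h <= 0 || n <= 0 || q <= 0) {
            throw new IllegalArgumentException("arguments must be positive");
        }
        int cols = (int) Math.ceil(w / q);
        int rows = (int) Math.ceil(h / q);
        while ((long) cols * rows < n) rows++;   // guarantee room for every element
        return new int[] {cols, rows};
    }

    public static void main(String[] args) {
        int q = 10;                              // element (image) size in pixels, assumed
        int[] g = expand(95, 42, 12, q);
        System.out.println(g[0] + " x " + g[1] + " cells -> " + g[0] * q + " x " + g[1] * q + " px");
    }
}
```

Rounding up (rather than to the nearest multiple) matches the slide's "expand" wording: a rectangle never shrinks, which is why the later steps have to resolve overlaps and growth within the parent.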

[Figure: Average Aspect Ratio and Wasted Space vs. maximum # elements per rectangle (10 to 1000) for the Pivot, Quantum Pivot, Strip and Quantum Strip Treemaps]

Strip Treemaps

Want rectangles to be ordered

The Squarified algorithm creates rows or columns, and inserts rectangles in order of size, so the input order is lost

Strip approach, based on any existing “Squarified” treemap algorithm:

1. Add rectangle to current row (“strip”)

2. If the row’s average aspect ratio increases, start a new row with that rectangle

[Figure: Squarified vs. Strip layouts]
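The two-step strip rule above can be sketched in Java. This is a simplified illustration, assuming horizontal strips filled top to bottom in a `w` x `h` box with areas rescaled to fill it; the names are hypothetical, and any refinements in the published version are not reproduced.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of the strip idea: ordered rectangles in horizontal strips. */
final class StripTreemap {
    private StripTreemap() {}

    /** Average aspect ratio (>= 1) of rectangles with these areas in one strip of width w. */
    static double avgAspect(List<Double> areas, double w) {
        double sum = 0;
        for (double a : areas) sum += a;
        double h = sum / w;                         // strip height
        double total = 0;
        for (double a : areas) {
            double rw = a / h;                      // rectangle width within the strip
            total += Math.max(rw / h, h / rw);
        }
        return total / areas.size();
    }

    /** Lays out positive areas, in input order, in a w x h box; returns {x, y, width, height}. */
    static List<double[]> layout(double[] areas, double w, double h) {
        double sum = 0;
        for (double a : areas) {
            if (a <= 0) throw new IllegalArgumentException("areas must be positive");
            sum += a;
        }
        double scale = (w * h) / sum;               // rescale so the areas fill the box
        List<double[]> out = new ArrayList<>();
        List<Double> strip = new ArrayList<>();
        double y = 0;
        for (double raw : areas) {
            double a = raw * scale;
            List<Double> candidate = new ArrayList<>(strip);
            candidate.add(a);
            // Step 2: if adding worsens the strip's average aspect ratio, close the strip
            if (!strip.isEmpty() && avgAspect(candidate, w) > avgAspect(strip, w)) {
                y = emit(strip, w, y, out);
                strip.clear();
            }
            strip.add(a);                           // Step 1: add to the current strip
        }
        if (!strip.isEmpty()) emit(strip, w, y, out);
        return out;
    }

    /** Places one strip's rectangles left to right at height y; returns the next strip's y. */
    private static double emit(List<Double> strip, double w, double y, List<double[]> out) {
        double sum = 0;
        for (double a : strip) sum += a;
        double sh = sum / w;
        double x = 0;
        for (double a : strip) {
            double rw = a / sh;
            out.add(new double[] {x, y, rw, sh});
            x += rw;
        }
        return y + sh;
    }

    public static void main(String[] args) {
        for (double[] r : layout(new double[] {1, 1, 1, 1}, 2, 2)) {
            System.out.printf("x=%.2f y=%.2f w=%.2f h=%.2f%n", r[0], r[1], r[2], r[3]);
        }
    }
}
```

Because rectangles are only ever appended left to right and strips stacked top to bottom, reading order follows input order, which is what the readability study below tests.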

Strip Treemaps

Squarified treemaps: avg aspect ratio 1.75

Strip treemaps: avg aspect ratio 2.6

User study examined “readability”

Search task with 100 rectangles was 83% faster with strip than squarified (2.5 sec vs. 14.8 sec, 20 subjects)

SpaceTree / TaxonTree - Seeing Hierarchies in Context

Demo

Explore large hierarchies

Gain understanding of relationships among data

Integrate search/browse

TaxonTree is a specialized version for biodiversity

Used in UMD Biodiversity course BSCI 224

Working on “SpaceGraph” to view ontologies

[Grosjean, Plaisant & Bederson - InfoVis 2002]

www.cs.umd.edu/hcil/spacetree

DateLens - Calendars on the Go

Support longer-range tasks

Scale up while maintaining context:

Uses 2D fisheye distortion

Carefully designed interaction

Integrated search with or without text entry

High performance on low-powered devices

[Bederson, Clamage, Czerwinski, Robertson - TOCHI submitted]

www.cs.umd.edu/hcil/datelens

Demo
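A 2D fisheye over a calendar grid can be sketched by distorting rows and columns independently, in the style of a table lens. This Java sketch assumes a simple step distortion: the focused row and column each get a fixed share of the space (`focusShare`, a hypothetical parameter) and the other rows and columns split the rest equally. The slides do not specify DateLens's actual distortion function.

```java
/** Hypothetical table-lens-style 2D fisheye: the focused row and column get extra space. */
final class GridFisheye {
    private GridFisheye() {}

    /**
     * Splits `length` into n cell sizes: the focus cell gets focusShare of the
     * length and the remaining cells divide the rest equally.
     */
    static double[] sizes(int n, int focus, double length, double focusShare) {
        if (n <= 0 || focus < 0 || focus >= n) throw new IllegalArgumentException("bad focus");
        if (focusShare <= 0 || focusShare >= 1) throw new IllegalArgumentException("share must be in (0, 1)");
        double[] s = new double[n];
        if (n == 1) {
            s[0] = length;
            return s;
        }
        double other = length * (1 - focusShare) / (n - 1);
        for (int i = 0; i < n; i++) s[i] = (i == focus) ? length * focusShare : other;
        return s;
    }

    /** Bounds {x, y, w, h} of cell (row, col) when cell (focusRow, focusCol) has the focus. */
    static double[] cell(int rows, int cols, int focusRow, int focusCol,
                         double width, double height, double focusShare, int row, int col) {
        double[] ws = sizes(cols, focusCol, width, focusShare);   // column widths
        double[] hs = sizes(rows, focusRow, height, focusShare);  // row heights
        double x = 0, y = 0;
        for (int c = 0; c < col; c++) x += ws[c];
        for (int r = 0; r < row; r++) y += hs[r];
        return new double[] {x, y, ws[col], hs[row]};
    }

    public static void main(String[] args) {
        // A month view: 5 weeks x 7 days on an assumed 240 x 320 display, focus on week 2, day 3.
        double[] b = cell(5, 7, 2, 3, 240, 320, 0.5, 2, 3);
        System.out.printf("focused day at x=%.0f y=%.0f, size %.0f x %.0f%n", b[0], b[1], b[2], b[3]);
    }
}
```

Every non-focused day stays visible at a reduced size, which is how the view keeps context while showing detail.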

DateLens Studies

Two user studies at Microsoft Research

First with non-PDA users

Second with MSR employees who use PDAs

Similar timing results

Users overall quite enthusiastic

Commercialized at www.datelens.com

[Figure: Interaction between Calendar Type and Task Complexity; average task time (seconds) by condition for DateLens--Simple, PPC--Simple, DateLens--Complex and PPC--Complex]

Fisheye Menus

Problem: Selection from a long list

Traditional approaches:

ArrowBars

ScrollBars

Hierarchies

Solution: Apply fisheye distortion

Shows detail in context

Reduces mouse presses / taps

[Bederson - UIST 2000]
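The core of a fisheye menu is a size function centered on the pointer. This Java sketch assumes a linear mapping from pointer position to the focused item and a flat focus region of `focusRadius` items drawn at full size, with sizes falling off by `step` points per item. All names and parameters are hypothetical, and refinements of the published Fisheye Menus design are not shown.

```java
/** Hypothetical sketch of fisheye-menu sizing: items near the pointer are drawn larger. */
final class FisheyeMenu {
    private FisheyeMenu() {}

    /** Maps a pointer y in [0, menuHeight) linearly to the index of the focused item. */
    static int focusIndex(double y, double menuHeight, int n) {
        int i = (int) Math.floor(y / menuHeight * n);
        return Math.max(0, Math.min(n - 1, i));    // clamp to a valid item
    }

    /**
     * Font size per item: items within focusRadius of the focus get maxSize;
     * farther items shrink by `step` per item, never below minSize.
     */
    static int[] fontSizes(int n, int focus, int focusRadius, int maxSize, int minSize, int step) {
        int[] sizes = new int[n];
        for (int i = 0; i < n; i++) {
            int d = Math.abs(i - focus) - focusRadius;   // distance beyond the focus region
            sizes[i] = (d <= 0) ? maxSize : Math.max(minSize, maxSize - d * step);
        }
        return sizes;
    }

    public static void main(String[] args) {
        int n = 100;                                   // a long list, e.g. 100 items
        int focus = focusIndex(250, 500, n);           // pointer halfway down a 500-px menu
        int[] sizes = fontSizes(n, focus, 2, 12, 4, 2);
        for (int i = focus - 6; i <= focus + 6; i++) {
            System.out.println("item " + i + ": " + sizes[i] + " pt");
        }
    }
}
```

Since every item keeps at least `minSize`, the whole list stays on screen, so selection needs no scrolling clicks or taps.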

Results - Task Times

Tasks were performed faster using Fisheye Menus, F(1,1206)=29.4, p<0.001

25% faster (4.0 vs. 5.3 secs)

Difference more pronounced for longer menus

[Figure: Time vs. Menu Length (20 to 50 items) by menu type (FISHEYE, START); dots/lines show means]

And more pronounced for items near the end of the menus

Working With Children

KidPad - A storytelling/authoring tool

Focus on children’s abilities

Made zooming & linking accessible

Collaboration through Single Display Groupware

[Hourcade, Bederson, Druin - SPE 2003]

[Benford, Bederson, et al. - CHI 2000]

[Boltman, Druin, Bederson et al. - AERA 2002]

www.cs.umd.edu/hcil/kidpad

International Children’s Digital Library

Largest freely available collection of children’s books

23 languages, 260 books

Exploring interface and accessibility

Enhanced version uses PhotoMesa

Over 15,000 unique visitors / month

[Druin, Revelle, Bederson, et al. - JCAL 2003]

[Hourcade, Bederson, et al. - Interacting w/ Comp. 2003]

[Druin, Bederson, et al. - First Monday 2003]

[Revelle, Druin, Platner, Bederson, et al. - J. of Science, Education and Technology 2002]

[Druin, Bederson, et al. - JCDL 2001]

www.icdlbooks.org

Study of Children’s Mouse Use

[Figure: Accuracy % and Target Reentry vs. Target Size (16, 32, 64 pixels) for 4-year-olds, 5-year-olds and adults]

[Hourcade, Bederson, Druin, Guimbretiere - TOCHI submitted]

Piccolo - A Zoomable User Interface Toolkit

For Java programmers (porting to C#)

Offers a structured canvas

Supports 2D object-oriented graphics

layers

hierarchies (transformation, transparency, etc.)

cameras

efficiency mechanisms

=> Extensible and Efficient

www.cs.umd.edu/hcil/piccolo
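To illustrate what "hierarchies (transformation, transparency, etc.)" means in a structured canvas, here is a hypothetical Java mini scene graph; it is not Piccolo's API. Each node has a local translation, uniform scale and transparency, and a node's global position and opacity come from composing these through its ancestors.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical mini scene graph (not Piccolo's API): children inherit transform and transparency. */
final class MiniNode {
    double tx, ty, scale = 1, transparency = 1;   // local translation, uniform scale, opacity
    MiniNode parent;
    final List<MiniNode> children = new ArrayList<>();

    /** Attaches c under this node and returns it for chaining. */
    MiniNode addChild(MiniNode c) {
        c.parent = this;
        children.add(c);
        return c;
    }

    /** Maps a point in this node's local coordinates to global (root) coordinates. */
    double[] localToGlobal(double x, double y) {
        for (MiniNode n = this; n != null; n = n.parent) {
            x = n.tx + n.scale * x;               // scale, then translate, into the parent's frame
            y = n.ty + n.scale * y;
        }
        return new double[] {x, y};
    }

    /** Effective opacity: the product of this node's and all ancestors' transparency. */
    double globalTransparency() {
        double t = 1;
        for (MiniNode n = this; n != null; n = n.parent) t *= n.transparency;
        return t;
    }

    public static void main(String[] args) {
        MiniNode root = new MiniNode();
        MiniNode group = root.addChild(new MiniNode());
        group.tx = 10;
        group.scale = 2;
        group.transparency = 0.5;
        MiniNode leaf = group.addChild(new MiniNode());
        leaf.tx = 5;
        leaf.transparency = 0.5;
        double[] p = leaf.localToGlobal(0, 0);
        System.out.println("leaf origin at (" + p[0] + ", " + p[1] + "), opacity " + leaf.globalTransparency());
    }
}
```

Changing `group` moves and fades everything beneath it at once, which is the main convenience a structured canvas offers over drawing each object independently.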

History Lesson – ZUI Toolkits

First there was Pad++

Designed for prototyping

Used C++ and Tcl/Tk with X or OpenGL graphics

It was useful, but didn’t scale up well

The API was defined in Tcl, and the C++ code was efficient, but messy…

[Bederson & Meyer - SPE 1998]

Then There Was Jazz

We wanted to spend less time on the toolkit

Goals became clear:

Small and easy to learn; usable within an existing GUI framework

Customizable management of painting, picking and event dispatch

Interaction handlers on elements and groups

Non-rectangular, transparent, scaled, translated and rotated graphics

Large numbers of objects in complex scenes

Animated view navigations (pans and zooms)

Multiple views

Fast model manipulation

[Bederson, Meyer & Good - CHI 2000]
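Animated pans and zooms are often implemented by interpolating the camera's view over a short time. This Java sketch shows one common approach, not necessarily the one Jazz uses: the view is a hypothetical `{centerX, centerY, scale}` triple, the center moves linearly, the scale changes geometrically so the zoom looks uniform, and a smoothstep curve gives slow-in/slow-out timing.

```java
/** Hypothetical sketch of an animated pan/zoom between two camera views. */
final class ViewAnimation {
    private ViewAnimation() {}

    /** Slow-in/slow-out easing of t in [0, 1] (a smoothstep curve). */
    static double ease(double t) {
        t = Math.max(0, Math.min(1, t));
        return t * t * (3 - 2 * t);
    }

    /**
     * View at time t between start and end, each {centerX, centerY, scale}
     * with positive scales. The center moves linearly in the eased parameter
     * and the scale geometrically, so equal eased steps give equal zoom factors.
     */
    static double[] at(double[] start, double[] end, double t) {
        double u = ease(t);
        double cx = start[0] + (end[0] - start[0]) * u;
        double cy = start[1] + (end[1] - start[1]) * u;
        double s = start[2] * Math.pow(end[2] / start[2], u);
        return new double[] {cx, cy, s};
    }

    public static void main(String[] args) {
        double[] from = {0, 0, 1};      // centered on the origin at 1x
        double[] to = {100, 50, 4};     // zoomed in 4x on (100, 50)
        for (int frame = 0; frame <= 4; frame++) {
            double[] v = at(from, to, frame / 4.0);
            System.out.printf("t=%.2f center=(%.1f, %.1f) scale=%.2f%n", frame / 4.0, v[0], v[1], v[2]);
        }
    }
}
```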

Inspired by 3D Graphics

We built a “polylithic” scene graph

Different from “monolithic” GUI toolkits

[Figure: class hierarchies and typical run-time structures. Polylithic: Fade and Rectangle are separate Node classes, chained at run time as Root -> Fade -> Rectangle for each object. Monolithic: a combined FadeRectangle class, so Root holds FadeRectangle ... FadeRectangle directly.]

[Bederson, Grosjean, Meyer - TSE submitted]
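The difference between the two styles can be sketched with a fading rectangle. In this hypothetical Java sketch (not the Jazz or Piccolo API), the polylithic version chains a `Fade` node above a `Rectangle` node, while the monolithic version folds both features into one `FadeRectangle` class. Painting returns a description string so the sketch runs without a GUI.

```java
/** Hypothetical sketch: one visual feature (fading) built polylithically and monolithically. */
final class SceneStyles {
    private SceneStyles() {}

    /** Minimal node: painting returns a description instead of drawing pixels. */
    abstract static class Node {
        abstract String paint(double opacity);
    }

    // Polylithic: each feature is its own small node, chained at run time.
    static final class Rectangle extends Node {
        String paint(double opacity) { return "rect(opacity=" + opacity + ")"; }
    }

    static final class Fade extends Node {
        private final double alpha;
        private final Node child;
        Fade(double alpha, Node child) { this.alpha = alpha; this.child = child; }
        String paint(double opacity) { return child.paint(opacity * alpha); }  // decorate the child
    }

    // Monolithic: the feature is folded into a single, larger node class.
    static final class FadeRectangle extends Node {
        private final double alpha;
        FadeRectangle(double alpha) { this.alpha = alpha; }
        String paint(double opacity) { return "rect(opacity=" + (opacity * alpha) + ")"; }
    }

    public static void main(String[] args) {
        Node poly = new Fade(0.5, new Rectangle());   // two objects to manage
        Node mono = new FadeRectangle(0.5);           // one object to manage
        System.out.println(poly.paint(1.0));
        System.out.println(mono.paint(1.0));
    }
}
```

Both print `rect(opacity=0.5)`. The polylithic chain needs two objects where the monolithic version needs one, which is the "more objects to control" cost; in exchange, `Fade` can wrap any node without a new combined class.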

Polylithic Potential

Simpler objects, easier to maintain

More de-coupled objects, easier to extend

More run-time control

Could better support design environments

But …

More objects to control was a significant problem

Introduced an “editor” to manage object chains

Still not good enough for app programmers

Now there is Piccolo

Our “last” toolkit

In Java, and now also in C#

Same feature set as Jazz, but monolithic

Biggest lesson: KISS


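import edu.umd.cs.piccolo.nodes.PText;   // text node
import edu.umd.cs.piccolox.PFrame;       // JFrame subclass that hosts a PCanvas

public class PHelloWorld extends PFrame {

    // PFrame calls initialize() after creating its canvas; build the scene here
    @Override
    public void initialize() {
        PText text = new PText("Hello World!");
        getCanvas().getLayer().addChild(text);   // add the node to the canvas's default layer
    }

    public static void main(String[] args) {
        new PHelloWorld();
    }
}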

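[Figure: Class Hierarchy (PNode is the base class of PRoot, PLayer and PCamera in package piccolo, and of PText, PPath and PImage in package piccolo.nodes) and Typical run-time structure (PRoot with PLayer and PCamera nodes; a camera views 1..n layers)]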

How do they compare?

Tough comparison: many design differences aside from polylithic / monolithic architectures

Did case studies and a performance analysis

DateLens Mockup

| | Custom | Piccolo | Jazz |
|---|---|---|---|
| Scene render time | 1.5 msec | 2.1 msec | 2.2 msec |
| Lines of code | 272 | 171 | 219 |
| Class file size | 10.4 kbytes | 10.4 kbytes | 13.2 kbytes |
| Memory usage: our code (full application) | 7.5 k (484k) | 8.0 k (516k) | 10.2 k (535k) |

Performance Analysis

Rendering Speed

| Task | Custom | Piccolo | Piccolo scene graph overhead | Jazz | Jazz scene graph overhead |
|---|---|---|---|---|---|
| 10,000 rectangles | 265.0 msec | 270.3 msec | 2% | 282.8 msec | 7% |
| 1,000 groups of 10 rectangles | | 273.4 msec | 3% | 281.2 msec | 6% |
| 100 groups of 10 groups of 10 rectangles | | 267.2 msec | 1% | 281.3 msec | 6% |
| 10 groups of 10 groups of 10 groups of 10 rectangles | | 270.4 msec | 2% | 278.1 msec | 5% |

Overhead is relative to the custom renderer's 265.0 msec.
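The overhead column is the extra rendering time relative to the custom baseline. As a quick check of the arithmetic, this Java snippet (the class name is hypothetical) recomputes the percentages, assuming the single 265.0 msec custom time is the baseline for every scene, which reproduces all eight reported values when rounded to whole percent.

```java
/** Recomputes the scene-graph overhead percentages from the rendering times above. */
final class Overhead {
    private Overhead() {}

    /** Extra time relative to the baseline, rounded to a whole percent. */
    static long percent(double baseline, double measured) {
        return Math.round((measured - baseline) / baseline * 100);
    }

    public static void main(String[] args) {
        double custom = 265.0;                              // msec, custom renderer
        double[] piccolo = {270.3, 273.4, 267.2, 270.4};    // msec, one per scene
        double[] jazz = {282.8, 281.2, 281.3, 278.1};       // msec, one per scene
        for (int i = 0; i < piccolo.length; i++) {
            System.out.println("Piccolo " + percent(custom, piccolo[i]) + "%, Jazz " + percent(custom, jazz[i]) + "%");
        }
    }
}
```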

Scene graph manipulation speed

| Scene | Task | Piccolo | Jazz |
|---|---|---|---|
| 10,000 rectangles | Build 10,000 nodes | 16.0 msec | 219.0 msec |
| | Translate 10,000 nodes | 0.4 msec | 23.5 msec |
| | Remove 10,000 nodes | 5.3 msec | 5.3 msec |
| 1,000 x 10 rects | Build 10,000 nodes | 16.0 msec | 218.0 msec |
| | Translate 10,000 nodes | 0.4 msec | 50.8 msec |
| | Remove 10,000 nodes | 5.3 msec | 5.3 msec |
| 100 x 10 x 10 rects | Build 10,000 nodes | 15.0 msec | 226.5 msec |
| | Translate 10,000 nodes | 0.4 msec | 62.5 msec |
| | Remove 10,000 nodes | 5.0 msec | 10.6 msec |
| 10 x 10 x 10 x 10 rects | Build 10,000 nodes | 16.0 msec | 226.5 msec |
| | Translate 10,000 nodes | 0.4 msec | 82.3 msec |
| | Remove 10,000 nodes | 5.0 msec | 10.3 msec |

Architecture Reflections

Polylithic and monolithic approaches each have merits

Similar performance and code sizes

Base the architecture on the toolkit’s users and its expected life cycle

More static => monolithic

More dynamic => polylithic

Piccolo in Use

Poseidon – UML modeling, Gentleware.com

Java Digital Album Infrastructure

SimBrain – Neural Network

TimeSearcher

1,300 messages in public email list

U. Victoria – Ontology Visualization

Conclusions

Does zooming work?

Is animation helpful?

Are toolkits beneficial?

=> Clearly yes (sometimes)

Good small representations needed

Animation works best when it helps maintain object constancy

Understanding of domain and users crucial

Like all interfaces, good visualizations remain hard

[Hornbaek, Bederson & Plaisant - TOCHI 2002]

[Bederson & Boltman - InfoVis 1999]