Nonlinear Dimensionality Reduction Presented by Dragana Veljkovic

advertisement

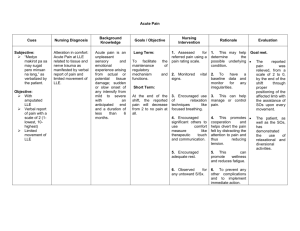

Nonlinear Dimensionality Reduction Presented by Dragana Veljkovic Overview Curse-of-dimensionality Dimension reduction techniques Isomap Locally linear embedding (LLE) Problems and improvements 2 Problem description Large amount of data being collected leads to creation of very large databases Most problems in data mining involve data with a large number of measurements (or dimensions) E.g. Protein matching, fingerprint recognition, meteorological predictions, satellite image repositories Reducing dimensions increases capability of extracting knowledge 3 Problem definition Original high dimensional data: X = (x1, …, xn) where xi=(xi1,…,xip)T underlying low dimensional data: Y = (y1, …, yn) where yi=(yi1,…,yiq)T and q<<p Assume X forms a smooth low dimensional manifold in high dimensional space Find the mapping that captures the important features Determine q that can best describe the data 4 Different approaches Local or Shape preserving Global or Topology preserving Local embeddings Local – simplify representation of each object regardless of the rest of the data Features selected retain most of the information Fourier decomposition, wavelet decomposition, piecewise constant approximation, etc. 5 Global or Topology preserving Mostly used for visualization and classification PCA or KL decomposition MDS SVD ICA 6 Local embeddings (LE) Overlapping local neighborhoods, collectively analyzed, can provide information on global geometry LE preserves the local neighborhood of each object preserving the global distances through the nonneighboring objects Isomap and LLE 7 Another classification Linear and Non Linear methods 8 Neighborhood Two ways to select neighboring objects: nearest neighbors (k-NN) – can make nonuniform neighbor distance across the dataset ε-ball – prior knowledge of the data is needed to make reasonable neighborhoods; size of neighborhood can vary k 9 Isomap – general idea Only geodesic distances reflect the true low dimensional geometry of the manifold MDS and PCA see only Euclidian distances and there for fail to detect intrinsic low-dimensional structure Geodesic distances are hard to compute even if you know the manifold In a small neighborhood Euclidian distance is a good approximation of the geodesic distance For faraway points, geodesic distance is approximated by adding up a sequence of “short hops” between neighboring points 10 Isomap algorithm Find neighborhood of each object by computing distances between all pairs of points and selecting closest Build a graph with a node for each object and an edge between neighboring points. Euclidian distance between two objects is used as edge weight Use a shortest path graph algorithm to fill in distance between all non-neighboring points Apply classical MDS on this distance matrix 11 Isomap 12 Isomap on face images 13 Isomap on hand images 14 Isomap on written two-s 15 Isomap - summary Inherits features of MDS and PCA: guaranteed asymptotic convergence to true structure Polynomial runtime Non-iterative Ability to discover manifolds of arbitrary dimensionality Perform well when data is from a single well sampled cluster Few free parameters Good theoretical base for its metrics preserving properties 16 Problems with Isomap Embeddings are biased to preserve the separation of faraway points, which can lead to distortion of local geometry Fails to nicely project data spread among multiple clusters Well-conditioned algorithm but computationally expensive for large datasets 17 Improvements to Isomap Conformal Isomap – capable of learning the structure of certain curved manifolds Landmark Isomap – approximates large global computations by a much smaller set of calculation Reconstruct distances using k/2 closest objects, as well as k/2 farthest objects 18 Locally Linear Embedding (LLE) Isomap attempts to preserve geometry on all scales, mapping nearby points close and distant points far away from each other LLE attempts to preserve local geometry of the data by mapping nearby points on the manifold to nearby points in the low dimensional space Computational efficiency Representational capacity 19 LLE – general idea Locally, on a fine enough scale, everything looks linear Represent object as linear combination of its neighbors Representation indifferent to affine transformation Assumption: same linear representation will hold in the low dimensional space 20 LLE – matrix representation X = W*X where X is p*n matrix of original data W is n*n matrix of weights and Wij =0 if Xj is not neighbor of Xi rows of W sum to one Need to solve system Y = W*Y Y is q*n matrix of underlying low dimensional data 2 Minimize error: (Y) Yi WijYj i j 21 LLE - algorithm Find k nearest neighbors in X space Solve for reconstruction weights W Compute embedding coordinates Y using weights W: create sparse matrix M = (I-W)'*(I-W) Compute bottom q+1 eigenvectors of M Set i-th row of Y to be i+1 smallest eigen vector 22 Numerical Issues Covariance matrix used to compute W can be ill-conditioned, regularization needs to be used Small eigen values are subject to numerical precision errors and to getting mixed But, sparse matrices used in this algorithm make it much faster then Isomap 23 LLE 24 LLE – effect of neighborhood size 25 LLE – with face picture 26 LLE – Lips pictures 27 PCA vs. LLE 28 Problems with LLE If data is noisy, sparse or weakly connected coupling between faraway points can be attenuated Most common failure of LLE is mapping close points that are faraway in original space – arising often if manifold is undersampled Output strongly depends on selection of k 29 References Roweis, S. T. and L. K. Saul (2000). "Nonlinear dimensionality reduction by locally linear embedding " Science 290(5500): 2323-2326. Tenenbaum, J. B., V. de Silva, et al. (2000). "A global geometric framework for nonlinear dimensionality reduction " Science 290(5500): 2319-2323. Vlachos, M., C. Domeniconi, et al. (2002). "Non-linear dimensionality reduction techniques for classification and visualization." Proc. of 8th SIGKDD, Edmonton, Canada. de Silva, V. and Tenenbaum, J. (2003). “Local versus global methods for nonlinear dimensionality reduction”, Advances in Neural Information Processing Systems,15. 30 Questions? 31