Risk (Potential Bugs) Analysis


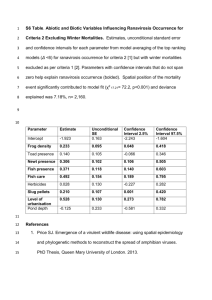

Risk Analysis for Testing
• Based on Chapter 9 of the text
• Based on the article "A Test Manager's Guide to Risks Analysis and Management" by Rex Black, published in Software Quality Professional, Vol. 7, No. 1, pp. 22-30
• We will follow the flow of the article more than that of Chapter 9.

The question is - - -
• What are you testing?
  – Which areas of the system do you think are high risk?
• How do you know which ones are the most important?
  – How do you know which ones are high risk?
• What is a risk? A potential undesirable outcome.
• For a system's quality, a potential undesirable outcome is the system failing to meet its expected behavior.
• The higher the potential for an undesirable outcome, the more we want to prevent it:
  1. We test to find these high-risk potential problems and get them fixed before release.
  2. We test to show that the areas tested do not harbor these high-risk potential problems.

Quality Risks
• For most systems it is not feasible to test all possibilities, so we know that some errors will be shipped. (There are risks!)
• Two major concerns about risks are:
  – How likely is it that certain risks (potential problems) exist in the system?
  – What is the impact of the risk on:
    • The customer/user environment
    • Support personnel workload
    • Future sales of the product

Quality Risk Analysis
• In quality risk analysis for testing we perform 3 steps:
  – Identify the risks (in the system)
  – Analyze the risks
  – Prioritize the risks

5 Different Techniques for Quality Risk Analysis
• All 5 techniques involve the three steps of identifying, analyzing, and prioritizing, based on a variety of data sources and inputs.
  – Informal Analysis
  – ISO 9126 Based Analysis
  – Cost of Exposure Analysis
  – Failure Mode and Effect Analysis
  – Hazard Analysis
• One may also use combinations of these.

Informal Quality Analysis
• Risks are identified by a "cross-section" of people:
  – Requirements analysts
  – Designers
  – Coders
  – Testers
  – Integrators and builders
  – Support personnel
  – Possibly users/customers
• Risks are identified, analyzed, and prioritized based on:
  – Past experiences
  – Available data from inspections/reviews of requirements and design
  – Data from early testing results
• Testing of mission-critical and safety-critical systems needs to "formalize" the analysis of past inspection/review results and test results.

ISO 9126 Quality Risk Analysis
• ISO 9126 defines 6 characteristics of quality:
  – Functionality: suitability, accurateness, interoperability, compliance, security
  – Reliability: maturity, fault tolerance, recoverability
  – Usability: understandability, learnability, operability
  – Efficiency: time behavior, resource behavior
  – Maintainability: analyzability, changeability, stability, testability
  – Portability: adaptability, installability, conformance, replaceability
• These 6 characteristics form a convenient list of prompts for the identification, analysis, and prioritization of risks (potential problems).
• It is good to follow an industry standard, especially for large software systems that require broad test coverage.

Cost of Exposure Analysis
• Based on two factors:
  – The probability of the risk turning into reality, i.e., the probability that there is a problem
  – The cost of loss if there is a problem
• The cost of loss may be viewed as the cost of impact to users (work hours delayed, material loss, etc.).
• The cost of loss may also be viewed from the reverse perspective: the cost of fixing what the problem caused.
  – Based on the probability of occurrence and the cost of impact, one can prioritize the test cases.
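The prioritization rule above can be sketched in a few lines of Python. This sketch assumes that cost of exposure is simply probability of occurrence × cost of loss, and that a higher exposure means the area should be tested earlier. The names `Risk`, `cost_of_exposure`, and `prioritize` are hypothetical. The sample values are those of the three-risk example below.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    probability: float   # estimated probability that the problem exists (0.0 to 1.0)
    cost_of_loss: float  # estimated cost if the problem occurs, in dollars


def cost_of_exposure(risk: Risk) -> float:
    """Expected loss: probability of occurrence times cost of loss (assumed model)."""
    return risk.probability * risk.cost_of_loss


def prioritize(risks: list[Risk]) -> list[Risk]:
    """Order risks from highest to lowest cost of exposure (test these first)."""
    return sorted(risks, key=cost_of_exposure, reverse=True)


if __name__ == "__main__":
    risks = [
        Risk("Risk 1", 0.3, 20_000),
        Risk("Risk 2", 0.25, 10_000),
        Risk("Risk 3", 0.1, 100_000),
    ]
    for r in prioritize(risks):
        print(f"{r.name}: ${cost_of_exposure(r):,.0f}")
```

Running it lists Risk 3 ($10,000), Risk 1 ($6,000), and Risk 2 ($2,500), which is the same order as the worked example. Because the estimate lives in its own function, you can swap in the "cost of fixing" view of loss without touching the sorting.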
• Consider three risks:
  – Risk 1: prob. of occurrence (0.3) × cost ($20k) = $6k
  – Risk 2: prob. of occurrence (0.25) × cost ($10k) = $2.5k
  – Risk 3: prob. of occurrence (0.1) × cost ($100k) = $10k
  – In this case, you would prioritize in the order:
    » Risk 3,
    » Risk 1, and
    » Risk 2
• Both factors are hard for typical testers to estimate; doing so takes experience.

Failure Mode/Effect Analysis (FMEA)
• This methodology was originally developed by the US military (MIL-P-1629) for detecting and determining the effect of system and equipment failures, where failures were classified according to their impact on:
  – a) mission success and
  – b) personnel/equipment safety.
• The steps are (for each mechanical-electrical component):
  – Identify potential design and process failures
  – Find the effect of the failures
  – Find the root cause of the failures
  – Prioritize the recommended actions based on a risk priority number, computed as (probability of risk occurrence × severity of the failure × detection rating, where a higher rating means the problem is harder to detect). This is similar to Cost of Exposure Analysis.
  – Recommend actions
• For software (for each function):
  – The same steps apply, but the reasons for failure are quite different from those of physical components:
    • There is no aging or wear and tear.
    • Mechanical stress is replaced by performance stress.

Hazard Analysis
• This is similar to Failure Mode and Effect Analysis, except that we look at the effects first and work backward to trace the potential cause(s).
• In order to list the effects (or anticipate problems), testers need to be fairly experienced in the application domain and in software technology.

Ranking of Problems (Hutcheson Example)
• Rank = 1 (highest priority)
  – Failure would be critical
  – Item is required by the service level agreement (SLA)
• Rank = 2 (high priority)
  – Failure would be unacceptable
  – Failure would border on the SLA limit
• Rank = 3 (medium priority)
  – Failure would be survivable
  – Failure would not endanger the SLA
• Rank = 4 (low priority)
  – Failure would be trivial and have small impact
  – Failure would not be applicable to the SLA

Risk Ranking Criteria (Hutcheson Example & Yours)
• (Hutcheson) Similar in concept to ISO 9126's 6 characteristics:
  – Is this risk a required item? (1 = must have - - - 4 = nice to have)
  – What is the severity of the risk? (1 = most serious - - - 4 = least serious)
  – What is the probability of occurrence? (1 = most likely - - - 4 = least likely)
  – What is the cost of fixing the problem if it occurs? (1 = most expensive - - - 4 = least expensive)
  – What is the failure visibility? (1 = most visible - - - 4 = least visible)
  – How tolerant will users be? (1 = most tolerable - - - 4 = least tolerable)
  – How likely are people to succeed? (1 = fail - - - 4 = succeed)
• Which of the above would you keep, and what new ones would you add? For example:
  – How difficult is it to test for or discover this problem? (1 = hard - - - 4 = easy)
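Each criterion above is scored from 1 to 4, but the slides do not say how to combine the scores into one of the four ranks from the Ranking of Problems slide. The following is a minimal sketch of one way to do it. It rests on these assumptions:
• All criteria carry equal weight, and they are combined by a plain average.
• Every score is already oriented so that 1 = most concern. A scale that runs the other way, such as the user-tolerance scale as printed, would be flipped with `5 - score` first.
• Exact .5 ties round toward the more urgent rank.

`overall_rank`, `PRIORITY_LABELS`, and the criterion keys are hypothetical names.

```python
import math
from fractions import Fraction

# Priority labels from the "Ranking of Problems" slide (1 = highest priority).
PRIORITY_LABELS = {1: "Highest", 2: "High", 3: "Medium", 4: "Low"}


def overall_rank(scores: dict[str, int]) -> int:
    """Combine per-criterion scores into a single rank from 1 to 4.

    Assumptions (not from the slides): each score is oriented so that
    1 = most concern and 4 = least concern, all criteria weigh equally,
    and the average is rounded to the nearest rank, ties going to rank 1's side.
    """
    if not scores:
        raise ValueError("at least one criterion score is required")
    for criterion, score in scores.items():
        if not 1 <= score <= 4:
            raise ValueError(f"{criterion}: score {score} is outside 1..4")
    # Exact arithmetic avoids floating-point surprises at .5 boundaries.
    average = Fraction(sum(scores.values()), len(scores))
    # ceil(average - 1/2) rounds to nearest, sending exact .5 ties to the more urgent rank.
    return math.ceil(average - Fraction(1, 2))


if __name__ == "__main__":
    # Hypothetical scores for one feature; keys paraphrase the slide's criteria.
    feature_scores = {
        "required_item": 1,
        "severity": 2,
        "probability_of_occurrence": 2,
        "cost_of_fix": 3,
        "visibility": 1,
        "user_tolerance": 2,       # assumed already flipped to 1 = least tolerant
        "likelihood_of_success": 3,
        "difficulty_to_test": 2,   # the extra criterion suggested on the slide
    }
    rank = overall_rank(feature_scores)
    print(rank, PRIORITY_LABELS[rank])
```

For the hypothetical scores shown, the average is exactly 2, so the feature lands at rank 2 (High). To weight criteria differently, for example counting severity twice, you only need to change how `average` is computed.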