Test Plan (Not in Text) (moved from an earlier slot)


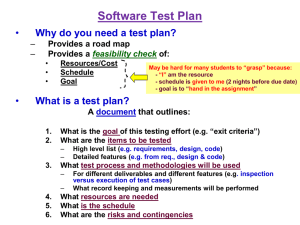

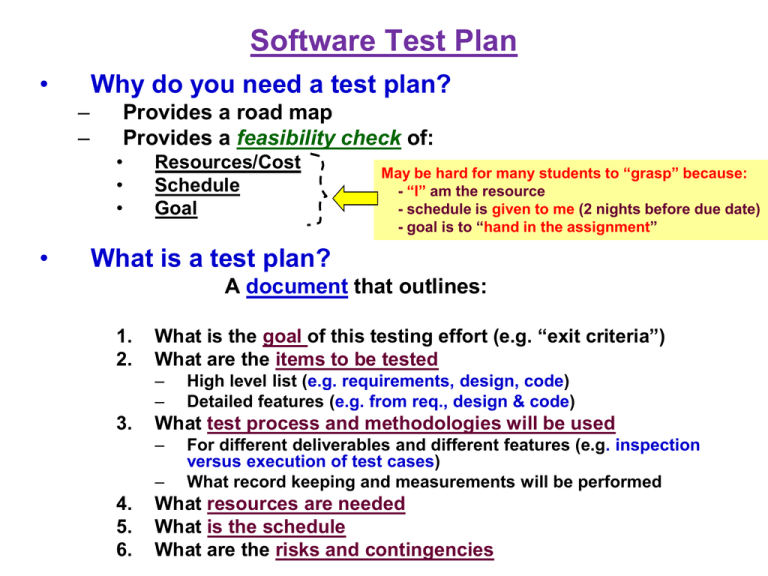

Software Test Plan
• Why do you need a test plan?
  – It provides a road map
  – It provides a feasibility check of:
    • Resources/cost
    • Schedule
    • Goal
• It may be hard for many students to “grasp” because:
  – “I” am the resource
  – the schedule is given to me (2 nights before the due date)
  – the goal is to “hand in the assignment”

What is a test plan?
A document that outlines:
1. What is the goal of this testing effort (e.g. “exit criteria”)
2. What are the items to be tested
  – High-level list (e.g. requirements, design, code)
  – Detailed features (e.g. from requirements, design & code)
3. What test process and methodologies will be used
  – For different deliverables and different features (e.g. inspection versus execution of test cases)
  – What record keeping and measurements will be performed
4. What resources are needed
5. What is the schedule
6. What are the risks and contingencies

1. Goal(s) of Your Testing
• Goals may be different for each test.
  – They often need to match the product goal or the project goal.
  – Generic: “Meets customer requirements or satisfaction” is a bit too broad.
  – Examples one level deeper:
    • Ensure that all the (requirements) features exist in the system
    • Ensure that the developed components and the purchased components integrate as specified
    • Ensure that performance targets (response time, resource capacity, transaction rate, etc.) are met
    • Ensure the system is robust (stress the system beyond its boundaries)
    • Ensure that the user interface is “clear,” “novel,” or “catchy,” etc.
    • Ensure that the required functionality and features are “high quality”
    • Ensure that industry standards are met
    • Ensure that internationalization works (laws and looks)
    • Ensure that the software is migratable
Note that this list seems to deal more with the “implemented” design and code. What about the requirements document itself?

2. Test Items (what artifacts?)
• Testing the deliverables (depends on the goal), looking at the major artifacts:
  – Requirements specification
  – Design specification
  – Executable code
  – User guides
  – Initialization data
• Testing the individual functions/features (depends on the goal), looking at the details of the artifacts:
  – Logical application functions (list all of them, usually from the requirements spec., from the user’s perspective)
  – At the detail level for small applications (list all the modules, from the design spec. or build list, from the development perspective)
  – User interfaces (list all the screens, usually from the requirements and design specs, from the user’s perspective)
  – Navigation, and especially the “sequence” (e.g. when usability is a goal)

What is NOT included in the Test
• List of items not included in this test
• Possible reasons for NOT testing the code:
  – Low-risk items (how is the risk determined?)
  – Future-release items that have finished the coding phase (and unfortunately “may be” a problem if included in the code)
  – Not a high-priority item (from the requirements spec.)

3. Test Process and Methodologies
• What testing methodology will be applied:
  – Non-executable:
    • formal inspection, informal reviews (unit test user guide examples)
  – Executable:
    • unit test, functional test, system test, regression test, etc.
• Black-box testing
  – Logic: decision table testing
  – Boundary value testing
  – Equivalence testing
  – Stress testing and performance testing
  – Installation testing
• White-box testing
  – Path testing: code coverage; branch coverage; linearly independent paths; D-U path coverage
  – Object testing (inheritance, polymorphism, etc.)
• What test-fix methodology will be applied
• What “promotion” and locking mechanism will be used
• What data will be gathered and what metrics will be used to gauge testing:
  – # of test cases and the amount of coverage (paths, code, etc.)
  – # of problems found by severity, by area, by source, by time
  – # of problems fixed, returned with no resolution, in abeyance
  – # of problems resolved by severity, turnaround time, by area, etc.
• How to gauge if goals are met?
  – Metrics for validating the goal
  – Decision process for release
• Bug seeding approach?

4. Test Resources
• Based on the amount of test items and the test process defined so far, estimate the people resources needed
• The skills of the people
• Additional training needs
• # of people
• The non-people resources needed:
  – Tools
    • Configuration management
    • Automated test tool
    • Test script writing tool
  – Hardware, network, database
  – Environment (access to: developers, support personnel, management personnel, etc.)

5. Test Schedule
• Based on:
  – Amount of test items
  – Test process and methodology utilized
  – Number of estimated test cases
  – Estimated test resources
• A schedule containing the following information should be stated:
  – Tasks and sequences of tasks
  – Assigned persons
  – Time duration

6. Risks and Contingencies
• Examples of risks:
  – Changes to original requirements or design
  – Lack of available and qualified personnel
  – Lack of (or late) delivery from the development organization:
    • Requirements
    • Design
    • Code
  – Late delivery of tools and other resources
• State the contingencies if the risk item occurs:
  – Move the schedule
  – Do less testing
  – Change the goals and exit criteria

7. Appendix of Actual Test Cases
• General test case specification template
• Developed test cases (modular level):
  – Black-box testing
  – White-box testing
• Developed test cases (component/system level):
  – Component level
  – System level

You may want to see: IEEE 829 Documents on Testing
• Test preparation documents:
  – IEEE 829 – Test Plan
  – IEEE 829 – Test Design Specification
  – IEEE 829 – Test Case Specification
  – IEEE 829 – Test Procedure Specification
  – IEEE 829 – Test Item Transmittal Report
• Test running documents:
  – IEEE 829 – Test Log
  – IEEE 829 – Test Incident Report
• Test completion document:
  – IEEE 829 – Test Summary Report
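The black-box techniques listed under Test Process and Methodologies can feed the modular-level black-box test cases in the appendix. Boundary value testing is one of them. The Python below is a minimal sketch and rests on three assumptions:
• A hypothetical requirement that an integer input must lie in an inclusive range [low, high].
• The names `TestCaseSpec`, `boundary_values`, and `bva_test_cases` are hypothetical illustrations.
• These names are not part of any IEEE 829 template.

The sketch generates the usual boundary inputs (min, min+1, nominal, max-1, max). It then adds the two values just outside the range, as robustness testing does, and records each input as a test case with its expected outcome.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TestCaseSpec:
    """Hypothetical minimal test case record (not an IEEE 829 template)."""
    case_id: str
    input_value: int
    expected_valid: bool


def boundary_values(low: int, high: int, robust: bool = False) -> list[int]:
    """Boundary-value inputs for an integer range [low, high].

    Normal boundary-value analysis uses min, min+1, nominal, max-1, max.
    Robust analysis also adds the just-outside values min-1 and max+1.
    """
    if low >= high:
        raise ValueError("low must be less than high")
    nominal = (low + high) // 2
    values = [low, low + 1, nominal, high - 1, high]
    if robust:
        values = [low - 1] + values + [high + 1]
    # Very small ranges produce duplicates (e.g. nominal == low + 1);
    # dict.fromkeys drops them while keeping the original order.
    return list(dict.fromkeys(values))


def bva_test_cases(low: int, high: int) -> list[TestCaseSpec]:
    """Turn robust boundary values into test cases with expected outcomes."""
    return [
        TestCaseSpec(f"BVA-{i:02d}", value, low <= value <= high)
        for i, value in enumerate(boundary_values(low, high, robust=True), start=1)
    ]


if __name__ == "__main__":
    # Hypothetical requirement: an age field accepts integers 1..100.
    for case in bva_test_cases(1, 100):
        status = "valid" if case.expected_valid else "invalid"
        print(case.case_id, case.input_value, status)
```

Equivalence testing fits the same structure. It uses one representative per class (below the range, inside it, above it) instead of every boundary.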
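The “Bug seeding approach?” item under Test Process and Methodologies can be made concrete with Mills-style error seeding. The steps are:
• Deliberately insert S known defects.
• Run the tests, which find s of them along with n real (indigenous) defects.
• Estimate the total number of real defects as N ≈ n · S / s.

The sketch below assumes that seeded defects are exactly as hard to find as real ones. That is the central, and often optimistic, assumption of the technique. The function names are hypothetical.

```python
def estimate_indigenous_defects(seeded_total: int, seeded_found: int,
                                indigenous_found: int) -> float:
    """Estimate the total number of real (indigenous) defects.

    Assumes testing finds seeded and real defects at the same rate:
        seeded_found / seeded_total == indigenous_found / total_indigenous
    so total_indigenous = indigenous_found * seeded_total / seeded_found.
    """
    if seeded_total <= 0:
        raise ValueError("seeded_total must be positive")
    if not 0 < seeded_found <= seeded_total:
        raise ValueError("seeded_found must be between 1 and seeded_total")
    if indigenous_found < 0:
        raise ValueError("indigenous_found must be non-negative")
    return indigenous_found * seeded_total / seeded_found


def estimate_remaining_defects(seeded_total: int, seeded_found: int,
                               indigenous_found: int) -> float:
    """Estimated real defects still undetected after this round of testing."""
    total = estimate_indigenous_defects(seeded_total, seeded_found, indigenous_found)
    return total - indigenous_found


if __name__ == "__main__":
    # Example: 20 defects seeded; testing finds 15 of them plus 45 real ones.
    total = estimate_indigenous_defects(20, 15, 45)
    remaining = estimate_remaining_defects(20, 15, 45)
    print(f"estimated real defects: {total:.0f}, still undetected: {remaining:.0f}")
    # prints "estimated real defects: 60, still undetected: 15"
```

If the seeded defects are easier to find than the real ones, this estimate undercounts the remaining defects. Treat it as one input to the “Decision process for release”, not as the exit criterion itself.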