CALIFORNIA STATE UNIVERSITY, NORTHRIDGE IMPLEMENTATION OF MEMORY EFFICIENT IP LOOKUP ARCHITECTURE


CALIFORNIA STATE UNIVERSITY, NORTHRIDGE

IMPLEMENTATION OF MEMORY EFFICIENT IP LOOKUP ARCHITECTURE

A graduate project submitted in partial fulfillment of the requirements

For the degree of Master of Science in Electrical Engineering

By

Karthik Venkatesh Malekal

May 2014

The graduate project implemented by Karthik Venkatesh Malekal is approved:

___________________________________

_____________________

Dr. Shahnam Mirzaei

Date

___________________________________

_____________________

Dr. Somnath Chattopadhyay

Date

___________________________________

_____________________

Dr. Nagi El Naga, Chair

Date

California State University, Northridge


ACKNOWLEDGMENTS

This project would not have been possible without the guidance and help of several individuals who contributed and extended their valuable assistance in the preparation and completion of this project.

Firstly, I would like to extend my most sincere gratitude to Dr. Nagi El Naga for all the help and support I received from him. He has been an inspiration and a constant driving force over the course of this project, making available to me all the resources that were needed. It was an honor to have worked on a project under his guidance.

I would also like to thank Dr. Shahnam Mirzaei for taking the time to guide me on several occasions early in the implementation of this project, which was extremely helpful and invaluable. I would like to thank Dr. Somnath Chattopadhyay for his support and for agreeing to be a member of the project committee.

Most importantly, I would like to thank my parents, Mr. Venkatesh A.K and Mrs. Geetha Venkatesh, for their constant support, prayers and well wishes at every step that I have taken. I would also like to extend my sincere thanks to my brother, Vinay Malekal, and my sister-in-law, Mithila Malekal, for their constant support over the past two years of my graduate studies.

Lastly, I extend my sincere gratitude to the Department of Electrical and Computer Engineering at California State University, Northridge and to all professors who have in one way or another extended their support and help in the completion of this project.


TABLE OF CONTENTS

Signature Page

ii

Acknowledgments

iii

List of Table

vi

List of Figures

vii

Abstract

ix

CHAPTER 1: Objective and Project Outline

1

1.1: Introduction

1

1.2: Objective

5

1.3: Project Outline

5

CHAPTER 2: IP Addressing

6

2.1: Classful Addressing Scheme

9

2.2: Classless Inter-Domain Addressing

10

2.3: Solutions to longest prefix match search process

13

2.3.1: Path compression techniques

17

2.3.2: Prefix expansion/Leaf pushing

18

2.3.3: Using multi-bit tries

21

2.3.4: Using a combination of leaf pushing and multi-bit tries

24

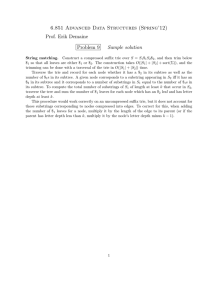

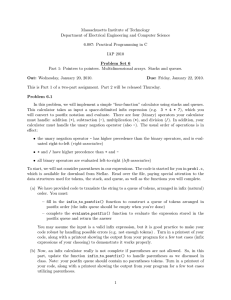

CHAPTER 3: Proposals for search process using binary tries

26

CHAPTER 4: Implementation details of memory efficient pipeline architecture

31

iv

4.1: Implementation of pipeline structure

34

4.1.1: The prefix table

34

4.1.2: The trie structure and mapping

36

4.2: Implementation of hardware

45

4.2.1: Data organization in memory blocks

46

4.2.2: Logical blocks used at each stage

47

4.2.3: Search process at stage 1

53

4.2.4: Search process in other stages of the pipeline

55

CHAPTER 5: Simulation results and analysis

59

5.1: Simulation analysis

59

5.2: Implementation analysis

65

CHAPTER 6: Conclusion

67

6.1: Project achievements

67

6.2: Scope for improvements

68

REFERENCES

70

APPENDIX A: Data in Memory Modules

71

APPENDIX B: Implementation codes

78


LIST OF TABLES

Table 2.1: An example forwarding table

Table 2.2: Prefixes and corresponding nodes in forwarding table

Table 4.1: Prefix/forwarding table showing sample prefix values and their corresponding nodes

Table 4.2: Algorithm to find initial stride

Table 5.1: List of test vectors to test the IP Lookup request outputs


LIST OF FIGURES

Figure 1.1: Block diagram of TCAM based solution for IP lookup

Figure 1.2: Block diagram of SRAM based solution for IP lookup

Figure 2.0: Block diagram of IP packet showing the source and destination IP address

Figure 2.1: IP network address representation

Figure 2.2: Example binary trie structure

Figure 2.3: Example path compressed uni-bit trie

Figure 2.4: Leaf pushed uni-bit trie

Figure 2.5: Multi-bit trie structure with fixed stride

Figure 2.6: Leaf pushed multi-bit trie

Figure 3.1: Pipeline stages with a ring structure

Figure 3.2: Pipeline structure used in CAMP search algorithm

Figure 4.1: Sample trie structure with two subtries and mapping to pipeline

Figure 4.2: Example subtrie with one parent (node A), two children (nodes B and C) and mapping to the pipeline

Figure 4.3: Trie structure corresponding to given prefixes in table 4.1

Figure 4.4: Leaf-pushed uni-bit trie structure

Figure 4.5: Multi-bit trie with initial stride, i = 2

Figure 4.6: Multi-bit trie with initial stride, i = 3

Figure 4.7: Subtrie with root at node “a” in the multi-bit trie from figure 4.6

Figure 4.8: Pipeline structure with nodes mapped to its memory modules

Figure 4.9: General structure of the pipelined hardware

Figure 4.10: Input packet format

Figure 4.11: Data packet from memory block

Figure 4.12: Shift register module

Figure 4.13: Stage 1 memory module

Figure 4.13a: Memory module structure

Figure 4.14: Node distance check module

Figure 4.15: Update module

Figure 4.16: Write data packet

Figure 4.17: Block diagram of stage 1 of pipeline

Figure 4.17a: Block diagram of the stage module of a pipeline

Figure 5.1: Simulation output with outputs from each of the 7 stages in the pipeline

Figure 5.2: Simplified simulation output showing only the inputs and final output

Figure 5.3: Simulation outputs showing operation of NDC module

Figure 5.4: Simulation outputs showing write operation


ABSTRACT

IMPLEMENTATION OF MEMORY EFFICIENT IP LOOKUP ARCHITECTURE

By

Karthik Venkatesh Malekal

Master of Science in Electrical Engineering

The internet today has grown into a vast network of networks. It consists of a large number of smaller networks that connect millions of users by linking to one another through communication media and routers. As the communication speeds between these networks have grown rapidly over the past several years, the demand for faster processing and routing of packets between the networks has increased at a similar pace. This demand can be met by implementing the IP lookup process for packet routing using some form of tree structure. A trie based implementation can be a very efficient IP lookup process because it allows a highly pipelined structure that achieves high throughput. However, mapping a trie onto the pipeline stages without a proper structure results in an unbalanced memory distribution over the stages of the pipeline.

In this project, a simple and highly effective IP lookup process using a linear pipeline architecture with a uniform distribution of the trie structure over all the pipeline stages is presented. This is achieved by using a pipeline of memory modules with additional logic at each stage to process the data resulting from the search at that stage. The result, as observed, is a fast and linear IP lookup operation that can be implemented on an FPGA, with one lookup request being processed every clock cycle.


CHAPTER 1

OBJECTIVE AND PROJECT OUTLINE

1.1 INTRODUCTION

The main function of network routers is to accept incoming packets and forward them to their final destinations. To do this, each router has to decide the forwarding path for every incoming packet so that it reaches the next network on the route to the destination network. The forwarding decision usually consists of the IP address of the next-hop router and the outgoing port through which the packet has to be forwarded. This information is usually stored in a forwarding table, which every router builds using information collected from the routing protocols.

The packet’s destination address is used as the search key during the lookup through the forwarding table to find the next-hop location for the packet. This process is called “Address Lookup”. Once the forwarding information is obtained from the table, the router can route the packet from its incoming link through the appropriate port on its output link to the next network. This process is called “Packet Switching”.

With the continued increase in network speeds, called “Network Link Rates”, the IP address lookup process in network routers has become a significant bottleneck. With advances in the networking technologies used to route packets, network link rates are approaching the terabits per second range. At these speeds, software based implementations of IP lookup can be very slow and will mostly not be able to keep up.

Hardware based implementations of the lookup process are more appropriate solutions, because a pure hardware implementation removes the overhead of processing the software running on the router and so greatly improves the speed of the forwarding information search. Two types of hardware implementation are currently available for this process: Ternary Content Addressable Memory (TCAM) based solutions and Static Random Access Memory (SRAM) based solutions.

A. Ternary Content Addressable Memory (TCAM) based solutions

Ternary Content Addressable Memory, also called TCAM, is a high speed memory structure that uses the data provided by the user (in this case, the router) as a search key and searches its entire memory to find a matching entry. Although this implementation of IP lookup can be fast, producing an output in one clock cycle, its throughput is limited by the low operating speed of the TCAM. In addition, the power consumption of TCAM structures is significantly higher and they do not scale well. These factors suggest that TCAM based solutions are not very effective in solving the IP lookup problem. The block diagram of a TCAM based IP lookup solution is presented in Figure 1.1.

Figure 1.1: Block diagram of TCAM based solution for IP lookup

B. Static Random Access Memory (SRAM) based solutions

Static Random Access Memory, also called SRAM, is a strong alternative to TCAMs for IP lookup. SRAMs usually have very high operating speeds and their power requirements are lower than those of TCAMs. The problem with SRAM based structures is that they require multiple clock cycles to complete a single lookup operation. This limitation can be overcome by pipelining, which significantly improves throughput. An implementation of the IP lookup process using SRAMs is presented in the block diagram in Figure 1.2.


Figure 1.2: Block diagram of SRAM based solution for IP lookup

Using a binary search process on a tree-like data structure called a “trie”, IP lookup on the pipelined SRAM architecture can be implemented by mapping each trie level to a particular stage of the pipeline, each with its own memory and logic processing unit to perform the lookup. By mapping an entire trie onto the stages of a pipeline in this way, lookups flow through the pipeline stages and, once the pipeline is full, forwarding information is output at the end of the pipeline every clock cycle.
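The level-per-stage mapping can be illustrated with a small software model. This is only a sketch under stated assumptions, not the hardware built in this project: the prefix table, the names `PREFIXES`, `DEPTH`, `stage` and `run`, and the three-level depth are all hypothetical. Each request carries its best match so far from stage to stage, and every stage consults only the prefixes of its own level.

```python
# Hypothetical prefix table (bit string -> node name); not taken from the project.
PREFIXES = {"0": "P1", "000": "P2", "010": "P3", "110": "P7"}
DEPTH = 3  # one pipeline stage per trie level

def stage(level, request):
    """Model the stage that stores trie level `level` (1-based)."""
    if request is None:          # empty pipeline slot (bubble)
        return None
    address, best = request
    # A stage consults only its own memory: the prefixes of length `level`.
    match = PREFIXES.get(address[:level]) if len(address) >= level else None
    return (address, match if match is not None else best)

def run(addresses):
    """Feed one address per clock cycle; return (cycle, address, result) tuples."""
    registers = [None] * DEPTH   # output register of each stage
    pending = list(addresses)
    outputs, cycle = [], 0
    while pending or any(r is not None for r in registers):
        cycle += 1
        incoming = (pending.pop(0), None) if pending else None
        # All stages work in the same cycle, each on a different request.
        registers = [stage(1, incoming)] + [
            stage(i + 1, registers[i - 1]) for i in range(1, DEPTH)
        ]
        if registers[-1] is not None:
            outputs.append((cycle, *registers[-1]))
    return outputs

for line in run(["000", "010", "110", "011"]):
    print(line)
```

In this model the first result appears after `DEPTH` cycles, and one further result appears in each following cycle, which is the throughput behaviour described above.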

Although the above method increases the throughput of the system, the mapping results in an unbalanced distribution of the trie nodes over the stages of the pipeline. A stage that holds a larger number of trie nodes needs a larger memory, which can take multiple clock cycles to access, and it also receives more updates, since the number of updates to a memory block usually depends on the number of nodes stored in it. As a result, when route insertions and deletions cause a large number of updates, the stages with larger memory blocks need a proportionately larger number of updates and can also suffer memory overflow. Such stages can become bottlenecks and slow down the entire search process.

1.2 OBJECTIVE

In this project, a pipelined search architecture is implemented in which the trie nodes are uniformly distributed across all the memory blocks of the pipeline, which results in a constant search time at every stage. The pipelined operation of the search process also gives a high throughput of one output every clock cycle. With this linear pipelined structure and constant memory block sizes at each stage, the number of updates required by each memory block can also be reduced because of the uniform distribution of the trie nodes.

1.3 PROJECT OUTLINE

Here, we build a pipelined search structure on an FPGA, with each pipeline stage containing a memory block and logic units that carry out the search process and the updates to the memory block when a trie node has to be deleted or added, depending on the topology of the networks surrounding the router. Furthermore, the nodes of the binary trie structure are uniformly distributed over all the memory blocks to achieve a uniform search time at each of the pipeline stages. The pipelined search architecture is designed to be implemented on a Xilinx Spartan 6 FPGA.


CHAPTER 2

IP ADDRESSING

In computer networking, which is mainly based on packet switched network protocols, the data to be transmitted are organized into packets before transmission. Two forms of addressing are used to route these data packets from a source node to a destination node, where a node can be either a host computer at the user end or a server servicing requests from users. The two addressing forms are IPv4 (Internet Protocol version 4) and IPv6 (Internet Protocol version 6). The main difference between them is that IPv4 uses a 32 bit address whereas IPv6 uses a 128 bit address. The longer address field in IPv6 is mainly intended to cope with the fast increasing number of interconnected networks and of devices connected to each of these networks. A block diagram of a complete IPv4 packet, including the 32 bit address field of the destination node, is shown in Figure 2.0.

Figure 2.0: Block diagram of IP packet showing the source and destination IP address

In IPv4, as mentioned above, the source and destination IP addresses are 32 bits long. These 32 bits are divided into 4 fields of 8 bits each, called octets. In decimal form, the IP address is written in dot notation, with the decimal values of the octets separated by dots. As an example, consider the 32 bit IPv4 address 11000000101010000000101000000000 in binary format. This address can be broken down into 4 octets and written as 11000000_10101000_00001010_00000000, which in decimal dot notation is the address 192.168.10.0.
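The conversion from the 32 bit binary form to dot notation can be checked with a few lines of Python. This is a minimal sketch; the function name `binary_to_dotted_decimal` is chosen here for illustration only.

```python
def binary_to_dotted_decimal(bits: str) -> str:
    """Convert a 32-character binary string into dotted-decimal notation."""
    if len(bits) != 32 or set(bits) - {"0", "1"}:
        raise ValueError("expected exactly 32 binary digits")
    # Split into four 8-bit octets and convert each one to decimal.
    octets = [bits[i:i + 8] for i in range(0, 32, 8)]
    return ".".join(str(int(octet, 2)) for octet in octets)

print(binary_to_dotted_decimal("11000000101010000000101000000000"))  # 192.168.10.0
```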

In the Internet Protocol, the IP address is mainly required for the interconnection of various networks. This naturally results in packets being routed based on network addresses rather than on host addresses. As a result, the IP address consists of two levels of hierarchy:

A. The network address part: The network part of the IPv4 address identifies the network to which the host is connected. Thus, all hosts connected to the same network have the same network address field.

B. The host address part: The host address identifies an individual host on a particular network.

As the network address forms the first bits of the IP address, we can call the network address a “Prefix”. A prefix can have a length of up to 32 bits in the IPv4 address space and is followed by a “*”, which stands for the host address. As an example, considering the IP address 192.168.10.0, let us assume that “192.168”, i.e., the first two octets (11000000_10101000) of the IP address, forms the network address part and “10.0” is the host address of one terminal on the network. The prefix 1100000010101000* (192.168.*) then represents all the $2^{16}$ hosts connected to the network addressed by “192.168”.
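The split into network and host parts can be reproduced with Python's standard `ipaddress` module. As an assumption for this sketch, the prefix written in the text as 192.168.* is entered in the full form 192.168.0.0/16 that the module expects.

```python
import ipaddress

# The 16-bit prefix 192.168.* written in full CIDR form.
network = ipaddress.ip_network("192.168.0.0/16")
host = ipaddress.ip_address("192.168.10.0")

print(network.prefixlen)      # number of network (prefix) bits: 16
print(network.num_addresses)  # addresses covered by the prefix: 65536 == 2**16
print(host in network)        # True: the host address falls under the prefix
```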

With this format of addressing, packets are routed using the network address until the destination network is reached, and then the host address is used to identify the host on that network to which the data packet has to be delivered. Using prefixes to identify a group of addresses in this way is called “Address Aggregation”. With this format of addressing, the forwarding table in the core routers only has to contain address prefixes and the forwarding information, which can be either the next-hop address or the output port number towards the destination network, as shown in the example in Table 2.1. When a packet comes in, the router has to search the forwarding table for the prefixes that match the bits in the destination address field of the packet.

| Destination Network Address Prefix | Next-Hop Address | Output Port Number |
|---|---|---|
| 192.168 | 208.24.101.0 | 1 |
| 194.171 | 208.24.101.10 | 2 |
| 208.10 | 208.24.101.16 | 4 |

Table 2.1: An example Forwarding table


This format of IP addressing led to the formation of two different addressing schemes

known as,

1. Classful Addressing

2. Classless Inter-domain Addressing (CIDR)

2.1 CLASSFUL ADDRESSING SCHEME

During the early days of the internet, the addressing scheme was based on a simple assumption of three different sizes for the network address, thus forming three different classes of networks. These predetermined sizes were 8, 16 and 24 bits of the 32 bit IP address. The networks were classified as follows:

a. Class A networks: The first 8 bits of the IP address represented the network address, while the remaining 24 bits represented the hosts on that network. This meant that there were few networks (a maximum of $2^{8} = 256$ networks), each with a large number of hosts connected to it (a maximum of $2^{24}$ hosts).

b. Class B networks: The first 16 bits of the IP address represented the network address, while the remaining 16 bits represented the hosts on that network. This meant that there was a sufficiently large number of networks (a maximum of $2^{16}$ networks), with up to $2^{16}$ hosts connected to each of them.

c. Class C networks: The first 24 bits of the IP address were used to address the networks, which meant a very large number of networks with only a few hosts (a maximum of $2^{8}$) connected to each of them.

This classification of networks with fixed network address sizes resulted in easier lookup procedures. However, as the internet grew and the number of networks and hosts increased, it became clear that this distribution of addresses was wasteful. In addition, the size of the forwarding tables grew exponentially because the routers now had to maintain an entry for every network in their forwarding tables. This resulted in slower IP lookups and hence slower overall network speeds.

2.2 CLASSLESS INTER-DOMAIN ADDRESSING

With the increase in the number of networks and the number of hosts on each network, a solution was needed to the problems of address shortage and wasteful allocation of IP addresses to some networks. For this, an alternative addressing scheme called “Classless Inter-Domain Routing” (CIDR) was introduced.

With the classful addressing scheme, prefix lengths were constrained to 8, 16 or 24 bits, forming Classes A, B and C respectively. CIDR uses the IP address space more efficiently by allowing prefix (network address) lengths to be arbitrary. CIDR thereby allows network addresses to be aggregated at various levels, based on the idea that addresses have a topological significance. This allows recursive aggregation of network addresses at various points in the topology of the internet, and it also reduces the number of entries the routers have to maintain in their forwarding tables.

To illustrate this, let us consider a group of networks with network addresses from 192.168.10/24 to 192.168.20/24. Here the slash symbol “/” and the number following it (24 in this case) denote the number of bits in the IP address that are used as the prefix (network address), so the leftmost 24 bits of the IP address represent the network address. In binary form, these 24 bit network addresses can be represented as shown in Figure 2.1.

192.168.10/24 - 11000000_10101000_00001010

...

192.168.16/24 - 11000000_10101000_00010000

...

192.168.20/24 - 11000000_10101000_00010100

Figure 2.1: IP network address representation


In the set of network addresses above, it can be seen that the first 19 bits (11000000_10101000_000) are common to all the networks. All these networks can therefore be combined into one network whose address is given by these 19 bits, denoted as 192.168.0/19 (the bits after the 19th are written as zeros). This process allows a large number of networks to be combined and represented by a common prefix value, which significantly reduces the amount of forwarding information that the network routers have to maintain.
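The length of the common prefix can be verified numerically. The sketch below is illustrative only: the helper name `common_prefix_length` is hypothetical, and the networks are written in the full dotted form (for example 192.168.10.0/24) that Python's `ipaddress` module requires. It relies on the fact that the bits shared by the lowest and highest address in a range are shared by every address in between.

```python
import ipaddress

def common_prefix_length(networks):
    """Return the number of leading bits shared by all network addresses."""
    values = [int(ipaddress.ip_network(n).network_address) for n in networks]
    low, high = min(values), max(values)
    # The highest bit in which low and high differ ends the shared prefix.
    return 32 - (low ^ high).bit_length()

nets = [f"192.168.{i}.0/24" for i in range(10, 21)]
length = common_prefix_length(nets)
base = nets[0].split("/")[0]
# strict=False clears the bits beyond the prefix length.
aggregate = ipaddress.ip_network(f"{base}/{length}", strict=False)

print(length)     # 19
print(aggregate)  # 192.168.0.0/19
```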

For the above addressing scheme to work, all the networks with the common prefix have to be serviced by the same service provider. If this criterion is not met, some additional information has to be maintained to route the packets correctly.

As an example, consider that the network with the address 192.168.16/24 is not serviced by the same Internet Service Provider (ISP) as the other networks. This leads to a discontinuity in the addressing scheme, and packets addressed to the network 192.168.16/24 may get dropped. A solution is to still combine the networks with the same 19 bit prefix and to add an entry to the forwarding table for the 192.168.16/24 network. Because a destination address can now match more than one entry, the router has to find the entry in the forwarding table that matches the address most specifically, that is, the matching entry with the longest prefix. This process is called “Longest Prefix Matching” (LPM). The address lookup process in the network routers therefore requires a search for the longest prefix that matches the destination address contained in the packet.
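Longest prefix matching over such a table can be sketched as a simple scan over all entries. The table contents, port labels and function name below are hypothetical and serve only to illustrate the rule; the linear scan is not the search method used in this project.

```python
import ipaddress

# Hypothetical forwarding table: the 19 bit aggregate plus the /24 exception.
FORWARDING_TABLE = {
    ipaddress.ip_network("192.168.0.0/19"): "port 1 (aggregate)",
    ipaddress.ip_network("192.168.16.0/24"): "port 2 (exception)",
}

def longest_prefix_match(destination: str):
    """Return the forwarding entry of the longest prefix that matches."""
    address = ipaddress.ip_address(destination)
    matches = [net for net in FORWARDING_TABLE if address in net]
    if not matches:
        return None
    best = max(matches, key=lambda net: net.prefixlen)
    return FORWARDING_TABLE[best]

print(longest_prefix_match("192.168.16.7"))  # port 2 (exception)
print(longest_prefix_match("192.168.12.7"))  # port 1 (aggregate)
print(longest_prefix_match("10.0.0.1"))      # None
```

An address in 192.168.16/24 matches both entries, and the longer 24 bit prefix wins over the 19 bit aggregate.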

Although the CIDR addressing scheme reduces the number of entries that have to be maintained in the forwarding tables, the IP lookup process becomes more complex, as it involves not only comparing bit patterns but also finding the appropriate prefix length to use for the search.

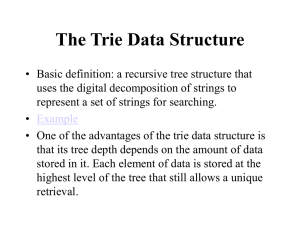

2.3 SOLUTIONS TO LONGEST PREFIX MATCH SEARCH PROCESS

The most commonly implemented search method for the Longest Prefix Match uses binary trie structures. A binary trie is a tree-like data structure whose nodes represent binary values of increasing length from the top of the tree to the bottom. The search uses the bits of the address for branching while traversing down the trie.

Consider the prefixes and the corresponding trie nodes in Table 2.2, which represent the prefixes and the forwarding information in a forwarding table.

| Prefix | Node |
|---|---|
| 0* | P1 |
| 000* | P2 |
| 010* | P3 |
| 01001* | P4 |
| 01011* | P5 |
| 011* | P6 |
| 110* | P7 |
| 111* | P8 |

Table 2.2: Prefixes and corresponding nodes in the forwarding table

This table can be represented in a binary trie structure as shown in Figure 2.2.

Figure 2.2: Example Binary trie structure

A node at a given level of the trie represents a prefix whose length is equal to the level of the node. For example, the node P2 at level 3 of the trie represents the prefix 000. This kind of binary trie structure is known as a uni-bit trie. The prefix nodes in such a structure are located not only at the edges of the trie but also at intermediate positions. This is a result of the exception networks that are served by different internet service providers, as discussed in section 2.2. For example, nodes P2 and P3 are exceptions to the group of networks represented by the prefix of node P1.

With this structure, prefixes usually overlap when a search is conducted. Let us consider an address beginning with 010 that has to be looked up. As seen from the trie structure above, both nodes P1 and P3 match this address. To resolve this, the Longest Prefix Match (LPM) technique is used, which results in node P3 being chosen as the final result of the lookup process.

Tries are an easy way to find the longest matching prefix for a destination IP address. At each node of the trie, the bits of the destination IP address decide the direction in which the search proceeds: if the next bit in the address is 1, the search takes the right path, and if it is 0, the search takes the left path. When the search reaches a prefix node, that node is registered as the longest matching prefix so far, and the search continues down the trie to find any longer matching prefix node that might be present. If no better matching node is found, the last registered prefix is chosen as the final result, the longest matching prefix.

As an example, let us consider an IP address starting with the bits 01001. The search begins at the node labeled “ROOT” and continues down the left path, where node P1 is found. This node is registered as the longest matching prefix, and the search continues to the right of node P1 down the trie. At position 010, node P3 is found, which replaces node P1 as the longest matching prefix node. The search then continues down the trie to 01001, where node P4 is encountered. This node is now registered as the longest matching prefix node, and the search terminates as there are no more nodes to be searched in the trie structure.
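The search described above can be sketched in Python using the prefixes of Table 2.2. The class and function names (`TrieNode`, `build_trie`, `longest_prefix_match`) are chosen here for illustration and are not taken from the project's implementation.

```python
class TrieNode:
    def __init__(self):
        self.children = {}  # maps "0" (left) or "1" (right) to a child node
        self.label = None   # prefix node name such as "P1", or None

def build_trie(prefixes):
    """Insert every prefix bit by bit, creating nodes as needed."""
    root = TrieNode()
    for bits, label in prefixes.items():
        node = root
        for bit in bits:
            node = node.children.setdefault(bit, TrieNode())
        node.label = label
    return root

def longest_prefix_match(root, address_bits):
    """Walk down the trie, remembering the last prefix node seen."""
    node, best = root, None
    for bit in address_bits:
        node = node.children.get(bit)
        if node is None:            # no further nodes on this path
            break
        if node.label is not None:  # register a longer matching prefix
            best = node.label
    return best

TABLE_2_2 = {"0": "P1", "000": "P2", "010": "P3", "01001": "P4",
             "01011": "P5", "011": "P6", "110": "P7", "111": "P8"}
root = build_trie(TABLE_2_2)

print(longest_prefix_match(root, "01001"))  # P4
print(longest_prefix_match(root, "0101"))   # P3
print(longest_prefix_match(root, "001"))    # P1
```

The variable `best` plays the role of the registered prefix node: it is overwritten by P1, then P3, then P4 for the address 01001.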

The search through the trie structure reduces the search space hierarchically at each step. Updating the trie structure is also simple: a search for the new prefix is performed, and when the search reaches a node with no further nodes below it, the required nodes are added to the structure to create the new prefix node. Node deletion is similar: the search process is used to locate a node, and the nodes below it can then be deleted.

Although the uni-bit trie search described above is simple, it can often lead to unnecessary search steps being performed on a path of the trie even after a prefix node has been registered and no better match can be found. This can slow down the search process. In addition, every extra node in the trie takes up memory, resulting in more memory being used than required. A number of ways have been suggested to overcome these limitations, some of which are discussed here.


2.3.1 PATH COMPRESSION TECHNIQUE

In this technique of trie length reduction, one-way branches are shortened by eliminating the intermediate nodes on the path that do not represent a prefix. To make this possible, each node has to hold additional information about which bit position in the IP address has to be compared next to find the next node in the trie. As shown in Figure 2.3, the paths from node P1 to P2 and from the root node to nodes P7 and P8 have been compressed. The position of the bit to be compared next is represented by the decimal number beside every node other than the nodes at the edges of the trie.

Figure 2.3: Example Path Compressed Uni-Bit trie

For example, consider that the search has reached the intermediate node between the root and nodes P7 and P8. This node directs the search process to compare bit number three of the destination IP address to determine whether the result is node P7 or node P8. As soon as a prefix node is encountered, the actual prefix value that the node represents is compared with the IP address, since the skipped bits have not been checked. In this way, the search is shortened by one step and the memory requirement is reduced by one node on this path of the trie. Similarly, all the one-way branches in a trie can be reduced, resulting in a speedup of the search process.

Although this method reduces the length of the trie structure, it adds a value that has to be maintained at each node for the search to proceed. This is a disadvantage that can lead to additional memory usage.
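Path compression can be sketched as follows, again using the prefixes of Table 2.2. This is an illustrative model rather than the project's implementation: all names are hypothetical, every one-way branch is removed (so the result may differ in detail from Figure 2.3), and each node keeps its full prefix string, whose length gives the position of the next bit to compare.

```python
class Node:
    def __init__(self, prefix=""):
        self.prefix = prefix  # bits on the path to this node
        self.label = None     # prefix node name, or None
        self.children = {}

def build(prefixes):
    root = Node()
    for bits, label in prefixes.items():
        node = root
        for i, bit in enumerate(bits):
            node = node.children.setdefault(bit, Node(bits[:i + 1]))
        node.label = label
    return root

def compress(node):
    """Remove non-prefix nodes that have exactly one child."""
    for bit, child in list(node.children.items()):
        while child.label is None and len(child.children) == 1:
            child = next(iter(child.children.values()))
        node.children[bit] = child
        compress(child)
    return node

def count(node):
    return 1 + sum(count(child) for child in node.children.values())

def search(root, address):
    node, best = root, None
    while node is not None:
        # Skipped bits were never tested, so verify the whole prefix here.
        if node.label is not None and address.startswith(node.prefix):
            best = node.label
        index = len(node.prefix)  # position of the next bit to compare
        if index >= len(address):
            break
        node = node.children.get(address[index])
    return best

TABLE_2_2 = {"0": "P1", "000": "P2", "010": "P3", "01001": "P4",
             "01011": "P5", "011": "P6", "110": "P7", "111": "P8"}
trie = build(TABLE_2_2)
before = count(trie)
compress(trie)
print(before, count(trie))     # 15 11
print(search(trie, "01001"))   # P4
print(search(trie, "01000"))   # P3
```

The lookup for 01000 reaches the node for P4 through the compressed branch, and the prefix comparison at that node rejects it, leaving P3 as the result.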

2.3.2 PREFIX EXPANSION / LEAF PUSHING

In a router, the forwarding table consists of address prefixes that correspond to the next-hop information for a particular destination IP address. The prefixes are obtained by the routers over a period of time and by combining networks with the same prefix bits using the address aggregation process described in the previous sections. The problem with searching either the uni-bit trie or the path compressed uni-bit trie is that the search requires either storing a prefix node while it proceeds down the trie to find the longest matching prefix, or backtracking through the trie. That is, the search either has to keep track of the best matching node encountered or has to move back up the trie if no valid match is found at the bottom edges of the trie. Neither is desirable when the trie structure is mapped onto pipeline stages for hardware implementation: keeping track of the best matching prefix requires additional memory, while backtracking requires a loop within the pipeline structure. To avoid these disadvantages, an optimization of the uni-bit trie called Prefix Expansion or Leaf Pushing can be used.

In this process, the prefix nodes at the intermediate levels of a trie are expanded so that they are pushed down to the bottom edges of the trie. When this optimization is performed on the uni-bit trie, nodes can overlap at the edges of the trie. Where two nodes overlap, the node that was already present at that position is given precedence over the new node. This ensures that the best matching prefix is obtained at the end of the trie.

As an example, the trie structure presented in Figure 2.4 represents a Prefix expanded/

Leaf Pushed trie structure.

Figure 2.4: Leaf pushed uni-bit trie structure


Comparing the trie structure above to that from Figure 2.1, it can be seen that the nodes

P1 and P3 which were present at intermediate location in the trie structure have been

pushed to the bottom edge of the trie by way of expanding their prefixes. Here,

A. Node P1’s prefix can be expanded by one bit to values 01* and 10* to push it to

the bottom of the trie.

B. Node P3’s prefix value can be expanded by 2 bits to values 01000*, 01001*,

01010* and 01011* to be pushed to the bottom of the trie.

When prefixes of nodes are expanded, there is a possibility of these nodes overlapping

with other nodes that represent the same prefix value but were part of the original trie

structure forming the exception nodes. In this case, when the expansion takes place,

preference is given to the node already present at a particular location over the expanded

node. This enables the best matching prefix to be found at the end of the trie search

process.

Another advantage of prefix expanding or leaf pushing is that all the nodes that represent

prefix values in the forwarding table are present only at the bottom of the trie structure.

This would eliminate the need to keep track of the best matching prefix node or the need

to backtrack during the trie search. This is due to the fact that all the prefix nodes are

pushed to the bottom of the trie and the nodes encountered at the end of the trie structure


are the best matching or the Longest Prefix Matches that can be found in the trie. As a

result of this, the mapping of the trie structure to the pipelined stages will become linear

and the extra memory needed to keep track of the prefix nodes can be eliminated.
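The leaf-pushing step described in this section can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the implementation used in this project: the class name Node, the functions insert, leaf_push, lookup and build, and the three-entry prefix table with next hops A, B and C are all hypothetical names chosen for the example.

```python
class Node:
    """One uni-bit trie node: two child slots and an optional next hop."""
    def __init__(self):
        self.child = [None, None]
        self.hop = None


def insert(root, prefix, hop):
    """Insert a prefix given as a bit string such as '010'."""
    node = root
    for bit in prefix:
        idx = int(bit)
        if node.child[idx] is None:
            node.child[idx] = Node()
        node = node.child[idx]
    node.hop = hop


def leaf_push(node, inherited=None):
    """Push next-hop information from intermediate nodes down to the leaves."""
    if node.hop is not None:
        inherited = node.hop          # a more specific prefix takes precedence
    if node.child[0] is None and node.child[1] is None:
        node.hop = inherited          # leaf: keeps the best match seen on the path
        return
    node.hop = None                   # intermediate nodes no longer hold prefixes
    for idx in (0, 1):
        if node.child[idx] is None:
            node.child[idx] = Node()  # expansion creates the missing sibling leaf
        leaf_push(node.child[idx], inherited)


def lookup(root, address_bits):
    """Walk to a leaf; no best-match register and no backtracking is needed."""
    node = root
    for bit in address_bits:
        nxt = node.child[int(bit)]
        if nxt is None:
            break
        node = nxt
    return node.hop


def build(table):
    """Build a leaf-pushed uni-bit trie from a {prefix: next_hop} table."""
    root = Node()
    for prefix, hop in table.items():
        insert(root, prefix, hop)
    leaf_push(root)
    return root
```

With the hypothetical table {"0": "A", "010": "B", "01001": "C"}, a lookup of 01000 ends at a leaf created by expanding 010*, while a lookup of 01001 ends at the original, more specific node, which is the precedence rule described above.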

2.3.3 USING MULTI BIT TRIES

When a search through the forwarding table in a router is conducted using a trie structure

described in section 2.3.1, the search process by itself will be simple but will require

extra memory to remember the best matching prefix or will require backtracking through

the trie structure. Using prefix expansion explained in section 2.3.2 has benefits by which

it can eliminate the need for extra memory to register the matching nodes or the need for

backtracking. But with both these methods, the search is still performed on a bit by bit

basis. This has the disadvantage of requiring a larger amount of time as only one bit of the

IP address is compared in one clock cycle.

This problem of increased search time can be reduced by searching through the trie

structure using multiple bits at a given time. This leads to the creation of Multi-bit tries

where search at each node of the trie will be performed using multiple bits.

To illustrate this process, the trie from Figure 2.2 has been transformed to a multi bit trie

and is shown in Figure 2.5.


Figure 2.5: Multi bit trie structure with fixed stride

It can be observed from the diagram in Figure 2.5 representing the trie structure that from

level 2 down the trie, the search process now uses 2 bits at a time. The first level still has

a single bit comparison. The number of bits that are used for comparison at a node in the

trie is called a “Stride”. So here, at level 1 of the trie which is comparison at the root

node, the Stride value is equal to 1 and the bit comparisons at levels 2 and 3 have a Stride

value equal to 2. If the Stride value used is constant throughout a level, then the trie is

called a “Fixed stride multi-bit trie” or else if the stride values vary on the same level of

a trie, it will be called a “Variable stride multi-bit trie”. The trie structure above

represents a fixed stride multi-bit trie as the stride at each level of comparison is constant.

The use of a multi-bit trie structure will now create a new problem where prefixes of

arbitrary lengths, that is, prefixes with lengths other than the stride values cannot be

compared. This will require some prefix transformations where the nodes with arbitrary

lengths of prefixes will have to be expanded. As an example, the trie structure in Figure

2.5 uses a stride of 1 at level 1 and a stride of 2 at levels 2 and 3. This would mean that, if

there existed a node with a prefix length of 4 bits say 0100*, that node will now have to

be expanded by one bit to 01000*, 01001* to comply with the search process. When this

expansion is done, there is a possibility of nodes overlapping as is the case in this

example where the expanded node would now overlap with node P4 that represents the

prefix value of 01001*. When this scenario occurs, the node P4 is preserved and is not

replaced by the expanded node as preserving the node will lead to a more accurate

comparison and thus the correct forwarding information.
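The prefix transformation described above can be sketched in Python as follows. This is only an illustration under stated assumptions: the function names expand_prefix and expand_table are hypothetical, the node name Px stands for the example 4-bit prefix 0100*, and the allowed prefix lengths 1, 3 and 5 follow from strides of 1, 2 and 2.

```python
from itertools import product


def expand_prefix(prefix, target_len):
    """Return every bit string of length target_len that starts with prefix."""
    pad = target_len - len(prefix)
    if pad < 0:
        raise ValueError("prefix is longer than the target length")
    return [prefix + "".join(bits) for bits in product("01", repeat=pad)]


def expand_table(table, allowed_lengths):
    """Expand each prefix to the next allowed length (a stride boundary).

    Longer original prefixes are processed first, so a node that already
    exists at a position is preserved when an expanded node overlaps it.
    """
    expanded = {}
    by_length = sorted(table.items(), key=lambda kv: len(kv[0]), reverse=True)
    for prefix, node in by_length:
        target = min(n for n in allowed_lengths if n >= len(prefix))
        for candidate in expand_prefix(prefix, target):
            expanded.setdefault(candidate, node)  # keep the existing entry
    return expanded
```

For the table {"0100": "Px", "01001": "P4"}, the expansion of 0100* yields 01000* and 01001*, and the entry for 01001* remains P4.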

Using this method, the trie structure can be compressed which will lead to a decrease in

the length of the trie and therefore a reduction in the number of pipelined hardware stages

that will be needed to map the trie structure. In the extreme case, the trie could be reduced to a

single stage when all 32 bits are compared at once. Though this would reduce the length

of the trie to 1 level, it will also require a larger memory with a longer memory access

time to search for the correct forwarding information. Also, though the overall search

time has now been reduced using multi bit comparisons, the problem of backtracking or

remembering the best matching prefix still exists in this trie structure which can still

slow down the search through the trie.


2.3.4 USING A COMBINATION OF LEAF PUSHING AND MULTI BIT TRIES

As described above, although using either one of the search methods suggested has a set

of benefits, it would still have drawbacks like large size of the trie structure, requiring

extra memory to register matching nodes or even the need for backtracking through the

trie to find the best matching prefix or the Longest Prefix Match value. These drawbacks

can be reduced to a minimum when the prefix expansion/leaf pushing method and the

multi-bit trie search method suggested above are combined to form a trie structure that

will be both compact and will not require any backtracking.

To see the benefits of this search method, consider the trie in figure 2.2 to be leaf pushed

and expanded to form the multi bit trie structure presented in Figure 2.6.

Figure 2.6: Leaf pushed multi bit trie


With this trie structure, it can be seen that search is performed at level 1 using a stride

value of 2 and then subsequently using a stride value of 1 on all the other levels of the trie

structure. Also, it’s seen that nodes P1 and P3 have been expanded and pushed to the

bottom edges of the trie structure. Although using this kind of a trie structure cannot

produce the most compact trie possible, it will result in a significantly more compact structure

when compared to a uni-bit trie and will also have the added benefit that all the prefix

nodes are at the bottom of the trie making sure that the nodes encountered at the end of

the search are the best matching or the Longest prefix matches and thus eliminates the

need for any sort of backtracking or need to remember a previously encountered

matching node. Therefore, the speed of the search process and the memory requirements

with this structure can be optimized to obtain search results in the shortest time possible.


CHAPTER 3

PROPOSALS FOR THE SEARCH PROCESS USING BINARY TRIES

There are various proposals for the implementation of the IP lookup process using the

binary trie structure and its variations that have been discussed above. Some of the

proposals optimize the uni-bit trie structure directly while others modify the trie structure

as needed to map onto a set of pipelined stages. Some of the proposals have been briefly

described in this chapter.

One proposal is to use a binary trie with variable stride values at different levels of the

trie and mapping nodes at each level of the trie to a memory block in a pipeline stage.

Although using variable strides does produce a compact trie structure as described in

Chapter 2, the uneven distribution of nodes on different levels of the trie structure will

lead to the use of uneven memory blocks at different stages of the pipeline hardware

architecture which will mean that some memory blocks will consume more time than

others. Furthermore, using variable strides for hardware implementation can be a

complex design as the number of bits that will have to be compared at each stage can be

different and additional logic units will be required to make the decision about the

number of bits to be used for the search. It can also lead to a non-uniform distribution of

the nodes in the memory blocks at each stage of the pipeline.


Another proposal is to create a circular pipelined trie structure. In this, the pipelined

stages are configured in a circular structure with multi point access to allow for the

lookup process to begin at any point in the pipeline stages. The stage where the lookup

starts is called the “Starting Stage”. In this implementation, a trie is divided into many

smaller subtries of equal size and these subtries are then mapped to the different stages of

the pipeline structure to create a balanced memory distribution across all the stages. As a

result, the nodes are assigned to the pipeline stages based on the subtrie divisions rather

than on the level at which the nodes are present in the main trie structure. This sort of

mapping might require some subtries to wrap around if their roots are mapped to stages

closer to the end of the pipeline.

Due to this type of distribution of subtries, although all the incoming IP addresses enter

the pipeline at the first stage, the search may not start for the next few stages depending

on which stage of the pipeline the root of the subtrie corresponding to the address bits has

been mapped to. A solution to this problem was proposed by implementing a pipeline in a

ring structure consisting of two data paths.

Figure 3.1: Pipeline stages with a ring structure


The diagram in Figure 3.1 represents the ring pipeline structure consisting of two

datapaths. Here datapath 1 refers to the path the search algorithm takes during its first

pass and datapath 2 represents the path taken during the second pass through the pipeline.

The datapath 1 is designed to be operational only during the odd cycles of the clock

signal whereas the datapath 2 is active during the even cycles of the clock signal. Also,

this search algorithm will require the use of an index table for the search process to

initially find at which stage in the pipeline the actual search has to begin. Although this

idea of a ring pipeline produces an even distribution of nodes over the memory, the

throughput falls to 1 lookup every two clock cycles as the search has to now pass through

all the pipeline stages twice before a final output is obtained.

A different approach to the IP lookup through a ring pipeline architecture was suggested

and called the Circular, Adaptive and Monotonic Pipeline (CAMP). In this approach, the

trie structure was broken down into a root subtrie which is the starting point of the trie

structure and subsequent child node subtries. This search algorithm uses the multi bit trie

structure. Hence the initial stride of the trie is determined and is used in an index table to

hold the starting points of the subtries that are mapped to the pipeline stages.

Figure 3.2 shows the structure of the CAMP architecture in more

detail.


Figure 3.2: Pipeline structure used in the CAMP search algorithm

In this lookup algorithm, the distribution of nodes to the memory stages of the pipeline

structure is made independent of the levels in a trie at which the nodes are present, that is,

the nodes are not mapped onto the memory based on the level in the trie structure where

they are present, rather they are mapped based on which subtrie they belong to. In this

way, the subtries are now mapped uniformly to the memory stages. While doing so, some

of the nodes of one subtrie may get mapped to the next memory stage and so on. These

stages in the pipeline are interconnected internally so that the lookup can pass through all

the stages.

Here, the initial stride bits listed in the index table at the beginning are used to find the

stage at which a search should begin. Now since search requests can arrive at different

stages at arbitrary time periods, a FIFO queue is placed in front of every stage to buffer

the requests while a previous search request is traversing through the stages of the

pipeline. When the stage is idle, the first request in its queue is accepted and the search

progresses. In this way, since the requests can be buffered at different stages, the order of


the incoming requests can be lost which would again require that the outputs be buffered

to reorder the outputs in the correct sequence to match that of the input sequence.

Also, since stages can be blocked due to previous requests passing through the pipeline,

there can be a long delay that can be encountered for processing certain search requests

over others. This is a major drawback of this pipeline structure which can reduce the

overall throughput by a significant amount.


CHAPTER 4

IMPLEMENTATION DETAILS OF THE MEMORY EFFICIENT PIPELINE

ARCHITECTURE

The main aim of the implementation is to create a pipelined IP address lookup

architecture with a uniform distribution of all the nodes in a trie structure, thereby

creating evenly balanced memory blocks across all the pipeline stages with uniform

memory access times for the lookup and to eliminate the need for any sort of loopback to

any of the previous stages in the pipeline which was the case in the pipeline architectures

described in the previous chapter. The pipeline is designed to be implemented on a Xilinx

Spartan 6 FPGA which has low power requirements and sufficient memory on board to

hold the prefix tables used here.

The Ring and CAMP architectures divide the main trie into many subtries which are then

uniformly distributed over the pipeline stages. But they do not enforce the constraint that

the roots of all the subtries be mapped to the first stage of the pipeline. This leads to the

pipelines having multiple starting points causing search conflicts and affecting the overall

throughput of the architecture.

There are two main constraints that are enforced in this implementation that try to solve

the issues from the previous implementations.


Constraint 1: The roots of all the subtries of the main trie are mapped to the first stage of

the pipeline.

Figure 4.1: Example trie structure with two subtries and mapping to the pipeline

Consider an example of a simple trie structure having two subtries with the roots of the

subtries at nodes B and C. Applying the above mentioned constraint to this trie, we see

that the roots of the subtries (node B and node C) are mapped to stage 1 and the child

nodes are mapped to subsequent stages.

This ensures that the search process has only one starting point, thereby eliminating any

loopbacks and ensuring that the output port number of the router through which the IP

packet has to be forwarded that is found at a particular stage of the pipeline is the best match

for the IP address being used for the lookup process.

Also, the node distribution of the trie structure over the pipeline stages is made on the

basis of the level in the trie they are present at. With the earlier implementations, this

meant that the nodes at the same level in a subtrie are all mapped to the same pipeline


stage. With a relaxation of this rule whereby nodes on the same level of a subtrie can be

mapped to different stages of a pipeline structure, it can be seen that a more uniform

distribution of nodes can be achieved and this leads to the second constraint that has to be

followed for this implementation.

Constraint 2: If one node is the child of another node (parent node), then the parent node

has to be mapped to a pipeline stage preceding the stage to which the child node has

been mapped.

Considering an example for this, let’s assume the presence of three nodes namely, nodes

A, B and C in a trie structure. Nodes B and C are the child nodes of parent node A. With

the above constraint enforced, this means that node A has to be mapped to a pipeline

stage preceding the stage to which nodes B and C have been mapped. This constraint

again ensures that the entire trie structure is distributed uniformly from top to bottom

starting at the first stage and ending at the last stage of the pipeline. This method of node

distribution, as explained before helps in increasing the throughput by making sure that

the search process flows in only one direction starting at the first stage and ending at one

of the stages down the pipeline structure.

To support this type of node mapping, No-Operations (no-ops) have to be supported

using which a stage can be skipped over to reach the intended node located in one of the

later stages of the pipeline.


Figure 4.2: Example subtrie with one parent (node A), two children (nodes B, C) and

mapping to the pipeline

4.1 IMPLEMENTATION OF THE PIPELINE STRUCTURE

With the above mentioned constraints taken into consideration, the main goal now is to

achieve a throughput of one IP lookup for every clock cycle, a uniform distribution of

nodes across the memory and finally, a constant delay for every IP lookup. For this, the

pipeline structure is implemented using an example prefix table (forwarding table) and a

trie structure corresponding to the entries in the prefix table.

4.1.1 THE PREFIX TABLE

Let us assume a sample prefix table with the following entries as shown below in Table

4.1.


Prefix     Node
0*         P1
1*         P2
101*       P4
11010*     P5
1001*      P6
10101*     P7
1100*      P8
010011*    P9
101100*    P10
000010*    P11
111*       P12
01011*     P13
100011*    P14
11111*     P15

Table 4.1: Prefix/forwarding table showing sample prefix values and their corresponding

nodes

The prefix values in this table vary in size from 1 bit up to 6 bits in length. These prefixes

represent the address aggregation at arbitrary levels for the networks that was described

in Chapter 2. The nodes are used to represent the end location for a prefix search when

these prefixes are implemented as a trie data structure.


4.1.2 THE TRIE STRUCTURE AND MAPPING

Based on the set of prefix values presented above, a Uni-bit trie structure corresponding

to these prefix values is constructed as shown in the tree diagram presented in Figure 4.3.

Figure 4.3: Trie structure corresponding to the given prefixes in Table 4.1

It can be seen here that the “Root” node is the starting point of the trie and depending on

the value of the bits in the IP address being looked up, the search process can either go to

the left (when a bit is 0) or to the right (when a bit is 1) of a node down the trie structure

till the best matching result is found.
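The bit-by-bit search described above can be sketched in Python as follows. This is a minimal illustration, not the project's implementation: the function names build_trie and longest_prefix_match and the nested-dictionary representation are assumptions, and only a subset of the entries from Table 4.1 (P1, P2, P4, P6 and P7) is used.

```python
def build_trie(table):
    """Build a uni-bit trie as nested dictionaries keyed by '0' and '1'."""
    root = {}
    for prefix, node_name in table.items():
        cur = root
        for bit in prefix:
            cur = cur.setdefault(bit, {})
        cur["label"] = node_name      # marks a prefix node
    return root


def longest_prefix_match(root, address_bits):
    """Go left on 0 and right on 1, remembering the last prefix node seen."""
    cur, best = root, None
    for bit in address_bits:
        cur = cur.get(bit)
        if cur is None:               # no child in that direction
            break
        best = cur.get("label", best)
    return best


# Subset of Table 4.1, written without the trailing '*'
SAMPLE_TABLE = {"0": "P1", "1": "P2", "101": "P4", "1001": "P6", "10101": "P7"}
```

Note that this plain uni-bit search has to carry the variable best along the path, which is exactly the extra state that leaf pushing is meant to remove.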

As explained in chapter 2, this type of uni-bit trie structure can be very inefficient in

terms of node distribution in the pipeline search structure which will also lead to a

complicated lookup process. This is due to the fact that address aggregation is at arbitrary


levels with prefix nodes placed at different levels of the trie structure. This will lead to

the requirement for some extra processing or may sometimes even lead to unexpected

results for a given destination IP address that is being used for the lookup. Therefore, the

Leaf pushing algorithm is applied to this trie structure to push all the prefix nodes at

arbitrary levels to the bottom of the trie structure, thereby guaranteeing more accurate

results.

Therefore, the uni-bit trie structure after Leaf-Pushing will be as shown in the trie

structure represented in Figure 4.4.

Figure 4.4: Leaf-pushed uni-bit trie structure

Although the process of leaf pushing can lead to the creation of duplicate nodes at the

edges of the tries, it will result in a more uniform length of the prefix values in the prefix/

forwarding table.


But, we see here that the trie structure is still in the uni-bit format. By looking at the node

distributions, it can be seen that changing the uni-bit trie structure to a multi-bit trie

structure can lead to a decrease in the height of the entire trie structure by eliminating

intermediate nodes and thereby reducing the number of stages that will be required in the

pipeline structure used for the IP lookup process. However, using multi-bit addressing for

nodes throughout the trie structure would result in an uneven distribution of nodes in the

actual pipeline structure and will also cause complexity in the search process itself.

Therefore, multi-bit addressing is used only at the root of the trie structure to create

subtries which will remain as uni-bit structures. This multi-bit addressing used at the root

level of a trie structure, as explained in chapter 2 is called the “Initial stride” for the trie.

In order to obtain an initial stride value that would lead to a uniform distribution of nodes

across the pipeline’s memory, we have to consider a pipeline that is longer by at least one

stage than the maximum height of the leaf pushed uni-bit trie structure starting at its root.

For the implementation here, since the maximum height of the trie is 6, a 7 stage pipeline

is considered. With this constraint, the algorithm that is used to select the length of the

initial stride is presented in Table 4.2.


Inputs: Leaf-pushed uni-bit trie (T)
        Number of pipeline stages (P)
Output: Initial stride, i

Initialize i to max[1, ((height of T) - P)]
Loop: use the value of i to expand T to get the expanded trie
    if 2^(i-1) < (number of nodes in expanded trie / number of pipeline stages) < 2^i
        i is the required initial stride value
    else
        increment i by 1 and return to Loop
End Loop

Table 4.2: Algorithm to find initial stride

Using the algorithm in the above table to find the initial stride for the uni-bit trie above,

the first iteration through the loop would lead to the trie structure that would be

equivalent to that of the uni-bit trie (refer Figure 4.4) as the value of the initial stride (i)

will be 1.

For the second iteration through the algorithm, a multi-bit trie structure with initial stride,

i = 2 is constructed and is shown in the following diagram in Figure 4.5.

Figure 4.5: Multi-bit trie with initial stride i = 2


With the value of i = 2, the IF condition in the algorithm fails and therefore the value of i

is incremented by 1 and another iteration through the algorithm is made. The trie

structure resulting from an initial stride of 3 is shown in the tree diagram in Figure 4.6.

Figure 4.6: Multi-bit trie with initial stride, i = 3

With this multi-bit trie structure, six subtries are created with their roots at nodes a, b, c,

d, e and f with prefixes 011* and 010* representing an output port in stage 1. The leaf

pushing and use of multi-bit tries optimizes the forwarding table entries by creating

subtries which now represent a higher level of network address aggregation.

As the next step, the six subtries obtained in the multi-bit trie are now converted into a

segmented queue structure starting at the root of each subtrie till the last node of that

subtrie. The nodes at each level of the subtrie are mapped to one segment in the queue. In

this way, six segmented queues are created as shown below.


As an example to show the creation of the segmented queue structure, let’s consider the

subtrie starting at node “a”.

Figure 4.7: Subtrie with root at node “a” in the multi-bit trie from figure 4.6

As there are two levels in the subtrie below the root node, there are two segments in the

queue corresponding to this subtrie. Starting at node ‘a’ if there are child nodes to node

‘a’, fill the first segment of the queue with the child node towards the left first (in this

case node P1) and then the second node (in this case, node ‘g’) to the second location in

the first segment of the queue.

After this is done, the queue will be as shown below.

[ P1, g ]

Next, if nodes P1 and ‘g’ had child nodes, their child nodes will be added to the second

segment similar to how nodes P1 and ‘g’ were added to the first segment. Here, since

node P1 does not have any child node, only the nodes below node g are added to the

second segment. The queue would appear as follows.

[ P1, g ] [ P11, P1 ]

Repeating the same process over all the subtries considering a different segmented queue

for each subtrie, the following queues will be created.

Segmented queue for subtrie with root at node “a”: P1, g, P11, P1

Segmented queue for subtrie with root at node “b”: h, i, P1, q, P13, P1, P9

Segmented queue for subtrie with root at node “c”: j, P6, P1, q, P13, P2, r

Segmented queue for subtrie with root at node “d”: k, l, P4, P7, s

Segmented queue for subtrie with root at node “e”: P8, m, P5, P2

Segmented queue for subtrie with root at node “f”: P12, n, P12, P15


P10

P4

Also, since the roots of all subtries are mapped to the memory block at the first stage of

the pipeline, the total number of memory stages available for mapping the remaining

nodes onto the 7 stage pipeline is now 6. To achieve an even distribution of nodes at each

stage, the total number of nodes is divided by the number of stages available to find the

number of nodes to be mapped to each stage. In this case, 6 nodes can be mapped to each

stage from stage 2 to stage 7.

These segmented queues are next sorted in a descending order based on,

A. The number of segments in each queue.

B. The number of nodes in the first segment of each queue.

The nodes are then popped from the queues in order. The segments at the front of the

segmented queues are the only segments allowed to pop the nodes. In this way, when the

current stage’s memory fills up, the queues are again re-arranged in the descending order

based on the above two criteria and the node popping continues until all the nodes in all

queues are mapped to the memories of the pipeline stages.
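One possible reading of the mapping procedure above can be sketched in Python as follows. This is an illustration under stated assumptions, not the exact procedure used in this project: the function name map_queues_to_stages is hypothetical, each segmented queue is modeled as a list of segments, and a segment that empties during a stage is only removed once that stage is closed, so that the next segment (the child nodes) can never land in the same stage as its parents.

```python
def map_queues_to_stages(queues, capacity, num_stages):
    """Assign nodes from segmented queues to pipeline stages.

    queues     : list of queues; each queue is a list of segments and
                 each segment is a list of node names
    capacity   : number of nodes allowed per stage
    num_stages : stages available after the first (root) stage
    """
    # Work on a copy so the caller's queues are left untouched
    queues = [[list(seg) for seg in q if seg] for q in queues]
    stages = []
    for _ in range(num_stages):
        queues = [q for q in queues if q]
        if not queues:
            break
        # Descending order: number of segments, then size of the front segment
        queues.sort(key=lambda q: (len(q), len(q[0])), reverse=True)
        stage = []
        for q in queues:
            front = q[0]              # only front segments may pop nodes
            while front and len(stage) < capacity:
                stage.append(front.pop(0))
        for q in queues:
            if not q[0]:
                q.pop(0)              # expose the next segment for later stages
        stages.append(stage)
    if any(seg for q in queues for seg in q):
        raise ValueError("not enough stages for all nodes")
    return stages
```

In the example of this chapter the call would use a capacity of 6 nodes and 6 available stages. Because a child segment becomes the front segment only after its parent segment has been fully popped, every parent ends up in an earlier stage than its children, which is what Constraint 2 requires.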

The node distribution across the 7 stages of the pipeline after the mapping process

is shown in Figure 4.8.


Figure 4.8: Pipeline structure with nodes mapped to its memory modules

As seen in the diagram above, the root nodes of all the six subtries are mapped to the first

stage memory block of the pipeline structure. Therefore, the first stage is usually not

balanced. The remaining nodes are then evenly distributed over the remaining six stages.

The last stage has fewer nodes and any future nodes that might get added to the trie due

to variations in the network topology can be mapped to the last stage.


4.2 IMPLEMENTATION OF THE HARDWARE

The general structure of the hardware to implement this search process is presented in

Figure 4.9.

Figure 4.9: General structure of the pipelined hardware

Each stage in the pipeline shown above consists of several logic units and a memory

block to store the data corresponding to the nodes mapped to that particular stage. The

logic units and the memory block are synchronous in their operation. These units will be

described in the sections that follow. There are two main inputs to this design:

1. Packet_in: This is a 44 bit packet containing two fields. First field is the 32 bits of

the IP address, the forwarding information of which has to be searched for in the

pipeline structure. The second part contains the Write information, to any of the

stages that might be required in case of an update operation. This is a 12 bit data.

Figure 4.10: Input packet format


2. Clock: This is the clock signal used for the synchronous operations in the logic

units and also for the synchronous memory access at each stage.

The output from the hardware is a 4 bit number representing the output port on the router

through which the IP packet received will have to be forwarded.

4.2.1 DATA ORGANIZATION IN MEMORY BLOCKS

Each of the seven memory blocks used in this implementation has 32 memory locations

with each location holding up to 8 bits resulting in a total of 256 bits (32 bytes) of data in

each memory bank. Every node mapped to a stage in the pipeline will occupy two

memory locations, one location to represent the child node when the next bit in the IP

address is a 0 and another to represent the child node when the next bit is 1.

Each 8 bit data in the memory locations holds the partial address to the memory location

where the next child node is present (4 bits), an enable bit (1 bit) used to enable memory

access at the next stage where the child node is present and the stage number (3 bits) at

which the memory block has to be accessed next to find the child node. The data packet

output from a memory block at the end of a clock cycle when a search is in progress is

shown in Figure 4.11.


Figure 4.11: Data packet from memory block

The data in the memory blocks at each of the pipeline stages that forms a part of the

forwarding table used for the IP lookup process in this implementation is organized in the

tables listed in Appendix A.
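The 8-bit memory word described in this section can be modeled in Python as follows. This is a sketch under an assumed bit layout: the partial address in bits 7 to 4, the enable bit in bit 3 and the stage number in bits 2 to 0. The position of the enable bit matches the multiplexer description for stage 1, but the exact layout is defined by Figure 4.11, and the function names pack_word and unpack_word are hypothetical.

```python
def pack_word(partial_addr, enable, stage):
    """Pack the three fields of a memory word into one 8-bit value."""
    if not (0 <= partial_addr < 16 and enable in (0, 1) and 0 <= stage < 8):
        raise ValueError("field out of range")
    return (partial_addr << 4) | (enable << 3) | stage


def unpack_word(word):
    """Split an 8-bit memory word into (partial_addr, enable, stage)."""
    return (word >> 4) & 0xF, (word >> 3) & 0x1, word & 0x7
```

For example, a partial address of 1010, an enable bit of 1 and a stage number of 011 pack into the byte 10101011.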

4.2.2 LOGICAL BLOCKS USED AT EACH STAGE

1. The Shift Register block

Each of the seven stages in this implementation has a shift register used to shift the

destination IP address for which the corresponding output port number is being looked up

by either 4 bits or by 1 bit. This shift register is a synchronous module shifting data to the

left at every clock cycle. The shift register is then followed by a register which is used to

synchronize the output data from the shift register module with output data from the other

blocks of that stage. A block diagram of the shift register module is shown in Figure 4.12.

This module has the incoming IP address and the clock signal as inputs.

Figure 4.12: Shift register module


2. Memory Module - Stage 1

The memory module at the first stage of the pipeline has a slightly different structure than

the memory modules used in the subsequent stages. It consists of a 32 by 8 bits memory

block followed by a register and a 2:1 multiplexer.

Figure 4.13: Stage 1 Memory module

The Address input is a 5 bit input used to access a memory location to either read or write

data at every clock cycle when a lookup request or an update request arrives. The Write

Enable signal is used to enable the memory block’s write process. The Write Data input is

used to hold the data to be written into the memory block in the event that an update to

the memory block has to be made.

The register at the output is used to register the output from the memory block and

synchronize it with the outputs from the other blocks. Finally the 2:1 multiplexer here is a

combinational block used to pass data to the next stage in the pipeline. The select line


input (Sel) to the multiplexer is the Enable bit (bit 3 in this case) from the data packet

output from the memory.

3. Memory Module - All other stages

The memory module used in all the other stages of the pipeline other than stage 1 has a

structure that varies from that used in stage 1. This module consists of a concatenation

block and a memory block. The register and 2:1 multiplexer that were present at stage 1

are now located outside the memory module in the top layer module for the stage. The

structure of the memory module used here is represented in the block diagram in Figure

4.13a.

Figure 4.13a: Memory module structure

The inputs to this module are the 8 bits of data from the previous stage and the MSB of

the shifted IP address from the previous stage. These two inputs are connected to the

concatenation block where the partial address in the Data_In input and the 1 bit MSB of

the IP address are concatenated to form the address of the memory location to be

accessed at that particular stage in the pipeline. The other inputs are the Write Enable, the

Write Data and the clock signal which have the same functionality as described in the

stage 1 memory module description.
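
The address formation performed by the concatenation block can be illustrated with a short Python sketch. The function name next_stage_address is hypothetical. The sketch assumes that the partial address is held in the 4 most significant bits of Data_In and that it forms the upper bits of the 5 bit address, with the MSB of the IP address as the lowest bit.

```python
def next_stage_address(data_in: int, shifted_ip: int) -> int:
    """Form the 5 bit memory address from Data_In and the shifted IP address."""
    partial_address = (data_in >> 4) & 0xF  # upper 4 bits of the 8 bit frame
    ip_msb = (shifted_ip >> 31) & 1         # MSB of the 32 bit shifted IP address
    # Assumption: partial address in the upper bits, IP MSB as the LSB.
    return (partial_address << 1) | ip_msb
```

With Data_In equal to 0xA9, the partial address is 1010, so the module would access location 10101 or 10100 depending on the MSB of the shifted IP address.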

4. Node Distance Check module

The second constraint used to map the trie nodes to the pipeline stages states that nodes

on the same level of a subtrie can be mapped to memory blocks of different pipeline

stages. With this type of distribution, there will be a need to introduce no-operations so

that certain stages can be skipped to resume search in stages where the next node will be

present. This is accomplished by using the enable bit and the stage number field in the

data packet output from the memory block and the Node Distance Check module.

The block diagram of this module is presented in Figure 4.14.

Figure 4.14: Node distance check module

The operation of this module is as follows:

• When stages are to be skipped, the data from the previous stage is passed to the

node distance check module.

• In the first module, named Node Distance Check, the bits representing the stage

number are extracted and checked to see if the stage number is 0. If not, the value

is decremented by 1 and forwarded to the next stage.

• In the module labeled NDC Enable, if the stage number value is 0, the enable bit

is set to 1; otherwise the enable bit, which has a value of 0, is passed forward

unchanged.

• In the next module, named NDC Concatenation, the partial memory address

(4 bits), the enable bit (1 bit) and the stage number (3 bits) are combined to form the

data output to be passed to the next stage in the pipeline.
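
The steps above can be sketched in Python as a single function. This is an illustrative model only. The function name node_distance_check is hypothetical, the frame layout (partial address in bits 7 to 4, enable bit in bit 3, stage number in bits 2 to 0) is inferred from the field widths given in this chapter, and the sketch assumes that NDC Enable examines the stage number after it has been decremented.

```python
def node_distance_check(data_in: int) -> int:
    """Model the Node Distance Check, NDC Enable and NDC Concatenation blocks."""
    partial_address = (data_in >> 4) & 0xF
    enable = (data_in >> 3) & 1
    stage_number = data_in & 0x7

    # Node Distance Check: decrement the stage number unless it is already 0.
    if stage_number != 0:
        stage_number -= 1

    # NDC Enable (assumption: acts on the decremented value).
    if stage_number == 0:
        enable = 1

    # NDC Concatenation: rebuild the 8 bit frame for the next stage.
    return (partial_address << 4) | (enable << 3) | stage_number
```

Under these assumptions, a frame that must skip two stages starts with a stage number of 2 and has its enable bit set once the count reaches 0.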

5. Update Module

Routers very often have to accommodate updates resulting from changes in the network

topology surrounding the network to which they are connected. But this update operation

has to be carried out without stalling the pipeline operation so that the lookup operation is

not disrupted. The Update module is used here to accomplish this functionality. The block

diagram of this module is shown in Figure 4.15.

Figure 4.15: Update module

The data to be written during an update to the memory is passed into the pipeline as part

of the 44 bit Packet_in data input. This data occupies the last 12 bits of the Packet_in

input packet, and the structure of the write data is shown in Figure 4.16.

Figure 4.16: Write data packet

Here, the first bit is the enable bit, which indicates whether or not the write is to the

current stage in the pipeline. The next 3 bits hold the stage number at which the update

has to be performed, and the last 8 bits are the actual data that has to be

written to a particular location in the memory block.

The operation of the update module is as follows:

• When Write_Data_In arrives, which is the 12 bit update data, the 3 bit stage

number is extracted and checked to see if its value is 0. If the value is 0, then the

Write Enable to the memory module is set to 1 and the enable bit in the data frame

is set to 0.

• In the concatenation module, the data frame is rebuilt and forwarded to the

demultiplexer.

• At the demultiplexer, the Enable bit in the data frame is used as the select line

input. When the bit is 0, the write data is forwarded to the write data line of the

current stage's memory module; otherwise the write data is forwarded to the update

module on the next stage in the pipeline.
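
A minimal Python sketch of this behavior is given below. It is an illustration under stated assumptions rather than the implemented hardware. The function name update_module is hypothetical, the enable bit is assumed to be the most significant bit of the 12 bit frame with the stage number in the next 3 bits, and the stage number is forwarded unchanged because the description does not state how it is modified between stages.

```python
def update_module(write_data_in: int):
    """Return (write_enable, memory_write_data, forwarded_frame).

    memory_write_data is None when the frame is forwarded to the next stage,
    and forwarded_frame is None when the frame is routed to the local memory.
    """
    enable = (write_data_in >> 11) & 1
    stage_number = (write_data_in >> 8) & 0x7
    data = write_data_in & 0xFF

    write_enable = 0
    if stage_number == 0:
        write_enable = 1
        enable = 0

    # Concatenation module: rebuild the 12 bit frame.
    frame = (enable << 11) | (stage_number << 8) | data

    # Demultiplexer: the enable bit selects the destination.
    if enable == 0:
        return write_enable, data, None
    return write_enable, None, frame
```

With these assumptions, the frame 0x85A (enable 1, stage number 0, data 0x5A) results in a write of 0x5A at the current stage, while 0xA5A (stage number 2) is passed on to the next stage.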

4.2.3 SEARCH PROCESS IN STAGE 1

The first stage of the pipeline structure follows a search algorithm that is slightly different

from that followed by all the subsequent stages. This is a result of all the root nodes being

mapped to this stage whereby every IP lookup request will have to start at this stage and

flow forward. The first stage of the pipeline consists of the following units.

1. A shift register module used to left shift the incoming IP address by 4 bits.

2. A memory module that holds either the port number or the address and stage

number at which the child node exists.

3. A write block used for the update process whenever a node has to be deleted or

added depending on the variations in the network topology surrounding the router.

A block diagram representing stage 1 of the pipeline is presented in Figure 4.17.

Figure 4.17: Block diagram of stage 1 of pipeline

The search process at this stage is as follows.

• The 32 bit IP address and the 12 bit Write Data are extracted from the Packet_in

input.

• The 4 most significant bits of the IP address are concatenated with a 0 bit and

taken as input to the address field for the memory module.

• The Write Data input is checked in the write module to verify if any update has to

be made to the data in the memory module. If the Packet_in input contains update

information for the stage, then the Write Enable to the memory module is set to 1 and

the Write Data is forwarded to the memory module, where the update is made to

the memory location pointed to by the 5 bit address field input.

• If no updates are found, then the Packet_in data is a lookup request. The lookup

proceeds by accessing the corresponding memory location pointed to by the

Address input field, the data from which points to either the output Port Number

on the router or information about the next stage to be accessed to find the next

node in the trie.

• Finally, the Shift Register module left shifts the incoming IP address by 4 bits and

forwards it to the next stage in the pipeline.
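
The field extraction and address formation at stage 1 can be sketched as follows. The function names split_packet and stage1_address are hypothetical. The sketch assumes that the IP address occupies the upper 32 bits of Packet_in with the Write Data in the lowest 12 bits, and that the 0 bit is appended as the least significant bit of the 5 bit address; the bit order of the concatenation is not stated in the text.

```python
def split_packet(packet_in: int):
    """Split the 44 bit Packet_in into (ip_address, write_data)."""
    ip_address = (packet_in >> 12) & 0xFFFFFFFF
    write_data = packet_in & 0xFFF
    return ip_address, write_data


def stage1_address(ip_address: int) -> int:
    """Form the 5 bit stage 1 address from the 4 MSBs of the IP address."""
    top_nibble = (ip_address >> 28) & 0xF
    # Assumption: the constant 0 bit is the least significant address bit.
    return (top_nibble << 1) | 0
```

For the address 192.168.1.1 (0xC0A80101) the 4 most significant bits are 1100, so the memory location accessed under these assumptions is 11000.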

4.2.4 SEARCH PROCESS IN OTHER STAGES OF THE PIPELINE

The search process in the remaining stages of the pipeline has a few extra processes

involved due to Constraint 2 used for mapping the trie onto the pipeline stages. Here,

there is a need for extra logic to verify if the memory module at a certain stage has to be

accessed or if the data from the previous stage has to be forwarded to the next stages in

the pipeline where the next node information may be present.

The following blocks are included in these stages of the pipeline.

1. A shift register module used to left shift the incoming IP address from the

previous stage by 1 bit depending on the enable bit to the shift register. The enable

input here is the Enable bit of the Data_In packet arriving from the previous stage.

2. A memory module that holds either the port number or the address and stage

number at which the child node exists.

3. A Node Distance Check module that is used to determine which stage in the

pipeline the incoming data is intended for.

4. A write block used for the update process whenever a node has to be deleted or

added depending on the variations in the network topology surrounding the router.

A block diagram representing this module of the pipeline is presented in Figure 4.17a.

Figure 4.17a: Block diagram of the stage module of a pipeline

The search process at this stage is as follows.

• The shifted IP address from the previous module is passed into the Shift Register

Module. The shift module at these stages is modeled to shift the IP address by 1

bit to the left. This module has an Enable input, which is bit 3 of the Data_In frame

from the previous stage. Depending on the value of this bit, the shift operation

will either be enabled or disabled. This, again, is a requirement to satisfy

constraint 2 of the node mapping process, where certain stages in the pipeline

may have to be skipped. When this happens, the IP address should not be

shifted, so that the correctness of the lookup is maintained.

• The Data_In packet from the previous stage is sent as input to the Memory

module and the Node Distance Check module.

• At the Node Distance Check module, the stage number in the Data_In frame is

checked and the value of the Enable bit is altered depending on whether the stage

number is 0 or not.

• In the Write module, the Write Data input from the previous stage is checked to

see if the memory access at this stage is for a node lookup or for an update

operation. Depending on the data present in the Write Data, the Write Enable line

to the memory block is either set to 1 or left unchanged at 0.

• At the Memory module, if the data in the Data_In frame is intended for the

present stage, the 4 most significant bits of the Data_In frame are extracted and then

concatenated with the MSB of the shifted IP address from the Shift Register

module. This 5 bit address is then used to access the memory location to find the

details corresponding to the node being searched for or to update data at that

location in memory.

• The registers at the outputs of each of the modules are used to synchronize the

outputs from every module.

• The outputs from the Memory module and the Node Distance Check module are

then connected to the inputs of a 2:1 multiplexer. The select line of this

multiplexer is the negated value of the Enable bit contained in the Data_In input

frame. Depending on this Enable bit, either the data frame from the Memory

module or the Node Distance Check module is sent as the Data Out output to the