PeachPy: A Python Framework for Developing High-Performance Assembly Kernels Marat Dukhan

advertisement

PeachPy: A Python Framework for Developing

High-Performance Assembly Kernels

Marat Dukhan

School of Computational Science and Engineering

College of Computing

Georgia Institute of Technology

Presentation on PyHPC 2013

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

1 / 43

Outline

1

Motivation

2

PeachPy Foundations

3

PeachPy Basics

4

Assembly Programming Automation

5

Metaprogramming

6

Instruction Streams

7

Experimental Validation

8

Conclusion

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

2 / 43

The Problem

dot_product:

XORPD xmm0, xmm0

XORPD xmm1, xmm1

XORPD xmm2, xmm2

SUB r8, 6

JAE .restore

align 16

.batch:

MOVUPD xmm3, [rcx]

MOVUPD xmm7, [rdx]

MULPD xmm3, xmm7

ADDPD xmm0, xmm3

MOVUPD xmm4, [rcx +

MOVUPD xmm7, [rdx +

MULPD xmm4, xmm7

ADDPD xmm1, xmm4

MOVUPD xmm5, [rcx +

MOVUPD xmm7, [rdx +

MULPD xmm5, xmm7

ADDPD xmm2, xmm5

ADD rcx, 48

ADD rdx, 48

SUB r8, 6

JAE .batch

.restore:

ADD r8, 6

JZ .reduce

.remainder:

MOVSD xmm3, [rcx]

MOVSD xmm4, [rdx]

MULSD xmm3, xmm4

ADDSD xmm0, xmm3

ADD rcx, 8

ADD rdx, 8

SUB r8, 1

JNZ .remainder

.reduce:

ADDPD xmm0, xmm1

ADDPD xmm0, xmm2

HADDPD xmm0, xmm0

RET

Marat Dukhan (Georgia Tech)

PeachPy

16]

16]

32]

32]

PyHPC 2013

3 / 43

The Problem

dot_product:

XORPD xmm0, xmm0

XORPD xmm1, xmm1

XORPD xmm2, xmm2

SUB r8, 6

JAE .restore

align 16

.batch:

MOVUPD xmm3, [rcx]

MOVUPD xmm7, [rdx]

MULPD xmm3, xmm7

ADDPD xmm0, xmm3

MOVUPD xmm4, [rcx +

MOVUPD xmm7, [rdx +

MULPD xmm4, xmm7

ADDPD xmm1, xmm4

MOVUPD xmm5, [rcx +

MOVUPD xmm7, [rdx +

MULPD xmm5, xmm7

ADDPD xmm2, xmm5

ADD rcx, 48

ADD rdx, 48

SUB r8, 6

JAE .batch

.restore:

ADD r8, 6

JZ .reduce

.remainder:

MOVSD xmm3, [rcx]

MOVSD xmm4, [rdx]

MULSD xmm3, xmm4

ADDSD xmm0, xmm3

ADD rcx, 8

ADD rdx, 8

SUB r8, 1

JNZ .remainder

.reduce:

ADDPD xmm0, xmm1

ADDPD xmm0, xmm2

HADDPD xmm0, xmm0

RET

Marat Dukhan (Georgia Tech)

dot_product:

XORPS xmm0, xmm0

XORPS xmm1, xmm1

XORPS xmm2, xmm2

SUB r8, 12

JAE .restore

align 16

.batch:

MOVUPS xmm3, [rcx]

MOVUPS xmm7, [rdx]

MULPS xmm3, xmm7

ADDPS xmm0, xmm3

MOVUPS xmm4, [rcx +

MOVUPS xmm7, [rdx +

MULPS xmm4, xmm7

ADDPS xmm1, xmm4

MOVUPS xmm5, [rcx +

MOVUPS xmm7, [rdx +

MULPS xmm5, xmm7

ADDPS xmm2, xmm5

ADD rcx, 48

ADD rdx, 48

SUB r8, 12

JAE .batch

.restore:

ADD r8, 12

JZ .reduce

.remainder:

MOVSS xmm3, [rcx]

MOVSS xmm4, [rdx]

MULSS xmm3, xmm4

ADDSS xmm0, xmm3

ADD rcx, 4

ADD rdx, 4

SUB r8, 1

JNZ .remainder

.reduce:

ADDPS xmm0, xmm1

ADDPS xmm0, xmm2

HADDPS xmm0, xmm0

HADDPS xmm0, xmm0

RET

16]

16]

32]

32]

PeachPy

16]

16]

32]

32]

PyHPC 2013

4 / 43

The Problem

dot_product:

XORPD xmm0, xmm0

XORPD xmm1, xmm1

XORPD xmm2, xmm2

SUB r8, 6

JAE .restore

align 16

.batch:

MOVUPD xmm3, [rcx]

MOVUPD xmm7, [rdx]

MULPD xmm3, xmm7

ADDPD xmm0, xmm3

MOVUPD xmm4, [rcx +

MOVUPD xmm7, [rdx +

MULPD xmm4, xmm7

ADDPD xmm1, xmm4

MOVUPD xmm5, [rcx +

MOVUPD xmm7, [rdx +

MULPD xmm5, xmm7

ADDPD xmm2, xmm5

ADD rcx, 48

ADD rdx, 48

SUB r8, 6

JAE .batch

.restore:

ADD r8, 6

JZ .reduce

.remainder:

MOVSD xmm3, [rcx]

MOVSD xmm4, [rdx]

MULSD xmm3, xmm4

ADDSD xmm0, xmm3

ADD rcx, 8

ADD rdx, 8

SUB r8, 1

JNZ .remainder

.reduce:

ADDPD xmm0, xmm1

ADDPD xmm0, xmm2

HADDPD xmm0, xmm0

RET

16]

16]

32]

32]

dot_product:

XORPS xmm0, xmm0

XORPS xmm1, xmm1

XORPS xmm2, xmm2

SUB r8, 12

JAE .restore

align 16

.batch:

MOVUPS xmm3, [rcx]

MOVUPS xmm7, [rdx]

MULPS xmm3, xmm7

ADDPS xmm0, xmm3

MOVUPS xmm4, [rcx +

MOVUPS xmm7, [rdx +

MULPS xmm4, xmm7

ADDPS xmm1, xmm4

MOVUPS xmm5, [rcx +

MOVUPS xmm7, [rdx +

MULPS xmm5, xmm7

ADDPS xmm2, xmm5

ADD rcx, 48

ADD rdx, 48

SUB r8, 12

JAE .batch

.restore:

ADD r8, 12

JZ .reduce

.remainder:

MOVSS xmm3, [rcx]

MOVSS xmm4, [rdx]

MULSS xmm3, xmm4

ADDSS xmm0, xmm3

ADD rcx, 4

ADD rdx, 4

SUB r8, 1

JNZ .remainder

.reduce:

ADDPS xmm0, xmm1

ADDPS xmm0, xmm2

HADDPS xmm0, xmm0

HADDPS xmm0, xmm0

RET

Marat Dukhan (Georgia Tech)

16]

16]

32]

32]

dot_product:

XORPD xmm0, xmm0

XORPD xmm1, xmm1

XORPD xmm2, xmm2

SUB rdx, 6

JAE .restore

align 16

.batch:

MOVUPD xmm3, [rdi]

MOVUPD xmm7, [rsi]

MULPD xmm3, xmm7

ADDPD xmm0, xmm3

MOVUPD xmm4, [rdi +

MOVUPD xmm7, [rsi +

MULPD xmm4, xmm7

ADDPD xmm1, xmm4

MOVUPD xmm5, [rdi +

MOVUPD xmm7, [rsi +

MULPD xmm5, xmm7

ADDPD xmm2, xmm5

ADD rdi, 48

ADD rsi, 48

SUB rdx, 6

JAE .batch

.restore:

ADD rdx, 6

JZ .reduce

.remainder:

MOVSD xmm3, [rdi]

MOVSD xmm4, [rsi]

MULSD xmm3, xmm4

ADDSD xmm0, xmm3

ADD rdi, 8

ADD rsi, 8

SUB rdx, 1

JNZ .remainder

.reduce:

ADDPD xmm0, xmm1

ADDPD xmm0, xmm2

HADDPD xmm0, xmm0

RET

PeachPy

16]

16]

32]

32]

dot_product:

XORPS xmm0, xmm0

XORPS xmm1, xmm1

XORPS xmm2, xmm2

SUB rdx, 12

JAE .restore

align 16

.batch:

MOVUPS xmm3, [rdi]

MOVUPS xmm7, [rsi]

MULPS xmm3, xmm7

ADDPS xmm0, xmm3

MOVUPS xmm4, [rdi +

MOVUPS xmm7, [rsi +

MULPS xmm4, xmm7

ADDPS xmm1, xmm4

MOVUPS xmm5, [rdi +

MOVUPS xmm7, [rsi +

MULPS xmm5, xmm7

ADDPS xmm2, xmm5

ADD rdi, 48

ADD rsi, 48

SUB rdx, 12

JAE .batch

.restore:

ADD rdx, 12

JZ .reduce

.remainder:

MOVSS xmm3, [rdi]

MOVSS xmm4, [rsi]

MULSS xmm3, xmm4

ADDSS xmm0, xmm3

ADD rdi, 4

ADD rsi, 4

SUB rdx, 1

JNZ .remainder

.reduce:

ADDPS xmm0, xmm1

ADDPS xmm0, xmm2

HADDPS xmm0, xmm0

HADDPS xmm0, xmm0

RET

PyHPC 2013

16]

16]

32]

32]

5 / 43

The Problem

dot_product:

XORPD xmm0, xmm0

XORPD xmm1, xmm1

XORPD xmm2, xmm2

SUB r8, 6

JAE .restore

align 16

.batch:

MOVUPD xmm3, [rcx]

MOVUPD xmm7, [rdx]

MULPD xmm3, xmm7

ADDPD xmm0, xmm3

MOVUPD xmm4, [rcx +

MOVUPD xmm7, [rdx +

MULPD xmm4, xmm7

ADDPD xmm1, xmm4

MOVUPD xmm5, [rcx +

MOVUPD xmm7, [rdx +

MULPD xmm5, xmm7

ADDPD xmm2, xmm5

ADD rcx, 48

ADD rdx, 48

SUB r8, 6

JAE .batch

.restore:

ADD r8, 6

JZ .reduce

.remainder:

MOVSD xmm3, [rcx]

MOVSD xmm4, [rdx]

MULSD xmm3, xmm4

ADDSD xmm0, xmm3

ADD rcx, 8

ADD rdx, 8

SUB r8, 1

JNZ .remainder

.reduce:

ADDPD xmm0, xmm1

ADDPD xmm0, xmm2

HADDPD xmm0, xmm0

RET

16]

16]

32]

32]

dot_product:

VXORPD ymm0, ymm0

VXORPD ymm1, ymm1

VXORPD ymm2, ymm2

SUB r8, 12

JAE .restore

align 16

.batch:

VMOVUPD ymm3, [rcx]

VMOVUPD ymm7, [rdx]

VMULPD ymm3, ymm7

VADDPD ymm0, ymm3

VMOVUPD ymm4, [rcx + 32]

VMOVUPD ymm7, [rdx + 32]

VMULPD ymm4, ymm7

VADDPD ymm1, ymm4

VMOVUPD ymm5, [rcx + 64]

VMOVUPD ymm7, [rdx + 64]

VMULPD ymm5, ymm7

VADDPD ymm2, ymm5

ADD rcx, 96

ADD rdx, 96

SUB r8, 12

JAE .batch

.restore:

ADD r8, 12

JZ .reduce

.remainder:

VMOVSD xmm3, [rcx]

VMOVSD xmm4, [rdx]

VMULSD xmm3, xmm4

VADDSD ymm0, ymm3

ADD rcx, 8

ADD rdx, 8

SUB r8, 1

JNZ .remainder

.reduce:

VADDPD ymm0, ymm1

VADDPD ymm0, ymm2

VEXTRACTF128 xmm1, ymm0, 1

VADDPD xmm0, xmm1

VHADDPD xmm0, xmm0

RET

Marat Dukhan (Georgia Tech)

dot_product:

XORPS xmm0, xmm0

XORPS xmm1, xmm1

XORPS xmm2, xmm2

SUB r8, 12

JAE .restore

align 16

.batch:

MOVUPS xmm3, [rcx]

MOVUPS xmm7, [rdx]

MULPS xmm3, xmm7

ADDPS xmm0, xmm3

MOVUPS xmm4, [rcx + 16]

MOVUPS xmm7, [rdx + 16]

MULPS xmm4, xmm7

ADDPS xmm1, xmm4

MOVUPS xmm5, [rcx + 32]

MOVUPS xmm7, [rdx + 32]

MULPS xmm5, xmm7

ADDPS xmm2, xmm5

ADD rcx, 48

ADD rdx, 48

SUB r8, 12

JAE .batch

.restore:

ADD r8, 12

JZ .reduce

.remainder:

MOVSS xmm3, [rcx]

MOVSS xmm4, [rdx]

MULSS xmm3, xmm4

ADDSS xmm0, xmm3

ADD rcx, 4

ADD rdx, 4

SUB r8, 1

JNZ .remainder

.reduce:

ADDPS xmm0, xmm1

ADDPS xmm0, xmm2

HADDPS xmm0, xmm0

HADDPS xmm0, xmm0

RET

dot_product:

XORPD xmm0, xmm0

XORPD xmm1, xmm1

XORPD xmm2, xmm2

SUB rdx, 6

JAE .restore

align 16

.batch:

MOVUPD xmm3, [rdi]

MOVUPD xmm7, [rsi]

MULPD xmm3, xmm7

ADDPD xmm0, xmm3

MOVUPD xmm4, [rdi +

MOVUPD xmm7, [rsi +

MULPD xmm4, xmm7

ADDPD xmm1, xmm4

MOVUPD xmm5, [rdi +

MOVUPD xmm7, [rsi +

MULPD xmm5, xmm7

ADDPD xmm2, xmm5

ADD rdi, 48

ADD rsi, 48

SUB rdx, 6

JAE .batch

.restore:

ADD rdx, 6

JZ .reduce

.remainder:

MOVSD xmm3, [rdi]

MOVSD xmm4, [rsi]

MULSD xmm3, xmm4

ADDSD xmm0, xmm3

ADD rdi, 8

ADD rsi, 8

SUB rdx, 1

JNZ .remainder

.reduce:

ADDPD xmm0, xmm1

ADDPD xmm0, xmm2

HADDPD xmm0, xmm0

RET

dot_product:

VXORPS ymm0, ymm0

VXORPS ymm1, ymm1

VXORPS ymm2, ymm2

SUB r8, 24

JAE .restore

align 16

.batch:

VMOVUPS ymm3, [rcx]

VMOVUPS ymm7, [rdx]

VMULPS ymm3, ymm7

VADDPS ymm0, ymm3

VMOVUPS ymm4, [rcx + 32]

VMOVUPS ymm7, [rdx + 32]

VMULPS ymm4, ymm7

VADDPS ymm1, ymm4

VMOVUPS ymm5, [rcx + 64]

VMOVUPS ymm7, [rdx + 64]

VMULPS ymm5, ymm7

VADDPS ymm2, ymm5

ADD rcx, 96

ADD rdx, 96

SUB r8, 24

JAE .batch

.restore:

ADD r8, 24

JZ .reduce

.remainder:

VMOVSS xmm3, [rcx]

VMOVSS xmm4, [rdx]

VMULSS xmm3, xmm4

VADDSS ymm0, ymm3

ADD rcx, 4

ADD rdx, 4

SUB r8, 1

JNZ .remainder

.reduce:

VADDPS ymm0, ymm1

VADDPS ymm0, ymm2

VEXTRACTF128 xmm1, ymm0, 1

VADDPS xmm0, xmm1

VHADDPS xmm0, xmm0

VHADDPS xmm0, xmm0

RET

dot_product:

VXORPD ymm0, ymm0

VXORPD ymm1, ymm1

VXORPD ymm2, ymm2

SUB rdx, 12

JAE .restore

align 16

.batch:

VMOVUPD ymm3, [rdi]

VMOVUPD ymm7, [rsi]

VMULPD ymm3, ymm7

VADDPD ymm0, ymm3

VMOVUPD ymm4, [rdi + 32]

VMOVUPD ymm7, [rsi + 32]

VMULPD ymm4, ymm7

VADDPD ymm1, ymm4

VMOVUPD ymm5, [rdi + 64]

VMOVUPD ymm7, [rsi + 64]

VMULPD ymm5, ymm7

VADDPD ymm2, ymm5

ADD rdi, 96

ADD rsi, 96

SUB rdx, 12

JAE .batch

.restore:

ADD rdx, 12

JZ .reduce

.remainder:

VMOVSD xmm3, [rdi]

VMOVSD xmm4, [rsi]

VMULSD xmm3, xmm4

VADDSD ymm0, ymm3

ADD rdi, 8

ADD rsi, 8

SUB rdx, 1

JNZ .remainder

.reduce:

VADDPD ymm0, ymm1

VADDPD ymm0, ymm2

VEXTRACTF128 xmm1, ymm0, 1

VADDPD xmm0, xmm1

VHADDPD xmm0, xmm0

RET

PeachPy

16]

16]

32]

32]

dot_product:

XORPS xmm0, xmm0

XORPS xmm1, xmm1

XORPS xmm2, xmm2

SUB rdx, 12

JAE .restore

align 16

.batch:

MOVUPS xmm3, [rdi]

MOVUPS xmm7, [rsi]

MULPS xmm3, xmm7

ADDPS xmm0, xmm3

MOVUPS xmm4, [rdi + 16]

MOVUPS xmm7, [rsi + 16]

MULPS xmm4, xmm7

ADDPS xmm1, xmm4

MOVUPS xmm5, [rdi + 32]

MOVUPS xmm7, [rsi + 32]

MULPS xmm5, xmm7

ADDPS xmm2, xmm5

ADD rdi, 48

ADD rsi, 48

SUB rdx, 12

JAE .batch

.restore:

ADD rdx, 12

JZ .reduce

.remainder:

MOVSS xmm3, [rdi]

MOVSS xmm4, [rsi]

MULSS xmm3, xmm4

ADDSS xmm0, xmm3

ADD rdi, 4

ADD rsi, 4

SUB rdx, 1

JNZ .remainder

.reduce:

ADDPS xmm0, xmm1

ADDPS xmm0, xmm2

HADDPS xmm0, xmm0

HADDPS xmm0, xmm0

RET

dot_product:

VXORPS ymm0, ymm0

VXORPS ymm1, ymm1

VXORPS ymm2, ymm2

SUB rdx, 24

JAE .restore

align 16

.batch:

VMOVUPS ymm3, [rdi]

VMOVUPS ymm7, [rsi]

VMULPS ymm3, ymm7

VADDPS ymm0, ymm3

VMOVUPS ymm4, [rdi + 32]

VMOVUPS ymm7, [rsi + 32]

VMULPS ymm4, ymm7

VADDPS ymm1, ymm4

VMOVUPS ymm5, [rdi + 64]

VMOVUPS ymm7, [rsi + 64]

VMULPS ymm5, ymm7

VADDPS ymm2, ymm5

ADD rdi, 96

ADD rsi, 96

SUB rdx, 24

JAE .batch

.restore:

ADD rdx, 24

JZ .reduce

.remainder:

VMOVSS xmm3, [rdi]

VMOVSS xmm4, [rsi]

VMULSS xmm3, xmm4

VADDSS ymm0, ymm3

ADD rdi, 4

ADD rsi, 4

SUB rdx, 1

JNZ .remainder

.reduce:

VADDPS ymm0, ymm1

VADDPS ymm0, ymm2

VEXTRACTF128 xmm1, ymm0, 1

VADDPS xmm0, xmm1

VHADDPS xmm0, xmm0

VHADDPS xmm0, xmm0

RET

PyHPC 2013

6 / 43

The Research Problem

This reasearch is about the problem of generating multiple similar assembly

kernels:

Kernels which perform similar operations

I

E.g. vector addition/subtraction

Kernels which do same operation on dierent data types

I

E.g. single- and double-precision dot product

Kernels which target dierent microarchitectures or ISA

I

E.g. dot product for AVX, FMA4, FMA3

Kernels which use dierent ABIs

I

E.g. x86-64 on Windows and Linux

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

7 / 43

Outline

1

Motivation

2

PeachPy Foundations

3

PeachPy Basics

4

Assembly Programming Automation

5

Metaprogramming

6

Instruction Streams

7

Experimental Validation

8

Conclusion

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

8 / 43

Assembly Compilation Process

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

9 / 43

Assembly Compilation Process

Lets replace macro processor with something

More exible

More standardized

More popular

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

10 / 43

Assembly Compilation Process

Lets replace macro processor with something

More exible

More standardized

More popular

Python!

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

11 / 43

Introducing PeachPy

PeachPy is. . .

. . . an automation and metaprogramming tool for assembly

programming

. . . an Assembly-like DSL: PeachPy user is exposed to the same

low-level details as assembly programmer

. . . a Python framework: any PeachPy code is a valid Python

code

PeachPy is not. . .

. . . a compiler: PeachPy does not oer high-level programming

abstractions

. . . an assembler: PeachPy does not generate machine code

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

12 / 43

PeachPy Philosophy

PeachPy is for writing high-performance codes

I

I

I

No support for invoke, OOP, and other "high-level assembly"

No kernel-mode instructions

No system instructions

PeachPy is for writing assembly codes

I

Not a replacement for high-level compiler

All optimizations possible to do in assembly should be possible to do

in PeachPy

Everything that can be automated in assembly programming should be

automated

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

13 / 43

Outline

1

Motivation

2

PeachPy Foundations

3

PeachPy Basics

4

Assembly Programming Automation

5

Metaprogramming

6

Instruction Streams

7

Experimental Validation

8

Conclusion

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

14 / 43

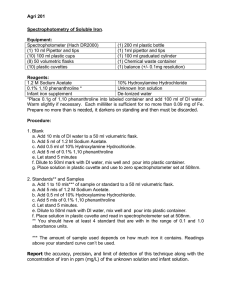

Minimal PeachPy Function

from peachpy.x64 import *

abi = peachpy.c.ABI('x64-sysv')

assembler = Assembler(abi)

x_argument = peachpy.c.Parameter("x",

peachpy.c.Type("uint32_t"))

arguments = (x_argument,)

function_name = "f"

microachitecture = "SandyBridge"

with Function(assembler, function_name,

arguments, microarchitecture):

RETURN()

print assembler

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

15 / 43

Modules

PeachPy functionality is concentrated in three Python modules:

peachpy.c for C compatilibity classes (C types and ABIs)

peachpy.x64 for x86-64 assembly classes

peachpy.arm for ARM assembly classes

Assembly modules are intended to be imported into program workspace:

# This will make the syntax of PeachPy codes

# very similar to native assembly

from peachpy.x64 import *

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

16 / 43

Assembler and Function

Assembler

I

I

I

Container for functions

Contains only functions with specied ABI

Normally may be saved as assembly le to disk

Function

I

I

Created using with syntax: with Function(...):

Creates an active instruction stream

Microarchitecture

I

I

String parameter for Function constructor

Restricts the set of supported instructions

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

17 / 43

Instructions

All instructions are named in uppercase

All instructions are python objects

When an instruction is called (as Python object), it is generated and

added to the active PeachPy function (as assembly instruction)

PeachPy veries the correctness of instruction operands

Most computational x86-64 and many ARM instructions are supported

Traditional Assembly

PeachPy

.loop:

ADDPD xmm0, [rsi]

ADD rsi, 16

SUB rcx, 2

JAE .loop

LABEL( "loop" )

ADDPD( xmm0, [rsi] )

ADD( rsi, 16 )

SUB( rcx, 2 )

JAE( "loop" )

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

18 / 43

Registers

PeachPy maps dierent types of architectural registers on Python classes

x86 register classes:

I

I

I

I

I

I

I

I

GeneralPurposeRegister (base class)

GeneralPurposeRegister8

GeneralPurposeRegister16

GeneralPurposeRegister32

GeneralPurposeRegister64

MMXRegister

SSERegister

AVXRegister

ARM register classes:

I

I

I

I

GeneralPurposeRegister

SRegister

DRegister

QRegister

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

19 / 43

Registers

Architectural registers are represented in PeachPy as Python objects

All register names are in lowercase

Traditional x86-64 Assembly

PeachPy

MOVZX rax, al

PADD mm0, mm1

ADDPS xmm0, xmm1

VMULPD ymm0, ymm1, ymm2

MOVSX( rax, al )

PADD( mm0, mm1 )

ADDPS( xmm0, xmm1 )

VMULPD( ymm0, ymm1, ymm2 )

Traditional ARM Assembly

PeachPy

ADD r0, r0, r1

VLD1.32 {d0[]}, [r2]

VFMA.F32 q2, q1, q1

ADD( r0, r0, r1 )

VLD1.F32( (d0[:],), [r2] )

VFMA.F32( q2, q1, q1 )

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

20 / 43

Outline

1

Motivation

2

PeachPy Foundations

3

PeachPy Basics

4

Assembly Programming Automation

5

Metaprogramming

6

Instruction Streams

7

Experimental Validation

8

Conclusion

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

21 / 43

Register Allocation

Traditional x86-64 Assembly

VMOVAPD ymm0, [rsi]

VMOVAPD ymm1, ymm0

VFMADD132PD ymm1, ymm13, ymm12

VFMADD231PD ymm0, ymm1, ymm14

VFMADD231PD ymm0, ymm1, ymm15

PeachPy

ymm_x = AVXRegister()

VMOVAPD( ymm_x, [xPointer]

ymm_t = AVXRegister()

VMOVAPD( ymm_t, ymm_x )

VFMADD132PD( ymm_t, ymm_t,

VFMADD231PD( ymm_x, ymm_t,

VFMADD231PD( ymm_x, ymm_t,

Marat Dukhan (Georgia Tech)

)

ymm_log2e, ymm_magic_bias)

ymm_minus_ln2_hi, ymm_x )

ymm_minus_ln2_lo, ymm_x )

PeachPy

PyHPC 2013

22 / 43

In-place Memory Constant Declarations

Traditional x86-64 Assembly

Right here:

section .rdata rdata

c0 dq 3.141592, 3.141592

In a galaxy far far away:

section .text code

MULPD xmm0, [c0]

PeachPy

MULPD( xmm_x, Constant.float64x2(3.141592) )

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

23 / 43

Hexadecimal Floating-Point Constants

Hexadecimal oating-point constants provide an accurate and portable way

to specify oating-point constants and without rounding errors

Required in C99 standard

Supported by gcc, clang, icc, xlc, and NASM

But not supported by GNU Assembler

PeachPy lets programmers use hexadecimal oating-point constants on all

supported platforms

C99

const double ln2 = 0x1.71547652B82FEp+0;

ARM Assembly (GNU)

x86-64 Assembly (NASM)

ln2: .quad 0x3FF71547652B82FE

ln2 dq 0x1.71547652B82FEp+0

PeachPy (x86-64 and ARM)

ln2 = Constant.float64('0x1.71547652B82FEp+0')

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

24 / 43

Calling conventions

The Problem

Consider Assembly implementations for the C function

uint64_t add(uint64_t x, uint64_t y) {

return x + y;

}

Assembly for Microsoft x86-64 calling convention

add:

LEA rax, [rcx + rdx * 1]

RET

Assembly for System V x86-64 calling convention

add:

LEA rax, [rdi + rsi * 1]

RET

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

25 / 43

Calling conventions

PeachPy Approach

PeachPy code

from peachpy.x64 import *

asm = Assembler(peachpy.c.ABI("x64-ms")) # or "x64-sysv"

x_arg = peachpy.c.Parameter("x",

peachpy.c.Type("uint64_t"))

y_arg = peachpy.c.Parameter("y",

peachpy.c.Type("uint64_t"))

with Function(asm, "add", (x_arg, y_arg), "Bobcat"):

x = GeneralPurposeRegister64()

LOAD.PARAMETER( x, x_arg ) # Does the magic!

y = GeneralPurposeRegister64()

LOAD.PARAMETER( y, y_arg ) # Does the magic!

LEA( rax, [x + y * 1] )

RETURN()

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

26 / 43

ISA-based runtime dispatching

PeachPy known the instruction set of each instruction

PeachPy also collects ISA information about each function

This helps to do ne-grained runtime dispatching

I

More ecient vs recompiling the function for each ISA with high-level

compiler

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

27 / 43

Outline

1

Motivation

2

PeachPy Foundations

3

PeachPy Basics

4

Assembly Programming Automation

5

Metaprogramming

6

Instruction Streams

7

Experimental Validation

8

Conclusion

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

28 / 43

Parametrized Unroll

with Function(asm, "dot_product", args, "SandyBridge"):

xPointer, yPointer, zPointer, length = LOAD.PARAMETERS()

reg_size = 32

reg_elements = 8

unroll_regs = 8

acc = [AVXRegister() for _ in range(unroll_regs)]

temp = [AVXRegister() for _ in range(unroll_regs)]

...

LABEL( "process_batch" )

for i in range(unroll_regs):

VMOVAPS( temp[i], [xPointer + i * reg_size] )

VMULPS( temp[i], [yPointer + i * reg_size] )

VADDPS( acc[i], temp[i] )

ADD( xPointer, reg_size * unroll_regs )

ADD( yPointer, reg_size * unroll_regs )

SUB( length, reg_elements * unroll_regs )

JAE( "process_batch" )

...

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

29 / 43

Parametrization by Element Type

reg_size = 32

reg_elements = reg_size / element_size

unroll_regs = 8

SIMD_LOAD = {4: VMOVAPS, 8: VMOVAPD}[element_size]

SIMD_MUL = {4: VMULPS, 8: VMULPD}[element_size]

SIMD_ADD = {4: VADDPS, 8: VADDPD}[element_size]

...

LABEL( "process_batch" )

for i in range(unroll_regs):

SIMD_LOAD( temp[i], [xPointer + i * reg_size] )

SIMD_MUL( temp[i], [yPointer + i * reg_size] )

SIMD_ADD( acc[i], temp[i] )

ADD( xPointer, reg_size * unroll_regs )

ADD( yPointer, reg_size * unroll_regs )

SUB( length, reg_elements * unroll_regs )

JAE( "process_batch" )

...

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

30 / 43

Supporting Multiple Instruction Sets

if Target.has_fma():

VMLAPS = VFMADDPS if Target.has_fma4() else VFMADD231PS

else:

def VMLAPS(x, a, b, c):

t = AVXRegister()

VMULPS( t, a, b )

VADDPS( x, t, c )

...

LABEL( "processBatch" )

for i in range(unroll_regs):

VMOVAPS( temp[i], [xPointer + i * reg_size] )

VMLAPS( acc[i], temp[i], [yPointer + i * reg_size], acc[i] )

ADD( xPointer, reg_size * unroll_regs )

ADD( yPointer, reg_size * unroll_regs )

SUB( length, reg_elements * unroll_regs )

JAE( "processBatch" )

...

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

31 / 43

Outline

1

Motivation

2

PeachPy Foundations

3

PeachPy Basics

4

Assembly Programming Automation

5

Metaprogramming

6

Instruction Streams

7

Experimental Validation

8

Conclusion

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

32 / 43

Introduction

Usually we want assembly instructions to appear in the same order as we

write them.

To increase IPC it is useful to interleave two parts of code using

dierent types of instructions. However, it might be convenient to

write the code of those parts separately.

I

I

ARM Cortex-A9 can decode one SIMD instruction and one scalar

instruction per cycle. By interleaving SIMD and scalar processing we

can achieve higher performance.

On x86 we may use scalar instructions to detect special cases while

SIMD units are busy doing calculations.

For software pipelining we may want the skew the sequences of similar

instructions relative to each other.

I

But we don't want to skew our code

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

33 / 43

Instruction Stream Objects

The Python with statement can be used to redirect generated instructions

to an InstructionStream object.

scalar_stream = InstructionStream()

with scalar_stream:

x = GeneralPurposeRegister64()

MOV( x, [xPointer] )

CMP.JA( x, threshold, "above_threshold" )

vector_stream = InstructionStream()

with vector_stream:

...

Instructions from instruction stream can then be re-issued to current

instruction stream:

while scalar_stream or vector_stream:

scalar_stream.issue()

vector_stream.issue()

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

34 / 43

Software Pipelining

Instruction streams are useful for implementing software pipelining

instruction_columns = [InstructionStream(),

InstructionStream(), InstructionStream()]

for i in range(unroll_regs):

with instruction_columns[0]:

VMOVDQU( ymm_x[i], [xPointer + i * reg_size] )

with instruction_columns[1]:

VPADDD( ymm_x[i], ymm_y )

with instruction_columns[2]:

VMOVDQU( [zPointer + i * reg_size], ymm_x[i] )

with instruction_columns[0]:

ADD( xPointer, reg_size * unroll_regs )

with instruction_columns[2]:

ADD( zPointer, reg_size * unroll_regs )

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

35 / 43

Outline

1

Motivation

2

PeachPy Foundations

3

PeachPy Basics

4

Assembly Programming Automation

5

Metaprogramming

6

Instruction Streams

7

Experimental Validation

8

Conclusion

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

36 / 43

Is It Worth The Eort?

Albeit PeachPy simplies develiping assembly kernels, PeachPy is still

assembly, with all its drawbacks.

For many HPC scientists C code with compiler intrinsics is a viable

alternative to writing assembly:

C code with intrinsics is more portable with assembly

Many of the problems targeted by PeachPy become irrelevant (e.g.

calling convention)

Compiler could take into account more processors details than humans

We did a simple experiment to check if PeachPy (and assebly in general)

can deliver better performance than optimizing compilers.

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

37 / 43

Experimental Setup

For the experiment we used branchless versions of vector logarithm and

exponential functions from Yeppp! library. These are high-performance

implementation originally developed and tuned using Peach-Py.

We converted the assembly instructions one-to-one to C++ intrinsics and

compiled with modern C++ compilers. The C++ code is a nearly ideal

input for a compiler:

Code is already vectorized with intrinsics.

Each function processes 40 elements and has only one branch.

The only parts left to the compilers are register allocation and

instruction scheduling.

Initial instruction schedulling is close to optimal.

A lot of room for improving instruction scheduling: the original version

contains 581 instructions for log function and 400 instructions for exp.

The produced codes are benchmarked on Intel Core i7-4770K processor

with the recent Haswell microarchitecture.

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

38 / 43

Benchmarking Results

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

39 / 43

Outline

1

Motivation

2

PeachPy Foundations

3

PeachPy Basics

4

Assembly Programming Automation

5

Metaprogramming

6

Instruction Streams

7

Experimental Validation

8

Conclusion

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

40 / 43

Plans and Goals

The current priorities for PeachPy are

Support for PowerPC (including Blue Gene/Q) and Xeon Phi

architectures

Distribute PeachPy via Python Package Index

Enable generation of machine code directly from PeachPy

Provide additional features for x86-64 and ARM architectures (e.g.

table lookups)

ARM64 and x86-32 ports

In the long term we hope that PeachPy will replace conventional assembly

in HPC workow.

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

41 / 43

Public Availability

PeachPy repository is hosted on bitbucket.org/MDukhan/peachpy

The primary user of PeachPy is Yeppp! library (www.yeppp.info).

I

The codegen directory in Yeppp! source tree contains a large number

of Peach-Py codes.

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

42 / 43

Funding

This research was supported in part by

National Science Foundation

(NSF) under NSF CAREER

award number 0953100.

A grant from the Defense

Advanced Research Projects

Agency (DARPA) Computer

Science Study Group program.

Declaimer

Any opinions, conclusions or recommendations expressed in this presentation are

those of the authors and not necessarily reect those of NSF or DARPA.

Marat Dukhan (Georgia Tech)

PeachPy

PyHPC 2013

43 / 43