Toward Data Centered Tools for Understanding and Transforming Legacy Business Applications

advertisement

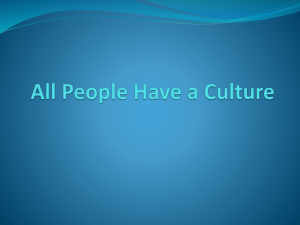

Toward Data Centered Tools for Understanding and Transforming Legacy Business Applications Satish Chandra Jackie de Vries John Field Raghavan Komondoor∗ Frans Nieuwerth Howard Hess Manivannan Kalidasan G. Ramalingam Justin Xue IBM Research Abstract We assert that tools for understanding and transforming legacy business applications should be built around logical data models, rather than the structure of source code artifacts or control flow. In this position paper, we argue that data centered tools are beneficial for a variety of frequently-occurring code understanding and transformation scenarios for legacy business applications, and outline the goals and status of the Mastery project at IBM Research, whose aim is to build a suite of logical model recovery tools, focusing initially on data models. Order key * 1 * 1 Order Financial Product Company Order key • product key • order number Info: • order amount • current status, … Product key • company code • product number Info: • price table, … Statistics: • previous day • month to date Company code * 1 Delete … 1 0..1 * Transaction Correct … New Order … ...... The Problem For the past two years, our group at IBM’s T.J. Watson Research Center has been investigating tools and techniques for analyzing and transforming legacy business applications, focusing on mainframe-based systems written in Cobol. Many such applications have evolved over the course of decades, and, although they often implement core business functionality, they are typically extremely difficult to update in a timely manner in response to new business requirements. Some of the impediments to legacy system evolution and transformation include: Size: Legacy application portfolios, i.e., complete collections of interdependent programs and persistent data stores, can be very large. For example, one IBM customer had a portfolio consisting of 700 interdependent applications, 3000 online datasets, 27000 batch jobs, and 31,000 compilation units. The sheer volume of information contained in an application of this size makes it impossible for an individual to understand the relationships between all parts of the application. Redundancy: Over time, applications frequently develop a great deal of redundant code and data. The reasons include lack of full integration of redundant software artifacts from business mergers, creation of redundant structures for performance reasons, or quick hacks coded by programmers adding new functionality under tight time constraints. Technology: The software structure of legacy code often reflects the limitations of the languages, systems, and middleware on which it was originally designed to run, long after improved systems and libraries become available. Skills: As new languages and systems software become popular, skills in legacy languages and systems atrophy. For all of the reasons listed above, there has been increasing interest in the use of automated and semi-automated tools to analyze and transform legacy code to allow evolving business requirements. ∗ Contact author: komondoo@us.ibm.com Figure 1: A high-level logical data model for a typical business application. Such tools include program understanding tools for extracting information from large code bases, tools for extracting semanticallyrelated code statements through techniques such as slicing, tools for migrating from one library or middleware base to another, tools for integrating legacy code with modern middleware, etc. 2 Importance of Data Centered Tools Our group has worked on a number of research topics related to various program analysis and transformation tools, including, e.g., language porting, slicing, and redundancy detection tools; however, we have come to the conclusion over time that there are unique benefits in having tools that are centered on understanding the data manipulated by programs. We have therefore embarked on a new project, called Mastery, which is concerned with extracting logical data models from legacy applications, linking these models in a semantically well-founded way back to their physical realizations in code (a physical model), and using these models and the links between them as the foundation for a variety of program understanding and transformation tools. Why do we believe that logical data models are critical for understanding and transforming legacy applications? Consider the UML-style model depicted in Fig. 1. This model describes key data structures and their interrelationships for a typical financial application. In this case, the application is a batch application that processes transactions pertaining to orders for financial products; the processing of a transaction results either in the creation of a new order for a product, or in the correction of an error in an existing unfulfilled order, or in the cancellation of an unfulfilled order, etc. 01 TRANSACTION-REC. 05 TRANS-DET-KEY .. 39 bytes .. 05 GENERAl-INFO. 10 REC-TYPE PIC X(3). 05 FILLER PIC X(154). 01 LOCAL-VARS. 05 ORDER-BUF .. 0..1 * Transaction Order key * Order Financial Product … Product key • company code • product number Info: • price table Statistics: • previous day • month to date 01 ORDER-REC. .. 01 NTR-TRANSACTION-REC. 05 NTR-KEY .. 39 bytes .. 05 .. other New Order fields .. * 01 PR1-PRODUCT-REC. 05 PRODUCT-KEY .. 18 bytes .. .. 05 PRICE-TABLE .. 01 PR2-PRODUCT-REC. 05 PRODUCT-KEY .. 18 bytes .. .. 05 PREVIOUS-DAY-SALES .. 05 MTD-SALES .. .. (perhaps historical) artifact is elided in the logical model, and both records are linked to a single “Financial Product” entity. 1 For large legacy applications, persistent data is the principle coupling mechanism connecting components of an application portfolio. Yet, as an application evolves to meet new business requirements, it is our observation that the structure and coherence of the data models underlying the code “decays” faster than the structure and coherence of the basic control and process flow through the application. We conjecture that this is because of the relative ease of adding new functionality by creating modules that manipulate new data items that are related to, but stored and manipulated separately from, the original data manipulated by the application, as opposed to refactoring the basic process flow through the application. 1 New Order Order key … 3 Figure 2: An outline of the data declarations in the application represented by the logical data model in Fig. 1, with links between data items and logical entities shown using dashed arrows. Applications of Logical Data Models Because persistent data is the backbone of most commercial applications, logical data models serve as good “anchors” around which to base program exploration, understanding, and transformation activities. Consider the following list of very commonly occurring code modification tasks for legacy applications: The application represented by the model in Fig. 1 is large (around 60,000 lines of Cobol) and complex. The complexity of the code obscures its essential functionality, which is to process different kinds of transactions pertaining to orders for products. This functionality is expressed succinctly and at a high level of abstraction by the data model. In other words, the “business logic” of the application is concerned primarily with maintaining and updating certain relationships among persistent and transient data items; therefore, the data model embodies much of the interesting functionality of the application, even though the model contains no representation of “code”. It is notable that the logical data model shown in Fig. 1 differs much from the data declarations in the source code of the application. Fig. 2 shows an outline of these data declarations, with the data items linked (links shown using dashed arrows) to the corresponding logical data model entities (this figure contains a relevant subset of the logical model in Fig. 1). The data declarations are spread over several source files; furthermore, they reveal little about the structure of and relationships between the logical entities manipulated by the application, which is obtainable only by an analysis of the code that uses the data. As illustrated in Fig. 2, the logical data model adds value by making information that is hidden in the code explicit, such as: • adding fields/attributes to an existing entity • making data representations (e.g., field expansion to accommodate growing numbers of customers, materials, etc.) • building adapters to, e.g., allow one application to query and update data in another system • merging databases representing similar entities (e.g., accounts) maintained by merged businesses • moving flat files to relational databases, e.g., to allow transactional online access to data previously accessed via offline batch applications. All of these scenarios fundamentally revolve around major changes to data and data relationships and small changes to “process flow” or “business logic”, rather than the reverse. The logical model, together with the links from logical entities to physical realizations in the source code, makes it easy to browse the application in terms of the logical entities, to understand the impact in the source of any change to a logical entity, and hence to plan transformations. Logical data models have other applications, too. The “business rules” of an application (rules that constitute the business logic) can usually be organized around logical model entities; therefore, logical models can be used as a basis for code documentation or porting. Logical models can also be used to identify redundancies and problematic relationships while integrating the applications of businesses that merge. For all of the reasons enumerated above, we believe that logical data models should form the foundation for legacy analysis, understanding, and transformation tools. Logical entities The logical entities manipulated by the program include Transactions, “Delete” transactions, “Correct” transactions, Orders, etc. Physical data items (variables) correspond to these entities; e.g., ORDER-BUF and ORDER-REC store Orders (as indicated by the links). Logical subtypes Transactions are of several kinds (have several subtypes). Associations Entities are associated with (or pertain to) other entities, as indicated by the solid arrows. Associations have multiplicities; e.g., the labels on the association from Transaction to Order indicate that each transaction pertains to zero or one (existing) Orders, and that each Order has zero or more transactions pertaining to it on any given day. 4 Challenges in Building Logical Data Models In general, the goal of the Mastery project in extracting logical data models is to recover information at roughly the level of UML class diagrams and OCL constraints, as if such models had been used to design the application from scratch. That is, we are looking not just for a diagrammatic representation of the physical data declarations, but a natural model corresponding to the way experienced designers Aggregation The information corresponding to a single financial product is stored in two physical records, PR1-PRODUCT-REC and PR2-PRODUCT-REC, which are tied together by their PRODUCT-KEY attribute. This 2 think about the system. To extract such a model programmatically requires analysis that abstracts away physical artifacts. In general, it can be quite challenging to construct semantically well-founded logical data models from legacy applications. For example, Cobol programmers frequently overlay differing data declarations on the same storage (using the REDEFINE construct). Sometimes overlays represent disjoint unions, i.e., situations in which the same storage is used for distinct unrelated types, typically depending on some “tag” variable. However, overlays are also used to reinterpret the same underlying type in different, nondisjoint ways; e.g., to split an account number into distinct subfields representing various attributes of the accounts. The disjoint and non-disjoint use of overlays can be distinguished in general only by examining the way the data are used in code, and not just by examining the data declarations. Examples of other challenging problems related to extracting logical data models from applications include: Identifying logical entities and the correspondences between program variables and logical entities. Identifying distinct variables that aggregate to a single logical entity because they are always used together. Identifying associations (with multiplicities) between entities based on implicit and explicit primary/foreign key relationships. Identifying uses of generic polymorphism, i.e., data types designed to be parameterized by other data types. These problems are challenging because they require sophisticated analysis of the application’s code. For illustrations of these problems (except generic polymorphism) see Figures 1 and 2, and the discussion in Section 2. 5 Figure 3: Screen snapshot depicting the Mastery Modeling Tool (MMT). tool contains several components. An importer processes Cobol source files and Cobol copybooks to build a physical model that can be browsed inside the tool. A model extractor builds a UMLclass-diagram-like logical model (as described in Section 5.1), and links that model to the physical data model. Currently, the model extractor identifies basic logical types, subtypes, and association relations. Extensive model view facilities allow logical models and their links to physical data items to be browsed and filtered from many perspectives. A query facility allows expressive queries on the properties of models and links. Such queries can be used, e.g., to understand the impact of various proposed updates to the application, from a data perspective. A report generation capability generates HTML-based reports depicting the logical and physical models for easy browsing outside the MMT tool. The Mastery Project and the Mastery Modeling Tool In this section, we give a brief overview of the Mastery project at IBM Research, whose aim is to create data centered modeling tools, and to develop the algorithmic foundations for model extraction. In the long run, our goal is to address process model and business rule extraction, too, in addition to data model extraction. Mastery currently consists of two distinct threads: research on novel algorithms for logical data model abstraction, and development of an interactive tool (the Mastery Modeling Tool, or MMT) for extracting, querying, and manipulating logical models from Cobol applications. 5.1 6 There are commercially available tools that assist with data modeling, e.g., ERWin [6], Rational Rose [8], Relativity [7], SEEC [11], and Crystal [9]. However, none of these tools infer a logical data model for an existing application by analyzing how data is used in the code of the application. They either support forward engineering of applications from data models (ERWin and Rose), or infer data models from data declarations alone (Relativity, SEEC, and Rose), or infer logical data models by analyzing the contents of data stores as opposed to the code that uses the data (Crystal). Previously reported academic work in using type inference for program understanding includes [13, 4, 16, 14]. Of these, the approaches of [16, 14] are close in spirit to the type inference component of our model extractor; however, our approach has certain distinctions such as the use of a heuristic to distinguish disjoint uses of redefined variables. Other non-type-inference-based previous approaches in inferring logical data models (or certain aspects of logical data models) include [2, 3, 5, 15]; details of these approaches are outside the scope of this paper. Examples of approaches for inferring business rules (as opposed to data models) are [12, 1]. Model Extraction Algorithms Research Our basic algorithmic idea is to use dataflow information to infer information about logical types. For example, given a statement assigning A to B, we can infer that at that point in the code A and B have the same type (i.e., correspond to the same logical entity). Further, if we know that K is a variable containing a key value for a logical type τ , and K is assigned to a field F of record R, then we can infer that there is a logical association between the type of R and τ . We regard reads from external or persistent data sources as defining “basic entities” from which more elaborate relations can be inferred. We have also incorporated a heuristic that uses the declarations of variables to infer subtype relationships. The simple ideas outlined above are greatly complicated by various Cobol programming idioms, particularly the elaborate use of overlays. The details of our work regarding these aspects are outside the scope of this paper. 5.2 Related Work The Mastery Modeling Tool The Mastery Modeling Tool is built on the open source Eclipse [10] tool framework. A snapshot of the tool is depicted in Fig. 3. The 3 References [1] S. Blazy and P. Facon. Partial evaluation for the understanding of fortran programs. Intl. Journal of Softw. Engg. and Knowledge Engg., 4(4):535–559, 1994. [2] G. Canfora, A. Cimitile, and G. A. D. Lucca. Recovering a conceptual data model from cobol code. In Proc. 8th Intl. Conf. on Softw. Engg. and Knowledge Engg. (SEKE ’96), pages 277–284. Knowledge Systems Institute, 1996. [3] B. Demsky and M. Rinard. Role-based exploration of objectoriented programs. In Proc. 24th Intl. Conf. on Softw. Engg., pages 313–324. ACM Press, 2002. [4] P. H. Eidorff, F. Henglein, C. Mossin, H. Niss, M. H. Sorensen, and M. Tofte. Annodomini: from type theory to year 2000 conversion tool. In Proc. 26th ACM SIGPLANSIGACT Symp. on Principles of Programming Languages, pages 1–14. ACM Press, 1999. [5] M. D. Ernst, J. Cockrell, W. G. Griswold, and D. Notkin. Dynamically discovering likely program invariants to support program evolution. In Proc. 21st Intl. Conf. on Softw. Engg., pages 213–224. IEEE Computer Society Press, 1999. [6] http://ca.com. [7] http://relativity.com. [8] http://www-306.ibm.com/software/rational. [9] http://www.businessobjects.com. [10] http://www.eclipse.org. [11] http://www.seec.com. [12] F. Lanubile and G. Visaggio. Function recovery based on program slicing. In Proc. Conf. on Software Maintenance, pages 396–404. IEEE Computer Society, 1993. [13] R. O’Callahan and D. Jackson. Lackwit: a program understanding tool based on type inference. In Proc. 19th intl. conf. on Softw. Engg., pages 338–348. ACM Press, 1997. [14] G. Ramalingam, J. Field, and F. Tip. Aggregate structure identification and its application to program analysis. In Proc. 26th ACM SIGPLAN-SIGACT Symp. on Principles of Programming Languages, pages 119–132. ACM Press, 1999. [15] A. van Deursen and T. Kuipers. Identifying objects using cluster and concept analysis. In Proc. 21st Intl. Conf. on Softw. Engg., pages 246–255. IEEE Computer Society Press, 1999. [16] A. van Deursen and L. Moonen. Understanding COBOL systems using inferred types. In Proc. 7th Intl. Workshop on Program Comprehension, pages 74–81. IEEE Computer Society Press, 1999. 4