Document 14832631

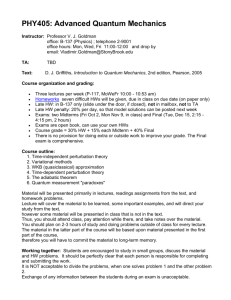

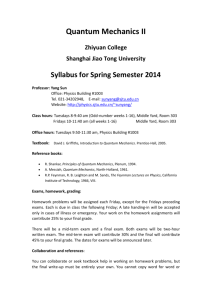

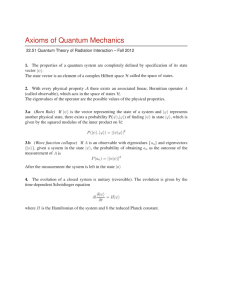

advertisement

Automation and Remote Control, Vol. 63, No. 2, 2002, pp. 209–219. Translated from Avtomatika i Telemekhanika, No. 2, 2002, pp. 44–55.

Original Russian Text Copyright © 2002 by Granichin.

STOCHASTIC SYSTEMS

Randomized Algorithms for Stochastic Approximation under Arbitrary Disturbances

O. N. Granichin

St. Petersburg State University, St. Petersburg, Russia

Received March 20, 2001

Abstract—New algorithms for stochastic approximation under input disturbance are designed.

For the multidimensional case, they are simple in form, generate consistent estimates for unknown parameters under “almost arbitrary” disturbances, and are easily “incorporated” in the

design of quantum devices for estimating the gradient vector of a function of several variables.

1. INTRODUCTION

In the design of optimization and estimation algorithms, noises and errors in measurements

and properties of models are usually attributed with some useful statistical characteristics, which

are used in demonstrating the validity of the algorithm. For example, noise is often assumed

to be centered. Algorithms based on the ordinary least-squares method are used in engineering

practice for simple averaging of observation data. If noise is assumed to be centered without any

valid justification, then such algorithms are unsatisfactory in practice and may even be harmful.

Such is the state of affairs under “opponent’s” counteraction. In particular, if noise is defined by a

deterministic unknown function (opponent suppresses signals) or measurement noise is a dependent

sequence, then averaging of observations does not yield any useful result. Analysis of typical results

in such situations shows that the estimates generated by ordinary algorithms are unsatisfactory,

observation sequences are believed to be degenerated and such problems are usually disregarded.

Another close problem in applying many recurrent estimation algorithms is that observation

sequences do not have adequate variability. For example, the main aim in designing adaptive

controls is to minimize the deviation of the state vector of a system from a given trajectory and

this often results in a degenerate observation sequence. Consequently, identification is complicated

and successful identification requires “diverse” observations.

Our new approach to estimation and optimization under poor conditions (for example, degenerate observations) is based on the use of test disturbances. The information in the observation

channel can be “enriched” for solving many problems by introducing a test disturbance with known

statistical properties into the input channels or algorithm. Sometimes the measured random process can be used as the test disturbance. In control systems, test actions can be introduced via

the control channel, whereas in other cases, a randomized observation plan (experiment) can be

used as the test action. In studying a renewed system with test disturbance, which is sometimes

simply the old system in a different form, results on convergence and applicability fields of new

algorithms can be obtained through traditional estimation methods. One remarkable characteristic of such algorithms is their convergence under “almost arbitrary” disturbances. An important

constraint on their applicability is that the test disturbance and inherent noise are assumed to be

independent. This constraint in many problems is satisfied. Such is the case if noise is taken to

be an unknown bounded deterministic function or some external random perturbation generated

by some statistical properties of the test disturbance unknown to the opponent counteracting our

investigation.

0005-1179/02/6302-0209$27.00 © 2002 MAIK “Nauka/Interperiodica”

Surprisingly, researchers did not notice for long that in the case of noisy observations, search algorithms with sequential ($n = 1, 2, \ldots$) changes in the estimate $\hat\theta_{n-1}$ along some random centered vector $\Delta_n$,

$$\hat\theta_n = \hat\theta_{n-1} - \Delta_n y_n,$$

might converge to the true vector of controlled parameters $\theta^*$ under not only “good,” but also “almost arbitrary” disturbances. This happens if observations $y_n$ are taken at some point defined by the previous estimate $\hat\theta_{n-1}$ and vector $\Delta_n$, called the simultaneous test disturbance. Such an algorithm is called the randomized estimation algorithm, because its convergence under “almost arbitrary” noises is demonstrated through the stochastic (probabilistic) properties of the test disturbance. In the near future, experimenters will radically change their present cautious attitude to stochastic algorithms and their results. Modern computing devices will be supplanted by quantum computers, which operate as stochastic systems due to the Heisenberg uncertainty principle. By virtue of the possibility of quantum parallelism, randomized-type estimation algorithms will, most probably, form the underlying principle of future quantum computing devices.

A main feature of the stochastic approximation algorithms designed in this paper is that the unknown

maximized function is measured in each step not at the point defined by the previous estimate, but

at its slightly perturbed position. Precisely this “perturbation” plays the part of simultaneous test

disturbance, “enriching” the information arriving at the observation channel. The convergence of

randomized stochastic approximation algorithms under “almost arbitrary” disturbances was first

investigated in [1–4]. In [5], such algorithms are studied, but under “good” disturbances in observations, and the asymptotic optimality of recurrent algorithms in minimax sense is demonstrated.

A similar algorithm is studied in [6, 7], in which it is shown that the number of iterations required

for attaining the necessary estimation accuracy does not increase, though the number of measurements in the multidimensional case at each step is considerably smaller than in the classical

Kiefer–Wolfowitz procedure. In foreign literature, the new algorithm is called the simultaneous

perturbation stochastic approximation. Similar algorithms are investigated in designing adjustment algorithms for neural networks [8, 9]. The consistency of recurrent algorithms under “almost

arbitrary” perturbations is also investigated in [10, 11].

In this paper, we study a more general formulation of the problem of minimization of mean-risk

functional with measurements of the cost function under “almost arbitrary” perturbations. We

shall demonstrate that sequences of estimates generated by different algorithms converge to the

true value of unknown parameters with probability 1 and in the mean-square sense.

2. FORMULATION OF THE PROBLEM AND MAIN ASSUMPTIONS

Let $F(w, \theta): \mathbb{R}^p \times \mathbb{R}^r \to \mathbb{R}^1$ be a function differentiable in $\theta$ and let $x_1, x_2, \ldots$ be a sequence of measurement points chosen by the experimenter (observation plan), at which the value

$$y_n = F(w_n, x_n) + v_n$$

of the function $F(w_n, \cdot)$ is accessible to observation at every instant $n = 1, 2, \ldots$, with additive disturbances $v_n$, where $\{w_n\}$ is a noncontrollable sequence of random variables ($w_n \in \mathbb{R}^p$) having, in general, identical unknown distribution $P_w(\cdot)$ with a finite carrier.

Formulation of the problem. Using the observations $y_1, y_2, \ldots$, construct a sequence of estimates $\{\hat\theta_n\}$ for the unknown vector $\theta^*$ minimizing the function

$$f(\theta) = \int_{\mathbb{R}^p} F(w, \theta)\, P_w(dw)$$

of the type of mean-risk functional.


Usually, minimization of the function $f(\cdot)$ is studied with a simple observation model

$$y_n = f(x_n) + v_n,$$

which fits easily within the general scheme. The generalization in the formulation is motivated by the need to take account of multiplicative perturbations in observations,

$$y_n = w_n f(x_n) + v_n,$$

which is contained in the general scheme with the function $F(w, x) = w f(x)$, and the need to generalize the observation model [12], where

$$F(w, x) = \frac{1}{2}(x - \theta^* - w)^T (x - \theta^* - w).$$

Let us formulate the main assumptions, denoting the Euclidean norm and scalar multiplication in $\mathbb{R}^r$ by $\|\cdot\|$ and $\langle\cdot,\cdot\rangle$, respectively.

SA.1 The function $f(\cdot)$ is strongly convex, i.e., has a unique minimum in $\mathbb{R}^r$ at some point $\theta^* = \theta^*(f(\cdot))$ and

$$\langle x - \theta^*, \nabla f(x)\rangle \ge \mu \|x - \theta^*\|^2 \quad \forall x \in \mathbb{R}^r$$

with some constant $\mu > 0$.

SA.2 The gradient of the function $f(\cdot)$ satisfies the Lipschitz condition

$$\|\nabla f(x) - \nabla f(\theta)\| \le A \|x - \theta\| \quad \forall x, \theta \in \mathbb{R}^r$$

with some constant $A > \mu$.
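As a small numerical illustration of the two assumptions, the sketch below spot-checks SA.1 and SA.2 at random points for a diagonal quadratic $f(x) = \frac{1}{2}\sum_i h_i (x_i - \theta^*_i)^2$, for which one may take $\mu = \min_i h_i$ and $A = \max_i h_i$. The test function, the helper names, and the sampling range are assumptions made for this example only; they do not come from the paper.

```python
import random

def dot(a, b):
    """Euclidean scalar product of two equal-length sequences."""
    return sum(ai * bi for ai, bi in zip(a, b))

def grad_f(x, theta_star, h):
    """Gradient of f(x) = 0.5 * sum_i h[i] * (x[i] - theta_star[i])**2."""
    return [hi * (xi - ti) for hi, xi, ti in zip(h, x, theta_star)]

def check_sa1_sa2(theta_star, h, trials=1000, seed=0, tol=1e-9):
    """Spot-check SA.1 (mu = min h) and SA.2 (A = max h) at random points."""
    rng = random.Random(seed)
    mu, big_a = min(h), max(h)
    for _ in range(trials):
        x = [rng.uniform(-10.0, 10.0) for _ in h]
        t = [rng.uniform(-10.0, 10.0) for _ in h]
        gx, gt = grad_f(x, theta_star, h), grad_f(t, theta_star, h)
        dx = [xi - si for xi, si in zip(x, theta_star)]
        dg = [a - b for a, b in zip(gx, gt)]
        dxt = [a - b for a, b in zip(x, t)]
        # SA.1: <x - theta*, grad f(x)> >= mu * ||x - theta*||^2
        sa1 = dot(dx, gx) >= mu * dot(dx, dx) - tol
        # SA.2: ||grad f(x) - grad f(t)|| <= A * ||x - t||
        sa2 = dot(dg, dg) ** 0.5 <= big_a * dot(dxt, dxt) ** 0.5 + tol
        if not (sa1 and sa2):
            return False
    return True
```

A random spot-check of this kind cannot prove the inequalities; for this quadratic they follow directly from $\min_i h_i \le h_i \le \max_i h_i$.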

3. TEST PERTURBATION AND MAIN ALGORITHMS

Let $\Delta_n$, $n = 1, 2, \ldots$, be an observed sequence of independent random variables in $\mathbb{R}^r$, called the simultaneous test perturbation, with distribution functions $P_n(\cdot)$.

Let us take a fixed initial vector $\hat\theta_0 \in \mathbb{R}^r$ and choose two sequences $\{\alpha_n\}$ and $\{\beta_n\}$ of positive numbers tending to zero. We design three algorithms for constructing sequences of measurement points $\{x_n\}$ and estimates $\{\hat\theta_n\}$. The first algorithm uses at every step (iteration) one observation

$$\begin{aligned} x_n &= \hat\theta_{n-1} + \beta_n \Delta_n, \quad y_n = F(w_n, x_n) + v_n, \\ \hat\theta_n &= \hat\theta_{n-1} - \frac{\alpha_n}{\beta_n} K_n(\Delta_n) y_n, \end{aligned} \tag{1}$$

whereas the second and third algorithms, which are randomized variants of the Kiefer–Wolfowitz procedure, use two observations

$$\begin{aligned} x_{2n} &= \hat\theta_{n-1} + \beta_n \Delta_n, \quad x_{2n-1} = \hat\theta_{n-1} - \beta_n \Delta_n, \\ \hat\theta_n &= \hat\theta_{n-1} - \frac{\alpha_n}{2\beta_n} K_n(\Delta_n)(y_{2n} - y_{2n-1}), \end{aligned} \tag{2}$$

$$\begin{aligned} x_{2n} &= \hat\theta_{n-1} + \beta_n \Delta_n, \quad x_{2n-1} = \hat\theta_{n-1}, \\ \hat\theta_n &= \hat\theta_{n-1} - \frac{\alpha_n}{\beta_n} K_n(\Delta_n)(y_{2n} - y_{2n-1}). \end{aligned} \tag{3}$$

All three algorithms use some vector functions (kernels) $K_n(\cdot): \mathbb{R}^r \to \mathbb{R}^r$, $n = 1, 2, \ldots$, with a compact carrier, which, along with the distribution functions of the test perturbation $P_n(\cdot)$, satisfy the conditions

$$\int K_n(x) P_n(dx) = 0, \quad \int K_n(x) x^T P_n(dx) = I, \quad \sup_n \int \|K_n(x)\|^2 P_n(dx) < \infty, \quad n = 1, 2, \ldots, \tag{4}$$

where $I$ is the $r \times r$ identity matrix.

Algorithm (2) was first developed by Kushner and Clark [13] for a uniform distribution and

function Kn (∆n ) = ∆n . Algorithm (1) was first designed in [1] for constructing a sequence of

consistent estimates under “almost arbitrary” perturbations in observations, using the same simple

kernel function, but for a more general class of test perturbations. Polyak and Tsybakov [5]

investigated algorithms (1) and (2) with a vector function Kn (·) of sufficiently general type for

uniformly distributed test perturbation under the assumption that the observation perturbations

are independent and centered. Spall [6, 7] studied algorithm (2) for a test perturbation distribution

with finite inverse moments and vector function Kn (·) defined by the rule

$$K_n\left(\left(x^{(1)}, x^{(2)}, \ldots, x^{(r)}\right)^T\right) = \left(\frac{1}{x^{(1)}}, \frac{1}{x^{(2)}}, \ldots, \frac{1}{x^{(r)}}\right)^T.$$

Using this vector Kn (·) and constraints on the distribution of the simultaneous test perturbation,

Chen et al. [10] studied algorithm (3).
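The three recursions (1)–(3) can be sketched in a few lines of Python. This is a minimal illustration under assumptions that are not fixed by the text: the kernel is $K_n(\Delta) = \Delta$, the test perturbation has independent Bernoulli $\pm 1$ components (which satisfies conditions (4)), the step sizes are $\alpha_n = n^{-1}$ and $\beta_n = n^{-1/4}$, and the observed function is a simple quadratic with a deterministic, non-centered disturbance $v_k = 5 + \sin k$. The names `make_noisy_quadratic` and `randomized_sa` are hypothetical.

```python
import math
import random

def make_noisy_quadratic(theta_star):
    """Observation oracle y = f(x) + v_k with f(x) = 0.5 * ||x - theta_star||^2.

    The disturbance v_k = 5 + sin(k) is deterministic, bounded and not centered;
    it is only an illustrative stand-in for an "almost arbitrary" disturbance.
    """
    calls = [0]

    def observe(x):
        calls[0] += 1
        f = 0.5 * sum((xi - ti) ** 2 for xi, ti in zip(x, theta_star))
        return f + 5.0 + math.sin(calls[0])

    return observe

def randomized_sa(observe, theta0, steps, variant=3, seed=0):
    """Run recursion (1), (2) or (3) with K_n(delta) = delta (assumed kernel)."""
    rng = random.Random(seed)
    theta = list(theta0)
    for n in range(1, steps + 1):
        alpha, beta = 1.0 / n, n ** -0.25  # assumed step sizes
        delta = [rng.choice((-1.0, 1.0)) for _ in theta]
        plus = [t + beta * d for t, d in zip(theta, delta)]
        if variant == 1:
            # (1): one observation at the perturbed point
            gain = alpha / beta * observe(plus)
        elif variant == 2:
            # (2): symmetric pair of observations; y_{2n-1} is taken first
            minus = [t - beta * d for t, d in zip(theta, delta)]
            y_odd = observe(minus)
            y_even = observe(plus)
            gain = alpha / (2.0 * beta) * (y_even - y_odd)
        else:
            # (3): observation at the current estimate, then at the perturbed point
            y_odd = observe(theta)
            y_even = observe(plus)
            gain = alpha / beta * (y_even - y_odd)
        theta = [t - gain * d for t, d in zip(theta, delta)]
    return theta
```

For example, `randomized_sa(make_noisy_quadratic([1.0, -2.0]), [0.0, 0.0], 20000, variant=3)` should return a point near `[1.0, -2.0]` even though the disturbance has a nonzero mean, because the random signs of `delta` are independent of it. Variant 1 uses the same step sizes but is noticeably noisier in this setting, since the full observation value multiplies the perturbation.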

4. CONVERGENCE WITH PROBABILITY 1 AND IN THE MEAN-SQUARE SENSE

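Instead of algorithm (1), let us study a close algorithm with projection

$$\begin{aligned} x_n &= \hat\theta_{n-1} + \beta_n \Delta_n, \quad y_n = F(w_n, x_n) + v_n, \\ \hat\theta_n &= P_{\Theta_n}\left(\hat\theta_{n-1} - \frac{\alpha_n}{\beta_n} K_n(\Delta_n) y_n\right). \end{aligned} \tag{5}$$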

For this algorithm, it is more convenient to prove the consistency of the estimate sequence. In this algorithm, $P_{\Theta_n}$, $n = 1, 2, \ldots$, are operators of projection onto some convex closed bounded subsets $\Theta_n \subset \mathbb{R}^r$ containing the point $\theta^*$, beginning from some $n \ge 1$. If a set $\Theta$ containing the point $\theta^*$ is known in advance, then we can take $\Theta_n = \Theta$. Otherwise, the sets $\{\Theta_n\}$ may extend to infinity.

Let $W = \mathrm{supp}(P_w(\cdot)) \subset \mathbb{R}^p$ be the carrier of the distribution $P_w(\cdot)$ and let $\mathcal{F}_{n-1}$ be the σ-algebra of probabilistic events generated by the random variables $\hat\theta_0, \hat\theta_1, \ldots, \hat\theta_{n-1}$ formed by algorithm (5) (or (2), or (3)). In applying algorithm (2) or (3), we have

$$\bar v_n = v_{2n} - v_{2n-1}, \quad \bar w_n = \begin{pmatrix} w_{2n} \\ w_{2n-1} \end{pmatrix}, \quad d_n = 1,$$

and in constructing estimates by algorithm (5), we have

$$\bar v_n = v_n, \quad \bar w_n = w_n, \quad d_n = \mathrm{diam}(\Theta_n),$$

where $\mathrm{diam}(\cdot)$ is the Euclidean diameter of the set.
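One iteration of the projected recursion (5) might look as follows. The sketch assumes, purely for illustration, that $\Theta_n$ is a closed Euclidean ball centered at the origin (so that $d_n$ is twice its radius) and that $K_n(\Delta) = \Delta$; the function names are hypothetical.

```python
import math

def project_ball(point, radius):
    """Euclidean projection of `point` onto the closed ball of given radius centered at 0."""
    norm = math.sqrt(sum(c * c for c in point))
    if norm <= radius:
        return list(point)
    return [c * radius / norm for c in point]

def projected_step(theta, delta, y, alpha, beta, radius):
    """One iteration of the projected update with the assumed kernel K_n(delta) = delta."""
    raw = [t - alpha / beta * d * y for t, d in zip(theta, delta)]
    return project_ball(raw, radius)
```

A point already inside the ball is returned unchanged, while a large observation `y` can move the estimate at most to the boundary, which is what keeps the term $d_n$ in the conditions of the convergence theorem under control.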

Theorem 1. Let the following conditions hold:

(SA.1) for the function $f(\theta) = E\{F(w, \theta)\}$,

(SA.2) for the function $F(w, \cdot)$ $\forall w \in W$,

(4) for the functions $K_n(\cdot)$ and $P_n(\cdot)$, $n = 1, 2, \ldots$,

$\forall \theta \in \mathbb{R}^r$ the functions $F(\cdot, \theta)$ and $\nabla_\theta F(\cdot, \theta)$ are uniformly bounded on $W$,

$\forall n \ge 1$ the random variables $\bar v_1, \ldots, \bar v_n$ and vectors $\bar w_1, \ldots, \bar w_{n-1}$ do not depend on $\bar w_n$ and $\Delta_n$, and the random vector $\bar w_n$ does not depend on $\Delta_n$, and

$$E\{\bar v_n^2\} \le \sigma_n^2, \quad n = 1, 2, \ldots.$$

If $\sum_n \alpha_n = \infty$, $\alpha_n \to 0$, $\beta_n \to 0$, and $\alpha_n^2 \beta_n^{-2}(1 + d_n^2 + \sigma_n^2) \to 0$ as $n \to \infty$, then the sequence of estimates $\{\hat\theta_n\}$ generated by algorithm (5) (or (2), or (3)) converges to the point $\theta^*$ in the mean-square sense: $E\{\|\hat\theta_n - \theta^*\|^2\} \to 0$ as $n \to \infty$.

Moreover, if $\sum_n \alpha_n \beta_n^2 < \infty$ and

$$\sum_n \alpha_n^2 \beta_n^{-2}\left(1 + E\{\bar v_n^2 \mid \mathcal{F}_{n-1}\}\right) < \infty$$

with probability 1, then $\hat\theta_n \to \theta^*$ as $n \to \infty$ with probability 1.

The proof of Theorem 1 is given in the Appendix.
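As a worked illustration of the conditions on the number sequences, consider the choice $\alpha_n = n^{-1}$, $\beta_n = n^{-1/4}$ with $d_n$, $\sigma_n$ and $E\{\bar v_n^2 \mid \mathcal{F}_{n-1}\}$ bounded. These particular sequences are an assumption made for this example and are not prescribed by the theorem.

```latex
\begin{align*}
\sum_n \alpha_n &= \sum_n n^{-1} = \infty, \qquad \alpha_n \to 0, \qquad \beta_n \to 0, \\
\alpha_n^2 \beta_n^{-2} &= n^{-2}\, n^{1/2} = n^{-3/2} \to 0, \\
\sum_n \alpha_n \beta_n^2 &= \sum_n n^{-1}\, n^{-1/2} = \sum_n n^{-3/2} < \infty, \\
\sum_n \alpha_n^2 \beta_n^{-2} &= \sum_n n^{-3/2} < \infty .
\end{align*}
```

Under the stated boundedness assumptions, the second line gives the mean-square condition and the last two lines give the conditions for convergence with probability 1.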

The conditions of Theorem 1 hold for the function F (w, x) = wf (x) if the function f (x) satisfies

conditions (SA.1) and (SA.2).

The observation perturbations $v_n$ in Theorem 1 can be said to be “almost arbitrary” since they may also be nonrandom, but unknown and bounded, or the realization of some stochastic process with arbitrary dependencies. In particular, to prove the assertions of Theorem 1, there is no need to assume that $\bar v_n$ and $\mathcal{F}_{n-1}$ are independent.

The assertions of Theorem 1 do not hold if the perturbations $\bar v_n$ depend on the observation point, $\bar v_n = \bar v_n(x_n)$, in a specific manner. If such a dependence holds, for example, due to rounding errors, then the observation perturbation must be subdivided into two parts, $\bar v_n(x_n) = \tilde v_n + \xi(x_n)$, in solving such a problem. The first part may satisfy the conditions of Theorem 1. If the second part results from rounding errors, then it, as a rule, has good statistical properties and may not prevent the convergence of algorithms.

The condition that the observation perturbation be independent of the test perturbation can be relaxed. It suffices to assume that the conditional mutual correlation between $\bar v_n$ and $K_n(\Delta_n)$ tends to zero with probability 1 at a rate not less than $\alpha_n \beta_n^{-1}$ as $n \to \infty$.

Though algorithms (2) and (3) may look alike, algorithm (3) is more suitable for use in real-time systems if observations contain arbitrary perturbations. For algorithm (2), the condition that

observation perturbation v2n and the test perturbation ∆n be independent is rather restrictive,

because the vector ∆n is used at the previous instant (2n − 1) in the system. In the operation of

algorithm (3), perturbations v2n and the test perturbation vector ∆n simultaneously appear in the

system. Hence they can be regarded as independent.

For $\ell$-times continuously differentiable functions $F(w, \cdot)$, the vector function $K_n(\cdot)$ is constructed in [5] with the help of Legendre orthogonal polynomials $p_m(\cdot)$, $m = 0, 1, \ldots, \ell$, using a uniform test perturbation on the $r$-dimensional cube $[-1/2, 1/2]^r$ as the probabilistic distribution. Moreover, it is shown that the mean-square convergence rate asymptotically behaves as $O\left(n^{-\frac{\ell}{\ell+1}}\right)$. A similar result can also be obtained for the general test perturbation distribution studied in this paper.

5. QUANTUM COMPUTER AND GRADIENT VECTOR ESTIMATION

Owing to the simplicity of representation, randomized stochastic approximation algorithms can be used not only in programmable computing devices, but also permit the incorporation of the simultaneous perturbation principle into the design of classical-type electronic devices. A fundamental point in demonstrating the convergence of any method in practical application is that the observation perturbation must be independent of the generated test perturbation, and the components of the test perturbation vector must be mutually independent. When the algorithm is realized on a conventional computer that executes elementary operations in succession, the designed algorithms are not as effective as theory predicts. The very name “simultaneously perturbed” implies an imperative requirement for practical realization, viz., that the perturbation be implemented in parallel. Nevertheless, if the vector arguments of a function are of large dimension (hundreds or thousands), it is not a simple matter to organize parallel computations on conventional computers.

We consider a model of a “hypothetical” quantum computer, which naturally generates not only a simultaneous test perturbation, but also realizes a fundamental nontrivial concept, the measurement of a quantum computation result, since this concept itself underlies the design of the randomized algorithm in the form of the product of the computed value of a function and the test perturbation. In other words, we give an example of the design of a quantum device for estimating the gradient vector of an unknown function of several variables in one cycle. The epithet “hypothetical” is purposefully put within quotes, because the quantum computer has generally been regarded as a machine of the near future ever since Shor delivered his report at the Berlin Mathematical Congress in 1998 [14].

As in [14], let us describe the mathematical model of a quantum computer that computes by a specific circuit. We use, whenever possible, the generalizations of concepts describing the model of a conventional computer. A conventional computer handles bits, which take values from the set {0, 1}. It is based on a finite ensemble of circuits that can be applied to a set of bits. A quantum computer handles q-bits (quantum bits), each being a quantum system of two states (a microscopic system describing, for example, an excited ion, a polarized photon, or the spin of an atomic nucleus). The behavioral characteristics of this quantum system, such as interference, superposition, stochasticity, etc., can be described exactly only through the rules of quantum mechanics [15]. Mathematically, a q-bit takes its values from a complex projective (Hilbert) space $\mathbb{C}^2$. Quantum states are invariant to multiplication by a scalar. Let $|0\rangle$, $|1\rangle$ be the base of this space. We assume that a quantum computer, like a conventional computer, is equipped with a discrete set of basic components, called the quantum circuits. Every quantum circuit is essentially a unitary transformation, which acts on a fixed number of q-bits. One of the fundamental principles of quantum mechanics asserts that the joint quantum state space of a system consisting of $\ell$ two-state systems is the tensor product of their component state spaces. Thus, the quantum state space of a system of $\ell$ q-bits is the projective Hilbert space $\mathbb{C}^{2^\ell}$. The set of base vectors of this state space can be parametrized by bit rows of length $\ell$: $|b_1 b_2 \ldots b_\ell\rangle$. Let us assume that classical data, a row $i$ of length $k$, $k \le \ell$, is fed to the input of a quantum computer. In quantum computation, $\ell$ q-bits initially exist in the state $|i00\ldots0\rangle$. An executable circuit is constructed from a finite number of quantum circuits acting on these q-bits. At the end of computation, the quantum computer passes to some state, a unit vector in the space $\mathbb{C}^{2^\ell}$. This state can be represented as

$$W = \sum_s \psi(s)\, |s\rangle,$$

where summation with respect to $s$ is taken over all binary rows of length $\ell$, $\psi(s) \in \mathbb{C}$, $\sum_s |\psi(s)|^2 = 1$. Here $\psi(s)$ is called the probabilistic amplitude and $W$, the superposition of the base vectors $|s\rangle$. The Heisenberg uncertainty principle asserts that the state of a quantum system cannot be predicted exactly. Nevertheless, there are several possibilities of measuring all q-bits (or a subset of q-bits). The state space of our quantum system is Hilbertian and the concept of state measurement is equivalent to the scalar product in this Hilbert space with some given vector $V$:

$$\langle V, W\rangle.$$

The projection of each of the q-bits on the base $\{|0\rangle, |1\rangle\}$ is usually used in measurement. The result of this measurement is the computation result.
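The state representation and the scalar-product view of measurement can be mimicked classically for a handful of q-bits. The sketch below stores a superposition as a dictionary from bit rows to complex amplitudes; this data structure and the function names are assumptions chosen for illustration, not a model of an actual quantum device.

```python
import math

def normalize(amplitudes):
    """Rescale amplitudes so that sum_s |psi(s)|^2 = 1."""
    total = math.sqrt(sum(abs(a) ** 2 for a in amplitudes.values()))
    return {s: a / total for s, a in amplitudes.items()}

def measurement_probabilities(state):
    """Probability |psi(s)|^2 of observing each bit row s when all q-bits are measured."""
    return {s: abs(a) ** 2 for s, a in state.items()}

def inner(v, w):
    """Scalar product <V, W> = sum_s conj(V(s)) * W(s) over the bit rows present in V."""
    return sum(v[s].conjugate() * w.get(s, 0j) for s in v)
```

For two q-bits with the first one rotated into an equal superposition, `normalize({"00": 1 + 0j, "10": 1 + 0j})` assigns probability 0.5 to each of the rows `00` and `10`, and the scalar product with the base vector `{"10": 1 + 0j}` has modulus $1/\sqrt{2}$.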

Let us examine the segment of the randomized stochastic approximation algorithm (3) (or (1), or (2)) that is used in computing the approximate value $\hat g(x)$ of the gradient vector of the function $f(\cdot): \mathbb{R}^r \to \mathbb{R}$ at some point $x \in \mathbb{R}^r$. Let the numbers in our quantum computer be expressed through $p$ binary digits and $\ell = p \times r$ (in modern computers, $p = 16, 32, 64$). In the algorithm, let $K_n(x) = x$, $\beta = 2^{-j}$, $0 \le j < p$, and let the simultaneous test perturbation fed to the input of the algorithm be defined by a Bernoulli distribution. For any number $u \in \mathbb{R}$, let $s_u$ denote its binary representation in the form of a bit array $s_u = b_u^{(1)} \ldots b_u^{(p)}$. Assume that there exists a quantum circuit that computes the value of the function $f(x)$. More exactly, there exists a unitary transformation $U_f: \mathbb{C}^{2^\ell} \to \mathbb{C}^{2^\ell}$ that for any $x = \left(x^{(1)}, \ldots, x^{(r)}\right)^T \in \mathbb{R}^r$ associates the base element

$$|s_{x^{(1)}} \ldots s_{x^{(r)}}\rangle = \left|b_{x^{(1)}}^{(1)} \ldots b_{x^{(1)}}^{(p)} \ldots b_{x^{(r)}}^{(1)} \ldots b_{x^{(r)}}^{(p)}\right\rangle$$

with another base element

$$|s_{f(x)} 00 \ldots 0\rangle = \left|b_{f(x)}^{(1)} \ldots b_{f(x)}^{(p)} 00 \ldots 0\right\rangle = U_f |s_{x^{(1)}} \ldots s_{x^{(r)}}\rangle.$$

Let the hypothetical quantum computer contain at least four registers of $\ell$ q-bits each: an input register $I$ for transforming conventional data into quantum data, two working registers $W_1$ and $W_2$ for manipulating quantum data, and an output register $\Delta$ for storing the quantum value, projection onto which is the computation result in conventional form (measurement). The design of a quantum circuit for estimating the gradient vector of the function $f(\cdot)$ requires a few standard quantum (unitary) transformations that are applicable to register data. We assume that the transformation result is stored in the same register to which the transformation is applied.

Addition/Subtraction $U_{\pm X}$ of a vector with (from) another vector stored in the register $X$.

Rotation of the first q-bits $U_{R_{1,p+1,\ldots,(r-1)p+1}}$ converts the state of q-bits $1, p+1, \ldots, (r-1)p+1$ into $\frac{1}{\sqrt{2}}|0\rangle + \frac{1}{\sqrt{2}}|1\rangle$.

Shift by $j$ q-bits $U_{S_j}$ displaces the state vector by $j$ q-bits, adding new bits $|0\rangle$.

The gradient vector of a function $f(\cdot)$ at a point $x$ can be estimated by the following algorithm.

1. Zero the states of all registers $I$, $W_1$, $W_2$, $\Delta$.

2. Feed a bit array $s_x$ to the input $I$ and transform the register $\Delta$: $\Delta := U_{R_{1,p+1,\ldots,(r-1)p+1}} \Delta$.

3. Compute the values of the function, $f(x)$ and $f(x + 2^{-j}\Delta)$: $W_1 := U_f U_{+I} W_1$, $W_2 := U_f U_{+I} U_{S_j} U_{+\Delta} W_2$.

4. Compute the difference $W_2 := U_{-W_1} W_2$.

5. Sequentially ($i = 1, 2, \ldots, r$) measure the components of the result: $\hat g^{(i)}(x) = \langle \Delta, W_2\rangle$, $W_2 := U_{S_p} W_2$.

The computation result is determined by the formula for estimating the gradient vector of $f(\cdot)$:

$$\nabla f(x) \approx \Delta\, \frac{f(x + \beta\Delta) - f(x)}{\beta}.$$
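The estimate $\Delta\,(f(x + \beta\Delta) - f(x))/\beta$ produced by the steps above can be imitated on a conventional computer. The sketch below is a purely classical simulation under assumed choices (Bernoulli $\pm 1$ components of $\Delta$, a small dimension so that all sign vectors can be enumerated, hypothetical function names); it does not model the quantum registers or the unitary transformations.

```python
from itertools import product

def gradient_estimate(f, x, delta, beta):
    """Single estimate: delta * (f(x + beta * delta) - f(x)) / beta."""
    shifted = [xi + beta * di for xi, di in zip(x, delta)]
    scale = (f(shifted) - f(x)) / beta
    return [di * scale for di in delta]

def averaged_estimate(f, x, beta):
    """Average the estimate over all 2^r sign vectors (feasible only for small r)."""
    r = len(x)
    total = [0.0] * r
    count = 0
    for delta in product((-1.0, 1.0), repeat=r):
        g = gradient_estimate(f, x, delta, beta)
        total = [t + gi for t, gi in zip(total, g)]
        count += 1
    return [t / count for t in total]
```

For the test function $f(x) = (x^{(1)})^2 + 3x^{(2)}$ at $x = (1, 2)^T$ with $\beta = 2^{-4}$, a single estimate is far from the gradient $(2, 3)^T$, but the average over the four sign vectors reproduces it. For this quadratic example the average is exact; for a general smooth function one should expect a bias that shrinks with $\beta$.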

6. CONCLUSIONS

Unlike deterministic methods, stochastic optimization considerably widens the range of practical

problems for which an exact optimal solution can be found. Stochastic optimization algorithms are

effective for information network analysis, optimization via modeling, image processing, pattern

recognition, neural network learning, and adaptive control. The role of stochastic optimization is

expected to grow with the complication of modern systems in the same way as population growth

and depletion of natural resources stimulate the use of more intensive technologies in those areas

where they were not required earlier. The trend of modern development of computation techniques

heralds that traditional deterministic algorithms will be supplanted by stochastic algorithms, because there already exist pilot quantum computers based on stochastic principles.

APPENDIX

Proof of Theorem 1. Let us consider algorithm (5). For algorithms (2) and (3), Theorem 1 is proved along similar lines. For sufficiently large $n$ under which $\theta^* = \theta^*(f(\cdot)) \in \Theta_n$, using projection properties we easily obtain

$$\|\hat\theta_n - \theta^*\|^2 \le \left\|\hat\theta_{n-1} - \theta^* - \frac{\alpha_n}{\beta_n} K_n(\Delta_n) y_n\right\|^2.$$

Now applying the operation of conditional expectation for the σ-algebra $\mathcal{F}_{n-1}$, we obtain

$$E\{\|\hat\theta_n - \theta^*\|^2 \mid \mathcal{F}_{n-1}\} \le \|\hat\theta_{n-1} - \theta^*\|^2 - 2\frac{\alpha_n}{\beta_n}\left\langle \hat\theta_{n-1} - \theta^*, E\{K_n(\Delta_n) y_n \mid \mathcal{F}_{n-1}\}\right\rangle + \frac{\alpha_n^2}{\beta_n^2} E\{y_n^2 \|K_n(\Delta_n)\|^2 \mid \mathcal{F}_{n-1}\}. \tag{6}$$

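By virtue of the mean value theorem, condition (SA.2) for the function $F(\cdot, \cdot)$ yields

$$|F(w, x) - F(w, \theta^*)| \le \frac{1}{2}\|\nabla_\theta F(w, \theta^*)\|^2 + \left(A + \frac{1}{2}\right)\|x - \theta^*\|^2, \quad x \in \mathbb{R}^r.$$

Since the function $F(\cdot, \theta)$ is uniformly bounded, using the notation

$$\nu_1 = \sup_{w \in W}\left(|F(w, \theta^*)| + \frac{1}{2}\|\nabla_\theta F(w, \theta^*)\|^2\right),$$

we find that

$$F(w, \hat\theta_{n-1} + \beta_n x)^2 \le \left(\nu_1 + (2A + 1)\left(\|\hat\theta_{n-1} - \theta^*\|^2 + \|\beta_n x\|^2\right)\right)^2$$

uniformly for $w \in W$. By condition (4), we have

$$E\{v_n^2 \|K_n(\Delta_n)\|^2 \mid \mathcal{F}_{n-1}\} \le \sup_x \|K_n(x)\|^2\, \xi_n^2,$$

where $\xi_n^2 = E\{v_n^2 \mid \mathcal{F}_{n-1}\}$.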

x

Since the vector functions $K_n(\cdot)$ are bounded and their carriers are compact, the last two inequalities for the last term in the right side of (6) yield

$$\begin{aligned} E\{y_n^2 \|K_n(\Delta_n)\|^2 \mid \mathcal{F}_{n-1}\} &\le 2E\{v_n^2 \|K_n(\Delta_n)\|^2 \mid \mathcal{F}_{n-1}\} + 2\iint F(w, \hat\theta_{n-1} + \beta_n x)^2 \|K_n(x)\|^2 P_n(dx) P_w(dw) \\ &\le C_1 + C_2\left((d_n^2 + 1)\|\hat\theta_{n-1} - \theta^*\|^2 + \beta_n^2\right) + C_3 \xi_n^2. \end{aligned}$$

Here and in what follows, $C_i$, $i = 1, 2, \ldots$, are positive constants.

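Now let us consider

$$\beta_n^{-1} E\{y_n K_n(\Delta_n) \mid \mathcal{F}_{n-1}\} = \beta_n^{-1} \iint F(w, \hat\theta_{n-1} + \beta_n x) K_n(x) P_n(dx) P_w(dw) + \beta_n^{-1} E\{v_n K_n(\Delta_n) \mid \mathcal{F}_{n-1}\}. \tag{7}$$

For the second term in the last relation, since $v_n$ and $\Delta_n$ are independent, from (4) we obtain

$$E\{v_n K_n(\Delta_n) \mid \mathcal{F}_{n-1}\} = E\{v_n \mid \mathcal{F}_{n-1}\} \int K_n(x) P_n(dx) = 0.$$

Since the function $\nabla_\theta F(\cdot, \theta)$ is uniformly bounded, we have

$$\int_{\mathbb{R}^p} \nabla_\theta F(w, x) P_w(dw) = \nabla f(x).$$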

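Using the last relation and condition (4), let us express the first term in (7) as

$$\begin{aligned} \beta_n^{-1} \iint F(w, \hat\theta_{n-1} + \beta_n x) K_n(x) P_n(dx) P_w(dw) &= \nabla f(\hat\theta_{n-1}) + \int \left(\beta_n^{-1} \int F(w, \hat\theta_{n-1} + \beta_n x) K_n(x) P_n(dx) - \nabla_\theta F(w, \hat\theta_{n-1})\right) P_w(dw) \\ &= \nabla f(\hat\theta_{n-1}) + \iint K_n(x) x^T \int_0^1 \left(\nabla_\theta F(w, \hat\theta_{n-1} + t\beta_n x) - \nabla_\theta F(w, \hat\theta_{n-1})\right) dt\, P_n(dx) P_w(dw). \end{aligned}$$

Since condition (SA.2) holds for any function $F(w, \cdot)$ and condition (4) holds for $K_n(\cdot)$, for the absolute value of the second term in the last equality we have

$$\left\|\iint K_n(x) x^T \int_0^1 \left(\nabla_\theta F(w, \hat\theta_{n-1} + t\beta_n x) - \nabla_\theta F(w, \hat\theta_{n-1})\right) dt\, P_n(dx) P_w(dw)\right\| \le \iint \|K_n(x)\|\, \|x\|\, A \|\beta_n x\|\, P_n(dx) P_w(dw) \le C_4 \beta_n.$$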

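Consequently, for the second term in the right side of inequality (6) we obtain

$$-2\frac{\alpha_n}{\beta_n}\left\langle \hat\theta_{n-1} - \theta^*, E\{K_n(\Delta_n) y_n \mid \mathcal{F}_{n-1}\}\right\rangle \le -2\alpha_n \left\langle \hat\theta_{n-1} - \theta^*, \nabla f(\hat\theta_{n-1})\right\rangle + 2C_4 \alpha_n \beta_n \|\hat\theta_{n-1} - \theta^*\|.$$

Substituting the expressions for the second and third terms in the right side into (6), we find that

$$\begin{aligned} E\{\|\hat\theta_n - \theta^*\|^2 \mid \mathcal{F}_{n-1}\} \le{} & \|\hat\theta_{n-1} - \theta^*\|^2 - 2\alpha_n \left\langle \hat\theta_{n-1} - \theta^*, \nabla f(\hat\theta_{n-1})\right\rangle + 2C_4 \alpha_n \beta_n \|\hat\theta_{n-1} - \theta^*\| \\ & + \frac{\alpha_n^2}{\beta_n^2}\left(C_1 + C_2\left((d_n^2 + 1)\|\hat\theta_{n-1} - \theta^*\|^2 + \beta_n^2\right) + C_3 \xi_n^2\right). \end{aligned}$$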

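Since condition (SA.1) holds for the function $f(\cdot)$ and the inequality

$$\|\hat\theta_{n-1} - \theta^*\| \le \frac{\varepsilon^{-1}\beta_n + \varepsilon\beta_n^{-1}\|\hat\theta_{n-1} - \theta^*\|^2}{2}$$

holds for any $\varepsilon > 0$, we obtain

$$\begin{aligned} E\{\|\hat\theta_n - \theta^*\|^2 \mid \mathcal{F}_{n-1}\} \le{} & \|\hat\theta_{n-1} - \theta^*\|^2 \left(1 - (2\mu - \varepsilon C_4)\alpha_n + C_2 \alpha_n^2 \beta_n^{-2}(d_n^2 + 1)\right) \\ & + \varepsilon^{-1} C_4 \alpha_n \beta_n^2 + \frac{\alpha_n^2}{\beta_n^2}\left(C_1 + C_2 \beta_n^2 + C_3 \xi_n^2\right). \end{aligned}$$

Choosing a small $\varepsilon$ such that $\varepsilon C_4 < \mu$, a sufficiently large $n$, and applying the conditions of Theorem 1 for number sequences, let us transform the last inequality to the form

$$E\{\|\hat\theta_n - \theta^*\|^2 \mid \mathcal{F}_{n-1}\} \le \|\hat\theta_{n-1} - \theta^*\|^2 (1 - C_5 \alpha_n) + C_6\left(\alpha_n \beta_n^2 + \alpha_n^2 \beta_n^{-2}(1 + \xi_n^2)\right).$$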

Hence, by the conditions of Theorem 1, all conditions of the Robbins–Siegmund Lemma [16] that are necessary for the convergence $\hat\theta_n \to \theta^*$ as $n \to \infty$ with probability 1 are satisfied. To prove the assertion of Theorem 1 on mean-square convergence, let us examine the unconditional mathematical expectation of both sides of the last inequality:

$$E\{\|\hat\theta_n - \theta^*\|^2\} \le E\{\|\hat\theta_{n-1} - \theta^*\|^2\}(1 - C_5 \alpha_n) + C_6\left(\alpha_n \beta_n^2 + \alpha_n^2 \beta_n^{-2}(1 + \sigma_n^2)\right).$$

The mean-square convergence of the sequence $\{\hat\theta_n\}$ to the point $\theta^*$ is implied by Lemma 5 of [17].

This completes the proof of Theorem 1.

REFERENCES

1. Granichin, O.N., Stochastic Approximation with Input Perturbation for Dependent Observation Disturbances, Vestn. Leningrad. Gos Univ., 1989, Ser. 1, no. 4, pp. 27–31.

2. Granichin, O.N., A Procedure of Stochastic Approximation with Input Perturbation, Avtom. Telemekh.,

1992, no. 2, pp. 97–104.

3. Granichin, O.N., Estimation of the Minimum Point for an Unknown Function with Dependent Background Noise, Probl. Peredachi Inf., 1992, no. 2, pp. 16–20.

4. Polyak, B.T. and Tsybakov, A.B., On Stochastic Approximation with Arbitrary Noise (The KW Case),

in Topics in Nonparametric Estimation. Adv. in Soviet Mathematics. Am. Math. Soc., Khasminskii, R.Z.,

Ed., 1992, no. 12, pp. 107–113.

5. Polyak, B.T. and Tsybakov, A.B., Optimal Accuracy Orders of Stochastic Approximation Search Algorithms, Probl. Peredachi Inf., 1990, no. 2, pp. 45–53.

6. Spall, J.C., A Stochastic Approximation Technique for Generating Maximum Likelihood Parameter

Estimates, Am. Control Conf., 1987, pp. 1161–1167.

7. Spall, J.C., Multivariate Stochastic Approximation Using a Simultaneous Perturbation Gradient Approximation, IEEE Trans. Autom. Control, 1992, vol. 37, pp. 332–341.

8. Alspector, J., Meir, R., Jayakumar, A., et al., A Parallel Gradient Descent Method for Learning in

Analog VLSI Neural Networks, in Advances in Neural Information Processing Systems, Hanson, S.J.,

Ed., San Mateo: Morgan Kaufmann, 1993, pp. 834–844.

9. Maeda, Y. and Kanata, Y., Learning Rules for Recurrent Neural Networks Using Perturbation and Their

Application to Neuro-control, Trans. IEE Japan, 1993, vol. 113-C, pp. 402–408.

10. Chen, H.F., Duncan, T.E., and Pasik-Duncan, B., A Kiefer–Wolfowitz Algorithm with Randomized

Differences, IEEE Trans. Autom. Control, 1999, vol. 44, no. 3, pp. 442–453.

11. Ljung, L. and Guo, L., The Role of Model Validation for Assessing the Size of the Unmodeled Dynamics,

IEEE Trans. Autom. Control, 1997, vol. 42, no. 9, pp. 1230–1239.


12. Granichin, O.N., Estimation of Linear Regression Parameters under Arbitrary Disturbances, Avtom. Telemekh., 2002, no. 1, pp. 30–41.

13. Kushner, H.J. and Clark, D.S., Stochastic Approximation Methods for Constrained and Unconstrained

Systems, Berlin: Springer-Verlag, 1978.

14. Shor, P.W., Quantum Computing, 9 Int. Math. Congress, Berlin, 1998.

15. Faddeev, L.D. and Yakubovskii, O.A., Lektsii po kvantovoi mekhanike dlya studentov matematikov (Lectures on Quantum Mechanics for Students of Mathematics), St. Petersburg: RKhD, 2001.

16. Robbins, H. and Siegmund, D., A Convergence Theorem for Nonnegative Almost Super-Martingales and

Some Applications, in Optimizing Methods in Statistics, Rustagi, J.S., Ed., New York: Academic, 1971,

pp. 233–257.

17. Polyak, B.T., Vvedenie v optimizatsiyu (Introduction to Optimization), Moscow: Nauka, 1983.

This paper was recommended for publication by B.T. Polyak, a member of the Editorial Board
