Document 14827058

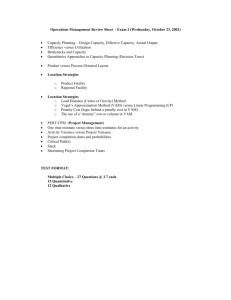
