Expected convex hull trimming of a data set


Ignacio Cascos

Department of Statistics, Universidad Carlos III de Madrid

Av. Universidad 30, E-28911 Leganés (Madrid) Spain

ignacio.cascos@uc3m.es

Summary. Given a data set in $\mathbb{R}^d$, we study regions of central points built by elementwise addition of the convex hulls of all its subsets with a fixed number of elements. An algorithm to compute such central regions for a bivariate data set is described.

Key words: convex hull, depth-trimmed regions, Minkowski sum

1 Introduction

The lack of a natural order in the multivariate Euclidean space makes it difficult

to translate many univariate data analytic tools to the multivariate setting. The

approach based on depth functions and depth-trimmed regions was first proposed

by Tukey [Tuk75] and has been extensively developed in recent years; see Liu et al. [LPS99] for statistical applications of depth functions.

Depth functions assign a point its degree of centrality with respect to a multivariate probability distribution or a data cloud and thus provide a center-outward ordering of points, see Tukey [Tuk75] or Zuo and Serfling [ZS00a]. Meanwhile, depth-trimmed regions are sets of central points. They are usually obtained from a depth function as its level sets. Among others, Koshevoy and Mosler [KM97] and Cascos and López-Díaz [CLD05] have proposed and studied particular instances of families of central regions.

We will propose a new family of central regions. Given a data set $x_1, \ldots, x_n \in \mathbb{R}^d$, its mean value $\bar{x}$ is the sum of all the points from the data set multiplied by $n^{-1}$. Imagine we want to obtain a set that contains $\bar{x}$ and is central with respect to the data cloud. We can build a natural candidate by taking all the segments that join two distinct points from the data set and averaging them. The way we average them is by taking their Minkowski (or elementwise) sum weighted by the inverse of the number of segments, that is, we multiply the sum by $\binom{n}{2}^{-1}$. Such a procedure can be generalized from the set of all pairs of points to the set of all $k$-tuples for any $1 \le k \le n$.

In Section 2, we will briefly introduce the expected convex hull trimmed regions of a data set. In Section 3, we use the classical method of the circular sequence to develop an algorithm of complexity $O(n^2 \log n)$ to compute arbitrary expected convex hull trimmed regions of a bivariate data set. Sections 4 and 5 are devoted to presenting an example and an alternative representation of the expected convex hull trimmed regions. Finally, an application of these trimmed regions to measuring multivariate scatter is discussed in Section 6 and some concluding remarks are presented in Section 7.

2 The expected convex hull trimming

Before defining the expected convex hull trimmed regions, we will introduce some

notation and tools that are needed.

Given $x_1, \ldots, x_n \in \mathbb{R}^d$, we denote their convex hull by $\mathrm{co}\{x_1, \ldots, x_n\}$. For two sets $F, G \subset \mathbb{R}^d$, their Minkowski sum or elementwise sum is given by

$$F + G := \{x + y : x \in F,\ y \in G\}.$$

For $\lambda \in \mathbb{R}$, we define $\lambda F := \{\lambda x : x \in F\}$.

Definition 1. Given $x_1, \ldots, x_n \in \mathbb{R}^d$ and $1 \le k \le n$, the expected convex hull trimmed region of level $k$ is given by

$$CD^k(x_1, \ldots, x_n) := \binom{n}{k}^{-1} \sum_{1 \le i_1 < \ldots < i_k \le n} \mathrm{co}\{x_{i_1}, \ldots, x_{i_k}\},$$

where the summation is in the Minkowski or elementwise sense and is performed over all the subsets of $k$ elements of $\{x_1, \ldots, x_n\}$.

The reason we name these sets expected convex hull trimmed regions is that, through the Minkowski sum and the multiplication by a scalar, we are averaging (finding expectations of) convex hulls. Whenever possible, we will write $CD^k$ for short instead of $CD^k(x_1, \ldots, x_n)$.
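Definition 1 can be evaluated directly for a small planar data set. The following is a minimal brute-force sketch, not the algorithm of Section 3: it assumes integer coordinates (so that exact fractions can be used), relies on the fact that the vertices of a Minkowski sum of polygons lie among the pairwise sums of their vertices, and uses illustrative function names that do not come from the paper. It is only practical for small $n$, since it visits all $\binom{n}{k}$ subsets.

```python
from fractions import Fraction
from itertools import combinations
from math import comb


def convex_hull(points):
    """Andrew's monotone chain; hull vertices in counterclockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def minkowski_sum(F, G):
    """Vertices of co(F) + co(G), taken from the pairwise sums of vertices."""
    return convex_hull([(f[0] + g[0], f[1] + g[1]) for f in F for g in G])


def expected_hull_region(data, k):
    """Vertices of CD^k: the Minkowski average of the hulls of all k-subsets."""
    total = [(0, 0)]
    for subset in combinations(data, k):
        total = minkowski_sum(total, convex_hull(subset))
    c = comb(len(data), k)
    return [(Fraction(x, c), Fraction(y, c)) for x, y in total]
```

For the three points (0, 0), (3, 0), (0, 3), level 1 gives the single point (1, 1), level 3 gives the triangle itself, and level 2 gives a hexagon, in line with $CD^2$ being a sum of segments.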

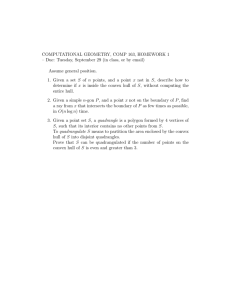

In Figure 1, we have plotted the expected convex hull trimmed region of level $k = 2$ of a data set. In three different frames, we have respectively depicted $n = 5$ points in the plane, the 10 segments joining them, and $CD^2$.

Fig. 1. Building $CD^2$.

2.1 Properties of the expected convex hull trimmed regions

We will briefly summarize the main properties of the expected convex hull trimmed

regions.

Theorem 1. For $x_1, \ldots, x_n \in \mathbb{R}^d$ we have,

(i) affine equivariance: for any matrix $A$ and any point $b$, we have $CD^k(Ax_1 + b, \ldots, Ax_n + b) = A\,CD^k(x_1, \ldots, x_n) + b$;

(ii) nested: if $k_1 \le k_2$, then $CD^{k_1} \subset CD^{k_2}$;

(iii) convex: $CD^k$ is convex;

(iv) compact: $CD^k$ is compact;

(v) if $k = 1$: $CD^1 = \{\bar{x}\}$;

(vi) if $k = n$: $CD^n = \mathrm{co}\{x_1, \ldots, x_n\}$.

Properties (i)–(iv) in Theorem 1 are similar to those studied for depth-trimmed regions in Theorem 3.1 in [ZS00b], except that the inclusion in the nesting property (ii) is here reversed with respect to the order relation in the parameter $k$. Properties (iii) and (iv) follow from the fact that $CD^k$ is the Minkowski sum of a finite number of polytopes and thus, it is a polytope.

It is possible to define a depth function in terms of these regions. For a fixed data set $\{x_1, \ldots, x_n\}$ and any $x \in \mathbb{R}^d$, we can define the function

$$CD(x) := \begin{cases} \left(\min\{k : x \in CD^k\}\right)^{-1} & \text{if } x \in \mathrm{co}\{x_1, \ldots, x_n\}, \\ 0 & \text{otherwise.} \end{cases}$$

The function $CD$ is a bounded, non-negative mapping and from Theorem 1 it follows that it satisfies the properties of affine invariance, maximality at $\bar{x}$, decreasing along rays from the mean value, and vanishing at infinity. Therefore $CD$ is a depth function, see Definition 2.1 in [ZS00a].
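In dimension one the regions are intervals, which makes the depth easy to illustrate: the convex hull of a subset is the interval between its minimum and its maximum, so $CD^k$ is the interval between the average minimum and the average maximum over all subsets of size $k$. The sketch below is a brute-force illustration under that assumption, with integer data and hypothetical function names; it is not part of the paper.

```python
from fractions import Fraction
from itertools import combinations


def region_1d(data, k):
    """Endpoints of CD^k for univariate data: mean of minima, mean of maxima."""
    subsets = list(combinations(data, k))
    lo = Fraction(sum(min(s) for s in subsets), len(subsets))
    hi = Fraction(sum(max(s) for s in subsets), len(subsets))
    return lo, hi


def depth_1d(x, data):
    """CD(x) = 1 / min{k : x in CD^k}, and 0 outside the convex hull."""
    for k in range(1, len(data) + 1):
        lo, hi = region_1d(data, k)
        if lo <= x <= hi:
            return Fraction(1, k)
    return Fraction(0)
```

With the data 0, 1, 2, 6 the mean 9/4 has depth 1, the point 1 has depth 1/2, the extreme observation 0 has depth 1/4, and any point outside [0, 6] has depth 0.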

2.2 Extreme points

Since the set $CD^k$ is a polytope, there is a finite number of points in its boundary such that $CD^k$ is their convex hull. The minimal set of such points is constituted by its extreme points. These can be expressed in terms of summations of the extreme points of each of the summands $\mathrm{co}\{x_{i_1}, \ldots, x_{i_k}\}$.

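For any set $F \subset \mathbb{R}^d$, its support function is defined as $h(F, u) := \sup\{\langle x, u\rangle : x \in F\}$ for any $u \in \mathbb{R}^d$. Since the support function is linear in $F$ with respect to Minkowski addition and multiplication by non-negative scalars, and $h(\mathrm{co}\{x_1, \ldots, x_n\}, u) = \max\{\langle x_1, u\rangle, \ldots, \langle x_n, u\rangle\}$, we obtain

$$h(CD^k, u) = \binom{n}{k}^{-1} \sum_{1 \le i_1 < \ldots < i_k \le n} \max\{\langle x_{i_1}, u\rangle, \ldots, \langle x_{i_k}, u\rangle\}.$$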
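The support function of $CD^k$ can be evaluated literally from this expression. The sketch below is a direct transcription that loops over all subsets of size $k$; it assumes integer coordinates and an integer direction so that the result is an exact fraction, and the function names are illustrative only.

```python
from fractions import Fraction
from itertools import combinations
from math import comb


def dot(x, u):
    """Euclidean inner product of two coordinate tuples."""
    return sum(a * b for a, b in zip(x, u))


def support_region(data, k, u):
    """h(CD^k, u): average over all k-subsets of the largest projection on u."""
    total = sum(max(dot(x, u) for x in subset)
                for subset in combinations(data, k))
    return Fraction(total, comb(len(data), k))
```

For the points (0, 0), (3, 0), (0, 3) and $u = (1, 0)$, the values for $k = 1, 2, 3$ are 1, 2 and 3, which increase with $k$ as the nesting property requires.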

Let $x_1, \ldots, x_n$ be pairwise distinct.

Directions in the $d$-dimensional Euclidean space can be interpreted as elements of $S^{d-1}$, the unit sphere in $\mathbb{R}^d$. If $u \in S^{d-1} \setminus L$, where $L$ is the set of directions that are orthogonal to the line through any two points $x_i$ and $x_j$ with $i \ne j$, then there is a permutation $\pi_u$ of $\{1, \ldots, n\}$ with

$$\langle x_{\pi_u(1)}, u\rangle < \langle x_{\pi_u(2)}, u\rangle < \ldots < \langle x_{\pi_u(n)}, u\rangle.$$


We will characterize the extreme point of $CD^k$ in the direction of $u$. Given $j \ge k$, the number of sets of $k$ points such that $\langle x_{\pi_u(j)}, u\rangle$ is greater than any other $\langle x_i, u\rangle$ is $\binom{j}{k} - \binom{j-1}{k}$, where $\binom{k-1}{k} = 0$, or equivalently $\binom{j-1}{k-1}$. Consequently the point

$$x(u) = \binom{n}{k}^{-1} \sum_{j=k}^{n} \binom{j-1}{k-1}\, x_{\pi_u(j)}$$

is the extreme point of $CD^k$ such that $h(CD^k, u) = \langle x(u), u\rangle$. It can alternatively be written as

$$x(u) = \frac{k}{n_{(k)}} \sum_{j=k}^{n} (j-1)_{(k-1)}\, x_{\pi_u(j)}, \qquad (1)$$

where $n_{(k)} = \prod_{j=0}^{k-1} (n - j)$ if $n > k$ and $k_{(k)} = k!$.
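The extreme point in a given direction only requires sorting the data by their projections on $u$ and applying the binomial weights. The following sketch assumes integer coordinates and a direction $u$ outside $L$ (no ties among the projections); the function name is hypothetical.

```python
from fractions import Fraction
from math import comb


def extreme_point(data, k, u):
    """x(u): weighted sum of the data sorted by increasing projection on u.

    The point of rank j (1-based) receives weight C(j-1, k-1) / C(n, k),
    which is zero for the k-1 points with the smallest projections.
    """
    n, d = len(data), len(data[0])
    ordered = sorted(data, key=lambda x: sum(a * b for a, b in zip(x, u)))
    point = [Fraction(0)] * d
    for j in range(k, n + 1):
        weight = Fraction(comb(j - 1, k - 1), comb(n, k))
        for c in range(d):
            point[c] += weight * ordered[j - 1][c]
    return tuple(point)
```

For the points (0, 0), (3, 0), (0, 3) and $u = (2, 1)$, level 2 gives (2, 1), level 1 gives the mean (1, 1) and level 3 gives the data point (3, 0) with the largest projection.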

Theorem 2. If $x_1, \ldots, x_n \in \mathbb{R}^d$ are pairwise distinct, then the set of extreme points of $CD^k$ is $\{x(u) : u \in S^{d-1} \setminus L\}$.

By considering all possible orderings of $\{x_1, \ldots, x_n\}$, we obtain

$$CD^k = \mathrm{co}\left\{ \frac{k}{n_{(k)}} \sum_{j=k}^{n} (j-1)_{(k-1)}\, x_{\pi(j)} : \pi \in P_n \right\}, \qquad (2)$$

where $P_n$ is the set of permutations of $\{1, \ldots, n\}$. Unfortunately, formula (2) is not an efficient way to compute the extreme points of $CD^k$, since many of the points involved are not extreme points.

In $\mathbb{R}^2$, the number of directions involved in Theorem 2 is at most $n(n - 1)$ and we will take advantage of this to build an algorithm in the next section.

3 Algorithm

Given a bivariate data set, it is possible to consider all the orderings of its one-dimensional projections in an efficient way. This can be done through a circular sequence, see Chapter 2 in [Ede87]. Ruts and Rousseeuw [Rut96] and Dyckerhoff [Dyc00] have used circular sequences to build algorithms to compute the extreme points of the halfspace and zonoid trimmed regions for a bivariate data set. The algorithm we present here is based on that of Dyckerhoff [Dyc00], where we have modified Step 5 to obtain our family of central regions.

Step 1. Store the x-coordinates of the data points in an array X and the y-coordinates in an array Y, so that each point of the data cloud can be written as (X[i], Y[i]) for some i. These arrays must be ordered in such a way that X[i] ≤ X[i + 1] and, if X[i] = X[i + 1], then Y[i] > Y[i + 1]. Initialize the arrays ORD and RANK with the values from 1 to n. Initialize the arrays EXT1 and EXT2 to store the extreme points.

Step 2. In an array ANGLE of length $\binom{n}{2}$, store the angle between the first coordinate axis and the normal vector of the line through the points (X[i], Y[i]) and (X[j], Y[j]), always taking angles in [0, π). Finally, sort this array in increasing order.


Step 3. Find all successive entries of the array ANGLE with the same value; these will correspond to sets of collinear points.

Step 4. Reverse the order of each set of collinear points in the arrays ORD and RANK. If (X[i], Y[i]) and (X[j], Y[j]) are collinear, then interchange the values of ORD[RANK[i]] and ORD[RANK[j]] and afterwards those of RANK[i] and RANK[j]. If (X[p], Y[p]), (X[q], Y[q]) and (X[r], Y[r]) with p < q < r are also collinear, then ORD[RANK[p]], ORD[RANK[q]] and ORD[RANK[r]] are interchanged with ORD[RANK[r]], ORD[RANK[q]] and ORD[RANK[p]] respectively, and RANK[p], RANK[q] and RANK[r] with RANK[r], RANK[q] and RANK[p].

Step 5. If any RANK[i] ≥ k has been modified in Step 4, append

$$\sum_{j=k}^{n} (j-1)_{(k-1)}\, (X[ORD[j]],\ Y[ORD[j]]) \qquad (3)$$

at the end of the array EXT1.

If any RANK[i] ≤ n − k has been modified in Step 4, append

$$\sum_{j=k}^{n} (j-1)_{(k-1)}\, (X[ORD[n+1-j]],\ Y[ORD[n+1-j]]) \qquad (4)$$

at the end of the array EXT2.

Notice that each new entry of EXT1 or EXT2 can be computed in terms of the previous one and the entries modified in the array ORD in Step 4, and thus it is not necessary to perform the summation of n − k + 1 terms.

Step 6. If there is any angle left in the array ANGLE, continue with Step 3.

Step 7. Multiply the entries of EXT1 and EXT2 by $k/n_{(k)}$ and concatenate both arrays to obtain the sequence of extreme points in counterclockwise order.

The sorting of the array ANGLE in Step 2 has complexity $O(n^2 \log n)$ and it determines the overall complexity of the algorithm.
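As a reference against which an implementation of Steps 1 to 7 could be checked, the extreme points can also be obtained naively: pick one direction between each pair of consecutive critical directions, sort the data along it, and evaluate the weighted sum. The sketch below does this; it is not the circular-sequence algorithm, because it re-sorts the data for every direction and therefore costs $O(n^3 \log n)$ rather than $O(n^2 \log n)$. It assumes pairwise distinct points with integer coordinates that are not all collinear, and the function name is illustrative.

```python
from fractions import Fraction
from math import atan2, comb, gcd


def extreme_points_naive(data, k):
    """Extreme points of CD^k for planar data, in counterclockwise order."""
    n = len(data)
    # Critical directions: both normals of every line through two data points,
    # reduced to primitive integer vectors so that parallel lines coincide.
    normals = set()
    for i in range(n):
        for j in range(i + 1, n):
            dx, dy = data[j][0] - data[i][0], data[j][1] - data[i][1]
            g = gcd(dx, dy)
            normals.add((-dy // g, dx // g))
            normals.add((dy // g, -dx // g))
    crit = sorted(normals, key=lambda v: atan2(v[1], v[0]))
    weights = [Fraction(comb(j - 1, k - 1), comb(n, k)) for j in range(1, n + 1)]
    vertices = []
    for a, b in zip(crit, crit[1:] + crit[:1]):
        # The sum of two consecutive critical directions lies strictly between
        # them, so the projections on u have no ties.
        u = (a[0] + b[0], a[1] + b[1])
        order = sorted(data, key=lambda p: p[0] * u[0] + p[1] * u[1])
        v = tuple(sum(w * p[c] for w, p in zip(weights, order)) for c in range(2))
        if not vertices or vertices[-1] != v:
            vertices.append(v)
    if len(vertices) > 1 and vertices[0] == vertices[-1]:
        vertices.pop()
    return vertices
```

Consecutive directions often yield the same extreme point (for instance when the swapped points all have rank below $k$), which is why repeated vertices are dropped; this mirrors the rank conditions in Step 5.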

4 Example

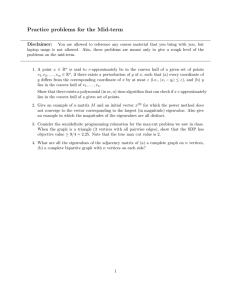

We have applied the algorithm described in Section 3 to some real data and the outcome is depicted in Figure 2. The data correspond to the results of 25 heptathletes in the 1988 Olympics in the 100 meters hurdles race (in seconds, axis X) and their shot put distances (in meters, axis Y), and have been obtained from Hand et al. [HDL94].

Dyckerhoff [Dyc00] has plotted the zonoid trimmed regions for this same data set. We have chosen the heptathlon data so that the interested reader can compare the central regions presented here with the ones plotted in [Dyc00].

5 Alternative trimmed regions

The main idea in this paper is to build central regions of a data set by averaging

convex hulls of combinations of its points through Minkowski summation. The population counterpart of these central regions is discussed by Cascos [Cas06a] and it is

defined in terms of the selection expectation for random sets, see Molchanov [Mol05].

Fig. 2. Contour plots of the expected convex hull trimmed regions of the heptathlon data set with k ranging from 1 to 25.

The set $CD^k$ is built as a U-statistic, i.e., we consider all subsets from the data set with a fixed size. An alternative family of central regions is obtained if the points from the data set are selected at random and with replacement, that is, for $k \ge 1$ we consider all $k$-tuples of integers $1 \le i_1, i_2, \ldots, i_k \le n$,

$$\frac{1}{n^k} \sum_{i_1=1}^{n} \cdots \sum_{i_k=1}^{n} \mathrm{co}\{x_{i_1}, \ldots, x_{i_k}\}. \qquad (5)$$

The extreme points would now be

$$x(u) = \frac{1}{n^k} \sum_{j=1}^{n} \left(j^k - (j-1)^k\right) x_{\pi_u(j)}. \qquad (6)$$

If we replace $(j-1)_{(k-1)}$ with $j^k - (j-1)^k$ in equations (3) and (4) of Step 5 and $k/n_{(k)}$ with $n^{-k}$ in Step 7, the algorithm from Section 3 can be used to compute the extreme points of (5). These changes are due to the differences between the extreme points in equations (1) and (6).
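The weights $j^k - (j-1)^k$ count the $k$-tuples, drawn with replacement, whose largest projection is attained at the point of rank $j$. A small sketch can check this against a brute-force average over all $n^k$ tuples; it assumes integer coordinates and a direction without ties, and the function names are hypothetical.

```python
from fractions import Fraction
from itertools import product


def dot(x, u):
    """Euclidean inner product of two coordinate tuples."""
    return sum(a * b for a, b in zip(x, u))


def extreme_point_replacement(data, k, u):
    """Extreme point in direction u with weights (j^k - (j-1)^k) / n^k."""
    n = len(data)
    ordered = sorted(data, key=lambda x: dot(x, u))
    weights = [Fraction(j**k - (j - 1)**k, n**k) for j in range(1, n + 1)]
    return tuple(sum(w * x[c] for w, x in zip(weights, ordered))
                 for c in range(len(data[0])))


def support_replacement_bruteforce(data, k, u):
    """Average of the largest projection over all n^k tuples with replacement."""
    total = sum(max(dot(x, u) for x in tup) for tup in product(data, repeat=k))
    return Fraction(total, len(data)**k)
```

For the points (0, 0), (3, 0), (0, 3), $k = 2$ and $u = (2, 1)$, the extreme point is (5/3, 1), and its projection 13/3 agrees with the brute-force support value.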

6 Multivariate scatter estimates

The expected convex hull central regions provide us with information about the scatter of the data. The bigger the regions are, the more scattered the data set is. Consequently, the volume of the expected convex hull trimmed regions can be used to define multivariate scatter estimates. Take $k = 2$; then the set $CD^2$ is a sum of segments, i.e., a zonotope. It is, in fact, a translation of the zonotope of a symmetrization of the empirical probability associated with the data set, see [Ms02]. Applications of zonotopes in data analysis have been discussed by Koshevoy and Mosler [KM97] and Mosler [Ms02]. Using the relation

$$CD^2 = \frac{1}{n(n-1)} \sum_{i \ne j} \mathrm{co}\{x_i, x_j\} \qquad (7)$$

and the formula for the volume of a zonotope, see Mosler [Ms02], we obtain the $d$-dimensional volume of the set $CD^2$,

$$\mathrm{vol}_d\, CD^2 = \frac{1}{d!\, n^d (n-1)^d} \sum_{i_1=1}^{n} \sum_{j_1=1}^{n} \cdots \sum_{i_d=1}^{n} \sum_{j_d=1}^{n} \left| \det[x_{i_1} - x_{j_1}, \ldots, x_{i_d} - x_{j_d}] \right|,$$

where $\det[x_{i_1} - x_{j_1}, \ldots, x_{i_d} - x_{j_d}]$ is the determinant of the matrix whose columns are $x_{i_1} - x_{j_1}, \ldots, x_{i_d} - x_{j_d}$.
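The volume can be evaluated by summing over all $d$-tuples of pairwise differences, taking the absolute value of each determinant as the zonotope volume formula requires. The sketch below is a brute-force illustration with $n^{2d}$ terms, so it is only meant for very small examples; it assumes integer coordinates, and the function names are not from the paper.

```python
from fractions import Fraction
from itertools import product
from math import factorial


def det(m):
    """Determinant by cofactor expansion along the first row (small d only)."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** c * m[0][c] * det([row[:c] + row[c + 1:] for row in m[1:]])
               for c in range(len(m)))


def volume_cd2(data):
    """d-dimensional volume of CD^2 from all d-tuples of differences x_i - x_j."""
    n, d = len(data), len(data[0])
    diffs = [[a - b for a, b in zip(x, y)] for x in data for y in data]
    # The differences are used as rows; transposing leaves the determinant unchanged.
    total = sum(abs(det(list(rows))) for rows in product(diffs, repeat=d))
    return Fraction(total, factorial(d) * n**d * (n - 1)**d)
```

For the triangle with vertices (0, 0), (1, 0), (0, 1) this gives 1/3, the area of the hexagon obtained by averaging its three sides; for the two univariate points 0 and 1 it gives 1, the length of the segment between them.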

A consequence of the expected convex hull trimmed regions of level 2 being zonotopes, see equation (7), is that they will always be centrally symmetric. Nevertheless, we believe that $CD^2$ is of interest in its own right as a descriptive statistic, even when we are working with skewed data. Notice that, in such a case, the trimmed regions of higher levels will not be symmetric.

7 Concluding remarks

Our effort in this paper has focused on the description of the expected convex hull regions and the development of an algorithm for efficiently finding their extreme points for a bivariate data set. However, the characterization of the extreme points given in Theorem 2 holds in any dimension. It is thus possible to build an approximate algorithm for dimensions greater than 2 by considering only a random subset of all directions, as Dyckerhoff [Dyc00] briefly discusses.

Acknowledgements

The financial support of the grant MTM2005-02254 of the Spanish Ministry of Science and Technology is acknowledged.

References

[Cas06a] Cascos, I.: Depth functions based on a number of observations of a random vector. In preparation (2006)

[CLD05] Cascos, I., López-Díaz, M.: Integral trimmed regions. J. Multivariate Anal., 96, 404–424 (2005)

[Dyc00] Dyckerhoff, R.: Computing zonoid trimmed regions of bivariate data sets. In: Bethlehem, J., van der Heijden, P. (eds) COMPSTAT 2000. Proceedings in Computational Statistics, 295–300. Physica-Verlag, Heidelberg (2000)

[Ede87] Edelsbrunner, H.: Algorithms in Combinatorial Geometry. Springer-Verlag, Berlin (1987)

[HDL94] Hand, D.J., Daly, F., Lunn, A.D., McConway, K.J., Ostrowski, E. (eds): A Handbook of Small Data Sets. Chapman and Hall, London (1994)

[KM97] Koshevoy, G., Mosler, K.: Zonoid trimming for multivariate distributions. Ann. Statist., 25, 1998–2017 (1997)

[LPS99] Liu, R.Y., Parelius, J.M., Singh, K.: Multivariate analysis by data depth: descriptive statistics, graphics and inference. Ann. Statist., 27, 783–858 (1999)

[Mol05] Molchanov, I.: Theory of Random Sets. Springer-Verlag, London (2005)

[Ms02] Mosler, K.: Multivariate dispersion, central regions and depth. The lift zonoid approach. LNS 165. Springer-Verlag, Berlin (2002)

[Rut96] Ruts, I., Rousseeuw, P.J.: Computing depth contours of bivariate point clouds. Comput. Stat. Data Anal., 23, 153–168 (1996)

[Tuk75] Tukey, J.W.: Mathematics and the picturing of data. In: Proceedings of the International Congress of Mathematicians, Vancouver, 2, 523–531 (1975)

[ZS00a] Zuo, Y., Serfling, R.: General notions of statistical depth function. Ann. Statist., 28, 461–482 (2000)

[ZS00b] Zuo, Y., Serfling, R.: Structural properties and convergence results for contours of sample statistical depth functions. Ann. Statist., 28, 483–499 (2000)