ISS CRITICAL INCIDENT REPORT

advertisement

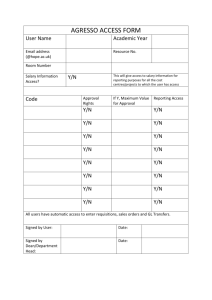

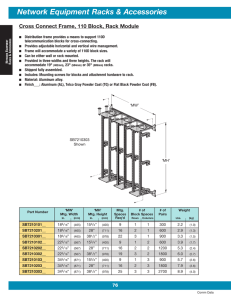

INCIDENT DETAILS

Brief description: On the morning of Monday 20th January, an alarm was heard from a PDU (power distribution unit) and was investigated. During the investigation, a top-of-rack Cisco network switch failed. On connecting to the switch directly, it was found that its network configuration had been wiped. An attempt to copy a saved version of the configuration back to the switch was unsuccessful. It was therefore decided that a new switch should be configured with a copy of the original configuration and swapped into the rack.

As a result of the outage, the following services were affected:
o Agresso DB
o Core DB
o IDP
o HR Document Management (Therefore)
o VMware
o Some internet access

Start date and time: Monday 20th January 2014, 10:15
End date and time: Monday 20th January 2014, 13:20
Date of this report: 24th January 2014
Incident Number: how to get this

INCIDENT SUMMARY

A Data Centre switch went offline. This switch supports the Agresso DB, Core DB, IDP and HR Document Management (Therefore) services, as well as two Infoblox boxes that provide DHCP and DNS. An attempt was made to connect to the switch directly, but its configuration had been wiped and we were unable to upload a copy of the configuration. It was decided that the best course of action was to replace the switch with one from stock. A replacement switch was configured with the saved copy of the configuration, installed in the Data Centre rack and repatched. Space within the rack was minimal, so removing the existing switch and installing the new one required careful attention to ensure that no other devices were affected.

Once the switch was installed and repatched, a number of services did not return automatically, and RAC2 and RAC3 were rebooted. In addition, two ESX hosts would not connect to the network. This was resolved later, when it was found that a line of configuration was missing from their uplink ports. The reason was that the replacement switch was running a higher software revision than the original switch. The replacement switch has since been checked and no other anomalies were found.

INCIDENT ANALYSIS

How and when did ISS find out about the incident?
The issue was noticed by the Data Centre team when the VMware environment could not be accessed.

Which service(s) were affected?
o Agresso DB
o Core DB
o IDP
o HR Document Management (Therefore)
o External internet access (sporadic), because 2 Infoblox boxes were down

What locations were affected?
Throughout the campus.

What was the user impact?
o Intermittent loss of connectivity to the external internet, because 2 Infoblox boxes had lost network connectivity
o Clients being provisioned with service through these boxes would have experienced a delay in reconnecting through the remaining load-balanced Infoblox boxes
o Access to Core was unavailable

How was service restored?
A replacement switch was taken from stores and configured with a saved copy of the existing switch configuration, which is held on CiscoWorks. The failed switch was removed from the rack; this was time-consuming because of the close proximity of other devices in the rack. The replacement switch was then installed in the rack and all devices were reconnected to the correct switch ports. On reconnection, all devices regained connectivity except VMware hosts ESX01 and ESX05 and RAC2 and RAC3. A reboot of the RAC hardware was required to restore connectivity to the RAC environment. The ESX hosts remained offline, and rebooting them did not restore service.

If service restoration was a workaround, how and when will a permanent solution be put in place?
No workaround was used; the faulty switch was replaced. A replacement for the spare switch has now been received.

What is needed to prevent a recurrence?
As we cannot determine the root cause of the switch failure, we must ensure that spares are kept onsite and that an up-to-date configuration is available, so that a hardware failure can be remediated as quickly as possible. A resilient configuration was already in place for this cabinet, in that two switches were installed and available for server connections and configuration.

Are we currently at risk of recurrence or other service disruption following the incident? In what way? What needs to be done to mitigate the risk?

ROOT CAUSE

On the morning of Monday 20th January, a breaker on a rack PDU tripped. This cut power to 4 outlets, which caused the redundant power supplies on numerous servers to fail. During routine troubleshooting, a top-of-rack switch failed and its configuration became corrupted. It is not understood how this occurred, or whether the faulty switch may have tripped the breaker in the first place. The latter seems unlikely, as the switch was connected to the same PDU but on a different breaker, and troubleshooting the PDU should not have had any impact on the switch.

The failed switch affected the network connections of RAC2, RAC3, ESX01, ESX05 and 2 Infoblox appliances. The main services affected were the Agresso DB and application servers, Core DB, IDP and the HR Document Management System (Therefore). The incident raised serious questions about the configuration and resilience of our VMware network and the configuration of the RAC cluster:
o VMware did not work as designed, even though both servers were connected to 2 different switches for resilience. After the vSphere 5 debacle, Logicallis had recommended some configuration work on our VMware network, but it was decided not to change any network configurations while the environment was stable.
o RAC2 and RAC3 were hosting the Core and Agresso DBs. However, the RAC cluster should be operating as a 4-node cluster, as it was designed to.

FOLLOW UP ACTIONS AND RECOMMENDATIONS

o Ensure that a spare switch and an up-to-date configuration are available for all Data Centre switches.
o Double-check the switch configuration once it has been uploaded. Incorrectly configured ports left the ESX hosts unable to connect, because the "switchport mode trunk" line was missing from the port configuration.
o Follow up on the RAC cluster configuration to determine why it did not fail over.

LESSONS LEARNED

o The Data Centre switch failed during the investigation of a PDU (power distribution unit) alarm.
o Work on PDUs during the production window should be minimized and restricted to CIRs.
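The follow-up action on double-checking a switch configuration after upload could be partly automated. Below is a minimal Python sketch. It assumes that both the saved copy of the configuration and the switch's running configuration have been exported as plain text in Cisco IOS style, where each "interface ..." line is followed by indented sub-commands. The function names, interface names and sample configurations are hypothetical and are not taken from this incident. The sketch only reports interface sub-commands that are present in the saved copy but absent after upload. That is the kind of gap, a missing `switchport mode trunk` line, that kept the ESX hosts offline.

```python
# Hypothetical sketch: compare a saved switch config with the running config
# after upload, interface by interface. Assumes plain-text Cisco IOS-style
# configs; all names and sample data here are illustrative only.

def interface_blocks(config_text):
    """Map each 'interface X' header line to the set of its indented sub-commands."""
    blocks = {}
    current = None
    for raw in config_text.splitlines():
        line = raw.rstrip()
        if line.startswith("interface "):
            current = line
            blocks[current] = set()
        elif current is not None and line.startswith(" "):
            blocks[current].add(line.strip())
        else:
            # "!" separators, blank lines or any other top-level line end the block
            current = None
    return blocks


def missing_interface_lines(saved_text, running_text):
    """Return {interface: sorted saved sub-commands absent from the running config}."""
    saved = interface_blocks(saved_text)
    running = interface_blocks(running_text)
    report = {}
    for iface, expected in saved.items():
        missing = expected - running.get(iface, set())
        if missing:
            report[iface] = sorted(missing)
    return report


if __name__ == "__main__":
    # Hypothetical uplink ports for two ESX hosts
    saved = """\
interface GigabitEthernet1/0/1
 description ESX01 uplink
 switchport mode trunk
!
interface GigabitEthernet1/0/2
 description ESX05 uplink
 switchport mode trunk
!
"""
    running = """\
interface GigabitEthernet1/0/1
 description ESX01 uplink
!
interface GigabitEthernet1/0/2
 description ESX05 uplink
!
"""
    for iface, lines in missing_interface_lines(saved, running).items():
        print(iface, "is missing:", ", ".join(lines))
```

The comparison is set-based and per interface, so it ignores line order. A switch running a different software revision may also display some defaults differently. Any flagged line should therefore be reviewed by an engineer rather than re-applied automatically.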