Document 14671613

advertisement

International Journal of Advancements in Research & Technology, Volume 3, Issue 11, November-2014

ISSN 2278-7763

173

Exploiting Large Margin Nearest Neighbors in CBIR

1.K.Selvam , AP/CSE Parisutham Institute of Technology and Science,Thanjavur.,komselvam@gmail.com.

2.R.KalaiSelvan , AP/CSE Parisutham Institute of Technology and science,Thanajvur.rk.kalai@gmail.com

3.A.Rajarajan ,AP/CSE Parisutham Institute of Technology and

Science,Thanjavur.rajarajan.ag@gmail.com

4.DR.K.L.Shunmuganathan Hod/CSE, RMKEC,Chennai,klsnathan@gmail.com.

ABSTRACT

Machine learning algorithms are one the unsurpassed methods and also the topical delve in the area in the

constituency of image processing in image retrieval. Since, A immense set of modus operandi have been

implemented in query based image retrieval the recent and restructured one is using large margin for image retrieval

from the databases. The stepladder in the processing are listed in vibrant model. In the beginning queries are timehonored as input from the client consumer side for outlook processing. Subsequently the desired results are

publicized passing through a gizmo named as RAS. The query image is harmonized with the images are there in the

databases. For categorize the algorithm which is thrash out large margin nearest neighbors. In distance metric

learning algorithms large margin nearest neighbors is one of the most recent for calculating the nearest

neighbors(mahbolobis distance).In this papers nearest neighbors ‘are premeditated by using the large margin nearest

neighbors.

IJOART

1. Introduction about large

neighbors.

margin nearest

The Large margin nearest neighbors’ is a algebraic

part of the machines learning algorithms. Its superior

forms the nearest neighbors. The foremost scheme of

the large margin is convex optimization. Machines

learning are further classified into two categories are

as supervised learning and unsupervised learning.

This large margin nearest neighbors’ are with the sole

purpose belongs to supervised learning. This wholly

refers to the classification rules. In the course of

action basically two things are involved one is test

data and another one training data.[1]

III.

IV.

V.

VI.

In chemical researches using that large

margin nearest neighbors the component

ratio can be clearly monitored using this

algorithm.

In population interpolation using this large

margin nearest algorithm classification will

be more accurate.

In forest firing using that algorithm affected

areas will be clearly visualized.

Using the imputing values in large margin is

a future in machine learning.

2.Comparative study:

2.1Dimensionality reduction:

1.2 Application of

neighbors’

I.

II.

the above thrash out nearest

Based on the large margin nearest neighbors

the classification of virus accepted can be

more accurate.

In visual image processing the retrieval of

images from satellite will be classified very

keen using large margin nearest neighbors.

Copyright © 2014 SciResPub.

Enhance the discriminate ability of low dimensional

space. In the follow a line of investigation of

machine learning dimensionality reduction is the

process of reducing the number of random variables

under consideration.[2]

2.2 SVM

IJOART

International Journal of Advancements in Research & Technology, Volume 3, Issue 11, November-2014

ISSN 2278-7763

In Support Vector machine the process start with

collecting with the data and zero mean is calculated

and then convergence matrix is calculated and with

the help this feature vector will be new dataset. It

provides a roadmap for how to reduce a complex

dataset to a lower dimension. [3]

2.3 PCA

3.0 RAS TOOL FOR LARGE

NEAREST NEIGHBOURS

174

MARGIN

In the RAS gizmo is the GUI used for finding the

large margin based nearest neighbor in a image .

Using this gizmo the addict can confer a image at

liberty as a query image from the databases and that

query images are judge against with the images with

the catalog and the domino effect is put on view.

This is linear approach which Identifies

data

patterns. Then the subsequently footstep is Consider

m data each with n dimension. After that Mean value

calculation and subtraction covariance matrix

generation. With that Calculate Eigen values and

Eigenvectors.

Discard

useless

Eigenvectors

reconstruct the data. This may be the following

idea.[4]

2.4 LDA

LDA it is come under the topic of

supervised learning classification with training data.

Step 1:

Mean calculation and subtraction

respectively.[5]

IJOART

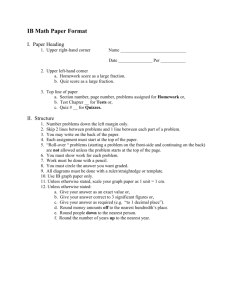

RAS TOOL.

Step 2: Prior probability calculation.

Step 3: Generate a discriminate function.

Step 4: Classify the incoming sample data with the

discriminate function covariance is calculated.

For the dimensionality reduction purposes the

algorithm used in feature detections. The size of the

input data is too large for avoiding the redundancy it

may be termed as feature extractions.

4.Implemented Methods

2.5 KNN

Classifying the a new objects based on

attributes and training samples.(i.e) Given a Query

point we find k number of objects called as training

points and finding the closest to the querypoint.

4.1Feature Extraction

The term Feature Extraction is transforming the data

into high dimensional spaces. Then only the next step

will go ahead. After that the dimensionality will be

reduced.[7][8]

Drawbags:

4.2 Feature selection

1.Searching should be in the entire training dataset.

Step 2.Reduce the error rate.

This is the one of the most important technique which

is used for applying the machine learning techniques.

Finding the subset of the original variables for

processing is refereed as Feature selection. After out

into the feature selection the implementation of

matching is going to started.[9]

Step 3.Convex optimization

4.4 Image Comparison and Image Matching

2.6 LMNN

Step 1.Loss function

Step 4.Energy based classification.[6]

Copyright © 2014 SciResPub.

IJOART

International Journal of Advancements in Research & Technology, Volume 3, Issue 11, November-2014

ISSN 2278-7763

Based on the preprocessing steps the image will be

compared with the image databases with respect to

the query which is given as a input from the user.

After that the featured image will be taken from the

image databases with respect to the query.[10][11]

4.5 Feature Matching

With the reference to feature selection feature

matching is done for getting for compare the query

image with the database images[12].

5.0 Imposters nearest neighbors

5.1 Impostors

An impostor of a data point a is another data point b

with a different class label (i.e.) which is one of the

nearest neighbors of. During learning the algorithm

tries to minimize the number of impostors for all data

instances in the training set.[13]

175

Before now the same dataset know how to be secondhand which is used in the nearest neigbours.Ball trees

are binary trees, with the root node containing all of

the points in the dataset. pick a point at random, find

the farthest point from your random selection (x1),

and find the farthest point from x1 (x2). The line

connecting x1 and x2 reasonably approximates the

direction of greatest variance in the dataset. Bring to

a close upon accomplishment a petite number of

elements in a ball[15]

8.0 Appendix code:

Code for finding the imposters’ class.

function tive = find impostors(k1,k2,k3);

[~,N]=size(k1);

active=zeros(k3.no_potential_impo,N);

for i=1:k3.classes

ni=find(k2==i);

yi=k1(:,ii);

yj=find(k2~=i);

kj=k1(:,jj);

sj=buildmtreemex(pj,50);

IJOART

6.0 Architecture diagram:

active(:,ii)=jj(usemtreemex(ni,nj,Tj,k2.no_potential_i

mpo));

end;

Code for finding the nearest neighbours.

function gets_i=fneighbors(y1,k2,k3);

[D,N]=size(y1);

tars_ind=zeros(N,k2.K);

for i=1:k2.classes

u1=i;

j=find(k3==u);

X=y1(:,jj);

T1=buildmtreemex(Xu,50);

tars=usemtreemex(Xu,Xu,T,k3.K+1);

tars_ind(jj,:)=jj(targets(2:end,:))';

end;

Predictions and ensemble.

7.0 Advanced methods:

The new methods here implemented are

7.1 Ball tree:

A ball tree is a basic entity in the data structure

formally designated to enable the searching for the

fast nearest neighbor searching in high-dimensional

sitting room, by wrapping the points in a dataset with

a system of balls disciplined approved in a tree.

Copyright © 2014 SciResPub.

Od=pd;

O1=C1;

OdV=pV;

pr = pd + k3.lr * p'; % update predictions

ir=length(ensemble{1})+1;

ee{1}(iter) = os.lr; % add learning rate to ensemble

ee{2}{iter} = tree; % add tree to ensemble

Build up ball trees

IJOART

International Journal of Advancements in Research & Technology, Volume 3, Issue 11, November-2014

ISSN 2278-7763

for c=1:length(un)

cx{c}=find(y==un(c));

forest{c}.tree=buildmtreemex(Lx(:,cx{c}),ps.treesize

);

end;

imp=[];

for c=1:length(un)-1

if(~pars.quiet)fprintf('All impostors for class %i \r',

c);end;

for c2=c+1:length(un)

try

ls=findNimex(forest{cx}.tree,Lx(:,classindex{cy}),L

x(:,classindex{cz}),

Ni(class index{c2}),Ni(class index{c}));

With this the calling function will give the output of

large margin nearest neighbors.

9.0 Conclusion

Darmstadt, Germany gregor@arbylon.net History:

version 1: May 2005, version 2.9: 15 September

2009.

6.A k-Nearest Neighbor Based Algorithm

for Multi-label Classi cation Min-Ling Zhang and

Zhi-Hua Zhou National Laboratory for Novel

Software Technology,Nanjing University, Nanjing

210093, China Email: fzhangml,

zhouzhg@lamda.nju.edu.cn

7.Online Learning for Latent Dirichlet

Allocation Matthew D. Hoffman Princeton

University Princeton, NJ

mdhoffma@cs.princeton.edu, David M. Blei

Princeton University Princeton, NJ

blei@cs.princeton.edu Francis Bach INRIA—Ecole

francis.bach@ens.fr.

8. K Nearest Neighbor Queries and KNNJoins in Large Relational Databases (Almost) for

Free Bin Yao, Feifei Li, Piyush Kumar Computer

Science Department, Florida State University,

Tallahassee, FL, U.S.A. {yao, lifeifei,

piyush}@cs.fsu.edu.

IJOART

Thus is RAS tool is intended to fabricate bestow the

nearest neighbors for finding the nearest neighbors’

in an image which is already stored in image

databases. These large margin nearest neighbours is

future enhanced.

References:

176

1.Distance Metric Learning for Large

Margin Nearest Neighbor Classi cation Kilian Q.

Weinberger, John Blitzer and Lawrence K. Saul

Department of Computer and Information Science,

University of Pennsylvania Levine Hall, 3330 Walnut

Street, Philadelphia.

2.Dimensionality Reduction Methods Luigi

D’Ambra1 , Pietro Amenta2 , and Michele Gallo3

Metodolo ski zvezki, Vol. 2, No. 1, 2005, 115-123.

3.The Entire Regularization Path for the

Support Vector Machine Trevor Hastie

hastie@stanford.edu, Robert Tibshirani

tibs@stanford.edu Editor: Nello Cristianin Journal of

Machine Learning Research 5 (2004) 1391–1415

Submitted 3/04; Published 10/04.

4.Sparse Principal Component Analysis Hui

Zou¤ , Trevor Hastiey , Robert Tibshiraniz April 26,

2004.

9. Feature Detection with Automatic Scale

Selection Tony Lindeberg Computational Vision and

Active Perception Laboratory (CVAP) Department

of Numerical Analysis and Computing Science KTH

(Royal Institute of Technology) S-100 44 Stockholm,

Sweden. Http://www.nada.kth.se/~tony Email:

tony@nada.kth.se Technical report ISRN

KTH/NA/P{96/18{SE, May 1996, Revised August

1998. Int. J. Of Computer Vision, vol 30, number 2,

1998.(In press).

10. Distance Metric Learning for Large Margin

Nearest Neighbor Classi cation Kilian Q.

Weinberger, John Blitzer and Lawrence K. Saul

Department of Computer and Information Science,

University of Pennsylvania Levine Hall, 3330 Walnut

Street, Philadelphia, PA 19104 {kilianw, blitzer,

lsaul}@cis.upenn.edu.

11. Image Compression using Approximate Matching

and Run Length Samir Kumar Bandyopadhyay,

Tuhin Utsab Paul, Avishek Raychoudhury

Department of Computer Science and Engineering,

University of Calcutta Kolkata-700009, India

(IJACSA) International Journal of Advanced

Computer Science and Applications, Vol. 2, No. 6,

2011

5.Parameter estimation for text analysis

Gregor Heinrich Technical Report Fraunhofer IGD

Copyright © 2014 SciResPub.

IJOART

International Journal of Advancements in Research & Technology, Volume 3, Issue 11, November-2014

ISSN 2278-7763

177

12. An introduction to class action procedure in the

united states Janet Cooper Alexander.

13. An Introduction to Face Recognition

Technology,Shang-Hung Lin, Ph.D. IC Media

Corporation,shanghung.lin@ic-media.com Informing

special science issue om mathematical information

technologies part -2 ,vol 3,nov 2001.

14. Five Balltree Construction Algorithms stephen m.

omohundro International Computer Science Institute

1947 Center Street, Suite 600 Berkeley, California

94704 Phone: 415-643-9153 Internet:

om@icsi.berkeley.edu November 20, 1989.

15. Journal of Machine Learning Research 12 (2011)

2121-2159 Submitted 3/10; Revised 3/11; Published

7/11 Adaptive Sub gradient Methods for Online

Learning and Stochastic optimization John Duchi

jduchi@cs.berkeley.edu Computer science division

university of California, berkeley Berkeley, CA

94720 USA Elad Hazan.

IJOART

Copyright © 2014 SciResPub.

IJOART